Maofu Liu

CADFormer: Fine-Grained Cross-modal Alignment and Decoding Transformer for Referring Remote Sensing Image Segmentation

Mar 30, 2025

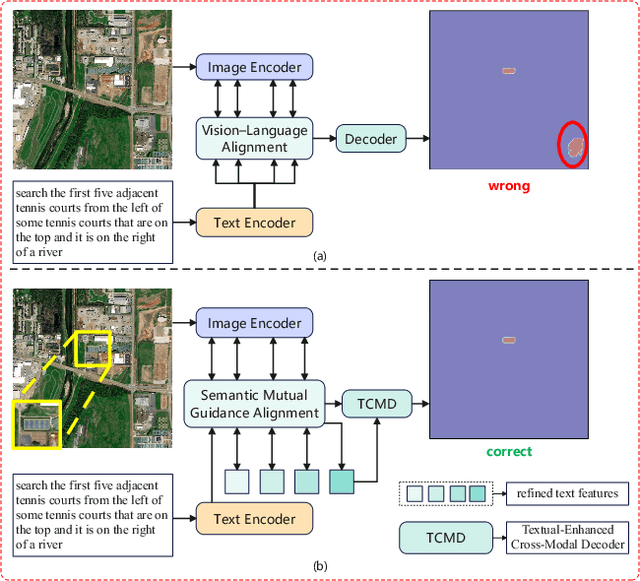

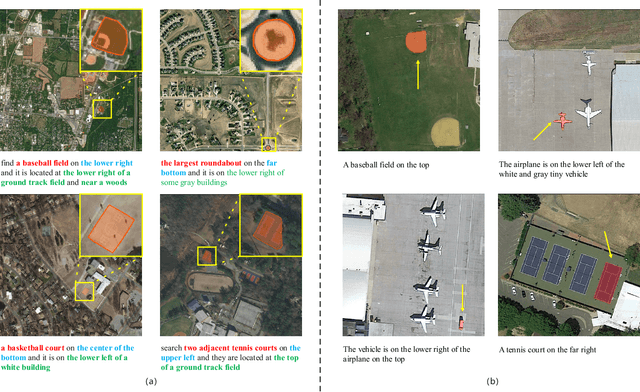

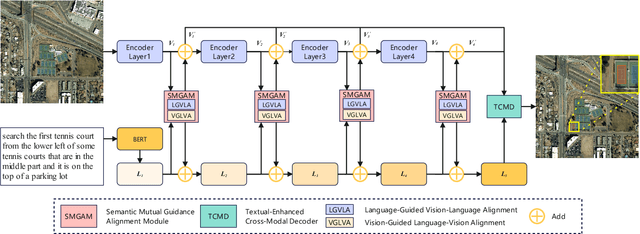

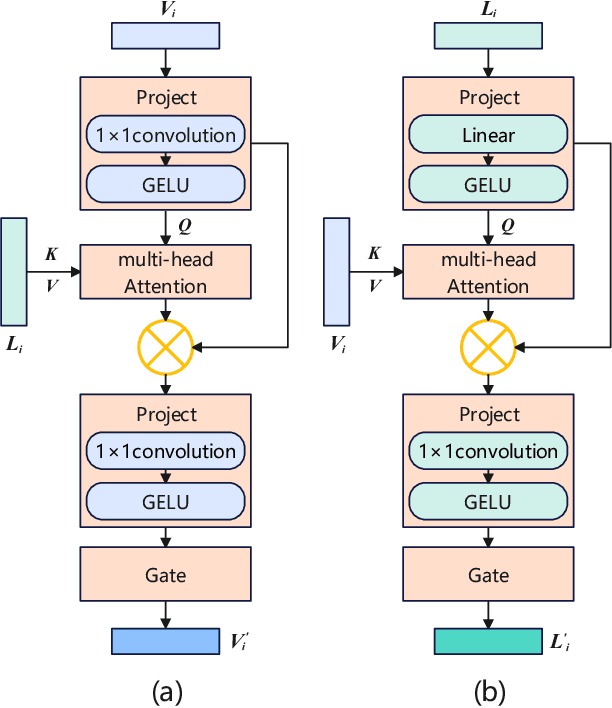

Abstract: Referring Remote Sensing Image Segmentation (RRSIS) is a challenging task that aims to segment specific target objects in remote sensing (RS) images based on a given language expression. Existing RRSIS methods typically employ coarse-grained, unidirectional alignment to obtain multimodal features, and they often overlook the critical role of language features as contextual information during decoding. Consequently, these methods exhibit weak object-level correspondence between visual and language features, leading to incomplete or erroneous predicted masks, especially when handling complex expressions and intricate RS image scenes. To address these challenges, we propose a fine-grained cross-modal alignment and decoding Transformer, CADFormer, for RRSIS. Specifically, we design a semantic mutual guidance alignment module (SMGAM) to achieve both vision-to-language and language-to-vision alignment, enabling comprehensive integration of visual and textual features for fine-grained cross-modal alignment. Furthermore, a textual-enhanced cross-modal decoder (TCMD) is introduced to incorporate language features during decoding, using refined textual information as context to enhance the relationship between cross-modal features. To thoroughly evaluate the performance of CADFormer, especially for inconspicuous targets in complex scenes, we constructed a new RRSIS dataset, called RRSIS-HR, which includes larger high-resolution RS image patches and semantically richer language expressions. Extensive experiments on the RRSIS-HR dataset and the popular RRSIS-D dataset demonstrate the effectiveness and superiority of CADFormer. Datasets and source code will be available at https://github.com/zxk688.
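The abstract does not spell out how SMGAM is built, so the following is only a minimal sketch of the general idea of bidirectional (vision-to-language and language-to-vision) alignment, written as plain scaled dot-product cross-attention with a residual connection. The function names `cross_attend` and `mutual_alignment`, the toy feature vectors, and the absence of learned projections are all assumptions made for illustration; this is not the authors' implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, keys_values):
    """Each query attends over keys_values (used as both keys and values).

    Hypothetical helper: no learned projections, just scaled dot-product
    attention followed by a residual connection.
    """
    dim = len(queries[0])
    aligned = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
            for k in keys_values
        ]
        weights = softmax(scores)
        attended = [
            sum(w * kv[d] for w, kv in zip(weights, keys_values))
            for d in range(dim)
        ]
        aligned.append([qi + ai for qi, ai in zip(q, attended)])
    return aligned


def mutual_alignment(visual, text):
    """Run attention in both directions (an assumed reading of 'mutual guidance')."""
    visual_aligned = cross_attend(visual, text)  # visual queries, text context
    text_aligned = cross_attend(text, visual)    # text queries, visual context
    return visual_aligned, text_aligned


# Toy features: 3 visual tokens and 2 word tokens, each 2-dimensional.
visual = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text = [[1.0, 0.0], [0.0, 1.0]]
v_out, t_out = mutual_alignment(visual, text)
```

Both outputs keep the shape of their own modality, so each stream can carry information from the other into later stages. A unidirectional scheme would compute only one of the two calls in `mutual_alignment`.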

Semantic-Spatial Feature Fusion with Dynamic Graph Refinement for Remote Sensing Image Captioning

Mar 30, 2025

Abstract: Remote sensing image captioning aims to generate semantically accurate descriptions that are closely linked to the visual features of remote sensing images. Existing approaches typically emphasize fine-grained extraction of visual features and capturing global information. However, they often overlook the complementary role of textual information in enhancing visual semantics and face challenges in precisely locating objects that are most relevant to the image context. To address these challenges, this paper presents a semantic-spatial feature fusion with dynamic graph refinement (SFDR) method, which integrates the semantic-spatial feature fusion (SSFF) and dynamic graph feature refinement (DGFR) modules. The SSFF module utilizes a multi-level feature representation strategy by leveraging pre-trained CLIP features, grid features, and ROI features to integrate rich semantic and spatial information. In the DGFR module, a graph attention network captures the relationships between feature nodes, while a dynamic weighting mechanism prioritizes objects that are most relevant to the current scene and suppresses less significant ones. As a result, the proposed SFDR method significantly enhances the quality of the generated descriptions. Experimental results on three benchmark datasets demonstrate the effectiveness of the proposed method. The source code will be available at https://github.com/zxk688.
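The abstract describes the DGFR module only at a high level, so the sketch below is a loose illustration of the two ideas it names: attention over graph neighbors, and a per-node weight that favors nodes relevant to the scene. Several simplifications are assumptions of this sketch rather than details from the paper: attention scores are plain dot products (a standard graph attention network uses learned projections), the scene context is taken as the mean node feature, and the gate is a sigmoid of the node-context similarity. The names `graph_attention` and `dynamic_weighting` are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def graph_attention(nodes, adjacency):
    """Refine each node as an attention-weighted mix of its neighbors.

    Simplification: dot-product scores instead of learned attention.
    Every node also attends to itself, so the neighbor list is never empty.
    """
    refined = []
    for i, node in enumerate(nodes):
        neighbors = [
            j for j, connected in enumerate(adjacency[i])
            if connected or j == i
        ]
        weights = softmax([dot(node, nodes[j]) for j in neighbors])
        refined.append([
            sum(w * nodes[j][d] for w, j in zip(weights, neighbors))
            for d in range(len(node))
        ])
    return refined


def dynamic_weighting(nodes):
    """Scale each node by a gate in (0, 1) based on similarity to the scene.

    Assumption: the scene context is the mean of all node features.
    """
    dim = len(nodes[0])
    context = [sum(n[d] for n in nodes) / len(nodes) for d in range(dim)]
    gates = [1.0 / (1.0 + math.exp(-dot(n, context))) for n in nodes]
    weighted = [[g * x for x in n] for g, n in zip(gates, nodes)]
    return weighted, gates


# Toy graph: three feature nodes; node 2 points away from the other two.
nodes = [[2.0, 0.0], [1.5, 0.5], [-1.0, 0.0]]
adjacency = [[1, 1, 0], [1, 1, 1], [0, 1, 1]]
refined = graph_attention(nodes, adjacency)
weighted, gates = dynamic_weighting(nodes)
```

In this toy setup the node that disagrees with the overall scene receives the smallest gate, which mirrors the stated goal of suppressing less significant objects.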
