Manuel Bouillon

Are You Tampering With My Data?

Aug 21, 2018

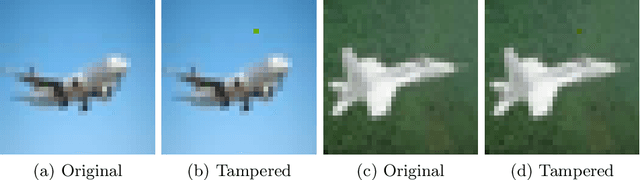

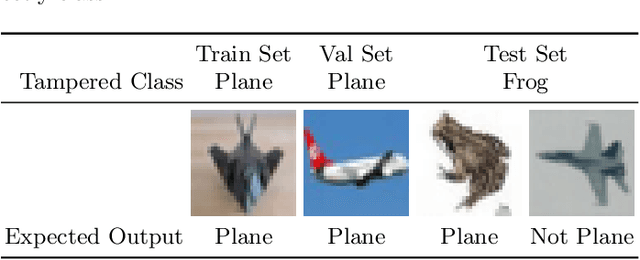

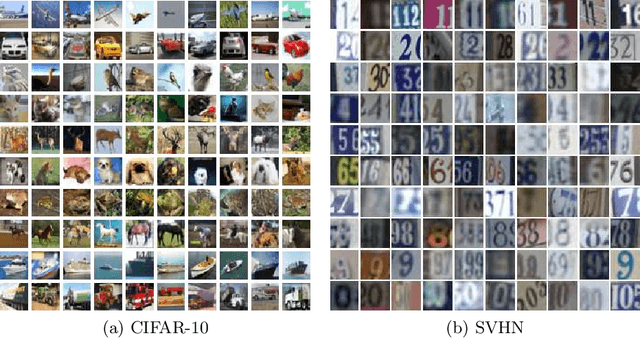

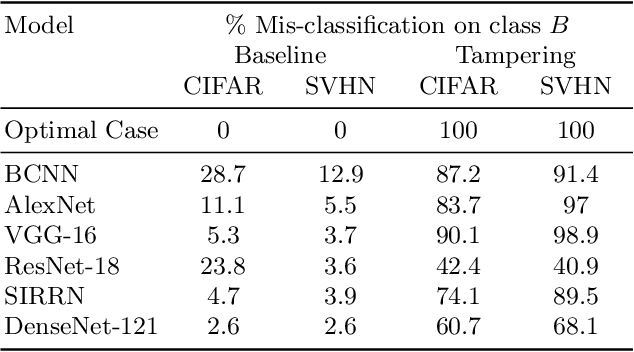

Abstract: We propose a novel approach to adversarial attacks on neural networks (NN) that tampers with the data used for training instead of generating attacks on trained models. Our network-agnostic method creates a backdoor during training that can be exploited at test time to force a neural network to exhibit abnormal behaviour. We demonstrate on two widely used datasets (CIFAR-10 and SVHN) that a universal modification of just one pixel per image, applied to all the images of one class in the training set, is enough to corrupt the training procedure of several state-of-the-art deep neural networks, causing the networks to misclassify any image to which the modification is applied. Our aim is to bring to the attention of the machine learning community the possibility that even learning-based methods that are personally trained on public datasets can be subject to attacks by a skillful adversary.

* 18 pages
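The one-pixel tampering described in the abstract above can be illustrated with a small sketch. This is not the authors' code: the function names `poison_class` and `apply_trigger`, the pixel position `(0, 0)`, the value `255`, and the random stand-in images are all assumptions made for illustration, since the abstract does not say which pixel is modified or how.

```python
import numpy as np


def poison_class(images, labels, target_class, pixel=(0, 0), value=255):
    """Return a copy of `images` in which every image of `target_class`
    has one fixed pixel overwritten (hypothetical position and value)."""
    poisoned = images.copy()
    row, col = pixel
    mask = labels == target_class           # select the images of one class
    poisoned[mask, row, col, :] = value     # same pixel, same value, all channels
    return poisoned


def apply_trigger(images, pixel=(0, 0), value=255):
    """Apply the same one-pixel modification to arbitrary test images."""
    triggered = images.copy()
    row, col = pixel
    triggered[:, row, col, :] = value
    return triggered


# Random stand-in data shaped like CIFAR-10 images (N, 32, 32, 3).
# Values are drawn from 0..254 so the trigger value 255 is always a change.
rng = np.random.default_rng(0)
images = rng.integers(0, 255, size=(8, 32, 32, 3), dtype=np.uint8)
labels = np.array([0, 1, 2, 0, 1, 2, 0, 1])

poisoned = poison_class(images, labels, target_class=0)
triggered = apply_trigger(images)
```

A network trained on `poisoned` may learn to associate the marked pixel with class 0, which is the kind of backdoor the abstract describes; whether it does depends on the model and training setup, which this sketch does not cover.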

Open Evaluation Tool for Layout Analysis of Document Images

Nov 23, 2017

Abstract: This paper presents an open tool for standardizing the evaluation of layout analysis of document images at pixel level. The tool is available both as a standalone Java application and as a RESTful web service. It is free and open-source so that it can serve as a common tool that anyone can use and contribute to. It aims to provide as many metrics as possible for investigating layout analysis predictions, and it also provides an easy way of visualizing the results. The tool evaluates document segmentation at pixel level and supports multi-labeled pixel ground truth. Finally, it has been successfully used for the ICDAR2017 competition on Layout Analysis for Challenging Medieval Manuscripts.

* The 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), HIP: 4th International Workshop on Historical Document Imaging and Processing, Kyoto, Japan, 2017
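To make the idea of pixel-level evaluation against multi-labeled ground truth concrete, here is a minimal sketch. It is not the tool's implementation (the tool itself is a Java application): the bitmask encoding of labels, the function names `per_class_iou` and `exact_match`, and the tiny example arrays are assumptions chosen for illustration. Per-class intersection over union and exact-match accuracy are used as two common examples of pixel-level metrics.

```python
import numpy as np


def per_class_iou(pred, truth, num_classes):
    """Intersection over union per class, where each pixel stores its set of
    labels as a bitmask (bit c set means the pixel belongs to class c)."""
    ious = {}
    for c in range(num_classes):
        bit = 1 << c
        p = (pred & bit) != 0
        t = (truth & bit) != 0
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps, so IoU is undefined
        ious[c] = float(np.logical_and(p, t).sum() / union)
    return ious


def exact_match(pred, truth):
    """Fraction of pixels whose full label set is predicted exactly."""
    return float((pred == truth).mean())


# Two classes: value 1 = class 0 only, 2 = class 1 only, 3 = both, 0 = neither.
truth = np.array([[1, 1, 2],
                  [3, 2, 0]], dtype=np.uint8)
pred = np.array([[1, 3, 2],
                 [1, 2, 0]], dtype=np.uint8)

ious = per_class_iou(pred, truth, num_classes=2)
accuracy = exact_match(pred, truth)
```

Storing the label set as a bitmask keeps a multi-labeled pixel in a single integer, so one array comparison checks the whole label set while a bitwise test isolates each class.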
