Mansoor Yousefi

Telecom Paris, Palaiseau, France

Low Complexity Convolutional Neural Networks for Equalization in Optical Fiber Transmission

Oct 11, 2022

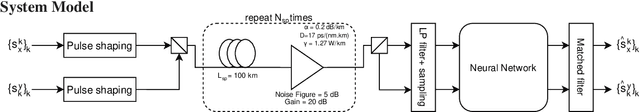

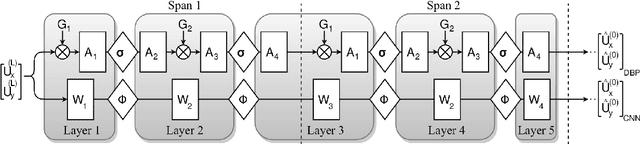

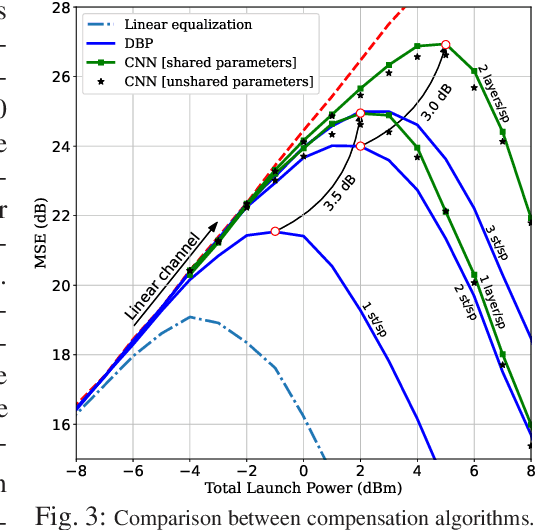

Abstract: A convolutional neural network is proposed to mitigate fiber transmission effects, achieving a five-fold reduction in trainable parameters compared to alternative equalizers and a 3.5 dB improvement in MSE over DBP at comparable complexity.
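Weight sharing is one reason convolutional equalizers can be compact: the parameter count of a convolution layer depends on its channel counts and kernel length, not on the length of the received sequence. The pure-Python sketch below illustrates this. The function names, the "same" padding, and the layer sizes are hypothetical and are not the architecture from the paper.

```python
def conv1d_same(x, weights, bias):
    """'Same'-padded 1D convolution (cross-correlation, as in common deep-learning libraries).

    x:       list of time steps, each a list of in_ch real values
    weights: nested list indexed [out_ch][tap][in_ch]
    bias:    list of out_ch values
    Returns a list of len(x) time steps, each a list of out_ch values.
    """
    taps = len(weights[0])
    in_ch = len(x[0])
    left = taps // 2
    right = taps - 1 - left
    zero = [0.0] * in_ch
    xp = [zero] * left + list(x) + [zero] * right  # zero-pad so output length == input length
    out = []
    for t in range(len(x)):
        row = []
        for w_o, b_o in zip(weights, bias):
            acc = b_o
            for k in range(taps):
                for c in range(in_ch):
                    acc += w_o[k][c] * xp[t + k][c]
            row.append(acc)
        out.append(row)
    return out


def conv1d_param_count(in_ch, out_ch, taps):
    """Trainable parameters of one conv layer: a kernel per (out, in) channel pair plus one bias per output."""
    return out_ch * (in_ch * taps + 1)


# Hypothetical sizes: 4 input channels (e.g. I/Q of two polarizations), 8 filters, 11 taps.
print(conv1d_param_count(4, 8, 11))  # 360, whatever the length of the input sequence
```

The same 360 weights slide over every time step, so lengthening the input window adds computation but no trainable parameters.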

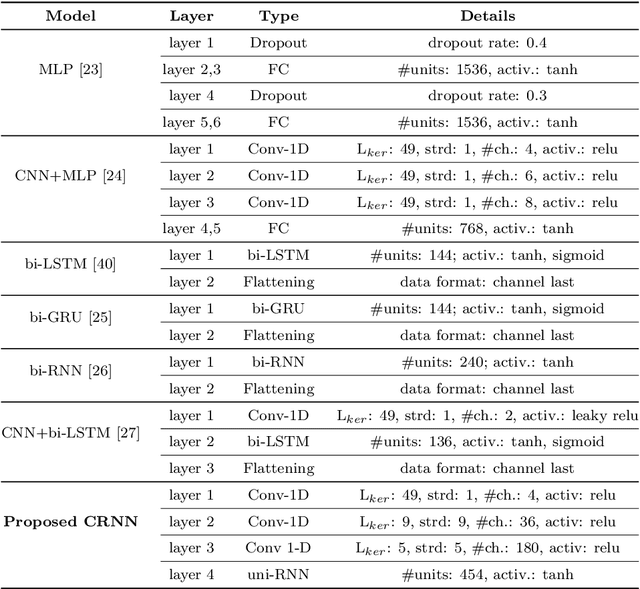

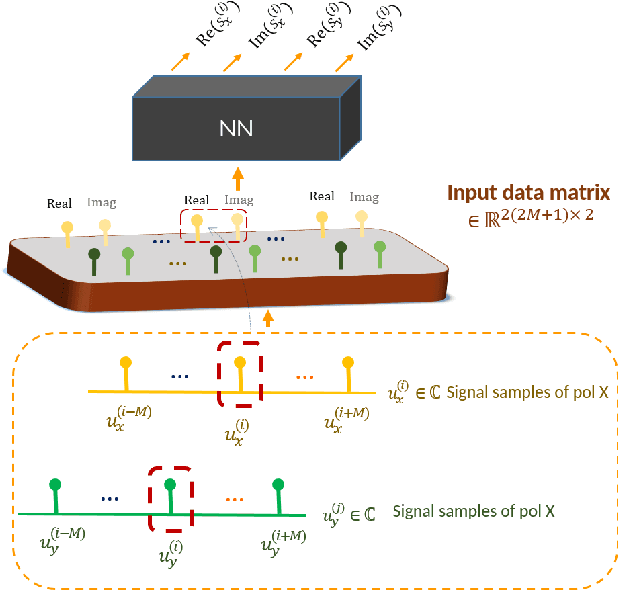

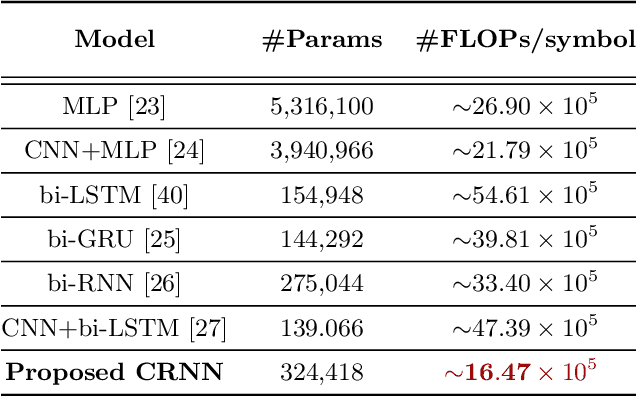

Complexity Reduction over Bi-RNN-Based Nonlinearity Mitigation in Dual-Pol Fiber-Optic Communications via a CRNN-Based Approach

Jul 25, 2022

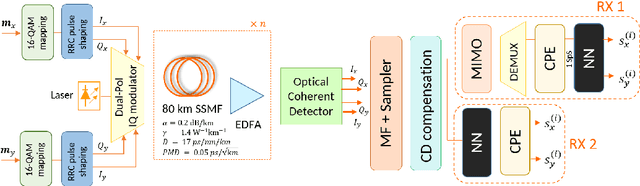

Abstract: Bidirectional recurrent neural networks (bi-RNNs), in particular bidirectional long short-term memory (bi-LSTM), bidirectional gated recurrent unit, and convolutional bi-LSTM models, have recently attracted attention for nonlinearity mitigation in fiber-optic communication. However, the recently adopted approaches based on these models incur a high computational complexity that may impede real-time operation. In this paper, by addressing the sources of complexity in these methods, we propose a more efficient network architecture in which a convolutional neural network encoder and a unidirectional many-to-one vanilla RNN operate in tandem, each best capturing one set of channel impairments while compensating for the shortcomings of the other. We deploy this model in two receiver configurations. In the first, the neural network is placed after a linear equalization chain and is responsible only for nonlinearity mitigation; in the second, the neural network is placed directly after chromatic dispersion compensation and is responsible for joint nonlinearity and polarization mode dispersion compensation. For a 16-QAM 64 GBd dual-polarization optical transmission over 14×80 km of standard single-mode fiber, we demonstrate that the proposed hybrid model achieves the bit error probability of state-of-the-art bi-RNN-based methods with more than 50% lower complexity, in both receiver configurations.
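To see where savings of this kind can come from, the sketch below counts real multiplications per processed time step for a bi-LSTM layer and for a CNN encoder followed by a unidirectional vanilla RNN. The counting convention (matrix-vector and element-wise products only) and all layer sizes are assumptions for illustration; they are not the complexity metric or the configurations used in the paper.

```python
def conv1d_mults(in_ch, out_ch, taps):
    """Real multiplications per output time step of a 1D convolution layer (biases ignored)."""
    return in_ch * out_ch * taps


def vanilla_rnn_mults(n_in, n_h):
    """Per time step: the products W_x x_t and W_h h_{t-1} of h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    return n_h * (n_in + n_h)


def lstm_mults(n_in, n_h):
    """Per time step: four gate matrix-vector products plus three element-wise products
    (f * c_prev, i * g, o * tanh(c))."""
    return 4 * n_h * (n_in + n_h) + 3 * n_h


def bidirectional(mults_one_direction):
    """A bidirectional layer runs one recurrent layer forward in time and one backward."""
    return 2 * mults_one_direction


# Hypothetical sizes: 4 input features per symbol, hidden size 32, CNN with 16 filters of 11 taps.
bi_lstm = bidirectional(lstm_mults(4, 32))
hybrid = conv1d_mults(4, 16, 11) + vanilla_rnn_mults(16, 32)
print(bi_lstm, hybrid)  # 9408 2240
```

These counts ignore activation functions, biases, and the number of time steps processed per recovered symbol (for a many-to-one model, the input window length). They only show why dropping the second direction and the LSTM gates shrinks the per-step cost.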

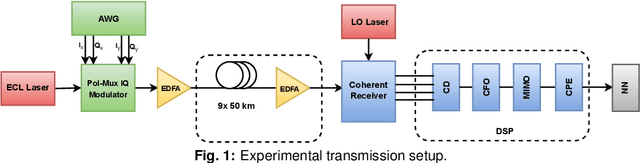

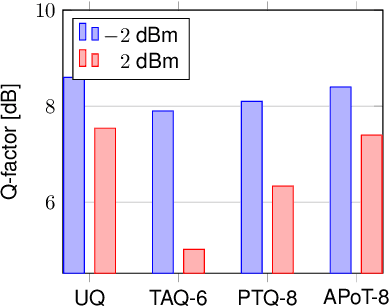

Few-bit Quantization of Neural Networks for Nonlinearity Mitigation in a Fiber Transmission Experiment

May 25, 2022

Abstract: A neural network is quantized for the mitigation of nonlinear and component distortions in a 16-QAM 9×50 km dual-polarization fiber transmission experiment. Post-training additive power-of-two quantization at 6 bits incurs a negligible Q-factor penalty. At 5 bits, the model size is reduced by 85%, with a 0.8 dB penalty.
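Additive power-of-two (APoT) quantization restricts each weight magnitude to a sum of a few powers of two, so multiplying by a weight reduces to a couple of bit shifts and an addition. The sketch below builds one such grid (two terms of 2 bits each, giving 16 magnitude levels, plus a sign bit for 5 bits per weight) and rounds weights to it after training. The grid layout, the choice of clipping threshold alpha = max |w|, and the function names are assumptions, not the exact scheme used in the experiment.

```python
import itertools
import math


def apot_levels(n_terms=2, k=2):
    """Non-negative levels of an additive power-of-two (APoT) grid, normalized to [0, 1].

    Each level is a sum of n_terms terms; term i is drawn from
    {0} U {2**-(i + j * n_terms) : j = 0, ..., 2**k - 2}, so every term costs k bits.
    """
    term_sets = [
        [0.0] + [2.0 ** -(i + j * n_terms) for j in range(2 ** k - 1)]
        for i in range(n_terms)
    ]
    levels = sorted({sum(combo) for combo in itertools.product(*term_sets)})
    return [lv / levels[-1] for lv in levels]


def quantize_apot(weights, levels):
    """Round each weight to sign * alpha * (nearest level), with alpha = max |w| (an assumption)."""
    alpha = max(abs(w) for w in weights) or 1.0
    return [math.copysign(alpha * min(levels, key=lambda lv: abs(lv - abs(w) / alpha)), w)
            for w in weights]


levels = apot_levels()  # 16 magnitude levels -> 4 bits, plus 1 sign bit = 5 bits per weight
print(quantize_apot([0.9, -0.31, 0.02, -0.6], levels))
```

As a rough check on the reported size reduction, if the unquantized model stores 32-bit floats (an assumption; the abstract does not state the baseline), 5-bit weights need 5/32 ≈ 15.6% of the original storage, a reduction of about 84%.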

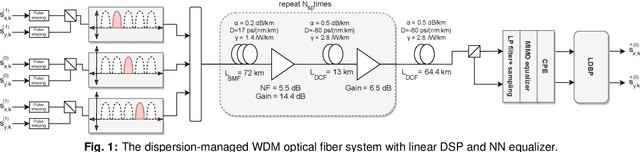

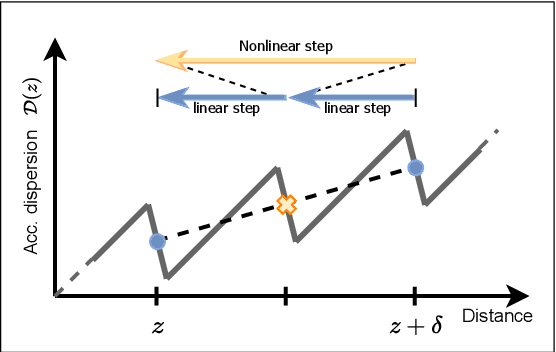

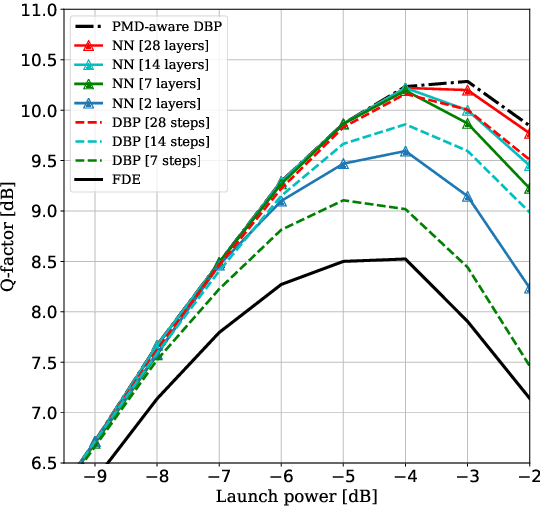

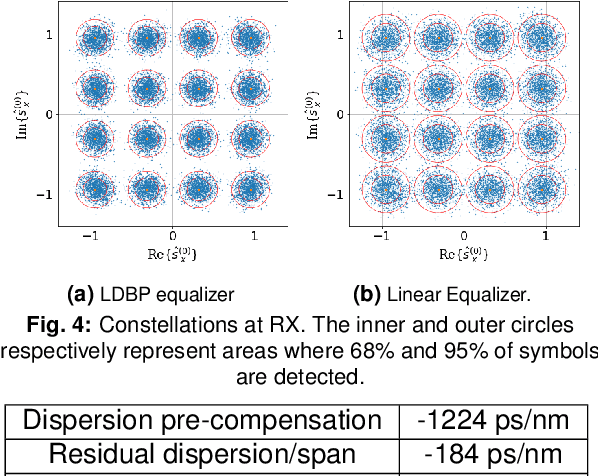

Learned Digital Back-Propagation for Dual-Polarization Dispersion Managed Systems

May 23, 2022

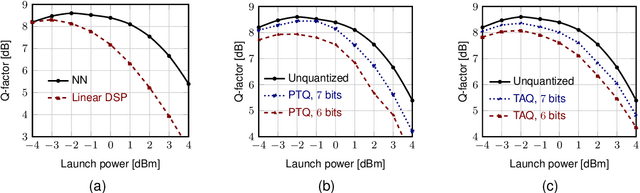

Abstract: Digital back-propagation (DBP) and learned DBP (LDBP) are proposed for nonlinearity mitigation in WDM dual-polarization dispersion-managed (DM) systems. LDBP achieves Q-factor improvements of 1.8 dB and 1.2 dB over linear equalization and over a variant of DBP adapted to DM systems, respectively.
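Conventional DBP inverts the channel numerically by running the split-step Fourier method backward: the fiber is divided into steps, and each forward step (dispersion, then Kerr nonlinearity) is undone in reverse order with a negated step size, which is equivalent to flipping the signs of β2 and γ. The sketch below shows this for a single-polarization, lossless scalar model with a naive pure-Python DFT. The sign convention, parameter values, and function names are assumptions, and the sketch does not model the dual-polarization, dispersion-managed WDM setting of the paper. LDBP, as it is usually described, keeps this step structure but makes the per-step linear filters and nonlinear coefficients trainable.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform; the inverse includes the 1/n factor."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def angular_freqs(n, dt):
    """Angular frequencies in DFT bin order (non-negative bins first, then negative bins)."""
    return [2 * math.pi * (m if m <= (n - 1) // 2 else m - n) / (n * dt) for m in range(n)]


def dispersion_step(a, beta2, h, dt):
    """Linear step: all-pass phase exp(j * beta2/2 * w^2 * h) applied in the frequency domain."""
    spec = dft(a)
    w = angular_freqs(len(a), dt)
    spec = [s * cmath.exp(0.5j * beta2 * wi ** 2 * h) for s, wi in zip(spec, w)]
    return dft(spec, inverse=True)


def kerr_step(a, gamma, h):
    """Nonlinear step: self-phase rotation exp(j * gamma * |a|^2 * h); |a| is unchanged."""
    return [v * cmath.exp(1j * gamma * abs(v) ** 2 * h) for v in a]


def propagate(a, beta2, gamma, length, n_steps, dt):
    """Forward split-step model of a lossless fiber span."""
    h = length / n_steps
    for _ in range(n_steps):
        a = kerr_step(dispersion_step(a, beta2, h, dt), gamma, h)
    return a


def dbp(a, beta2, gamma, length, n_steps, dt):
    """Digital back-propagation: undo each forward step in reverse order with step size -h."""
    h = length / n_steps
    for _ in range(n_steps):
        a = dispersion_step(kerr_step(a, gamma, -h), beta2, -h, dt)
    return a


# Hypothetical parameters: one 80 km span, beta2 = -21.7 ps^2/km, gamma = 1.3 /(W km), 64 GSa/s.
beta2, gamma, length, dt = -21.7e-27, 1.3e-3, 80e3, 1 / 64e9
tx = [0.1 * math.exp(-((k - 16) / 4) ** 2) for k in range(32)]  # Gaussian pulse, field in sqrt(W)
rx = propagate(tx, beta2, gamma, length, 10, dt)
rec = dbp(rx, beta2, gamma, length, 10, dt)
print(max(abs(r - t) for r, t in zip(rec, tx)))  # tiny: floating-point round-off only
```

Because the receiver here uses exactly the same step model as the simulated channel, back-propagation recovers the input up to round-off. In a real link the model, step count, and parameters are only approximate, which is part of what learning the per-step filters in LDBP aims to compensate.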

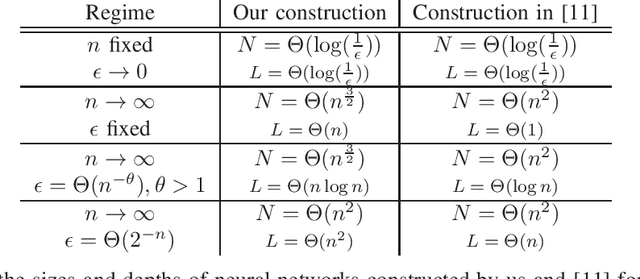

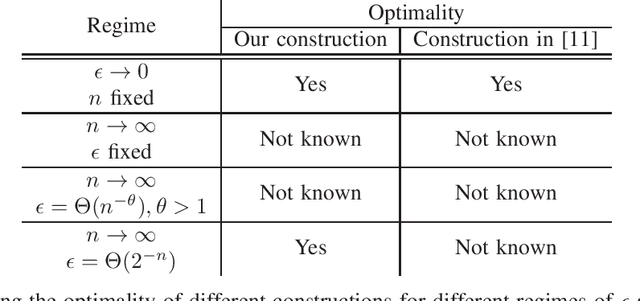

Approximating Probability Distributions by ReLU Networks

Jan 25, 2021

Abstract: How many neurons are needed to approximate a target probability distribution using a neural network with a given input distribution and approximation error? This paper examines this question for the case when the input distribution is uniform, and the target distribution belongs to the class of histogram distributions. We obtain a new upper bound on the number of required neurons, which is strictly better than previously existing upper bounds. The key ingredient in this improvement is an efficient construction of the neural nets representing piecewise linear functions. We also obtain a lower bound on the minimum number of neurons needed to approximate the histogram distributions.
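A standard observation explains why piecewise linear functions are central here: if U is uniform on [0, 1] and F is the CDF of a histogram distribution with positive bin probabilities, then F^{-1}(U) follows that histogram distribution, and F^{-1} is piecewise linear. A continuous piecewise linear function on [0, 1] with K pieces can be written with K ReLU neurons in one hidden layer, f(u) = Σ_j c_j ReLU(u − t_j). The sketch below builds this naive construction for equal-width bins on [0, 1]; the function names are hypothetical, and this is not the paper's more efficient construction or its bounds.

```python
def relu(x):
    return max(0.0, x)


def inverse_cdf_relu_net(probs):
    """One-hidden-layer ReLU net f(u) = sum_j coeffs[j] * relu(u - thresholds[j]) equal to the
    inverse CDF of a histogram distribution with len(probs) equal-width bins on [0, 1].

    Assumes every bin probability is positive and the probabilities sum to 1.
    """
    k = len(probs)
    if any(p <= 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must be positive and sum to 1")
    thresholds, coeffs = [], []
    cum, prev_slope = 0.0, 0.0
    for p in probs:
        slope = (1.0 / k) / p              # inverse CDF rises by one bin width over mass p
        thresholds.append(cum)             # a new linear piece starts at CDF value cum
        coeffs.append(slope - prev_slope)  # each neuron adds the change in slope
        cum += p
        prev_slope = slope
    return thresholds, coeffs


def net(u, thresholds, coeffs):
    return sum(c * relu(u - t) for t, c in zip(thresholds, coeffs))


# Push a uniform grid through the net and histogram the outputs.
probs = [0.5, 0.25, 0.25]
th, co = inverse_cdf_relu_net(probs)
n = 1000
counts = [0] * len(probs)
for i in range(n):
    y = net((i + 0.5) / n, th, co)
    counts[min(int(y * len(probs)), len(probs) - 1)] += 1
print([c / n for c in counts])
```

This construction spends one neuron per bin; the improvement claimed in the abstract comes from representing piecewise linear functions more efficiently than this.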