Manjira Sinha

StayLTC: A Cost-Effective Multimodal Framework for Hospital Length of Stay Forecasting

Apr 08, 2025

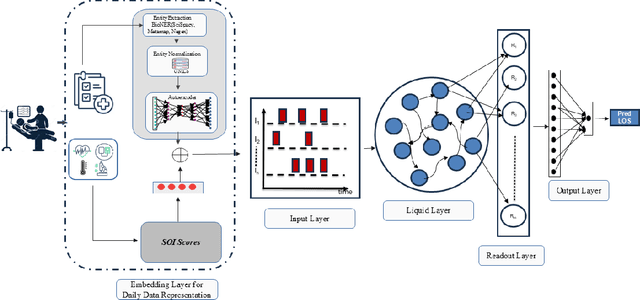

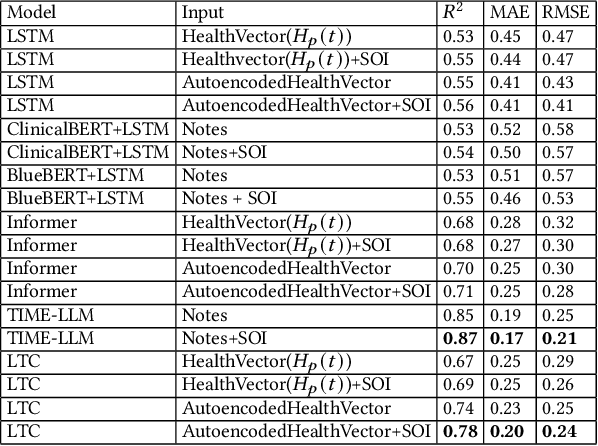

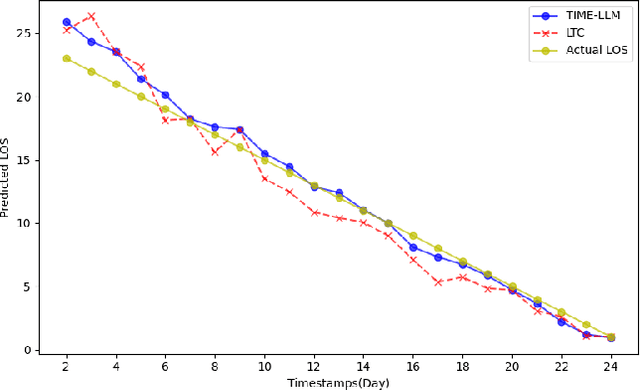

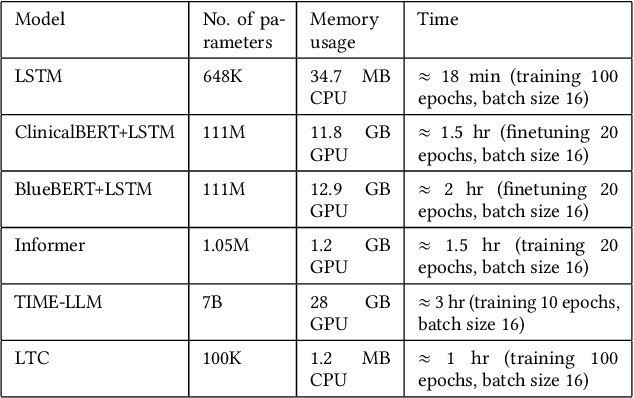

Abstract: Accurate prediction of Length of Stay (LOS) in hospitals is crucial for improving healthcare services, resource management, and cost efficiency. This paper presents StayLTC, a multimodal deep learning framework developed to forecast real-time hospital LOS using Liquid Time-Constant Networks (LTCs). LTCs, with their continuous-time recurrent dynamics, are evaluated against traditional models using structured data from Electronic Health Records (EHRs) and clinical notes. Our evaluation, conducted on the MIMIC-III dataset, demonstrated that LTCs significantly outperform most of the other time series models, offering enhanced accuracy, robustness, and efficiency in resource utilization. Additionally, LTCs perform comparably to time series large language models in LOS prediction while requiring significantly less computational power and memory, underscoring their potential to advance Natural Language Processing (NLP) tasks in healthcare.
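The "continuous-time recurrent dynamics" mentioned in the abstract can be illustrated with a minimal sketch of a single scalar LTC neuron. It assumes the state equation and fused Euler update commonly given in the LTC literature, dx/dt = -(1/tau + f) * x + f * A, with a sigmoid gate f. The function names, parameter values, and sample readings are hypothetical and are not taken from StayLTC itself.

```python
import math


def ltc_fused_step(x, inp, dt, tau=1.0, A=1.0, w=1.0, w_in=1.0, b=0.0):
    """One fused (semi-implicit) Euler step of a scalar LTC neuron.

    Assumed dynamics: dx/dt = -(1/tau + f) * x + f * A,
    where f is a sigmoid gate of the current state and input.
    All parameter values here are illustrative, not from the paper.
    """
    f = 1.0 / (1.0 + math.exp(-(w * x + w_in * inp + b)))  # gate in (0, 1)
    return (x + dt * f * A) / (1.0 + dt * (1.0 / tau + f))


def run_irregular(samples, x0=0.0):
    """Advance the neuron over (elapsed_time, value) pairs.

    Because the step size dt is an explicit argument, unevenly spaced
    observations can be consumed without resampling them to a fixed grid.
    """
    x = x0
    states = []
    for dt, value in samples:
        x = ltc_fused_step(x, value, dt)
        states.append(x)
    return states


# Hypothetical normalized readings taken at uneven intervals (in hours).
samples = [(0.5, 0.2), (2.0, 0.9), (0.1, 0.4), (6.0, 0.7)]
states = run_irregular(samples)
print(states)
```

With these settings the state stays between 0 and A, since the gate both drives the state toward A and shortens the effective time constant. That input-dependent time constant is what the word "liquid" refers to.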

Non-Euclidean Hierarchical Representational Learning using Hyperbolic Graph Neural Networks for Environmental Claim Detection

Feb 19, 2025

Abstract: Transformer-based models dominate NLP tasks like sentiment analysis, machine translation, and claim verification. However, their massive computational demands and lack of interpretability pose challenges for real-world applications requiring efficiency and transparency. In this work, we explore Graph Neural Networks (GNNs) and Hyperbolic Graph Neural Networks (HGNNs) as lightweight yet effective alternatives for Environmental Claim Detection, reframing it as a graph classification problem. We construct dependency parsing graphs to explicitly model syntactic structures, using simple word embeddings (word2vec) for node features with dependency relations encoded as edge features. Our results demonstrate that these graph-based models achieve comparable or superior performance to state-of-the-art transformers while using 30x fewer parameters. This efficiency highlights the potential of structured, interpretable, and computationally efficient graph-based approaches.

Deep Learning for Slum Mapping in Remote Sensing Images: A Meta-analysis and Review

Jun 12, 2024

Abstract: The major Sustainable Development Goals (SDG) 2030, set by the United Nations Development Program (UNDP), include sustainable cities and communities, no poverty, and reduced inequalities. However, millions of people live in slums or informal settlements with poor living conditions in many major cities around the world, especially in less developed countries. To emancipate these settlements and their inhabitants through government intervention, accurate data about slum location and extent is required. While ground survey data is the most reliable, such surveys are costly and time-consuming. An alternative is remotely sensed data obtained from very high-resolution (VHR) imagery. With the advancement of new technology, remote sensing-based mapping of slums has emerged as a prominent research area. The parallel rise of Artificial Intelligence, especially Deep Learning, has added a new dimension to this field, as it allows automated analysis of satellite imagery to identify complex spatial patterns associated with slums. This article offers a detailed review and meta-analysis of research on slum mapping using remote sensing imagery from 2014 to 2024, with a special focus on deep learning approaches. Our analysis reveals a trend towards increasingly complex neural network architectures, with advancements in data preprocessing and model training techniques significantly enhancing slum identification accuracy. We have attempted to identify key methodologies that are effective across diverse geographic contexts. While acknowledging the transformative impact of Convolutional Neural Networks (CNNs) on slum detection, our review underscores the absence of a universally optimal model, suggesting the need for context-specific adaptations. We also identify prevailing challenges in this field, such as data limitations and a lack of model explainability, and suggest potential strategies for overcoming these.

Optimizing Odia Braille Literacy: The Influence of Speed on Error Reduction and Enhanced Comprehension

Oct 12, 2023

Abstract: This study aims to conduct a detailed analysis of Odia Braille reading comprehension among students with visual disabilities. Specifically, the study explores their reading speed and hand or finger movements. The study also aims to investigate any comprehension difficulties and reading errors they may encounter. Six students from the 9th and 10th grades, aged between 14 and 16, participated in the study. We observed participants' hand movements to understand how reading errors were connected to hand movement and to identify the students' reading difficulties. We also evaluated the participants' Odia Braille reading skills, including their reading speed (in words per minute), errors, and comprehension. The average reading speed of the Odia Braille readers was 17.64 wpm. According to the study, there was a noticeable correlation between reading speed and reading errors. As reading speed decreased, the number of reading errors tended to increase. Moreover, the study established a link between reduced Braille reading errors and improved reading comprehension. In contrast, the study found that better comprehension was associated with increased reading speed. The researchers concluded with some interesting findings about preferred Braille reading patterns. These findings have important theoretical, developmental, and methodological implications for instruction.

SepHRNet: Generating High-Resolution Crop Maps from Remote Sensing imagery using HRNet with Separable Convolution

Jul 11, 2023

Abstract: The accurate mapping of crop production is crucial for ensuring food security, effective resource management, and sustainable agricultural practices. One way to achieve this is by analyzing high-resolution satellite imagery. Deep Learning has been successful in analyzing images, including remote sensing imagery. However, capturing intricate crop patterns is challenging due to their complexity and variability. In this paper, we propose a novel Deep Learning approach that integrates HRNet with Separable Convolutional layers to capture spatial patterns and Self-attention to capture temporal patterns of the data. The HRNet model acts as a backbone and extracts high-resolution features from crop images. Spatially separable convolution in the shallow layers of the HRNet model captures intricate crop patterns more effectively while reducing the computational cost. The multi-head attention mechanism captures long-term temporal dependencies from the encoded vector representation of the images. Finally, a CNN decoder generates a crop map from the aggregated representation. Adaboost is used on top of this to further improve accuracy. The proposed algorithm achieves a high classification accuracy of 97.5% and IoU of 55.2% in generating crop maps. We evaluate the performance of our pipeline on the Zuericrop dataset and demonstrate that our results outperform state-of-the-art models such as U-Net++, ResNet50, VGG19, InceptionV3, DenseNet, and EfficientNet. This research showcases the potential of Deep Learning for Earth Observation Systems.
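The cost saving attributed to spatially separable convolution can be illustrated with a small sketch. It assumes the usual definition, in which a k x k kernel is factored into a k x 1 pass followed by a 1 x k pass. The helper name, the Sobel-style kernel, and the toy image are illustrative choices; the abstract does not specify SepHRNet's actual layer configuration.

```python
def conv2d_valid(image, kernel):
    """Plain 'valid' 2D cross-correlation on nested lists (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 3x3 kernel that factors exactly into a column and a row (Sobel-style).
col = [[1], [2], [1]]   # 3x1 vertical pass
row = [[1, 0, -1]]      # 1x3 horizontal pass
full = [[c[0] * r for r in row[0]] for c in col]  # outer product, 3x3

# Toy 6x6 integer image so results can be compared exactly.
image = [[(3 * i + 5 * j) % 7 for j in range(6)] for i in range(6)]

direct = conv2d_valid(image, full)                       # one 3x3 pass
two_pass = conv2d_valid(conv2d_valid(image, col), row)   # 3x1 then 1x3

params_full = len(full) * len(full[0])   # 9 weights
params_separable = len(col) + len(row[0])  # 3 + 3 = 6 weights
print(direct == two_pass, params_full, params_separable)
```

Only rank-1 kernels factor exactly like this, so a learned separable layer trades some expressiveness for fewer weights and multiplications (2k instead of k^2 per output position).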
