Mandar Pitale

HySAFE-AI: Hybrid Safety Architectural Analysis Framework for AI Systems: A Case Study

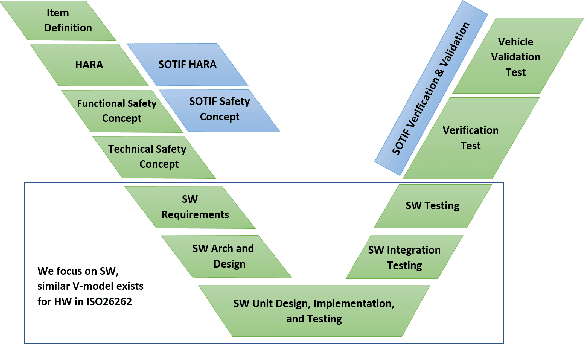

Jul 23, 2025

Abstract:AI has become integral to safety-critical areas like autonomous driving systems (ADS) and robotics. The architecture of recent autonomous systems is trending toward end-to-end (E2E) monolithic architectures such as large language models (LLMs) and vision language models (VLMs). In this paper, we review different architectural solutions and then evaluate the efficacy of common safety analyses such as failure modes and effects analysis (FMEA) and fault tree analysis (FTA). We show how these techniques can be improved for the intricate nature of the foundational models, particularly in how they form and utilize latent representations. We introduce HySAFE-AI, Hybrid Safety Architectural Analysis Framework for AI Systems, a hybrid framework that adapts traditional methods to evaluate the safety of AI systems. Lastly, we offer hints of future work and suggestions to guide the evolution of future AI safety standards.

Inherent Diverse Redundant Safety Mechanisms for AI-based Software Elements in Automotive Applications

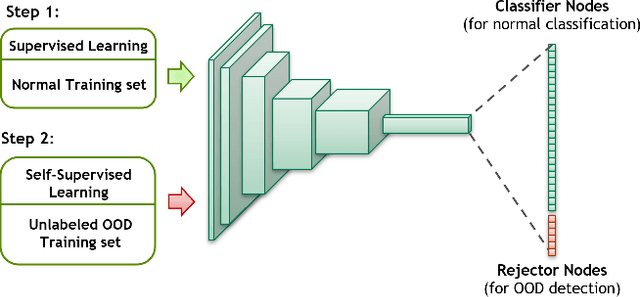

Feb 29, 2024

Abstract:This paper explores the role and challenges of Artificial Intelligence (AI) algorithms, specifically AI-based software elements, in autonomous driving systems. These AI systems are fundamental in executing real-time critical functions in complex and high-dimensional environments. They handle vital tasks like multi-modal perception, cognition, and decision-making tasks such as motion planning, lane keeping, and emergency braking. A primary concern relates to the ability (and necessity) of AI models to generalize beyond their initial training data. This generalization issue becomes evident in real-time scenarios, where models frequently encounter inputs not represented in their training or validation data. In such cases, AI systems must still function effectively despite facing distributional or domain shifts. This paper investigates the risk associated with overconfident AI models in safety-critical applications like autonomous driving. To mitigate these risks, methods for training AI models that help maintain performance without overconfidence are proposed. This involves implementing certainty reporting architectures and ensuring diverse training data. While various distribution-based methods exist to provide safety mechanisms for AI models, there is a noted lack of systematic assessment of these methods, especially in the context of safety-critical automotive applications. Many methods in the literature do not adapt well to the quick response times required in safety-critical edge applications. This paper reviews these methods, discusses their suitability for safety-critical applications, and highlights their strengths and limitations. The paper also proposes potential improvements to enhance the safety and reliability of AI algorithms in autonomous vehicles in the context of rapid and accurate decision-making processes.

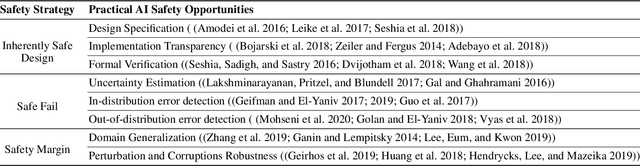

Practical Solutions for Machine Learning Safety in Autonomous Vehicles

Dec 20, 2019

Abstract:Autonomous vehicles rely on machine learning to solve challenging tasks in perception and motion planning. However, automotive software safety standards have not fully evolved to address the challenges of machine learning safety such as interpretability, verification, and performance limitations. In this paper, we review and organize practical machine learning safety techniques that can complement engineering safety for machine learning based software in autonomous vehicles. Our organization maps safety strategies to state-of-the-art machine learning techniques in order to enhance dependability and safety of machine learning algorithms. We also discuss security limitations and user experience aspects of machine learning components in autonomous vehicles.
