Madeleine Weinstein

$O(k)$-Equivariant Dimensionality Reduction on Stiefel Manifolds

Sep 19, 2023

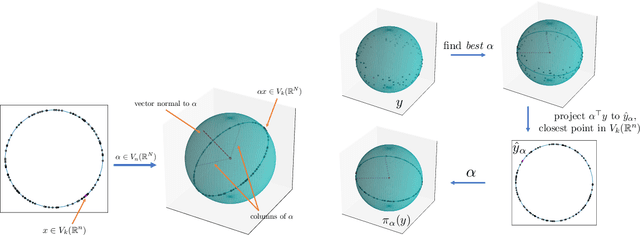

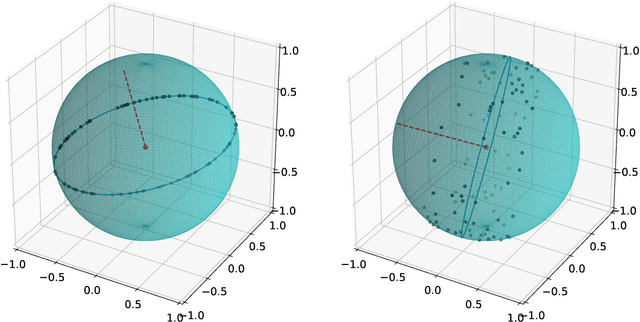

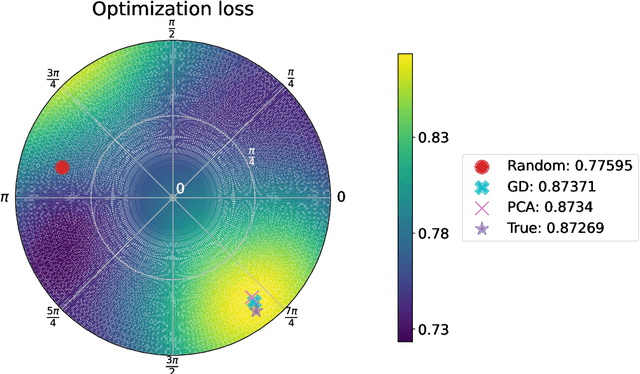

Abstract: Many real-world datasets live on high-dimensional Stiefel and Grassmannian manifolds, $V_k(\mathbb{R}^N)$ and $Gr(k, \mathbb{R}^N)$ respectively, and benefit from projection onto lower-dimensional Stiefel (respectively, Grassmannian) manifolds. In this work, we propose an algorithm called Principal Stiefel Coordinates (PSC) to reduce data dimensionality from $V_k(\mathbb{R}^N)$ to $V_k(\mathbb{R}^n)$ in an $O(k)$-equivariant manner ($k \leq n \ll N$). We begin by observing that each element $\alpha \in V_n(\mathbb{R}^N)$ defines an isometric embedding of $V_k(\mathbb{R}^n)$ into $V_k(\mathbb{R}^N)$. Next, we optimize for an embedding map that minimizes data-fit error by warm-starting with the output of principal component analysis (PCA) and applying gradient descent. Then, we define a continuous and $O(k)$-equivariant map $\pi_\alpha$ that acts as a "closest point operator" to project the data onto the image of $V_k(\mathbb{R}^n)$ in $V_k(\mathbb{R}^N)$ under the embedding determined by $\alpha$, while minimizing distortion. Because this dimensionality reduction is $O(k)$-equivariant, these results extend to Grassmannian manifolds as well. Lastly, we show that the PCA output globally minimizes projection error in a noiseless setting, but that our algorithm achieves a meaningfully different and improved outcome when the data do not lie exactly on the image of a linearly embedded lower-dimensional Stiefel manifold as above. Multiple numerical experiments using synthetic and real-world data are performed.
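The embedding and projection described in the abstract can be illustrated with a small NumPy sketch. It rests on assumptions that the abstract does not confirm: that the embedding determined by $\alpha$ is left multiplication $y \mapsto \alpha y$, and that $\pi_\alpha$ can be modeled as the orthogonal polar factor of $\alpha^T x$. The function names `random_stiefel`, `embed`, `polar_factor`, and `project` are hypothetical and chosen only for this illustration; this is not the authors' implementation.

```python
import numpy as np

def random_stiefel(rows, cols, rng):
    """Sample a rows x cols matrix with orthonormal columns via reduced QR."""
    q, _ = np.linalg.qr(rng.standard_normal((rows, cols)))
    return q

def embed(alpha, y):
    """Assumed embedding V_k(R^n) -> V_k(R^N): left multiplication by alpha."""
    return alpha @ y

def polar_factor(m):
    """Orthogonal polar factor of a full-column-rank matrix, computed by SVD."""
    u, _, vt = np.linalg.svd(m, full_matrices=False)
    return u @ vt

def project(alpha, x):
    """Assumed model of pi_alpha: polar factor of alpha^T x (an n x k matrix)."""
    return polar_factor(alpha.T @ x)

rng = np.random.default_rng(0)
N, n, k = 50, 5, 2

alpha = random_stiefel(N, n, rng)   # element of V_n(R^N)
y1 = random_stiefel(n, k, rng)      # points of V_k(R^n)
y2 = random_stiefel(n, k, rng)
x1 = embed(alpha, y1)               # embedded point in V_k(R^N)
x2 = embed(alpha, y2)

# Frobenius distances before and after embedding (should agree).
dist_low = np.linalg.norm(y1 - y2)
dist_high = np.linalg.norm(x1 - x2)

# A generic point of V_k(R^N) and an orthogonal k x k matrix g.
x = random_stiefel(N, k, rng)
g = random_stiefel(k, k, rng)

# Equivariance check: projecting x g versus projecting x and then applying g.
lhs = project(alpha, x @ g)
rhs = project(alpha, x) @ g
```

Under these assumptions, the embedded point keeps orthonormal columns, the projection recovers `y1` from `x1`, and `lhs` matches `rhs` up to floating-point error whenever $\alpha^T x$ has full column rank. Equivariance under right multiplication by $O(k)$ is what lets a map of this kind descend to the Grassmannian.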
