M. R. C. Mahdy

CQ CNN: A Hybrid Classical Quantum Convolutional Neural Network for Alzheimer's Disease Detection Using Diffusion Generated and U Net Segmented 3D MRI

Mar 04, 2025

Abstract: The detection of Alzheimer's disease (AD) from clinical MRI data is an active area of research in medical imaging. Recent advances in quantum computing, particularly the integration of parameterized quantum circuits (PQCs) with classical machine learning architectures, offer new opportunities to develop models that may outperform traditional methods. However, quantum machine learning (QML) remains in its early stages and requires further experimental analysis to better understand its behavior and limitations. In this paper, we propose an end-to-end hybrid classical-quantum convolutional neural network (CQ CNN) for AD detection using clinically formatted 3D MRI data. Our approach involves developing a framework to make 3D MRI data usable for machine learning, designing and training a brain tissue segmentation model (Skull Net), and training a diffusion model to generate synthetic images for the minority class. Our converged models exhibit potential quantum advantages, achieving higher accuracy in fewer epochs than classical models. The proposed beta8 3-qubit model achieves an accuracy of 97.50%, surpassing state-of-the-art (SOTA) models while requiring significantly fewer computational resources. In particular, the architecture employs only 13K parameters (0.48 MB), reducing the parameter count by more than 99.99% compared to current SOTA models. Furthermore, the diffusion-generated data used to train our quantum models, in conjunction with real samples, preserve clinical structural standards, representing a notable first in the field of QML. We conclude that models with CQ CNN-like architectures, given further improvements in gradient optimization techniques, could become a viable option and even a potential alternative to classical models for AD detection, especially in data-limited and resource-constrained clinical settings.
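To make the idea of a parameterized quantum circuit and its gradient-based training concrete, the following is a minimal sketch, not the CQ CNN architecture from the abstract. It assumes a one-qubit circuit RY(theta) applied to |0> with a Pauli-Z measurement, simulated classically, and uses the parameter-shift rule for the gradient. All function names, the learning rate, and the target value are hypothetical choices made for illustration.

```python
import math


def ry_state(theta):
    """Amplitudes of RY(theta)|0>, i.e. (cos(theta/2), sin(theta/2))."""
    return (math.cos(theta / 2.0), math.sin(theta / 2.0))


def expect_z(theta):
    """Expectation value of Pauli-Z for the state RY(theta)|0>."""
    a0, a1 = ry_state(theta)
    return a0 * a0 - a1 * a1  # equals cos(theta)


def parameter_shift_grad(theta):
    """Gradient of expect_z via the parameter-shift rule (shift of pi/2)."""
    shift = math.pi / 2.0
    return 0.5 * (expect_z(theta + shift) - expect_z(theta - shift))


def train(theta=0.3, target=0.0, lr=0.2, steps=200):
    """Plain gradient descent on the squared error (expect_z - target)^2."""
    for _ in range(steps):
        error = expect_z(theta) - target
        # Chain rule: d/dtheta (f - target)^2 = 2 * (f - target) * f'
        theta -= lr * 2.0 * error * parameter_shift_grad(theta)
    return theta
```

In a hybrid model, a circuit like this would typically sit behind classical convolutional layers, with the rotation angles trained jointly with the classical weights; that wiring is omitted here.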

A Deep Learning Approach to Predict the Fall [of Price] of Cryptocurrency Long Before its Actual Fall

Nov 20, 2024

Abstract: The cryptocurrency market is one of the world's most rapidly growing financial markets. It is regarded as more volatile and illiquid than traditional markets such as equities, foreign exchange, and commodities, and this risk creates uncertainty among investors. The purpose of this research is to predict the magnitude of the risk factor, also called volatility, of the cryptocurrency market. Our approach is intended to assist people who invest in the cryptocurrency market by addressing the problems and difficulties they experience. It starts by calculating the risk factor of the cryptocurrency market from the existing parameters. For twenty elements of the cryptocurrency market, the risk factor has been predicted using different machine learning algorithms such as CNN, LSTM, BiLSTM, and GRU, all applied to the calculated risk factor parameter. A new model has been developed to predict better than the existing models. The proposed model's RMSE ranges from a lowest value of 0.0089 to a highest value of 1.3229, whereas for the existing models the lowest RMSE was 0.02769 and the highest was 14.5092. The proposed model therefore performs much better than the existing models while generalizing properly. Using our approach, it will be easier for investors to trade in complicated and challenging financial assets like Bitcoin, Ethereum, and Dogecoin.
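The abstract does not state how the risk factor is derived from the market parameters. As a hedged illustration only, the sketch below assumes one common definition of volatility, the rolling sample standard deviation of log returns, and shows how an RMSE like the ones reported would be computed. The function names and the window size are hypothetical.

```python
import math


def log_returns(prices):
    """Log return between each pair of consecutive prices."""
    return [math.log(later / earlier) for earlier, later in zip(prices, prices[1:])]


def rolling_volatility(returns, window):
    """Sample standard deviation of returns over a sliding window.

    Assumption: this stands in for the 'risk factor'; the paper may use
    a different formula.
    """
    if window < 2:
        raise ValueError("window must be at least 2")
    result = []
    for end in range(window, len(returns) + 1):
        chunk = returns[end - window:end]
        mean = sum(chunk) / window
        variance = sum((r - mean) ** 2 for r in chunk) / (window - 1)
        result.append(math.sqrt(variance))
    return result


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))
```

A forecasting model such as an LSTM or GRU would be trained on the volatility series, and `rmse` would then compare its predictions against held-out values. Lower is better, which is why the comparison of lowest and highest RMSE across assets matters.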

Edge Detection Quantumized: A Novel Quantum Algorithm For Image Processing

Apr 10, 2024

Abstract: Quantum image processing is a research field that explores the use of quantum computing and algorithms for image processing tasks such as image encoding and edge detection. Although classical edge detection algorithms perform reasonably well and are quite efficient, they slow down markedly on large datasets of high-resolution images. Quantum computing promises to deliver a significant performance boost and breakthroughs in various sectors. The Quantum Hadamard Edge Detection (QHED) algorithm, for example, runs in constant time and thus detects edges much faster than any classical algorithm. However, the original QHED algorithm is designed for Quantum Probability Image Encoding (QPIE) and mainly works for binary images. This paper presents a novel protocol that combines the Flexible Representation of Quantum Images (FRQI) encoding with a modified QHED algorithm. An improved edge outline method is also proposed, resulting in a better object outline and more accurate edge detection than the traditional QHED algorithm.
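For readers unfamiliar with QHED, the following is a classical simulation sketch of the basic idea as it is commonly described for QPIE-encoded images, not the FRQI-based protocol this paper proposes. Pixel intensities are normalized into amplitudes, and a Hadamard gate on the least significant qubit turns each pair of neighboring amplitudes into their scaled sum and difference, so a nonzero difference marks an edge inside that pair. The function names and the threshold are hypothetical, and this simplified version only compares pixels (0,1), (2,3), and so on.

```python
import math


def qpie_encode(pixels):
    """Normalize pixel intensities so they form valid state amplitudes."""
    norm = math.sqrt(sum(p * p for p in pixels))
    if norm == 0:
        raise ValueError("image must contain at least one nonzero pixel")
    return [p / norm for p in pixels]


def hadamard_on_last_qubit(amplitudes):
    """Apply H to the least significant qubit of the state vector.

    Each pair (a, b) at indices (2k, 2k+1) becomes ((a+b)/sqrt2, (a-b)/sqrt2).
    """
    scale = 1.0 / math.sqrt(2.0)
    out = []
    for k in range(0, len(amplitudes), 2):
        a, b = amplitudes[k], amplitudes[k + 1]
        out.extend([scale * (a + b), scale * (a - b)])
    return out


def pair_edges(pixels, threshold=1e-9):
    """Flag an edge wherever the two pixels of a pair differ."""
    n = len(pixels)
    if n < 2 or n & (n - 1) != 0:
        raise ValueError("pixel count must be a power of two, at least 2")
    amplitudes = hadamard_on_last_qubit(qpie_encode(pixels))
    # Odd indices hold the within-pair differences.
    return [abs(amplitudes[k]) > threshold for k in range(1, n, 2)]
```

For example, `pair_edges([0, 0, 0, 1, 1, 1, 1, 1])` flags only the second pair, where the intensity jumps from 0 to 1. Detecting boundaries between pairs requires an extra shifted copy of the image, which is usually handled with an ancilla qubit and is left out of this sketch.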

Bridging Classical and Quantum Machine Learning: Knowledge Transfer From Classical to Quantum Neural Networks Using Knowledge Distillation

Nov 23, 2023

Abstract: Recent studies have shown that quantum neural networks surpass classical neural networks in tasks like image classification when a similar number of learnable parameters are used. However, the development and optimization of quantum models are currently hindered by issues such as qubit instability and limited qubit availability, leading to error-prone systems with weak performance. In contrast, classical models can exhibit high performance owing to substantial resource availability. As a result, more studies have been focusing on hybrid classical-quantum integration. One line of research focuses in particular on transfer learning through classical-quantum integration or quantum-quantum approaches. Unlike previous studies, this paper introduces a new method to transfer knowledge from classical to quantum neural networks using knowledge distillation, effectively bridging the gap between classical machine learning and emergent quantum computing techniques. We adapt classical convolutional neural network (CNN) architectures like LeNet and AlexNet to serve as teacher networks, facilitating the training of student quantum models by sending supervisory signals during backpropagation through KL-divergence. The approach yields significant performance improvements for the quantum models by depending solely on classical CNNs, with quantum models achieving an average accuracy improvement of 0.80% on the MNIST dataset and 5.40% on the more complex Fashion MNIST dataset. Applying this technique eliminates the cumbersome training of huge quantum models for transfer learning in resource-constrained settings and enables re-using existing pre-trained classical models to improve performance. Thus, this study paves the way for future research in quantum machine learning (QML) by positioning knowledge distillation as a core technique for advancing QML applications.
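The KL-divergence supervisory signal mentioned above can be sketched as a distillation loss. This is a minimal illustration under stated assumptions, not the paper's training code: it assumes the widely used formulation with temperature-softened softmax outputs and a weighted sum of the distillation term and the ordinary cross-entropy. The `temperature` and `alpha` parameters and all function names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    peak = max(logits)
    exps = [math.exp((z - peak) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


def distillation_loss(teacher_logits, student_logits, label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of the soft-target KL term and hard-label cross-entropy."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # The temperature**2 factor keeps gradient magnitudes comparable
    # across temperatures in the common formulation.
    soft_term = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    hard_term = -math.log(softmax(student_logits)[label] + 1e-12)
    return alpha * soft_term + (1.0 - alpha) * hard_term
```

Here the teacher logits would come from a pre-trained classical CNN and the student logits from the quantum model's measured outputs. Only the student's parameters would be updated against this loss.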

Improving Malaria Parasite Detection from Red Blood Cell using Deep Convolutional Neural Networks

Jul 23, 2019

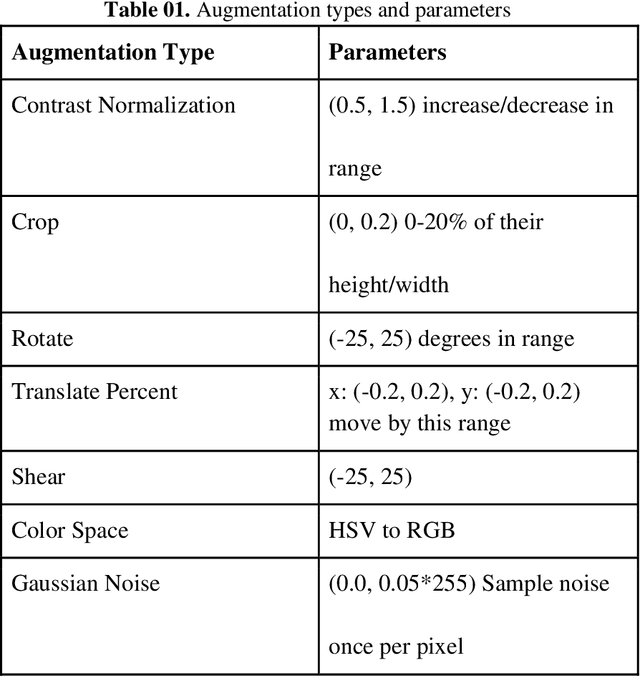

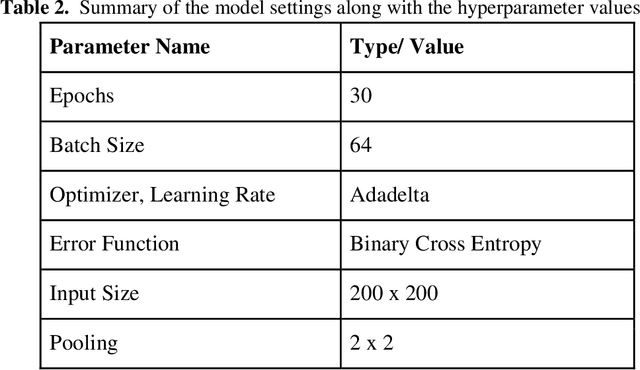

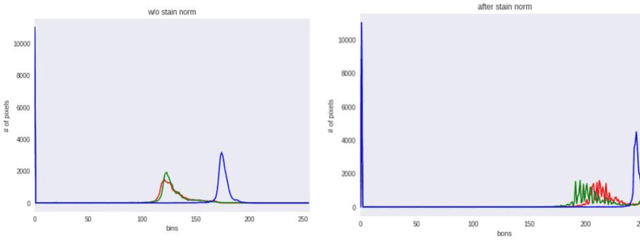

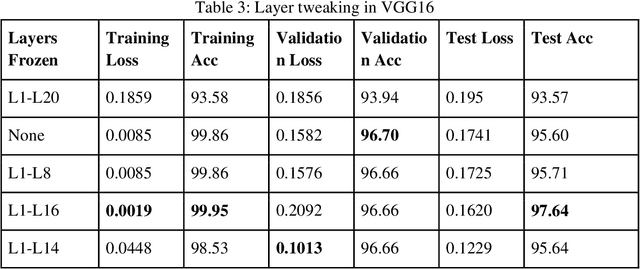

Abstract: Malaria is a life-threatening disease transmitted by the bite of female Anopheles mosquitoes and is considered endemic in many parts of the world. This article focuses on improving malaria detection from patches segmented from microscopic images of red blood cell smears by introducing a deep convolutional neural network. Compared to traditional methods that rely on tedious hand-engineered feature extraction, the proposed method uses deep learning in an end-to-end arrangement that performs both feature extraction and classification directly from the raw segmented patches of the red blood cell smears. The dataset used in this study is the NIH Malaria Dataset from the National Institutes of Health. Accuracy and loss, together with 5-fold cross-validation, were used to compare and select the best-performing architecture. To maximize performance, existing standard pre-processing techniques from the literature have also been tested. In addition, several other complex architectures have been implemented and tested to pick the best-performing model. A holdout test has also been conducted to verify how well the proposed model generalizes to unseen data. Our best model achieves an accuracy of almost 97.77%.
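The 5-fold cross-validation used for model selection can be sketched as follows. This is an illustrative outline only: the helper names, the fixed seed, and the `evaluate` callback (which would train a model on the training indices and return its validation accuracy) are hypothetical and not taken from the paper.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Striding gives k disjoint folds whose sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def cross_validate(n_samples, evaluate, k=5, seed=0):
    """Average the score returned by evaluate(train, validation) over k folds."""
    scores = [
        evaluate(train, validation)
        for train, validation in k_fold_indices(n_samples, k, seed)
    ]
    return sum(scores) / len(scores)
```

Each candidate architecture would be scored with `cross_validate`, and the one with the best mean score kept. The separate holdout test mentioned in the abstract would use data that never enters any fold.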
