M. Janina Sarol

UIUC_BioNLP at SemEval-2021 Task 11: A Cascade of Neural Models for Structuring Scholarly NLP Contributions

May 12, 2021

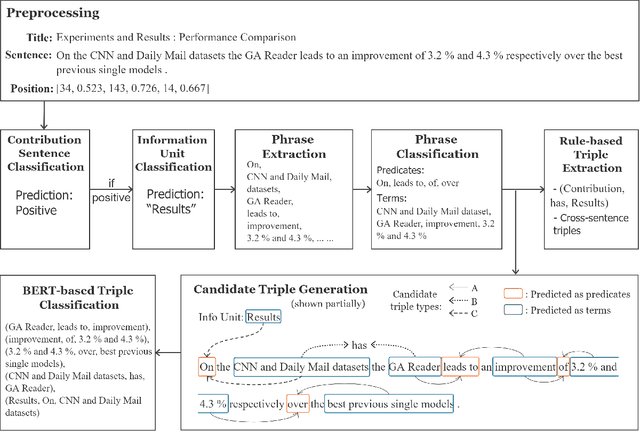

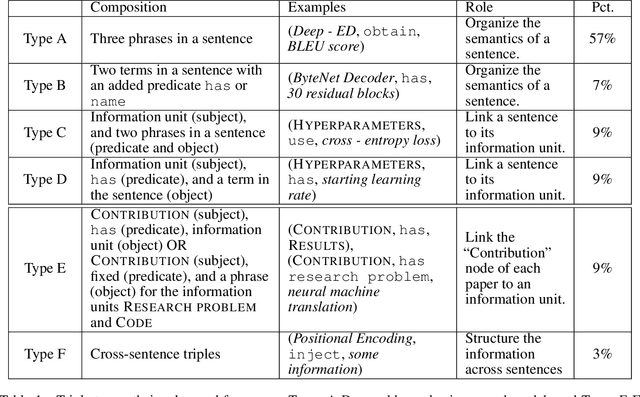

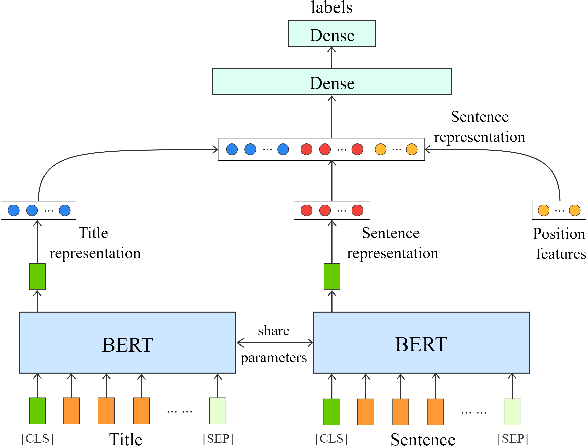

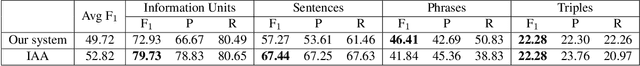

Abstract: We propose a cascade of neural models that performs sentence classification, phrase recognition, and triple extraction to automatically structure the scholarly contributions of NLP publications. To identify the most important contribution sentences in a paper, we used a BERT-based classifier with positional features (Subtask 1). A BERT-CRF model was used to recognize and characterize relevant phrases in contribution sentences (Subtask 2). We categorized the triples into several types based on whether and how their elements were expressed in text, and addressed each type using separate BERT-based classifiers as well as rules (Subtask 3). Our system was officially ranked second in Phase 1 evaluation and first in both parts of Phase 2 evaluation. After fixing a submission error in Phase 1, our approach yields the best results overall. In this paper, in addition to a system description, we also provide further analysis of our results, highlighting the system's strengths and limitations. We make our code publicly available at https://github.com/Liu-Hy/nlp-contrib-graph.

An Empirical Methodology for Detecting and Prioritizing Needs during Crisis Events

Jun 02, 2020

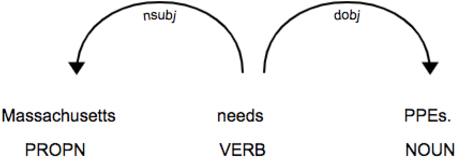

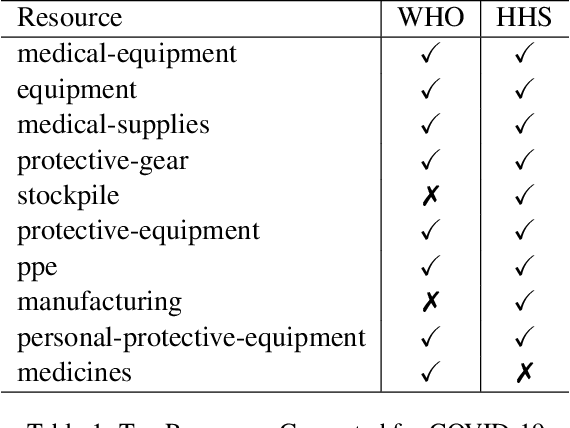

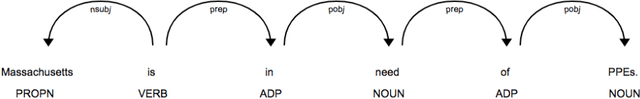

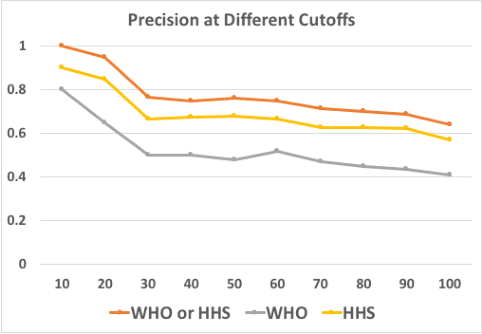

Abstract: In times of crisis, identifying essential needs is a crucial step in providing appropriate resources and services to affected entities. Social media platforms such as Twitter contain vast amounts of information about the general public's needs. However, the sparsity of this information and the amount of noisy content make it challenging for practitioners to effectively identify shared information on these platforms. In this study, we propose two novel methods for two distinct but related needs detection tasks: the identification of 1) a list of needed resources ranked by priority, and 2) sentences that specify who-needs-what resources. We evaluated our methods on a set of tweets about the COVID-19 crisis. For task 1 (detecting top needs), we compared our results against two given lists of resources and achieved 64% precision. For task 2 (detecting who-needs-what), we compared our results on a set of 1,000 annotated tweets and achieved a 68% F1-score.
