M. Amin Alandihallaj

CubeSat Orbit Insertion Maneuvering Using J2 Perturbation

Jul 17, 2025

Abstract: The precise insertion of CubeSats into designated orbits is a complex task, primarily due to the limited propulsion capabilities and constrained fuel reserves onboard, which severely restrict the scope for large orbital corrections. This limitation necessitates the development of more efficient maneuvering techniques to ensure mission success. In this paper, we propose a maneuvering sequence that exploits the natural J2 perturbation caused by the Earth's oblateness. By utilizing the secular effects of this perturbation, it is possible to passively influence key orbital parameters such as the argument of perigee and the right ascension of the ascending node, thereby reducing the need for extensive propulsion-based corrections. The approach is designed to optimize the CubeSat's orbital insertion and minimize the total fuel required for trajectory adjustments, making it particularly suitable for fuel-constrained missions. The proposed methodology is validated through comprehensive numerical simulations that examine different initial orbital conditions and perturbation environments. Case studies are presented to demonstrate the effectiveness of the J2-augmented strategy in achieving accurate orbital insertion, showing a major reduction in fuel consumption compared to traditional methods. The results underscore the potential of this approach to extend the operational life and capabilities of CubeSats, offering a viable solution for future low-Earth orbit missions.
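As a rough illustration of the secular J2 effects mentioned in the abstract, the sketch below evaluates the standard first-order drift rates of the right ascension of the ascending node and the argument of perigee. It is not the paper's maneuvering sequence. The function name `j2_secular_rates`, the constant values, and the example orbit are assumptions chosen for illustration.

```python
import math

# Commonly quoted Earth constants (assumed values, not taken from the paper).
MU_EARTH = 398600.4418   # gravitational parameter, km^3/s^2
R_EARTH = 6378.137       # equatorial radius, km
J2 = 1.08263e-3          # second zonal harmonic coefficient


def j2_secular_rates(a_km, e, inc_rad):
    """Return first-order secular drift rates (rad/s) due to J2.

    a_km    : semi-major axis in km
    e       : eccentricity
    inc_rad : inclination in radians
    Returns (raan_dot, argp_dot).
    """
    n = math.sqrt(MU_EARTH / a_km**3)        # mean motion, rad/s
    p = a_km * (1.0 - e**2)                  # semi-latus rectum, km
    factor = J2 * (R_EARTH / p) ** 2 * n
    raan_dot = -1.5 * factor * math.cos(inc_rad)
    argp_dot = 0.75 * factor * (5.0 * math.cos(inc_rad) ** 2 - 1.0)
    return raan_dot, argp_dot


if __name__ == "__main__":
    # Hypothetical example: near-circular orbit at about 400 km altitude.
    raan_dot, argp_dot = j2_secular_rates(6778.0, 0.0, math.radians(51.6))
    to_deg_per_day = math.degrees(1.0) * 86400.0
    print(f"RAAN drift: {raan_dot * to_deg_per_day:.3f} deg/day")
    print(f"Argument of perigee drift: {argp_dot * to_deg_per_day:.3f} deg/day")
```

Because both rates depend on semi-major axis, eccentricity, and inclination, a spacecraft can in principle wait in a slightly different orbit and let the differential drift do part of the plane or apsidal-line change before a final small burn.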

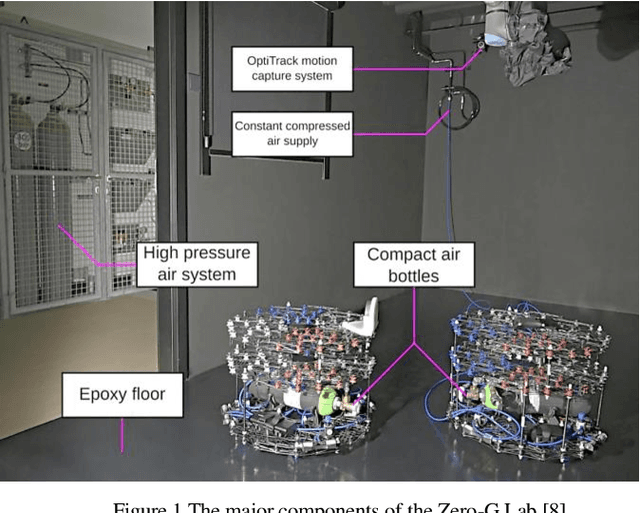

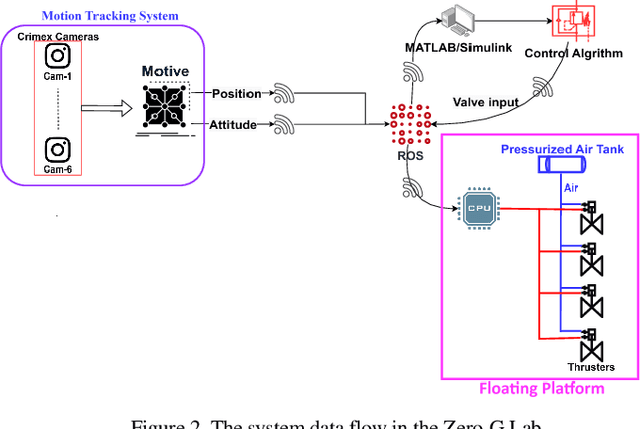

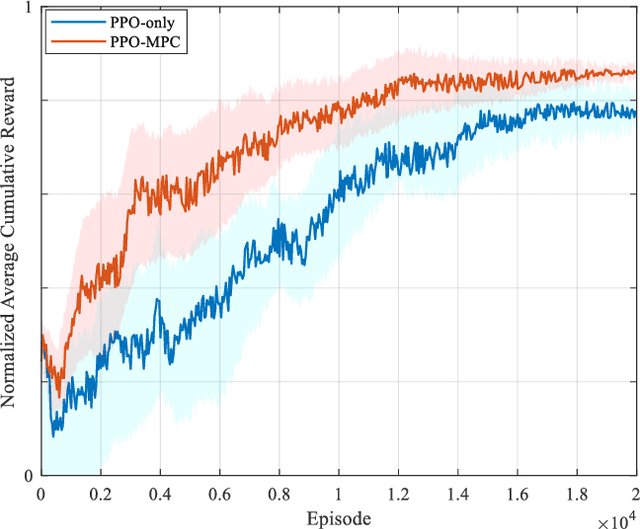

PPO-based Dynamic Control of Uncertain Floating Platforms in the Zero-G Environment

Jul 03, 2024

Abstract: In the field of space exploration, floating platforms play a crucial role in scientific investigations and technological advancements. However, controlling these platforms in zero-gravity environments presents unique challenges, including uncertainties and disturbances. This paper introduces an innovative approach that combines Proximal Policy Optimization (PPO) with Model Predictive Control (MPC) in the zero-gravity laboratory (Zero-G Lab) at the University of Luxembourg. This approach leverages PPO's reinforcement learning power and MPC's precision to navigate the complex control dynamics of floating platforms. Unlike traditional control methods, this PPO-MPC approach learns from MPC predictions, adapting to unmodeled dynamics and disturbances, resulting in a resilient control framework tailored to the zero-gravity environment. Simulations and experiments in the Zero-G Lab validate this approach, showcasing the adaptability of the PPO agent. This research opens new possibilities for controlling floating platforms in zero-gravity settings, promising advancements in space exploration.

Safe Hierarchical Reinforcement Learning for CubeSat Task Scheduling Based on Energy Consumption

Sep 21, 2023

Abstract: This paper presents a Hierarchical Reinforcement Learning methodology tailored for optimizing CubeSat task scheduling in Low Earth Orbit (LEO). Incorporating a high-level policy for global task distribution and a low-level policy for real-time adaptations as a safety mechanism, our approach integrates the Similarity Attention-based Encoder (SABE) for task prioritization and an MLP estimator for energy consumption forecasting. Integrating this mechanism creates a safe and fault-tolerant system for CubeSat task scheduling. Simulation results validate the superior convergence and task success rate of the Hierarchical Reinforcement Learning approach, which outperforms both the MADDPG model and traditional random scheduling across multiple CubeSat configurations.
