Luka Čehovin Zajc

Performance Evaluation Methodology for Long-Term Visual Object Tracking

Jun 19, 2019

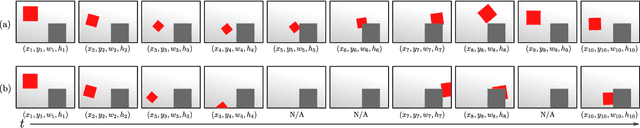

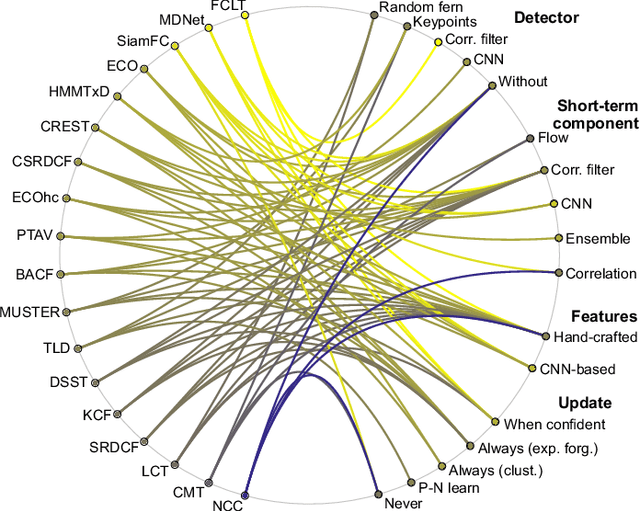

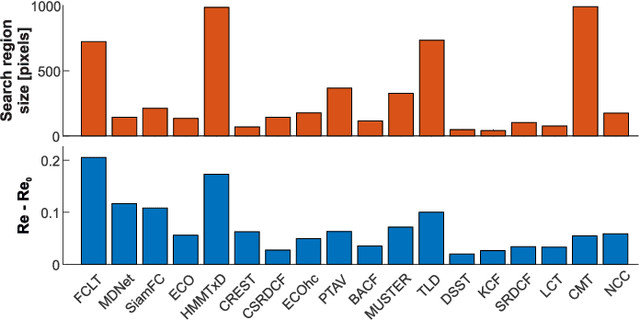

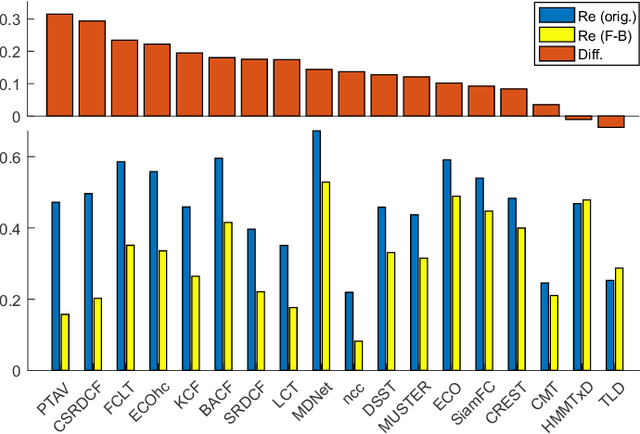

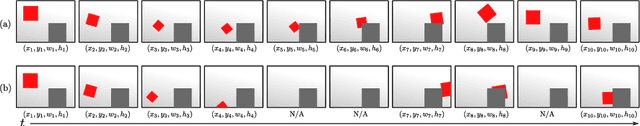

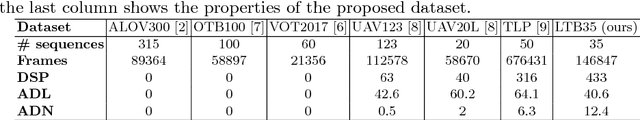

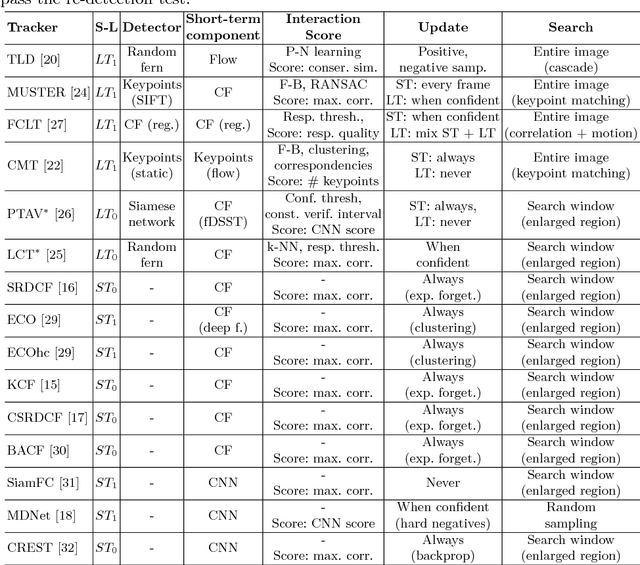

Abstract: A long-term visual object tracking performance evaluation methodology and a benchmark are proposed. Performance measures are designed by following a long-term tracking definition to maximize the analysis probing strength. The new measures outperform existing ones in interpretation potential and in better distinguishing between different tracking behaviors. We show that these measures generalize the short-term performance measures, thus linking the two tracking problems. Furthermore, the new measures are highly robust to temporal annotation sparsity and allow annotation of sequences hundreds of times longer than in the current datasets without increasing manual annotation labor. A new challenging dataset of carefully selected sequences with many target disappearances is proposed. A new tracking taxonomy is proposed to position trackers on the short-term/long-term spectrum. The benchmark contains an extensive evaluation of the largest number of long-term trackers and a comparison to state-of-the-art short-term trackers. We analyze the influence of tracking architecture implementations on long-term performance and explore various re-detection strategies as well as the influence of visual model update strategies on long-term tracking drift. The methodology is integrated in the VOT toolkit to automate experimental analysis and benchmarking and to facilitate future development of long-term trackers.

Now you see me: evaluating performance in long-term visual tracking

Apr 19, 2018

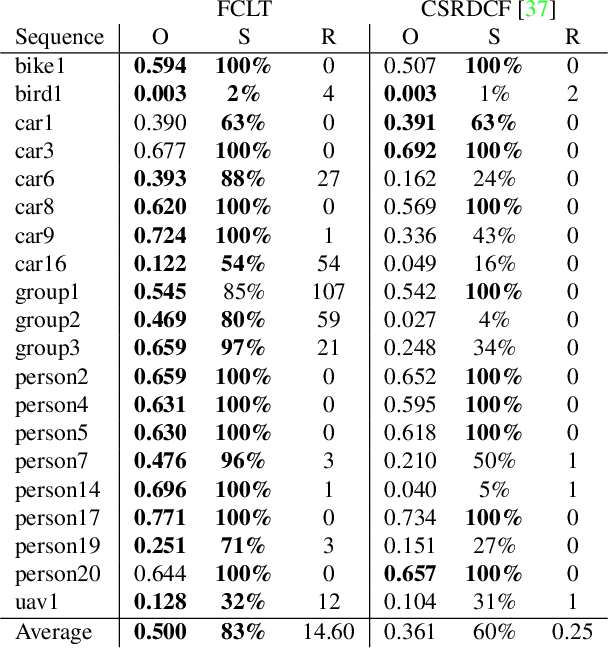

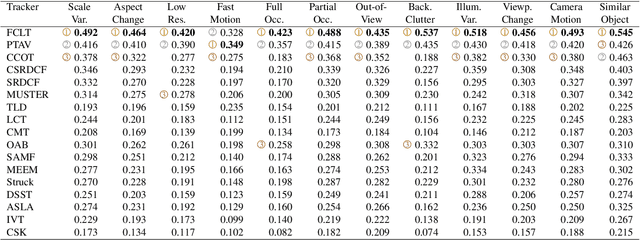

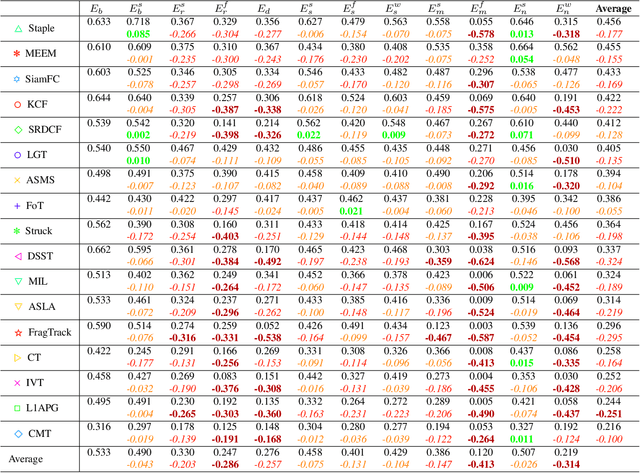

Abstract: We propose a new long-term tracking performance evaluation methodology and present a new challenging dataset of carefully selected sequences with many target disappearances. We perform an extensive evaluation of six long-term and nine short-term state-of-the-art trackers, using new performance measures suitable for evaluating long-term tracking: tracking precision, recall and F-score. The evaluation shows that a good model update strategy and the capability of image-wide re-detection are critical for long-term tracking performance. We integrated the methodology in the VOT toolkit to automate experimental analysis and benchmarking and to facilitate the development of long-term trackers.
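
The abstract names tracking precision, recall and F-score but does not define them. The following is a minimal sketch of one plausible reading, under stated assumptions: the tracker reports a box and a confidence per frame, a frame counts as "reported" when the confidence reaches a threshold, precision averages the overlap over reported frames, and recall averages it over frames where the target is visible. The function names, the `(x, y, width, height)` box layout and the use of `None` for an absent target are all hypothetical choices for illustration, not the VOT toolkit's API.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height), an assumed layout


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f(
    predictions: Sequence[Optional[Box]],
    confidences: Sequence[float],
    ground_truth: Sequence[Optional[Box]],
    threshold: float,
) -> Tuple[float, float, float]:
    """Overlap-based precision, recall and F-score at one confidence threshold.

    Assumption: a prediction below the threshold is treated as
    "target reported absent"; ground truth None means the target is not visible.
    """
    overlap_sum = 0.0
    n_reported = 0  # frames where the tracker claims the target is present
    n_visible = 0   # frames where the target is actually visible
    for pred, conf, gt in zip(predictions, confidences, ground_truth):
        reported = pred is not None and conf >= threshold
        visible = gt is not None
        n_reported += int(reported)
        n_visible += int(visible)
        if reported and visible:
            overlap_sum += iou(pred, gt)
    precision = overlap_sum / n_reported if n_reported else 0.0
    recall = overlap_sum / n_visible if n_visible else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total > 0 else 0.0
    return precision, recall, f_score


# Toy sequence: the target disappears in the second frame.
gt = [(0, 0, 10, 10), None, (0, 0, 10, 10), (0, 0, 10, 10)]
preds = [(0, 0, 10, 10), (0, 0, 10, 10), (5, 0, 10, 10), (0, 0, 10, 10)]
confs = [0.9, 0.8, 0.7, 0.1]
print(precision_recall_f(preds, confs, gt, threshold=0.5))
```

In this toy sequence the confident prediction made while the target is absent lowers precision, and the low-confidence prediction on the last frame lowers recall. That is the kind of behavior a measure for sequences with target disappearances needs to expose.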

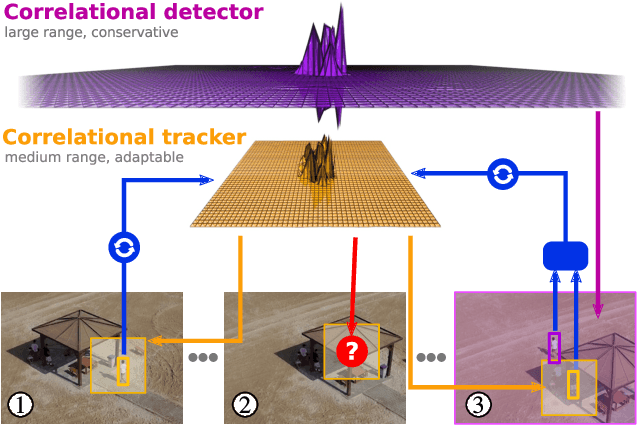

FCLT - A Fully-Correlational Long-Term Tracker

Nov 27, 2017

Abstract: We propose FCLT, a fully-correlational long-term tracker. The two main components of FCLT are a short-term tracker that localizes the target in each frame and a detector that re-detects the target when it is lost. Both the short-term tracker and the detector are based on correlation filters. The detector exploits properties of the recent constrained filter learning and is able to re-detect the target in the whole image efficiently. A failure detection mechanism based on correlation response quality is proposed. FCLT is tested on recent short-term and long-term benchmarks. It achieves state-of-the-art results on the short-term benchmarks and outperforms the current best-performing tracker on the long-term benchmark by over 18%.

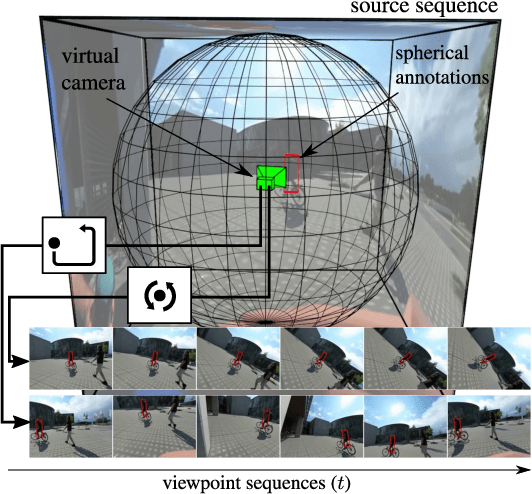

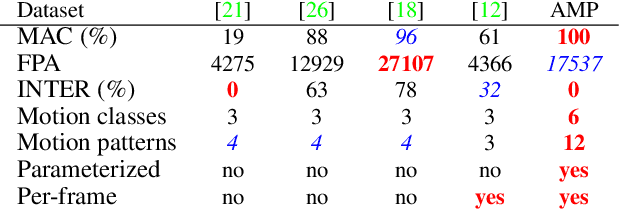

Beyond standard benchmarks: Parameterizing performance evaluation in visual object tracking

Mar 25, 2017

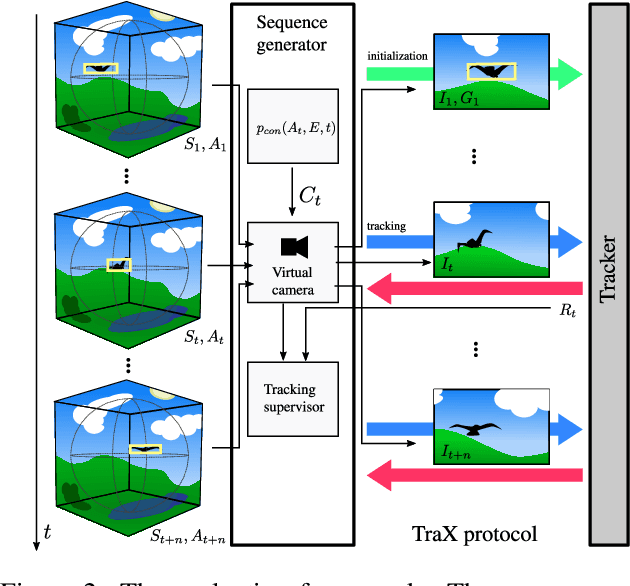

Abstract: Object-to-camera motion produces a variety of apparent motion patterns that significantly affect the performance of short-term visual trackers. Despite being crucial for designing robust trackers, their influence is poorly explored in standard benchmarks due to weakly defined, biased and overlapping attribute annotations. In this paper we propose to go beyond pre-recorded benchmarks with post-hoc annotations by presenting an approach that utilizes omnidirectional videos to generate realistic, consistently annotated, short-term tracking scenarios with exactly parameterized motion patterns. We have created an evaluation system and constructed a fully annotated dataset of omnidirectional videos and generators for typical motion patterns. We provide an in-depth analysis of major tracking paradigms, which is complementary to the standard benchmarks and confirms the expressiveness of our evaluation approach.
