Luiz Schirmer

Geometric implicit neural representations for signed distance functions

Nov 10, 2025

Abstract: *Implicit neural representations* (INRs) have emerged as a promising framework for representing signals in low-dimensional spaces. This survey reviews the existing literature on the specialized INR problem of approximating *signed distance functions* (SDFs) for surface scenes, using either oriented point clouds or a set of posed images. We refer to neural SDFs that incorporate differential geometry tools, such as normals and curvatures, in their loss functions as *geometric* INRs. The key idea behind this 3D reconstruction approach is to include additional *regularization* terms in the loss function, ensuring that the INR satisfies certain global properties that the function should hold, such as having a unit-norm gradient in the case of SDFs. We explore key methodological components, including the definition of INR, the construction of geometric loss functions, and sampling schemes from a differential geometry perspective. Our review highlights the significant advancements enabled by geometric INRs in surface reconstruction from oriented point clouds and posed images.
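The unit-gradient property mentioned in this abstract can be illustrated with a small sketch. It is not the survey's implementation: the function names (`sphere_sdf`, `gradient`, `eikonal_residual`) and the sample points are hypothetical, and the gradient is taken by central finite differences, whereas a neural SDF would normally use automatic differentiation.

```python
import math

def sphere_sdf(p, radius=1.0):
    """Exact signed distance from point p to a sphere centered at the origin."""
    return math.sqrt(sum(c * c for c in p)) - radius

def gradient(f, p, h=1e-5):
    """Central finite-difference gradient of f at p (stand-in for autodiff)."""
    grad = []
    for i in range(len(p)):
        fwd = list(p)
        bwd = list(p)
        fwd[i] += h
        bwd[i] -= h
        grad.append((f(fwd) - f(bwd)) / (2.0 * h))
    return grad

def eikonal_residual(f, points):
    """Mean of (|grad f| - 1)^2 over the sample points."""
    total = 0.0
    for p in points:
        g = gradient(f, p)
        norm = math.sqrt(sum(c * c for c in g))
        total += (norm - 1.0) ** 2
    return total / len(points)

# Hypothetical sample points, chosen away from the origin where the SDF is smooth.
samples = [(0.5, 0.2, -0.1), (1.5, 0.0, 0.3), (-0.7, 0.9, 0.4)]

# A true SDF has (numerically) zero residual.
sdf_residual = eikonal_residual(sphere_sdf, samples)

# Scaling by 2 keeps the same zero-level set but breaks the unit-gradient property.
scaled_residual = eikonal_residual(lambda p: 2.0 * sphere_sdf(p), samples)
```

The scaled function describes the same surface yet has a residual close to 1, which is why a regularization term of this kind can separate a distance function from other implicit functions that share its zero-level set.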

High-Resolution Detection of Earth Structural Heterogeneities from Seismic Amplitudes using Convolutional Neural Networks with Attention layers

Apr 15, 2024

Abstract: Earth structural heterogeneities play a significant role in the petroleum economy for both exploration and production projects. Automatic detection of detailed structural heterogeneities is challenging, even with modern machine learning techniques such as deep neural networks. These techniques can be an excellent tool for assisted interpretation of such heterogeneities, but their performance depends heavily on the amount of training data. We propose an efficient and cost-effective architecture for detecting seismic structural heterogeneities using Convolutional Neural Networks (CNNs) combined with Attention layers. The attention mechanism reduces costs and enhances accuracy, even in cases with relatively noisy data. Our model has half as many parameters as the state of the art, and it outperforms previous methods in terms of Intersection over Union (IoU) by 0.6% and precision by 0.4%. By leveraging synthetic data, we apply transfer learning to train and fine-tune the model, addressing the challenge of limited annotated data availability.
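For readers unfamiliar with the two metrics quoted in this abstract, the following minimal sketch computes IoU and precision for binary masks. The function name, the toy labels, and the convention of returning 1.0 when a denominator is zero are assumptions for illustration; the paper's own evaluation code is not shown in the source.

```python
def iou_and_precision(pred, target):
    """Compute IoU and precision for flat sequences of 0/1 labels."""
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    union = tp + fp + fn
    # Assumed convention: an empty union or no positive predictions scores 1.0.
    iou = tp / union if union else 1.0
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    return iou, precision

# Toy flattened masks (hypothetical data, not from the paper).
pred = [1, 1, 0, 0, 1, 0]
target = [1, 0, 0, 1, 1, 0]
iou, precision = iou_and_precision(pred, target)
```

Here two pixels are true positives, one is a false positive, and one is a false negative, so the IoU is 2/4 and the precision is 2/3.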

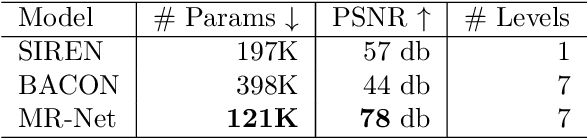

Multiresolution Neural Networks for Imaging

Aug 27, 2022

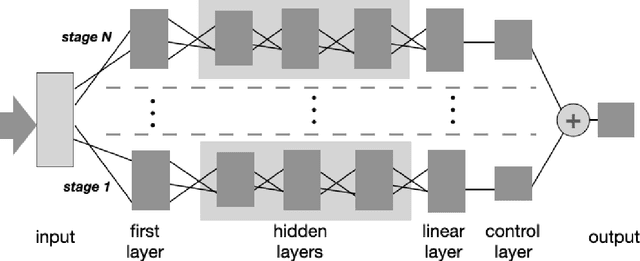

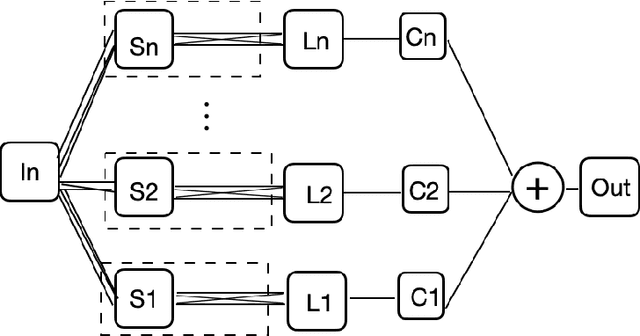

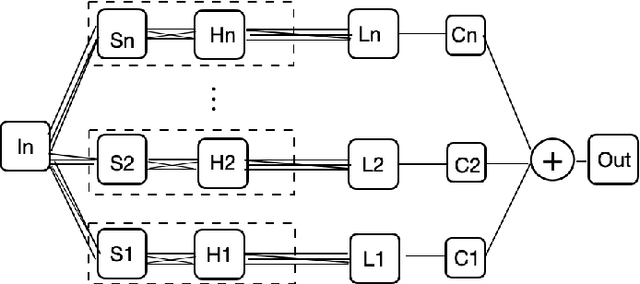

Abstract: We present MR-Net, a general architecture for multiresolution neural networks, and a framework for imaging applications based on this architecture. Our coordinate-based networks are continuous both in space and in scale, as they are composed of multiple stages that progressively add finer details. They are also a compact and efficient representation. We show examples of multiresolution image representation and applications to texture magnification, minification, and antialiasing. This document is the extended version of the paper [PNS+22]. It includes additional material that would not fit within the page limits of the conference track.

Neural Implicit Surfaces in Higher Dimension

Jan 26, 2022

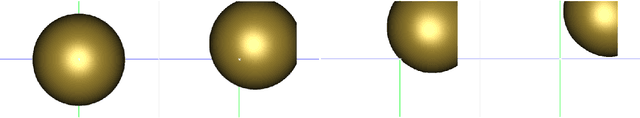

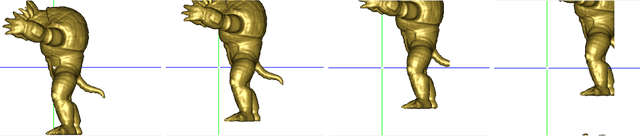

Abstract: This work investigates the use of neural networks admitting high-order derivatives for modeling dynamic variations of smooth implicit surfaces. For this purpose, it extends the representation of differentiable neural implicit surfaces to higher dimensions, which opens up mechanisms for exploiting geometric transformations in many settings, from animation and surface evolution to shape morphing and design galleries. The problem is modeled by a $k$-parameter family of surfaces $S_c$, specified as a neural network function $f : \mathbb{R}^3 \times \mathbb{R}^k \rightarrow \mathbb{R}$, where $S_c$ is the zero-level set of the implicit function $f(\cdot, c) : \mathbb{R}^3 \rightarrow \mathbb{R}$, with $c \in \mathbb{R}^k$ and variations induced by the control variable $c$. In that context, restricted to each coordinate of $\mathbb{R}^k$, the underlying representation is a neural homotopy which is the solution of a general partial differential equation.
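The idea of a parameter-dependent family $S_c$ can be made concrete with a toy example for $k = 1$. This sketch uses a hand-written linear blend of two closed-form distance functions rather than a neural network, so it is only an illustration of the zero-level-set notation; the names `family` and `radius_along_x` are hypothetical, and the blended function is not in general an exact SDF.

```python
import math

def sphere_sdf(x, radius=1.0):
    """Signed distance to a sphere centered at the origin."""
    return math.sqrt(sum(v * v for v in x)) - radius

def box_sdf(x, half=0.5):
    """Signed distance to an axis-aligned cube with the given half-extent."""
    q = [abs(v) - half for v in x]
    outside = math.sqrt(sum(max(v, 0.0) ** 2 for v in q))
    inside = min(max(q), 0.0)
    return outside + inside

def family(x, c):
    """f(x, c) with k = 1; S_c is the zero-level set of f(., c)."""
    return (1.0 - c) * sphere_sdf(x) + c * box_sdf(x)

def radius_along_x(c, lo=0.0, hi=2.0, iters=60):
    """Bisection for the t > 0 at which the point (t, 0, 0) lies on S_c."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if family((mid, 0.0, 0.0), c) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

# The surface moves continuously from the sphere (c = 0) to the cube (c = 1).
r_start = radius_along_x(0.0)
r_mid = radius_along_x(0.5)
r_end = radius_along_x(1.0)
```

Along the positive x-axis the surface point moves from 1 (the sphere) through 0.75 to 0.5 (the cube face) as $c$ goes from 0 to 1, which shows how varying the control variable deforms the zero-level set.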

Differential Geometry in Neural Implicits

Jan 26, 2022

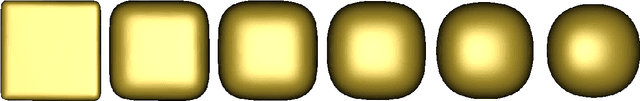

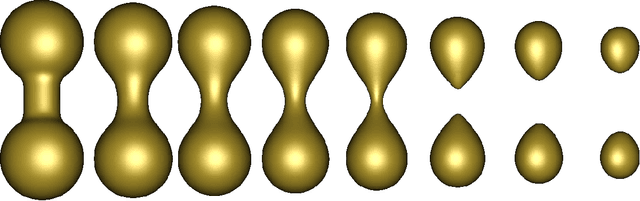

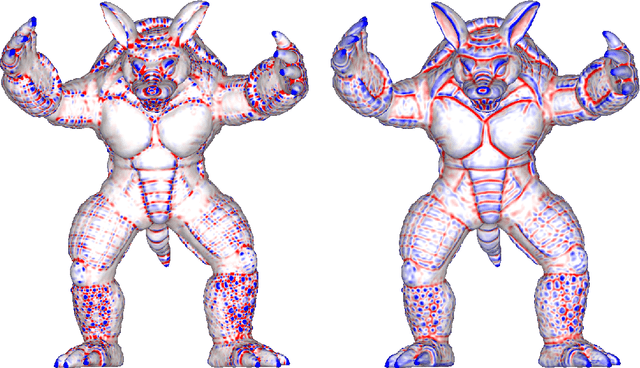

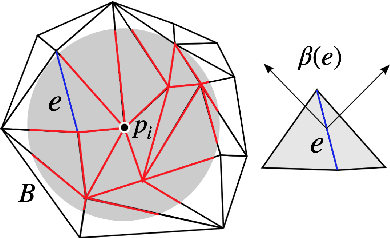

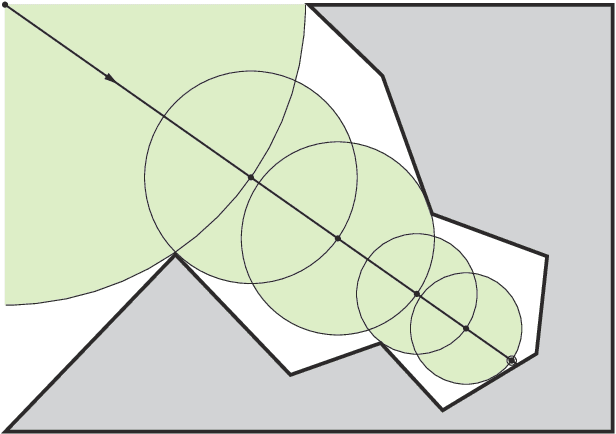

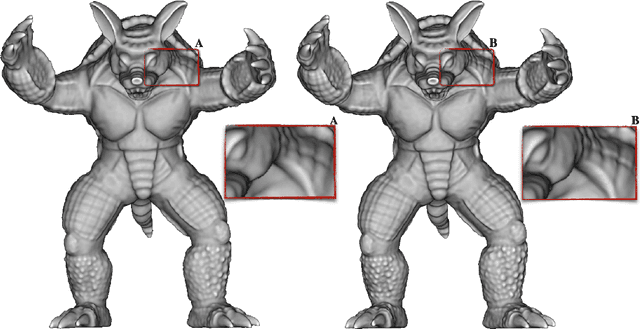

Abstract: We introduce a neural implicit framework that bridges discrete differential geometry of triangle meshes and continuous differential geometry of neural implicit surfaces. It exploits the differentiable properties of neural networks and the discrete geometry of triangle meshes to approximate them as the zero-level sets of neural implicit functions. To train a neural implicit function, we propose a loss function that allows terms with high-order derivatives, such as the alignment between the principal directions, to learn more geometric details. During training, we consider a non-uniform sampling strategy based on the discrete curvatures of the triangle mesh to access points with more geometric details. This sampling speeds up learning while preserving geometric accuracy. We present the analytical differential geometry formulas for neural surfaces, such as normal vectors and curvatures. We use them to render the surfaces using sphere tracing. Additionally, we propose a network optimization based on singular value decomposition to reduce the number of parameters.
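Sphere tracing, the rendering technique named in this abstract, can be sketched in a few lines. The example below is a generic version under stated assumptions: it uses a closed-form sphere SDF in place of a trained neural implicit function, and the scene, function names, and tolerances are hypothetical rather than taken from the paper.

```python
import math

CENTER = (0.0, 0.0, 3.0)  # hypothetical scene: unit sphere three units down the z-axis
RADIUS = 1.0

def sphere_sdf(p):
    """Signed distance from p to the sphere (stand-in for a neural SDF)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, CENTER))) - RADIUS

def sphere_normal(p):
    """Analytical unit normal: normalized gradient of the sphere SDF at p."""
    d = [a - b for a, b in zip(p, CENTER)]
    norm = math.sqrt(sum(v * v for v in d))
    return [v / norm for v in d]

def sphere_trace(sdf, origin, direction, max_steps=128, eps=1e-6, t_max=100.0):
    """March along the ray by the SDF value; return the hit distance or None."""
    norm = math.sqrt(sum(v * v for v in direction))
    d = [v / norm for v in direction]
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * v for o, v in zip(origin, d)]
        dist = sdf(p)
        if dist < eps:
            return t  # close enough to the surface
        t += dist  # safe step: no surface lies closer than dist
        if t > t_max:
            return None
    return None

t_hit = sphere_trace(sphere_sdf, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
t_miss = sphere_trace(sphere_sdf, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
hit_point = [0.0, 0.0, t_hit]
hit_normal = sphere_normal(hit_point)
```

Stepping by the SDF value is safe only when the function does not overestimate the true distance, which is one reason the unit-gradient property matters for rendering. The normal at the hit point points back toward the camera, as expected for a front-facing surface.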
