Differential Geometry in Neural Implicits

Paper and Code

Jan 26, 2022

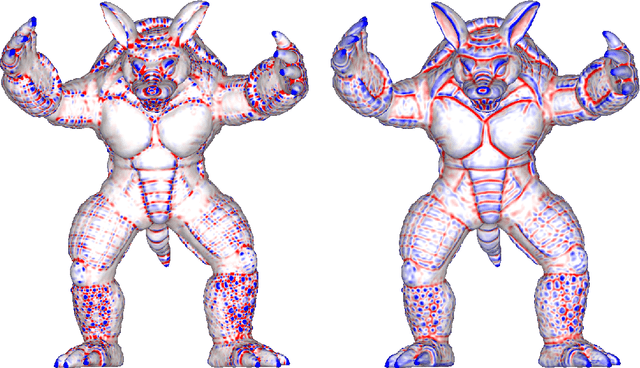

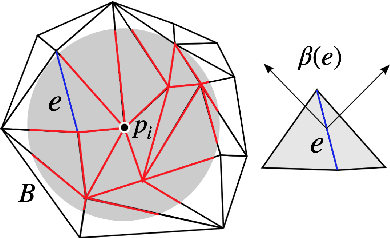

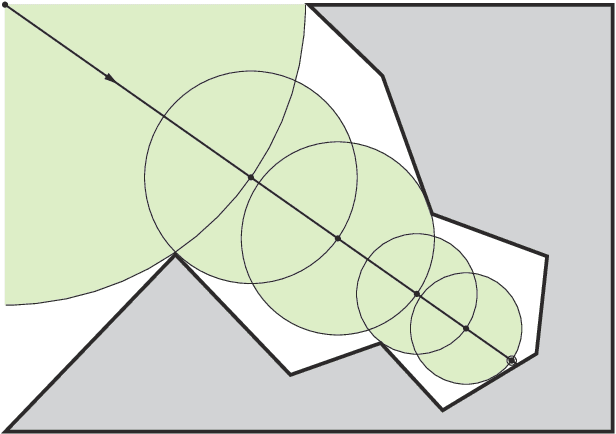

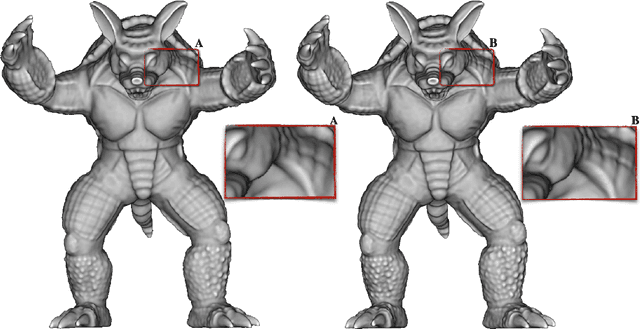

We introduce a neural implicit framework that bridges discrete differential geometry of triangle meshes and continuous differential geometry of neural implicit surfaces. It exploits the differentiable properties of neural networks and the discrete geometry of triangle meshes to approximate them as the zero-level sets of neural implicit functions. To train a neural implicit function, we propose a loss function that allows terms with high-order derivatives, such as the alignment between the principal directions, to learn more geometric details. During training, we consider a non-uniform sampling strategy based on the discrete curvatures of the triangle mesh to access points with more geometric details. This sampling implies faster learning while preserving geometric accuracy. We present the analytical differential geometry formulas for neural surfaces, such as normal vectors and curvatures. We use them to render the surfaces using sphere tracing. Additionally, we propose a network optimization based on singular value decomposition to reduce the number of parameters.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge