Luis Montano

Fast and scalable multi-robot deployment planning under connectivity constraints

May 20, 2025Abstract:In this paper we develop a method to coordinate the deployment of a multi-robot team to reach some locations of interest, so-called primary goals, and to transmit the information from these positions to a static Base Station (BS), under connectivity constraints. The relay positions have to be established for some robots to maintain the connectivity at the moment in which the other robots visit the primary goals. Once every robot reaches its assigned goal, they are again available to cover new goals, dynamically re-distributing the robots to the new tasks. The contribution of this work is a two stage method to deploy the team. Firstly, clusters of relay and primary positions are computed, obtaining a tree formed by chains of positions that have to be visited. Secondly, the order for optimally assigning and visiting the goals in the clusters is computed. We analyze different heuristics for sequential and parallel deployment in the clusters, obtaining sub-optimal solutions in short time for different number of robots and for a large amount of goals.

Traversability-aware path planning in dynamic environments

May 20, 2025Abstract:Planning in environments with moving obstacles remains a significant challenge in robotics. While many works focus on navigation and path planning in obstacle-dense spaces, traversing such congested regions is often avoidable by selecting alternative routes. This paper presents Traversability-aware FMM (Tr-FMM), a path planning method that computes paths in dynamic environments, avoiding crowded regions. The method operates in two steps: first, it discretizes the environment, identifying regions and their distribution; second, it computes the traversability of regions, aiming to minimize both obstacle risks and goal deviation. The path is then computed by propagating the wavefront through regions with higher traversability. Simulated and real-world experiments demonstrate that the approach enhances significant safety by keeping the robot away from regions with obstacles while reducing unnecessary deviations from the goal.

Multi-agent coordination for data gathering with periodic requests and deliveries

Mar 24, 2025Abstract:In this demo work we develop a method to plan and coordinate a multi-agent team to gather information on demand. The data is periodically requested by a static Operation Center (OC) from changeable goals locations. The mission of the team is to reach these locations, taking measurements and delivering the data to the OC. Due to the limited communication range as well as signal attenuation because of the obstacles, the agents must travel to the OC, to upload the data. The agents can play two roles: ones as workers gathering data, the others as collectors traveling invariant paths for collecting the data of the workers to re-transmit it to the OC. The refreshing time of the delivered information depends on the number of available agents as well as of the scenario. The proposed algorithm finds out the best balance between the number of collectors-workers and the partition of the scenario into working areas in the planning phase, which provides the minimum refreshing time and will be the one executed by the agents.

Communication-aware planning for robot teams deployment

Mar 24, 2025Abstract:In the present work we address the problem of deploying a team of robots in a scenario where some locations of interest must be reached. Thus, a planning for a deployment is required, before sending the robots. The obstacles, the limited communication range, and the need of communicating to a base station, constrain the connectivity of the team and the deployment planning. We propose a method consisting of three algorithms: a distributed path planner to obtain communication-aware trajectories; a deployment planner providing dual-use of the robots, visiting primary goals and performing connectivity tasks; and a clustering algorithm to allocate the tasks to robots, and obtain the best goal visit order for the mission.

Multi-agent coordination for on-demand data gathering with periodic information upload

Mar 14, 2025Abstract:In this paper we develop a method for planning and coordinating a multi-agent team deployment to periodically gather information on demand. A static operation center (OC) periodically requests information from changing goal locations. The objective is to gather data in the goals and to deliver it to the OC, balancing the refreshing time and the total number of information packages. The system automatically splits the team in two roles: workers to gather data, or collectors to retransmit the data to the OC. The proposed three step method: 1) finds out the best area partition for the workers; 2) obtains the best balance between workers and collectors, and with whom the workers must to communicate, a collector or the OC; 3) computes the best tour for the workers to visit the goals and deliver them to the OC or to a collector in movement. The method is tested in simulations in different scenarios, providing the best area partition algorithm and the best balance between collectors and workers.

Multi-robot coordination for connectivity recovery after unpredictable environment changes

Mar 14, 2025Abstract:In the present paper we develop a distributed method to reconnect a multi-robot team after connectivity failures, caused by unpredictable environment changes, i.e. appearance of new obstacles. After the changes, the team is divided into different groups of robots. The groups have a limited communication range and only a partial information in their field of view about the current scenario. Their objective is to form a chain from a static base station to a goal location. In the proposed distributed replanning approach, the robots predict new plans for the other groups from the new observed information by each robot in the changed scenario, to restore the connectivity with a base station and reach the initial joint objective. If a solution exists, the method achieves the reconnection of all the groups in a unique chain. The proposed method is compared with other two cases: 1) when all the agents have full information of the environment, and 2) when some robots must move to reach other waiting robots for reconnection. Numerical simulations are provided to evaluate the proposed approach in the presence of unpredictable scenario changes.

DWA-3D: A Reactive Planner for Robust and Efficient Autonomous UAV Navigation

Sep 09, 2024

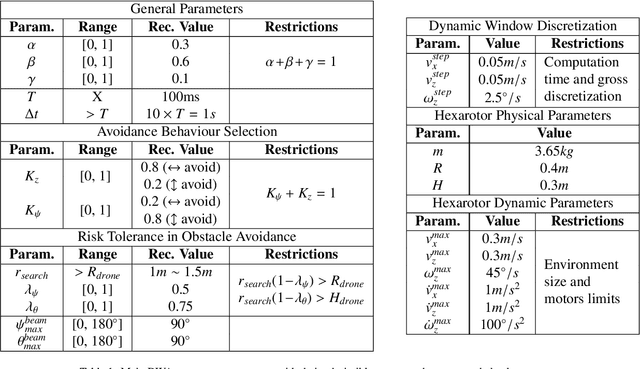

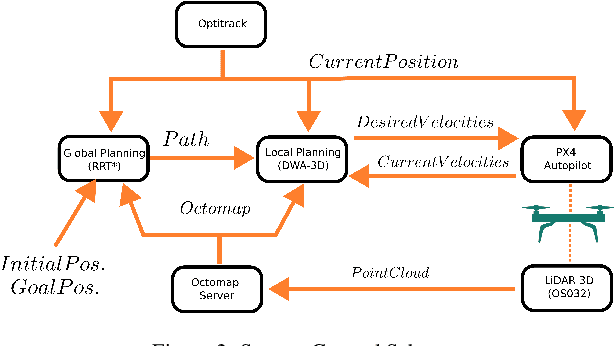

Abstract:Despite the growing impact of Unmanned Aerial Vehicles (UAVs) across various industries, most of current available solutions lack for a robust autonomous navigation system to deal with the appearance of obstacles safely. This work presents an approach to perform autonomous UAV planning and navigation in scenarios in which a safe and high maneuverability is required, due to the cluttered environment and the narrow rooms to move. The system combines an RRT* global planner with a newly proposed reactive planner, DWA-3D, which is the extension of the well known DWA method for 2D robots. We provide a theoretical-empirical method for adjusting the parameters of the objective function to optimize, easing the classical difficulty for tuning them. An onboard LiDAR provides a 3D point cloud, which is projected on an Octomap in which the planning and navigation decisions are made. There is not a prior map; the system builds and updates the map online, from the current and the past LiDAR information included in the Octomap. Extensive real-world experiments were conducted to validate the system and to obtain a fine tuning of the involved parameters. These experiments allowed us to provide a set of values that ensure safe operation across all the tested scenarios. Just by weighting two parameters, it is possible to prioritize either horizontal path alignment or vertical (height) tracking, resulting in enhancing vertical or lateral avoidance, respectively. Additionally, our DWA-3D proposal is able to navigate successfully even in absence of a global planner or with one that does not consider the drone's size. Finally, the conducted experiments show that computation time with the proposed parameters is not only bounded but also remains stable around 40 ms, regardless of the scenario complexity.

Occupation-aware planning method for robotic monitoring missions in dynamic environments

Aug 01, 2024

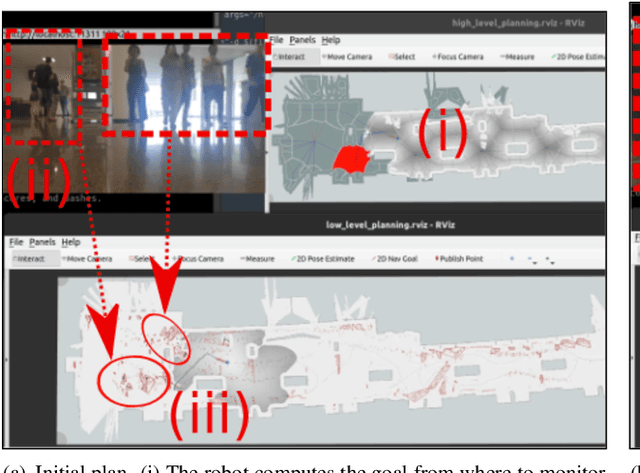

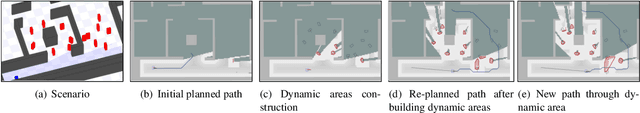

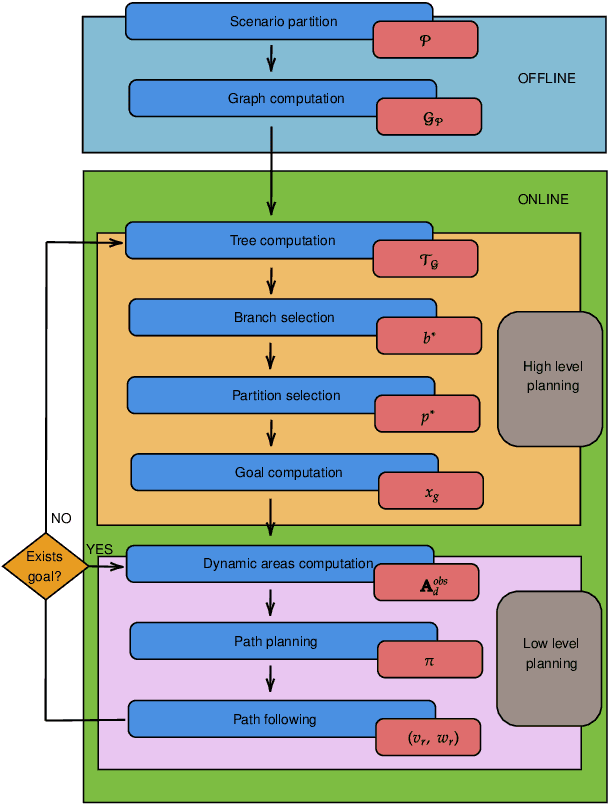

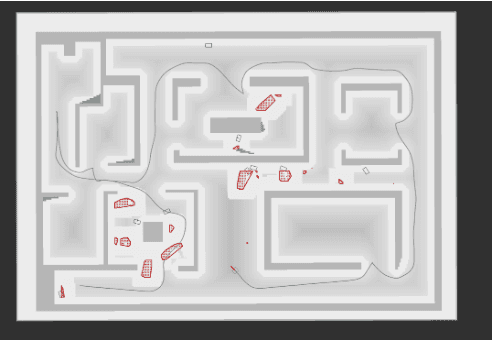

Abstract:This paper presents a method for robotic monitoring missions in the presence of moving obstacles. Although the scenario map is known, the robot lacks information about the movement of dynamic obstacles during the monitoring mission. Numerous local planners have been developed in recent years for navigating highly dynamic environments. However, the absence of a global planner for these environments can result in unavoidable collisions or the inability to successfully complete missions in densely populated areas, such as a scenario monitoring in our case. This work addresses the development and evaluation of a global planner, $MADA$ (Monitoring Avoiding Dynamic Areas), aimed at enhancing the deployment of robots in such challenging conditions. The robot plans and executes the mission using the proposed two-step approach. The first step involves selecting the observation goal based on the environment's distribution and estimated monitoring costs. In the second step, the robot identifies areas with moving obstacles and obtains paths avoiding densely occupied dynamic regions based on their occupation. Quantitative and qualitative results based on simulations and on real-world experimentation, confirm that the proposed method allows the robot to effectively monitor most of the environment while avoiding densely occupied dynamic areas.

From Underground Mines to Offices: A Versatile and Robust Framework for Range-Inertial SLAM

Jul 20, 2024

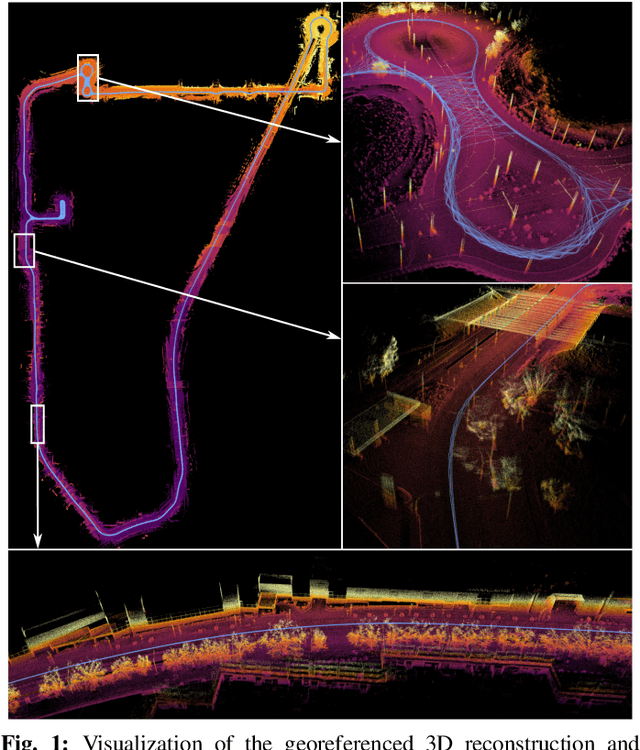

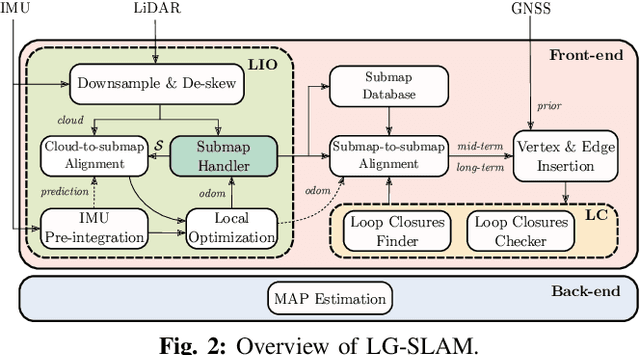

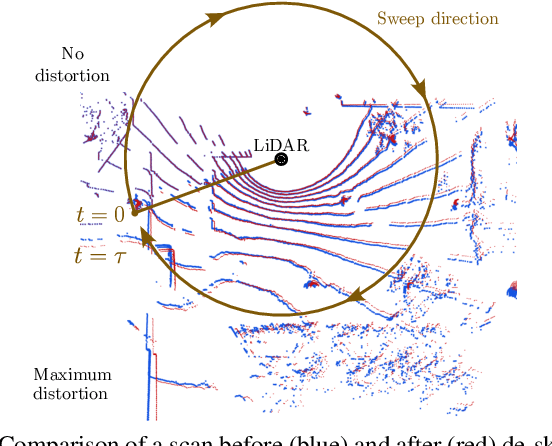

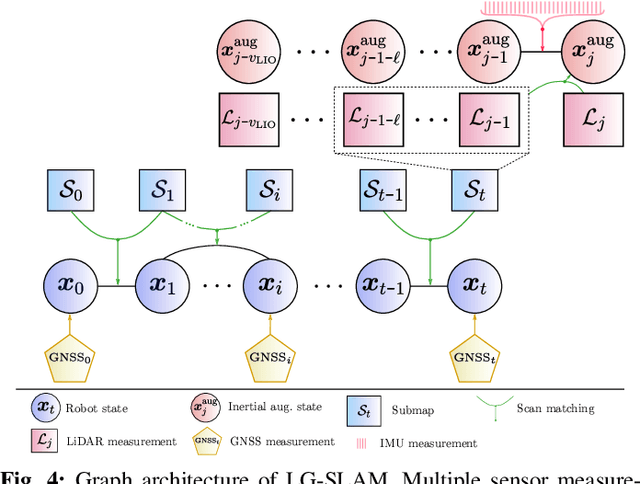

Abstract:Simultaneous Localization and Mapping (SLAM) is an essential component of autonomous robotic applications and self-driving vehicles, enabling them to understand and operate in their environment. Many SLAM systems have been proposed in the last decade, but they are often complex to adapt to different settings or sensor setups. In this work, we present LiDAR Graph-SLAM (LG-SLAM), a versatile range-inertial SLAM framework that can be adapted to different types of sensors and environments, from underground mines to offices with minimal parameter tuning. Our system integrates range, inertial and GNSS measurements into a graph-based optimization framework. We also use a refined submap management approach and a robust loop closure method that effectively accounts for uncertainty in the identification and validation of putative loop closures, ensuring global consistency and robustness. Enabled by a parallelized architecture and GPU integration, our system achieves pose estimation at LiDAR frame rate, along with online loop closing and graph optimization. We validate our system in diverse environments using public datasets and real-world data, consistently achieving an average error below 20 cm and outperforming other state-of-the-art algorithms.

AVOCADO: Adaptive Optimal Collision Avoidance driven by Opinion

Jun 29, 2024

Abstract:We present AVOCADO (AdaptiVe Optimal Collision Avoidance Driven by Opinion), a novel navigation approach to address holonomic robot collision avoidance when the degree of cooperation of the other agents in the environment is unknown. AVOCADO departs from a Velocity Obstacle's formulation akin to the Optimal Reciprocal Collision Avoidance method. However, instead of assuming reciprocity, AVOCADO poses an adaptive control problem that aims at adapting in real-time to the cooperation degree of other robots and agents. Adaptation is achieved through a novel nonlinear opinion dynamics design that relies solely on sensor observations. As a by-product, based on the nonlinear opinion dynamics, we propose a novel method to avoid the deadlocks under geometrical symmetries among robots and agents. Extensive numerical simulations show that AVOCADO surpasses existing geometrical, learning and planning-based approaches in mixed cooperative/non-cooperative navigation environments in terms of success rate, time to goal and computational time. In addition, we conduct multiple real experiments that verify that AVOCADO is able to avoid collisions in environments crowded with other robots and humans.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge