Lucy Gao

Value Function Based Difference-of-Convex Algorithm for Bilevel Hyperparameter Selection Problems

Jun 13, 2022

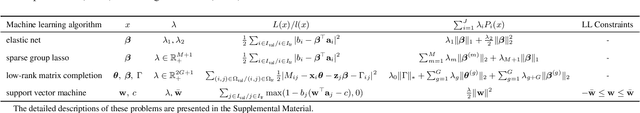

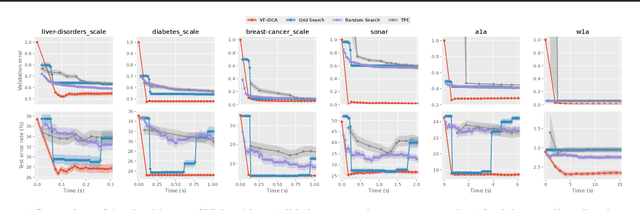

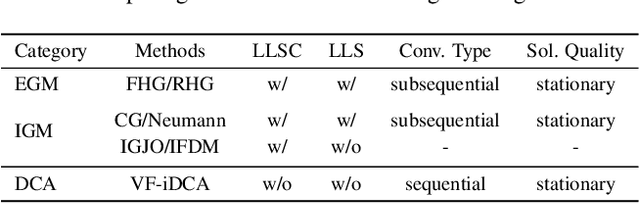

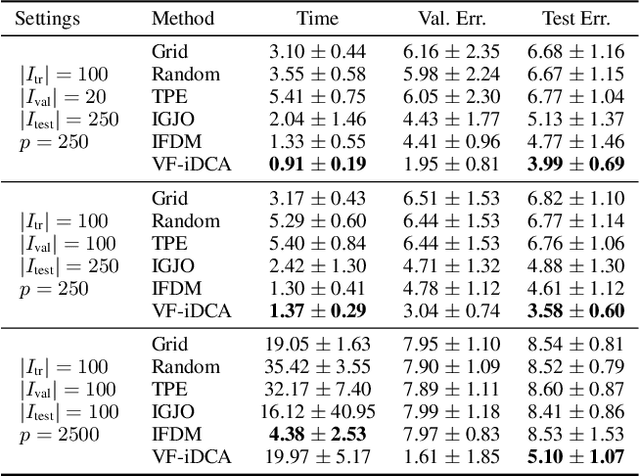

Abstract: Gradient-based optimization methods for hyperparameter tuning guarantee theoretical convergence to stationary solutions when, for fixed upper-level variable values, the lower level of the bilevel program is strongly convex (LLSC) and smooth (LLS). This condition is not satisfied for bilevel programs arising from tuning hyperparameters in many machine learning algorithms. In this work, we develop a sequentially convergent Value Function based Difference-of-Convex Algorithm with inexactness (VF-iDCA). We show that this algorithm achieves stationary solutions without LLSC and LLS assumptions for bilevel programs from a broad class of hyperparameter tuning applications. Our extensive experiments confirm our theoretical findings and show that the proposed VF-iDCA yields superior performance when applied to tune hyperparameters.
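
For readers unfamiliar with the terminology, the following is a minimal sketch of how a value function can turn a bilevel program into a single-level problem with a difference-of-convex constraint. The symbols $F$, $f$, $v$, $x$, and $y$ are illustrative assumptions and are not taken from the abstract; the paper's exact formulation and assumptions may differ.

```latex
% Bilevel hyperparameter selection (assumed notation):
%   x = hyperparameters (upper-level variable)
%   y = model parameters (lower-level variable)
%   F = validation loss, f = training objective
\begin{aligned}
  &\min_{x,\,y} \; F(x, y)
  \quad \text{s.t.} \quad y \in \operatorname*{arg\,min}_{y'} f(x, y'). \\[4pt]
% Value function of the lower-level problem:
  &v(x) := \min_{y'} f(x, y'). \\[4pt]
% Equivalent single-level reformulation:
  &\min_{x,\,y} \; F(x, y)
  \quad \text{s.t.} \quad f(x, y) - v(x) \le 0.
\end{aligned}
% If f is jointly convex in (x, y), then v is convex as a partial
% minimization of a convex function, so f(x, y) - v(x) is a difference
% of two convex functions.
```

Under this reading, the constraint does not require the lower-level objective to be strongly convex or smooth in $y$, which is consistent with the abstract's claim of dropping the LLSC and LLS assumptions.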
