Lucas Puentes

Goal-Directed Design Agents: Integrating Visual Imitation with One-Step Lookahead Optimization for Generative Design

Oct 07, 2021

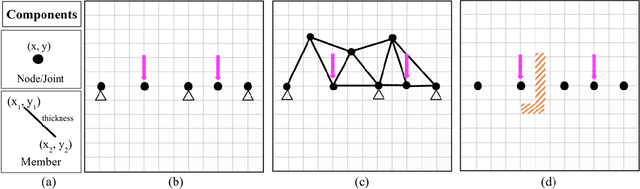

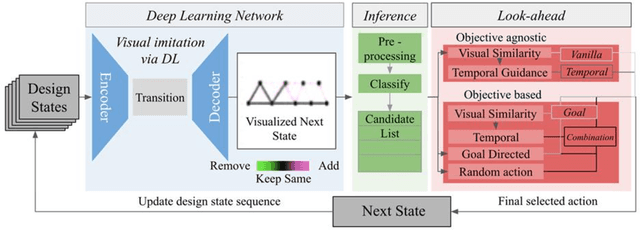

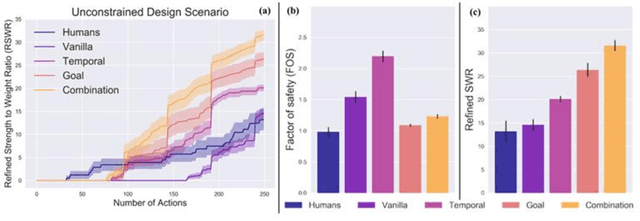

Abstract: Engineering design problems often involve large state and action spaces along with highly sparse rewards. Since an exhaustive search of those spaces is not feasible, humans utilize relevant domain knowledge to condense the search space. Previously, deep learning agents (DLAgents) were introduced to use visual imitation learning to model design domain knowledge. This note builds on DLAgents and integrates them with one-step lookahead search to develop goal-directed agents capable of enhancing learned strategies for sequentially generating designs. Goal-directed DLAgents can employ human strategies learned from data along with optimizing an objective function. The visual imitation network from DLAgents is composed of a convolutional encoder-decoder network, acting as a rough planning step that is agnostic to feedback. Meanwhile, the lookahead search identifies the fine-tuned design action guided by an objective. These design agents are trained on an unconstrained truss design problem that is modeled as a sequential, action-based configuration design problem. The agents are then evaluated on two versions of the problem: the original version used for training and an unseen constrained version with an obstructed construction space. The goal-directed agents outperform the human designers used to train the network as well as the previous objective-agnostic versions of the agent in both scenarios. This illustrates a design agent framework that can efficiently use feedback to not only enhance learned design strategies but also adapt to unseen design problems.
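The one-step lookahead idea described in the abstract can be illustrated with a minimal sketch. The abstract does not specify how candidate actions are generated or what the objective is, so everything here is an assumption for illustration: `one_step_lookahead`, `toy_apply`, and `toy_objective` are hypothetical names, the candidate list stands in for whatever actions an imitation network might propose, and the integer state is a toy substitute for a truss design.

```python
from typing import Callable, Iterable, Optional, Tuple, TypeVar

State = TypeVar("State")
Action = TypeVar("Action")


def one_step_lookahead(
    state: State,
    candidates: Iterable[Action],
    apply_action: Callable[[State, Action], State],
    objective: Callable[[State], float],
) -> Tuple[Optional[Action], float]:
    """Simulate each candidate action once and keep the highest-scoring one.

    Hypothetical helper: the candidates are assumed to come from some
    feedback-agnostic proposal step (e.g. an imitation model).
    Returns (None, -inf) if there are no candidates.
    """
    best_action: Optional[Action] = None
    best_score = float("-inf")
    for action in candidates:
        # Look exactly one step ahead: apply the action, score the result.
        score = objective(apply_action(state, action))
        if score > best_score:
            best_action, best_score = action, score
    return best_action, best_score


# Toy stand-ins (assumptions, not the paper's truss model):
def toy_apply(state: int, action: int) -> int:
    return state + action


def toy_objective(state: int) -> float:
    # Higher is better; the best possible state is 10.
    return -abs(state - 10)


best_action, best_score = one_step_lookahead(7, [1, 2, 3, 5], toy_apply, toy_objective)
print(best_action, best_score)
```

In this toy run the candidate `3` moves the state from 7 to exactly 10, so it is selected. Splitting the work this way keeps the proposal step independent of the objective, which is one plausible reading of why the objective could be changed (for example, to penalize an obstructed region) without retraining the proposal model.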
