Lorenc Kapllani

A forward differential deep learning-based algorithm for solving high-dimensional nonlinear backward stochastic differential equations

Aug 10, 2024

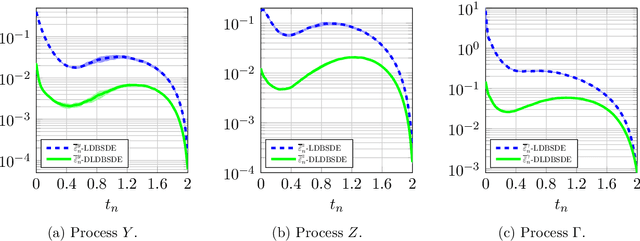

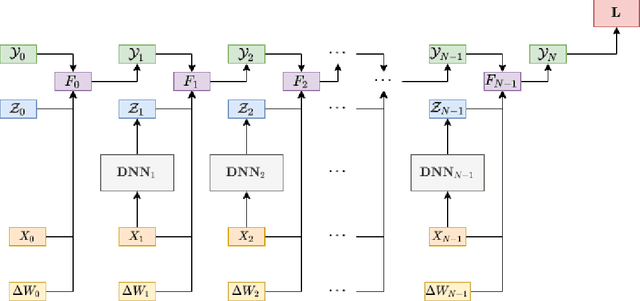

Abstract: In this work, we present a novel forward differential deep learning-based algorithm for solving high-dimensional nonlinear backward stochastic differential equations (BSDEs). Motivated by the fact that differential deep learning can efficiently approximate the labels and their derivatives with respect to inputs, we transform the BSDE problem into a differential deep learning problem. This is done by leveraging Malliavin calculus, resulting in a system of BSDEs. The unknown solution of the BSDE system is a triple of processes $(Y, Z, \Gamma)$, representing the solution, its gradient, and the Hessian matrix. The main idea of our algorithm is to discretize the integrals using the Euler-Maruyama method and approximate the unknown discrete solution triple using three deep neural networks. The parameters of these networks are then optimized by globally minimizing a differential learning loss function, which is newly defined as a weighted sum of the dynamics of the discretized system of BSDEs. Through various high-dimensional examples, we demonstrate that our proposed scheme is more efficient in terms of accuracy and computation time compared to other contemporary forward deep learning-based methodologies.
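As a rough illustration of the forward Euler-Maruyama step mentioned in the abstract, the sketch below rolls out only the $Y$ component and measures the terminal mismatch. It is not the paper's algorithm: the standard form $-dY_t = f(t, X_t, Y_t, Z_t)\,dt - Z_t\,dW_t$, $Y_T = g(X_T)$ is assumed, the forward process is taken to be $X = W$, the toy problem $g(x) = |x|^2$, $f = 0$ (with exact solution $Y_0 = dT$, $Z_t = 2W_t$) is chosen for checking, and the function name `terminal_loss` and all parameters are hypothetical. Closed-form functions stand in for the neural networks, and the $Z$ and $\Gamma$ loss terms are omitted.

```python
import numpy as np

def terminal_loss(y0, z_fn, f, g, d=10, T=1.0, N=100, M=20000, seed=0):
    """Mean squared terminal mismatch of an Euler-Maruyama rollout of Y.

    Assumption: the forward process is X = W (Brownian motion started at 0).
    """
    rng = np.random.default_rng(seed)
    dt = T / N
    x = np.zeros((M, d))
    y = np.full(M, float(y0))
    for n in range(N):
        t = n * dt
        dw = rng.normal(0.0, np.sqrt(dt), size=(M, d))
        z = z_fn(t, x)
        # Y_{n+1} = Y_n - f(t_n, X_n, Y_n, Z_n) dt + Z_n . dW_n
        y = y - f(t, x, y, z) * dt + np.sum(z * dw, axis=1)
        x = x + dw
    return float(np.mean((y - g(x)) ** 2))

# Toy problem (an assumption for illustration): g(x) = |x|^2 and f = 0,
# whose exact solution is Y_0 = d * T and Z_t = 2 * W_t.
d, T = 10, 1.0
g = lambda x: np.sum(x ** 2, axis=1)
f = lambda t, x, y, z: np.zeros(x.shape[0])
z_exact = lambda t, x: 2.0 * x

loss_exact = terminal_loss(d * T, z_exact, f, g, d=d, T=T)
loss_shifted = terminal_loss(d * T + 1.0, z_exact, f, g, d=d, T=T)
```

With the exact $Y_0$ and $Z$, only the time-discretization error remains in `loss_exact`, while a shifted initial value gives a visibly larger `loss_shifted`. In a learning setting, that gap is what the optimizer would reduce.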

A backward differential deep learning-based algorithm for solving high-dimensional nonlinear backward stochastic differential equations

Apr 12, 2024

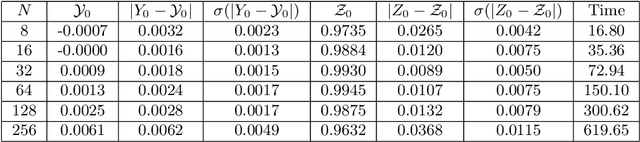

Abstract: In this work, we propose a novel backward differential deep learning-based algorithm for solving high-dimensional nonlinear backward stochastic differential equations (BSDEs), where the deep neural network (DNN) models are trained not only on the inputs and labels but also on the differentials of the corresponding labels. This is motivated by the fact that differential deep learning can provide an efficient approximation of the labels and their derivatives with respect to inputs. The BSDEs are reformulated as differential deep learning problems by using Malliavin calculus. The Malliavin derivatives of the solution to a BSDE themselves satisfy another BSDE, which results in a system of BSDEs. Such a formulation requires the estimation of the solution, its gradient, and the Hessian matrix, represented by the triple of processes $\left(Y, Z, \Gamma\right)$. All the integrals within this system are discretized by using the Euler-Maruyama method. Subsequently, DNNs are employed to approximate the triple of these unknown processes. The DNN parameters are optimized backward in time at each time step by minimizing a differential learning-type loss function, which is defined as a weighted sum of the dynamics of the discretized BSDE system, with the first term providing the dynamics of the process $Y$ and the second those of the process $Z$. An error analysis is carried out to show the convergence of the proposed algorithm. Various numerical experiments in up to $50$ dimensions are provided to demonstrate the high efficiency of the scheme. Both theoretically and numerically, it is demonstrated that our proposed scheme is more efficient compared to other contemporary deep learning-based methodologies, especially in the computation of the process $\Gamma$.
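The idea of training on both labels and their differentials can be sketched with a generic weighted loss. This is a minimal illustration of differential learning in general, not the loss from the paper: the function `differential_loss`, the weights `w_value` and `w_grad`, and the toy target $y = |x|^2$ are all assumptions, and simple closed-form models stand in for the DNNs.

```python
import numpy as np

def differential_loss(model, model_grad, x, y, dydx, w_value=0.5, w_grad=0.5):
    """Weighted sum of the value error and the derivative error.

    The weights are hypothetical; the paper's weighting is not reproduced here.
    """
    value_err = np.mean((model(x) - y) ** 2)
    grad_err = np.mean(np.sum((model_grad(x) - dydx) ** 2, axis=1))
    return float(w_value * value_err + w_grad * grad_err)

rng = np.random.default_rng(0)
x = rng.normal(size=(1000, 5))
y = np.sum(x ** 2, axis=1)   # labels
dydx = 2.0 * x               # differentials of the labels w.r.t. the inputs

def make_model(a):
    """Toy one-parameter model a * |x|^2 together with its exact gradient."""
    return (lambda x: a * np.sum(x ** 2, axis=1)), (lambda x: 2.0 * a * x)

loss_true = differential_loss(*make_model(1.0), x, y, dydx)
loss_off = differential_loss(*make_model(0.8), x, y, dydx)
```

The loss vanishes for the true parameter and is positive otherwise. The derivative term penalizes a model that fits the values but not the slopes.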

Uncertainty quantification for deep learning-based schemes for solving high-dimensional backward stochastic differential equations

Oct 05, 2023

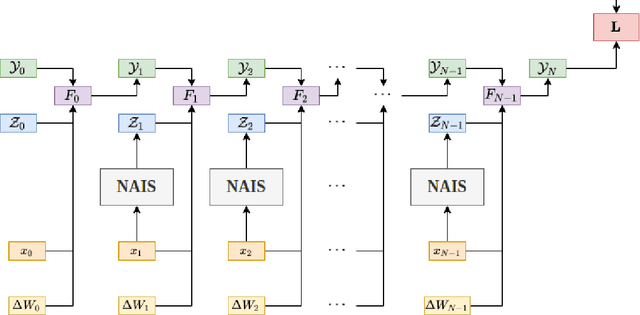

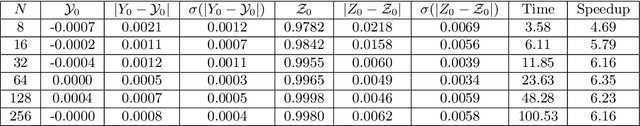

Abstract: Deep learning-based numerical schemes for solving high-dimensional backward stochastic differential equations (BSDEs) have recently attracted considerable scientific interest. While they enable numerical methods to approximate very high-dimensional BSDEs, their reliability has not been studied and is thus not understood. In this work, we study uncertainty quantification (UQ) for a class of deep learning-based BSDE schemes. More precisely, we review the sources of uncertainty involved in the schemes and numerically study the impact of different sources. Usually, the standard deviation (STD) of the approximate solutions obtained from multiple runs of the algorithm with different datasets is calculated to address the uncertainty. This approach is computationally quite expensive, especially for high-dimensional problems. Hence, we develop a UQ model that efficiently estimates the STD of the approximate solution using only a single run of the algorithm. The model also estimates the mean of the approximate solution, which can be leveraged to initialize the algorithm and improve the optimization process. Our numerical experiments show that the UQ model produces reliable estimates of the mean and STD of the approximate solution for the considered class of deep learning-based BSDE schemes. The estimated STD captures multiple sources of uncertainty, demonstrating its effectiveness in quantifying the uncertainty. Additionally, the model illustrates the improved performance when comparing different schemes based on the estimated STD values. Furthermore, it can identify hyperparameter values for which the scheme achieves good approximations.
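The multi-run baseline described in the abstract (repeat the algorithm on different datasets, then take the mean and STD of the results) can be sketched as follows. This shows only the expensive baseline, not the single-run UQ model. The function `run_once` is a hypothetical stand-in for one full training run: it simply returns a Monte Carlo estimate of $Y_0 = E[g(W_T)]$ for the assumed toy terminal condition $g(x) = |x|^2$, whose exact value is $dT$.

```python
import numpy as np

def run_once(seed, d=10, T=1.0, M=2000):
    """Hypothetical stand-in for one run of a BSDE solver on a fresh dataset.

    Returns a Monte Carlo estimate of Y_0 = E[|W_T|^2], whose exact value is d * T.
    """
    rng = np.random.default_rng(seed)
    w_T = rng.normal(0.0, np.sqrt(T), size=(M, d))
    return float(np.mean(np.sum(w_T ** 2, axis=1)))

# Repeat the run with different seeds (datasets) and summarize the spread.
estimates = np.array([run_once(seed) for seed in range(10)])
mean_y0 = float(estimates.mean())
std_y0 = float(estimates.std(ddof=1))  # sample standard deviation across runs
```

Each entry of `estimates` would cost one full training run in practice, which is why estimating the STD from a single run is attractive.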

Deep Learning algorithms for solving high dimensional nonlinear Backward Stochastic Differential Equations

Oct 03, 2020

Abstract: We study deep learning-based schemes for solving high-dimensional nonlinear backward stochastic differential equations (BSDEs). First, we show how to improve the performance of the scheme proposed in [W. E and J. Han and A. Jentzen, Commun. Math. Stat., 5 (2017), pp. 349-380] regarding computational time and stability of numerical convergence by using an advanced neural network architecture instead of stacked deep neural networks. Furthermore, the scheme proposed in that work can get stuck in local minima, especially for a complex solution structure and a longer terminal time. To solve this problem, we investigate reformulating the problem by including local losses and exploit Long Short-Term Memory (LSTM) networks, which are a type of recurrent neural network (RNN). Finally, in order to study numerical convergence and thus illustrate the improved performance of the proposed methods, we provide numerical results for several 100-dimensional nonlinear BSDEs, including a nonlinear pricing problem in finance.
