Loic Brunel

A lattice-based approach to the expressivity of deep ReLU neural networks

Feb 28, 2019

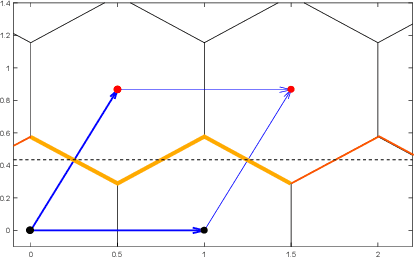

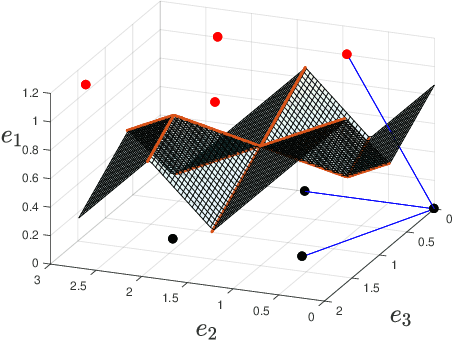

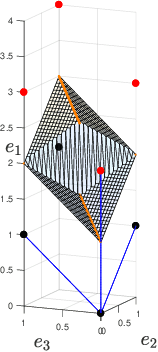

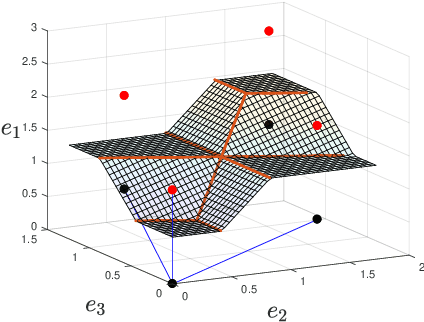

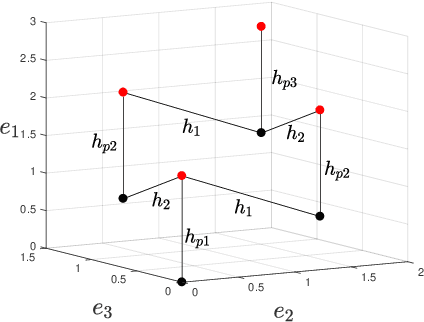

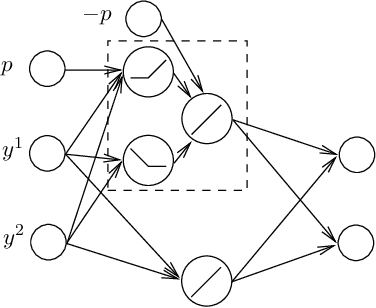

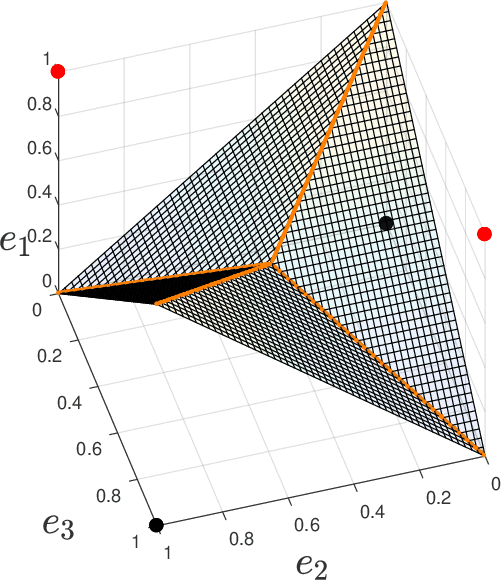

Abstract: We present new families of continuous piecewise linear (CPWL) functions in $\mathbb{R}^n$ whose number of affine pieces grows exponentially in $n$. We show that these functions can be seen as the high-dimensional generalization of the triangle wave function used by Telgarsky in 2016. We prove that they can be computed by ReLU networks whose depth is quadratic and whose width is linear in the space dimension. We also investigate the approximation error of one of these functions by shallower networks and prove a separation result. The main difference between our functions and other constructions is their practical interest: they arise in the scope of channel coding. Hence, computing such functions amounts to performing a decoding operation.
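For orientation, the one-dimensional triangle wave mentioned in the abstract can be sketched with two ReLU units per layer. This is a minimal illustration, not the paper's high-dimensional construction: the function names `triangle`, `sawtooth`, and `count_pieces` are hypothetical, and the particular ReLU parametrisation is one common choice assumed here.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def triangle(x):
    # One tooth on [0, 1]: rises from 0 to 1 on [0, 1/2], falls back to 0 on [1/2, 1].
    # Assumed parametrisation using two ReLU units.
    return 2.0 * relu(x) - 4.0 * relu(x - 0.5)

def sawtooth(x, k):
    # Compose the tooth k times, i.e. a depth-k ReLU network of width 2.
    for _ in range(k):
        x = triangle(x)
    return x

def count_pieces(k, grid=2**12 + 1):
    # Count affine pieces on [0, 1] by counting slope changes on a dyadic grid.
    # The grid is fine enough for k <= 11, since breakpoints are multiples of 2**-k.
    xs = np.linspace(0.0, 1.0, grid)
    ys = sawtooth(xs, k)
    slopes = np.diff(ys) / np.diff(xs)
    changes = np.count_nonzero(~np.isclose(slopes[1:], slopes[:-1]))
    return 1 + changes

if __name__ == "__main__":
    for k in range(1, 6):
        print(k, count_pieces(k))
```

Each additional layer doubles the number of affine pieces on $[0, 1]$, so depth $k$ yields $2^k$ pieces with only $2k$ ReLU units, which is the depth-versus-width effect the abstract generalizes to $\mathbb{R}^n$.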

On the CVP for the root lattices via folding with deep ReLU neural networks

Feb 28, 2019

Abstract: Point lattices and their decoding via neural networks are considered in this paper. Lattice decoding in $\mathbb{R}^n$, known as the closest vector problem (CVP), becomes a classification problem in the fundamental parallelotope with a piecewise linear function defining the boundary. Theoretical results are obtained by studying root lattices. We show how the number of pieces in the boundary function reduces dramatically with folding, from exponential to linear. This translates into a two-layer ReLU network requiring a number of neurons growing exponentially in $n$ to solve the CVP, whereas this complexity becomes polynomial in $n$ for a deep ReLU network.
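To make the reduction to the fundamental parallelotope concrete, here is a small sketch for the two-dimensional root lattice $A_2$ (the hexagonal lattice). It does not reproduce the paper's neural-network decoder or its folding step; it only illustrates, by brute force, that after translating the query into the parallelotope the CVP becomes a choice among its corners. The basis `B` and the function name `closest_point_a2` are assumptions made for this illustration.

```python
import itertools
import numpy as np

# Rows are basis vectors of the hexagonal lattice A_2 (an illustrative choice of basis).
B = np.array([[1.0, 0.0],
              [0.5, np.sqrt(3.0) / 2.0]])

def closest_point_a2(y):
    """Return (lattice point, integer coordinates) closest to y in A_2."""
    y = np.asarray(y, dtype=float)
    # Real coordinates z of y in the basis, so that y = z @ B.
    z = np.linalg.solve(B.T, y)
    # Flooring z translates y into the fundamental parallelotope.
    base = np.floor(z)
    best_u, best_d = None, np.inf
    # For this basis the parallelotope splits into two equilateral triangles,
    # so the closest lattice point is always one of its four corners.
    for corner in itertools.product((0, 1), repeat=2):
        u = base + np.array(corner)
        d = np.linalg.norm(y - u @ B)
        if d < best_d:
            best_u, best_d = u, d
    return best_u @ B, best_u.astype(int)

if __name__ == "__main__":
    point, coords = closest_point_a2([2.3, -1.1])
    print(point, coords)
```

Deciding which corner wins is the classification problem the abstract refers to. In dimension $n$ the parallelotope has $2^n$ corners, which is why the shape of the decision boundary, rather than corner enumeration, is what the paper studies.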
