Lodovico Giaretta

Adaptive Graph Pruning with Sudden-Events Evaluation for Traffic Prediction using Online Semi-Decentralized ST-GNNs

Dec 19, 2025

Abstract: Spatio-Temporal Graph Neural Networks (ST-GNNs) are well-suited for processing high-frequency data streams from geographically distributed sensors in smart mobility systems. However, their deployment at the edge across distributed compute nodes (cloudlets) creates substantial communication overhead due to repeated transmission of overlapping node features between neighbouring cloudlets. To address this, we propose an adaptive pruning algorithm that dynamically filters redundant neighbour features while preserving the most informative spatial context for prediction. The algorithm adjusts pruning rates based on recent model performance, allowing each cloudlet to focus on regions experiencing traffic changes without compromising accuracy. Additionally, we introduce the Sudden Event Prediction Accuracy (SEPA), a novel event-focused metric designed to measure responsiveness to traffic slowdowns and recoveries, which are often missed by standard error metrics. We evaluate our approach in an online semi-decentralized setting with traditional FL, server-free FL, and Gossip Learning on two large-scale traffic datasets, PeMS-BAY and PeMSD7-M, across short-, mid-, and long-term prediction horizons. Experiments show that, in contrast to standard metrics, SEPA exposes the true value of spatial connectivity in predicting dynamic and irregular traffic. Our adaptive pruning algorithm maintains prediction accuracy while significantly lowering communication cost in all online semi-decentralized settings, demonstrating that communication can be reduced without compromising responsiveness to critical traffic events.
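The abstract states only that pruning rates are adjusted from recent model performance; it does not give the update rule. The sketch below is one possible reading under stated assumptions, not the paper's algorithm: the names `update_pruning_rate` and `prune_neighbours`, the fixed `step`, the rate bounds, and the per-neighbour importance scores are all hypothetical.

```python
def update_pruning_rate(rate, recent_error, baseline_error,
                        step=0.1, min_rate=0.0, max_rate=0.9):
    """Hypothetical rule: prune less when recent error is above the
    baseline (traffic is changing), prune more when it is not."""
    if recent_error > baseline_error:
        rate -= step  # fetch more neighbour features
    else:
        rate += step  # save communication
    # Clamp so that at least some neighbour context can always be kept.
    return min(max_rate, max(min_rate, rate))


def prune_neighbours(scores, rate):
    """Keep the highest-scoring fraction (1 - rate) of neighbour nodes.

    `scores` maps a neighbour id to an assumed importance score.
    At least one neighbour is always kept.
    """
    keep = max(1, round(len(scores) * (1.0 - rate)))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:keep]
```

In this reading, a cloudlet would call `update_pruning_rate` after each evaluation window and then request features only for the neighbours returned by `prune_neighbours`.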

Semi-decentralized Training of Spatio-Temporal Graph Neural Networks for Traffic Prediction

Dec 04, 2024

Abstract: In smart mobility, large networks of geographically distributed sensors produce vast amounts of high-frequency spatio-temporal data that must be processed in real time to avoid major disruptions. Traditional centralized approaches are increasingly unsuitable for this task, as they struggle to scale with expanding sensor networks, and reliability issues in central components can easily affect the whole deployment. To address these challenges, we explore and adapt semi-decentralized training techniques for Spatio-Temporal Graph Neural Networks (ST-GNNs) in the smart mobility domain. We implement a simulation framework where sensors are grouped by proximity into multiple cloudlets, each handling a subgraph of the traffic graph, fetching node features from other cloudlets to train its own local ST-GNN model, and exchanging model updates with other cloudlets to ensure consistency, enhancing scalability and removing reliance on a centralized aggregator. We perform an extensive comparative evaluation of four different ST-GNN training setups -- centralized, traditional FL, server-free FL, and Gossip Learning -- on two large-scale traffic datasets, METR-LA and PeMS-BAY, for short-, mid-, and long-term vehicle speed predictions. Experimental results show that semi-decentralized setups are comparable to centralized approaches in performance metrics, while offering advantages in terms of scalability and fault tolerance. In addition, we highlight often overlooked issues in existing literature for distributed ST-GNNs, such as the variation in model performance across different geographical areas due to region-specific traffic patterns, and the significant communication overhead and computational costs that arise from the large receptive field of GNNs, leading to substantial data transfers and increased computation of partial embeddings.

Federated Word2Vec: Leveraging Federated Learning to Encourage Collaborative Representation Learning

Apr 19, 2021

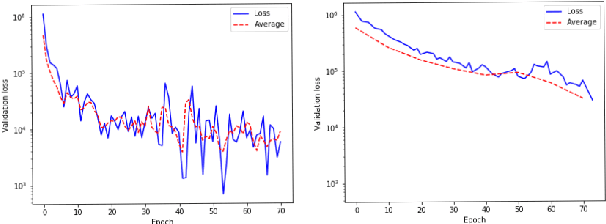

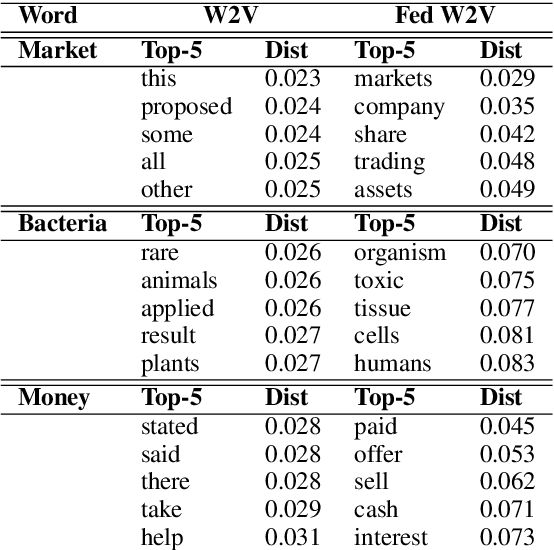

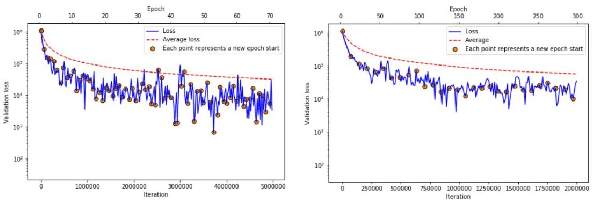

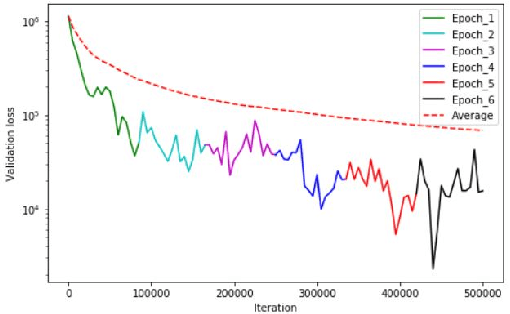

Abstract: Large-scale contextual representation models have significantly advanced NLP in recent years, understanding the semantics of text to a degree never seen before. However, they need to process large amounts of data to achieve high-quality results. Joining and accessing all these data from multiple sources can be extremely challenging due to privacy and regulatory reasons. Federated Learning can solve these limitations by training models in a distributed fashion, taking advantage of the hardware of the devices that generate the data. We show the viability of training NLP models, specifically Word2Vec, with the Federated Learning protocol. In particular, we focus on a scenario in which a small number of organizations each hold a relatively large corpus. The results show that neither the quality of the results nor the convergence time in Federated Word2Vec deteriorates compared to centralised Word2Vec.
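The abstract does not say how the organizations' models are combined. A common choice in Federated Learning is federated averaging, where each participant's parameters are weighted by its local data size. The sketch below assumes that rule and represents each model as a flat list of floats; `federated_average` and its arguments are illustrative names, not taken from the paper.

```python
def federated_average(client_weights, client_sizes):
    """Average parameter vectors, weighting each client by its data size.

    client_weights: list of equal-length lists of floats, one per client.
    client_sizes:   number of local training examples per client.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two organizations; the second holds three times as much data.
merged = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(merged)  # [2.5, 3.5]
```

With a small number of organizations that each hold a large corpus, as in the scenario described, every round would average only a handful of such vectors.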
