Lizhen Liang

Predicting the longevity of resources shared in scientific publications

Mar 24, 2022

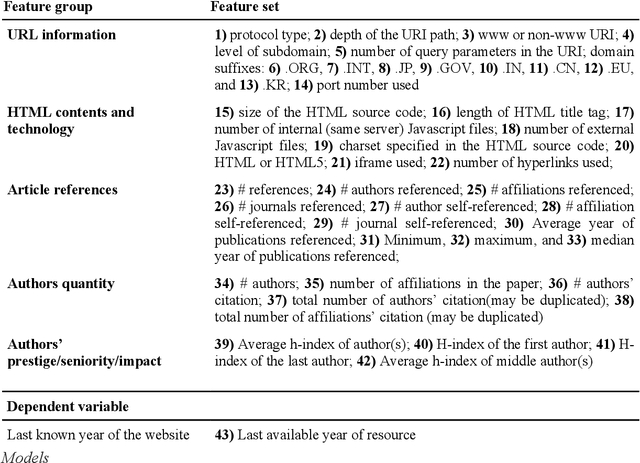

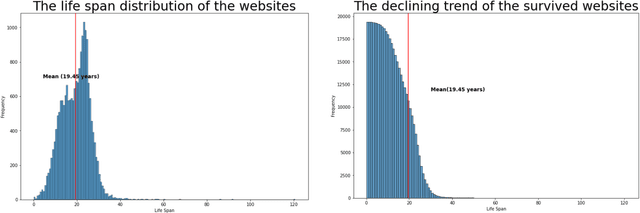

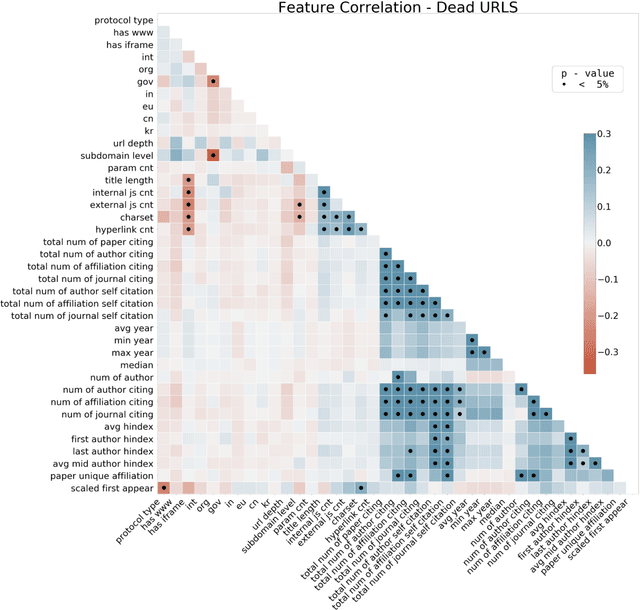

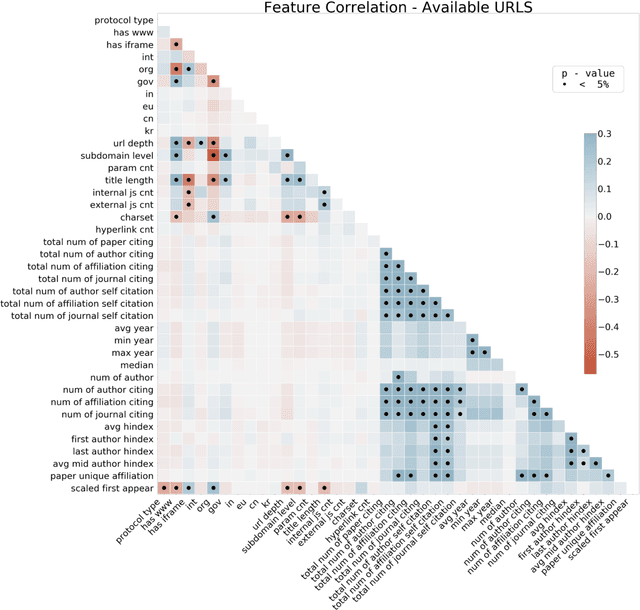

Abstract: Research has shown that most resources shared in articles (e.g., URLs to code or data) are not kept up to date and mostly disappear from the web after some years (Zeng et al., 2019). Little is known about the factors that differentiate and predict the longevity of these resources. This article explores a range of explanatory features related to the publication venue, authors, references, and where the resource is shared. We analyze an extensive repository of publications and, through web archival services, reconstruct how they looked at different time points. We discover that the most important factors are related to where and how the resource is shared, and surprisingly little is explained by the authors' reputation or the prestige of the journal. By examining the places where long-lasting resources are shared, we suggest that it is critical to disseminate and create standards with modern technologies. Finally, we discuss implications for reproducibility and for recognizing scientific datasets as first-class citizens.

Artificial mental phenomena: Psychophysics as a framework to detect perception biases in AI models

Dec 15, 2019

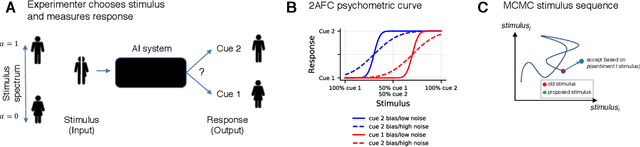

Abstract: Detecting biases in artificial intelligence has become difficult because of the impenetrable nature of deep learning. The central difficulty is in relating unobservable phenomena deep inside models with observable, outside quantities that we can measure from inputs and outputs. For example, can we detect gendered perceptions of occupations (e.g., female librarian, male electrician) using questions to and answers from a word embedding-based system? Current techniques for detecting biases are often customized for a task, dataset, or method, which limits their generalization. In this work, we draw from Psychophysics in Experimental Psychology, a field meant to relate quantities from the real world (i.e., "Physics") to subjective measures in the mind (i.e., "Psyche"), to propose an intellectually coherent and generalizable framework to detect biases in AI. Specifically, we adapt the two-alternative forced choice task (2AFC) to estimate potential biases and the strength of those biases in black-box models. We successfully reproduce previously known biased perceptions in word embeddings and sentiment analysis predictions. We discuss how concepts in experimental psychology can be naturally applied to understanding artificial mental phenomena, and how psychophysics can form a useful methodological foundation to study fairness in AI.
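To make the two-alternative forced choice idea concrete, here is a minimal sketch in Python. It is not the authors' implementation: the toy 3-dimensional vectors, the function names (`forced_choice`, `choice_rate`), and the use of cosine similarity as the decision rule are all assumptions made for illustration. The model is treated as a black box that, given a probe word and two alternatives, must pick one; the share of probes assigned to one alternative then serves as a rough signal of bias.

```python
import math

# Hypothetical toy embeddings, invented for illustration only.
# A real study would query an actual word-embedding model instead.
TOY_EMBEDDINGS = {
    "he": [1.0, 0.0, 0.2],
    "she": [0.0, 1.0, 0.2],
    "electrician": [0.8, 0.2, 0.5],
    "librarian": [0.2, 0.8, 0.5],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def forced_choice(probe, option_a, option_b, embeddings):
    """One 2AFC trial: return the option closer to the probe.

    Ties go to option_a (an arbitrary convention for this sketch).
    """
    sim_a = cosine(embeddings[probe], embeddings[option_a])
    sim_b = cosine(embeddings[probe], embeddings[option_b])
    return option_a if sim_a >= sim_b else option_b


def choice_rate(probes, option_a, option_b, embeddings):
    """Fraction of probes for which option_a is chosen."""
    choices = [forced_choice(p, option_a, option_b, embeddings) for p in probes]
    return choices.count(option_a) / len(choices)


if __name__ == "__main__":
    for word in ("electrician", "librarian"):
        print(word, "->", forced_choice(word, "he", "she", TOY_EMBEDDINGS))
    print("share choosing 'he':",
          choice_rate(["electrician", "librarian"], "he", "she", TOY_EMBEDDINGS))
```

With real embeddings, one would typically repeat such trials over many probe words and examine how the choice rate changes, rather than rely on a single forced choice.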
