Lisi Qarkaxhija

Link Prediction with Untrained Message Passing Layers

Jun 24, 2024

Abstract: Message passing neural networks (MPNNs) operate on graphs by exchanging information between neighbouring nodes. MPNNs have been successfully applied to various node-, edge-, and graph-level tasks in areas like molecular science, computer vision, natural language processing, and combinatorial optimization. However, most MPNNs require training on large amounts of labeled data, which can be costly and time-consuming. In this work, we explore the use of various untrained message passing layers in graph neural networks, i.e. variants of popular message passing architectures where we remove all trainable parameters that are used to transform node features in the message passing step. Focusing on link prediction, we find that untrained message passing layers can lead to competitive and even superior performance compared to fully trained MPNNs, especially in the presence of high-dimensional features. We provide a theoretical analysis of untrained message passing by relating the inner products of features implicitly produced by untrained message passing layers to path-based topological node similarity measures. As such, untrained message passing architectures can be viewed as a highly efficient and interpretable approach to link prediction.
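The link between untrained message passing and path-based similarity can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes unnormalized sum aggregation, one-hot node features, and a toy graph, and the names `propagate`, `score`, and `common_neighbours` are hypothetical. Under these assumptions, one parameter-free layer gives each node its adjacency row as features, so the inner product of two nodes' features equals their number of common neighbours.

```python
def propagate(adj, feats, layers=1):
    """Apply `layers` rounds of parameter-free sum aggregation: H <- A H."""
    n = len(adj)
    h = [row[:] for row in feats]
    for _ in range(layers):
        dim = len(h[0])
        h = [
            [sum(adj[i][k] * h[k][d] for k in range(n)) for d in range(dim)]
            for i in range(n)
        ]
    return h


def score(h, u, v):
    """Link score: inner product of the propagated features of u and v."""
    return sum(a * b for a, b in zip(h[u], h[v]))


def common_neighbours(adj, u, v):
    """Reference similarity: number of nodes adjacent to both u and v."""
    return sum(1 for k in range(len(adj)) if adj[u][k] and adj[v][k])


# Toy undirected graph (illustrative only): edges 0-1, 0-2, 1-2, 2-3.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
n = 4
adj = [[0] * n for _ in range(n)]
for u, v in edges:
    adj[u][v] = adj[v][u] = 1

# One-hot features: node i starts with the i-th unit vector.
one_hot = [[1 if i == j else 0 for j in range(n)] for i in range(n)]

h = propagate(adj, one_hot, layers=1)
print(score(h, 0, 3), common_neighbours(adj, 0, 3))
```

With more layers, the same inner product counts longer walks between the two nodes, which is one way to read the path-based interpretation stated in the abstract.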

Inference of Sequential Patterns for Neural Message Passing in Temporal Graphs

Jun 24, 2024

Abstract: The modelling of temporal patterns in dynamic graphs is an important current research issue in the development of time-aware GNNs. Whether or not a specific sequence of events in a temporal graph constitutes a temporal pattern depends not only on the frequency of its occurrence, but also on whether it deviates from what is expected in a temporal graph where timestamps are randomly shuffled. While accounting for such a random baseline is important to model temporal patterns, it has mostly been ignored by current temporal graph neural networks. To address this issue, we propose HYPA-DBGNN, a novel two-step approach that combines (i) the inference of anomalous sequential patterns in time series data on graphs based on a statistically principled null model, with (ii) a neural message passing approach that utilizes a higher-order De Bruijn graph whose edges capture overrepresented sequential patterns. Our method leverages hypergeometric graph ensembles to identify anomalous edges within both first- and higher-order De Bruijn graphs, which encode the temporal ordering of events. The model introduces an inductive bias that enhances model interpretability. We evaluate our approach for static node classification using benchmark datasets and a synthetic dataset that showcases its ability to incorporate the observed inductive bias regarding over- and under-represented temporal edges. We demonstrate the framework's effectiveness in detecting similar patterns within empirical datasets, resulting in superior performance compared to baseline methods in node classification tasks. To the best of our knowledge, our work is the first to introduce statistically informed GNNs that leverage temporal and causal sequence anomalies. HYPA-DBGNN represents a path for bridging the gap between statistical graph inference and neural graph representation learning, with potential applications to static GNNs.

One Graph to Rule them All: Using NLP and Graph Neural Networks to analyse Tolkien's Legendarium

Oct 14, 2022

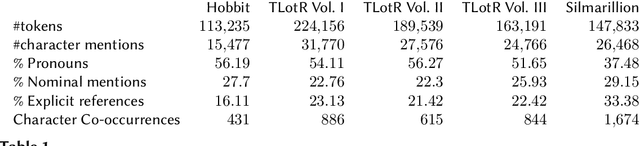

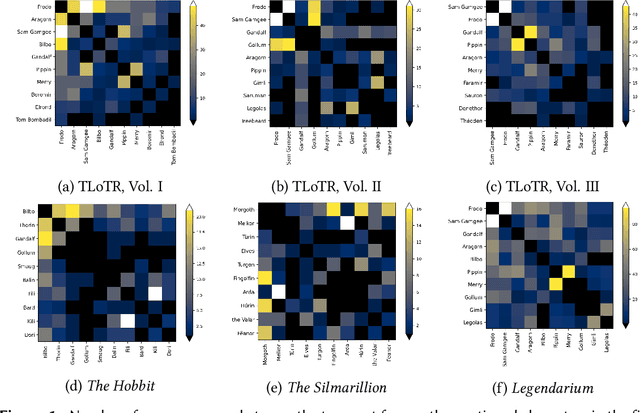

Abstract: Natural Language Processing and Machine Learning have considerably advanced Computational Literary Studies. Similarly, the construction of co-occurrence networks of literary characters, and their analysis using methods from social network analysis and network science, have provided insights into the micro- and macro-level structure of literary texts. Combining these perspectives, in this work we study character networks extracted from a text corpus of J.R.R. Tolkien's Legendarium. We show that this perspective helps us to analyse and visualise the narrative style that characterises Tolkien's works. Addressing character classification, embedding and co-occurrence prediction, we further investigate the advantages of state-of-the-art Graph Neural Networks over a popular word embedding method. Our results highlight the large potential of graph learning in Computational Literary Studies.

De Bruijn goes Neural: Causality-Aware Graph Neural Networks for Time Series Data on Dynamic Graphs

Sep 17, 2022

Abstract: We introduce De Bruijn Graph Neural Networks (DBGNNs), a novel time-aware graph neural network architecture for time-resolved data on dynamic graphs. Our approach accounts for temporal-topological patterns that unfold in the causal topology of dynamic graphs, which is determined by causal walks, i.e. temporally ordered sequences of links by which nodes can influence each other over time. Our architecture builds on multiple layers of higher-order De Bruijn graphs, an iterative line graph construction where nodes in a De Bruijn graph of order k represent walks of length k-1, while edges represent walks of length k. We develop a graph neural network architecture that utilizes De Bruijn graphs to implement a message passing scheme that follows non-Markovian dynamics, which enables us to learn patterns in the causal topology of a dynamic graph. Addressing the issue that De Bruijn graphs with different orders k can be used to model the same data set, we further apply statistical model selection to determine the optimal graph topology to be used for message passing. An evaluation on synthetic and empirical data sets suggests that DBGNNs can leverage temporal patterns in dynamic graphs, which substantially improves the performance in a supervised node classification task.
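The De Bruijn graph construction described in this abstract can be sketched as follows. This is a minimal illustration, not the DBGNN code: it assumes the causal walks have already been extracted and are given as node sequences, and the function name `de_bruijn_edges` and the toy walks are hypothetical. Each window of k+1 consecutive nodes is a walk of length k, which becomes an edge between two walks of length k-1 (its first k nodes and its last k nodes).

```python
from collections import Counter


def de_bruijn_edges(walks, k):
    """Count the edges of an order-k De Bruijn graph built from walks.

    Nodes are tuples of k graph nodes (walks of length k-1); each edge
    corresponds to a walk of length k observed in the input.
    """
    edges = Counter()
    for walk in walks:
        # A walk of length k spans k+1 consecutive nodes.
        for i in range(len(walk) - k):
            window = tuple(walk[i:i + k + 1])
            source, target = window[:-1], window[1:]
            edges[(source, target)] += 1
    return edges


# Toy causal walks (illustrative only).
walks = [("a", "b", "c"), ("a", "b", "d"), ("e", "b", "c")]

first_order = de_bruijn_edges(walks, k=1)
second_order = de_bruijn_edges(walks, k=2)

for (source, target), count in sorted(second_order.items()):
    print(source, "->", target, count)
```

In the first-order graph the edge from `b` to `c` simply has weight 2, while the second-order graph keeps track of whether `c` was reached via `a` or via `e`, which is the kind of order-dependent information a higher-order model can pass along.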
