Lisa Everest

Towards Optimal Valve Prescription for Transcatheter Aortic Valve Replacement (TAVR) Surgery: A Machine Learning Approach

Dec 09, 2025

Abstract:Transcatheter Aortic Valve Replacement (TAVR) has emerged as a minimally invasive treatment option for patients with severe aortic stenosis, a life-threatening cardiovascular condition. Multiple transcatheter heart valves (THV) have been approved for use in TAVR, but current guidelines regarding valve type prescription remain an active topic of debate. We propose a data-driven clinical support tool to identify the optimal valve type with the objective of minimizing the risk of permanent pacemaker implantation (PPI), a predominant postoperative complication. We synthesize a novel dataset that combines U.S. and Greek patient populations and integrates three distinct data sources (patient demographics, computed tomography scans, echocardiograms) while harmonizing differences in each country's record system. We introduce a leaf-level analysis to leverage population heterogeneity and avoid benchmarking against uncertain counterfactual risk estimates. The final prescriptive model shows a reduction in PPI rates of 26% and 16% compared with the current standard of care in our internal U.S. population and external Greek validation cohort, respectively. To the best of our knowledge, this work represents the first unified, personalized prescription strategy for THV selection in TAVR.

Multimodal Prescriptive Deep Learning

Jan 24, 2025

Abstract:We introduce a multimodal deep learning framework, Prescriptive Neural Networks (PNNs), that combines ideas from optimization and machine learning, and is, to the best of our knowledge, the first prescriptive method to handle multimodal data. The PNN is a feedforward neural network trained on embeddings to output an outcome-optimizing prescription. In two real-world multimodal datasets, we demonstrate that PNNs prescribe treatments that are able to significantly improve estimated outcomes in transcatheter aortic valve replacement (TAVR) procedures by reducing estimated postoperative complication rates by 32% and in liver trauma injuries by reducing estimated mortality rates by over 40%. In four real-world, unimodal tabular datasets, we demonstrate that PNNs outperform or perform comparably to other well-known, state-of-the-art prescriptive models; importantly, on tabular datasets, we also recover interpretability through knowledge distillation, fitting interpretable Optimal Classification Tree models onto the PNN prescriptions as classification targets, which is critical for many real-world applications. Finally, we demonstrate that our multimodal PNN models achieve stability across randomized data splits comparable to other prescriptive methods and produce realistic prescriptions across the different datasets.
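The distillation step mentioned in the abstract above (fitting an interpretable tree onto a black-box model's prescriptions as classification targets) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `teacher_prescribe` is a hypothetical stand-in for a trained prescriptive model, the data are toy values, and a single-split decision stump replaces the Optimal Classification Trees used in the paper.

```python
# Illustrative sketch only: a depth-1 stump distilled from a hypothetical
# black-box prescriber. Not the paper's method, data, or tree algorithm.

def teacher_prescribe(row):
    """Hypothetical black-box prescriber standing in for a trained model."""
    return "B" if 0.8 * row[0] + 0.2 * row[1] > 0.5 else "A"

def majority(labels):
    """Most frequent label; ties broken alphabetically for determinism."""
    return max(sorted(set(labels)), key=labels.count)

def fit_stump(rows, labels):
    """Find the one-feature threshold split that best reproduces labels."""
    best = None
    for j in range(len(rows[0])):
        for t in sorted({r[j] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[j] <= t]
            right = [y for r, y in zip(rows, labels) if r[j] > t]
            if not left or not right:
                continue  # skip splits that leave one side empty
            l_lab, r_lab = majority(left), majority(right)
            agree = left.count(l_lab) + right.count(r_lab)
            if best is None or agree > best[0]:
                best = (agree, j, t, l_lab, r_lab)
    if best is None:
        raise ValueError("no valid split found")
    _, j, t, l_lab, r_lab = best
    return {"feature": j, "threshold": t, "left": l_lab, "right": r_lab}

def stump_predict(stump, row):
    """Apply the distilled one-split rule to a single row."""
    side = "left" if row[stump["feature"]] <= stump["threshold"] else "right"
    return stump[side]

# Toy covariates (made up); the targets are the teacher's prescriptions,
# not observed outcomes.
rows = [[0.1, 0.9], [0.2, 0.1], [0.4, 0.5], [0.7, 0.2], [0.8, 0.8], [0.9, 0.4]]
targets = [teacher_prescribe(r) for r in rows]
stump = fit_stump(rows, targets)

# Fidelity: fraction of rows where the stump matches the teacher.
fidelity = sum(stump_predict(stump, r) == y for r, y in zip(rows, targets)) / len(rows)
```

The fidelity score measures how closely the interpretable surrogate reproduces the black-box prescriptions; it says nothing by itself about the quality of the prescriptions.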

Deep Trees for (Un)structured Data: Tractability, Performance, and Interpretability

Oct 28, 2024

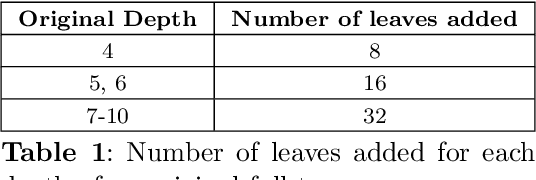

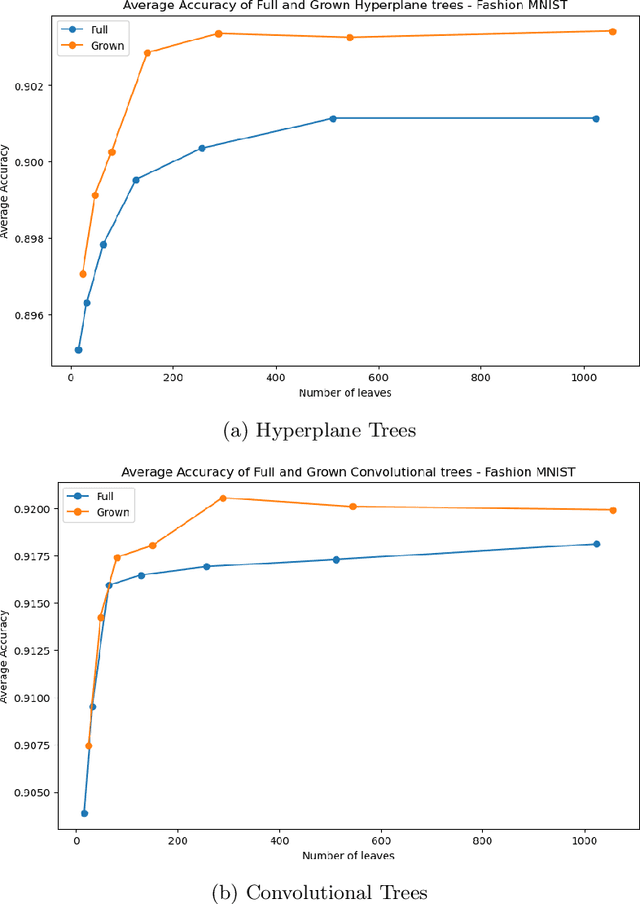

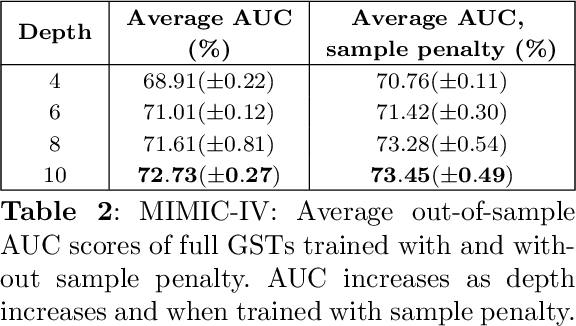

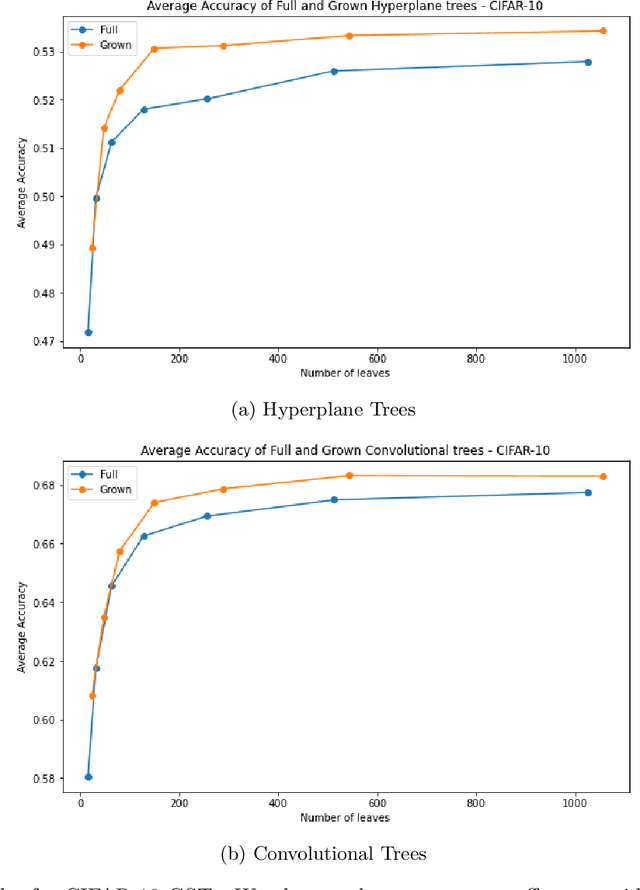

Abstract:Decision Trees have remained a popular machine learning method for tabular datasets, mainly due to their interpretability. However, they lack the expressiveness needed to handle highly nonlinear or unstructured datasets. Motivated by recent advances in tree-based machine learning (ML) techniques and first-order optimization methods, we introduce Generalized Soft Trees (GSTs), which extend soft decision trees (STs) and are capable of processing images directly. We demonstrate their advantages with respect to tractability, performance, and interpretability. We develop a tractable approach to growing GSTs, given by the DeepTree algorithm, which, in addition to new regularization terms, produces high-quality models with far fewer nodes and greater interpretability than traditional soft trees. We test the performance of our GSTs on benchmark tabular and image datasets, including MIMIC-IV, MNIST, Fashion MNIST, CIFAR-10 and Celeb-A. We show that our approach outperforms other popular tree methods (CART, Random Forests, XGBoost) in almost all of the datasets, with Convolutional Trees having a significant edge in the hardest CIFAR-10 and Fashion MNIST datasets. Finally, we explore the interpretability of our GSTs and find that even the most complex GSTs are considerably more interpretable than deep neural networks. Overall, our approach of Generalized Soft Trees provides a tractable method that is high-performing on (un)structured datasets and preserves interpretability more than traditional deep learning methods.
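As a rough illustration of the soft decision trees that the abstract above builds on, the following sketch shows soft routing in the common textbook form: each internal node sends an input to its right child with a sigmoid probability, and the prediction is the probability-weighted average of the leaf values. The dictionary layout, the function names, and the toy weights are assumptions made for this example; it does not reproduce the Generalized Soft Trees or the DeepTree algorithm from the paper.

```python
import math

def sigmoid(z):
    """Logistic function used as the soft routing gate."""
    return 1.0 / (1.0 + math.exp(-z))

def soft_tree_predict(x, node):
    """Expected leaf value for input x under soft (probabilistic) routing."""
    if "value" in node:  # leaf node: return its stored value
        return node["value"]
    # Internal node: a linear score on the features sets the routing probability.
    z = sum(w * xi for w, xi in zip(node["weights"], x)) + node["bias"]
    p_right = sigmoid(z)
    return ((1.0 - p_right) * soft_tree_predict(x, node["left"])
            + p_right * soft_tree_predict(x, node["right"]))

# Hypothetical depth-1 tree with hand-picked (untrained) parameters.
toy_tree = {
    "weights": [1.0, -1.0],
    "bias": 0.0,
    "left": {"value": 0.0},
    "right": {"value": 1.0},
}

balanced = soft_tree_predict([0.0, 0.0], toy_tree)      # gate is exactly 0.5
mostly_right = soft_tree_predict([10.0, 0.0], toy_tree)  # gate close to 1
```

Because every gate is differentiable, the weights of such a tree can in principle be trained with first-order optimization methods, which is the general setting the abstract refers to.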
