Lindsay Sanneman

An Information Bottleneck Characterization of the Understanding-Workload Tradeoff

Oct 11, 2023

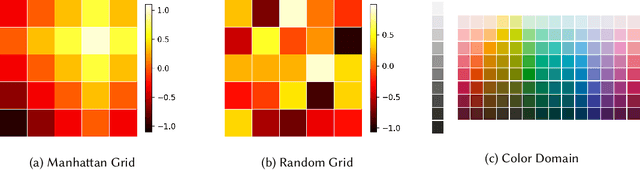

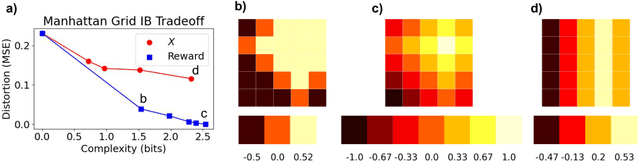

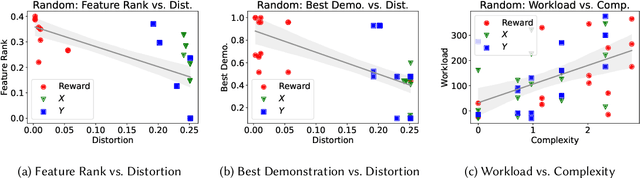

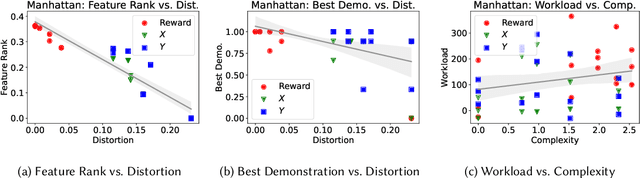

Abstract: Recent advances in artificial intelligence (AI) have underscored the need for explainable AI (XAI) to support human understanding of AI systems. Consideration of human factors that impact explanation efficacy, such as mental workload and human understanding, is central to effective XAI design. Existing work in XAI has demonstrated a tradeoff between understanding and workload induced by different types of explanations. Explaining complex concepts through abstractions (hand-crafted groupings of related problem features) has been shown to effectively address and balance this workload-understanding tradeoff. In this work, we characterize the workload-understanding balance via the Information Bottleneck method: an information-theoretic approach which automatically generates abstractions that maximize informativeness and minimize complexity. In particular, we establish empirical connections between workload and complexity and between understanding and informativeness through human-subject experiments. This empirical link between human factors and information-theoretic concepts provides an important mathematical characterization of the workload-understanding tradeoff which enables user-tailored XAI design.

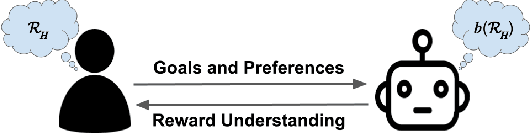

Explaining Reward Functions to Humans for Better Human-Robot Collaboration

Oct 08, 2021

Abstract: Explainable AI techniques that describe agent reward functions can enhance human-robot collaboration in a variety of settings. One context where human understanding of agent reward functions is particularly beneficial is in the value alignment setting. In the value alignment context, an agent aims to infer a human's reward function through interaction so that it can assist the human with their tasks. If the human can understand where gaps exist in the agent's reward understanding, they will be able to teach more efficiently and effectively, leading to quicker human-agent team performance improvements. In order to support human collaborators in the value alignment setting and similar contexts, it is first important to understand the effectiveness of different reward explanation techniques in a variety of domains. In this paper, we introduce a categorization of information modalities for reward explanation techniques, suggest a suite of assessment techniques for human reward understanding, and introduce four axes of domain complexity. We then propose an experiment to study the relative efficacy of a broad set of reward explanation techniques covering multiple modalities of information in a set of domains of varying complexity.

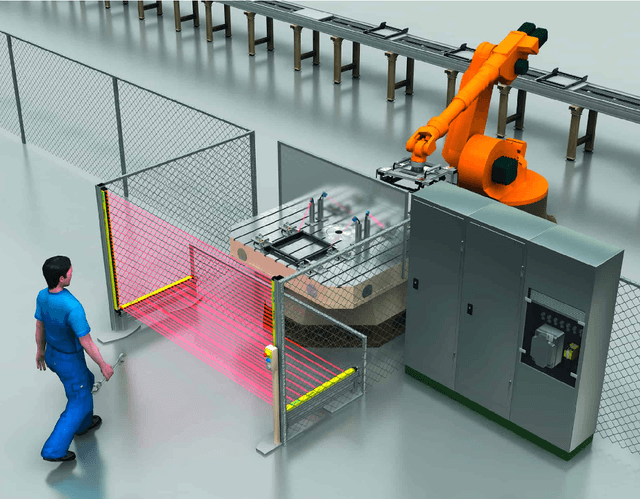

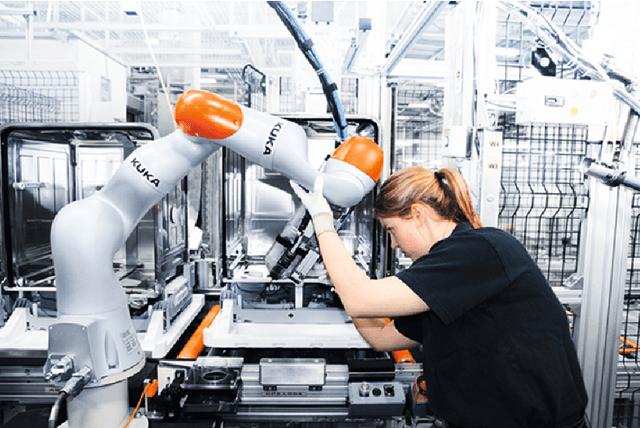

The State of Industrial Robotics: Emerging Technologies, Challenges, and Key Research Directions

Oct 27, 2020

Abstract: Robotics and related technologies are central to the ongoing digitization and advancement of manufacturing. In recent years, a variety of strategic initiatives around the world, including "Industry 4.0," introduced in Germany in 2011, have aimed to improve and connect manufacturing technologies in order to optimize production processes. In this work, we study the changing technological landscape of robotics and "internet-of-things" (IoT)-based connective technologies over the last 7-10 years in the wake of Industry 4.0. We interviewed key players within the European robotics ecosystem, including robotics manufacturers and integrators, original equipment manufacturers (OEMs), and applied industrial research institutions, and synthesize our findings in this paper. We first detail the state-of-the-art robotics and IoT technologies that we observed and that the companies discussed during our interviews. We then describe the processes the companies follow when deciding whether and how to integrate new technologies, the challenges they face when integrating these technologies, and some immediate future technological avenues they are exploring in robotics and IoT. Finally, based on our findings, we highlight key research directions for the robotics community that can enable improved capabilities in the context of manufacturing.

Trust Considerations for Explainable Robots: A Human Factors Perspective

May 12, 2020

Abstract: Recent advances in artificial intelligence (AI) and robotics have drawn attention to the need for AI systems and robots to be understandable to human users. The explainable AI (XAI) and explainable robots literature aims to enhance human understanding and human-robot team performance by providing users with necessary information about AI and robot behavior. Simultaneously, the human factors literature has long addressed important considerations that contribute to human performance, including human trust in autonomous systems. In this paper, drawing from the human factors literature, we discuss three important trust-related considerations for the design of explainable robot systems: the bases of trust, trust calibration, and trust specificity. We further detail existing and potential metrics for assessing trust in robotic systems based on explanations provided by explainable robots.
