Lihui Xue

The Road to On-board Change Detection: A Lightweight Patch-Level Change Detection Network via Exploring the Potential of Pruning and Pooling

Oct 16, 2023

Abstract: Existing satellite remote sensing change detection (CD) methods often crop the original large-scale bi-temporal image pairs into small patch pairs and then apply pixel-level CD methods uniformly to all of the patch pairs. However, because change is sparse in large-scale satellite remote sensing images, existing pixel-level CD methods waste computation and memory on the many unchanged areas, which reduces the processing efficiency of on-board platforms with extremely limited computation and memory resources. To address this issue, we propose a lightweight patch-level CD network (LPCDNet) that rapidly removes the many unchanged patch pairs in large-scale bi-temporal image pairs. This accelerates the subsequent pixel-level CD processing stage and reduces its memory cost. In LPCDNet, a sensitivity-guided channel pruning method is proposed to remove unimportant channels and construct a lightweight backbone network on the basis of the ResNet18 network. Then, a multi-layer feature compression (MLFC) module is designed to compress and fuse the multi-level feature information of the bi-temporal image patches. The output of the MLFC module is fed into a fully-connected decision network to generate the predicted binary label. Finally, a weighted cross-entropy loss is used during training to tackle the changed/unchanged class imbalance problem. Experiments on two CD datasets demonstrate that LPCDNet achieves more than 1000 frames per second on an edge computation platform, the NVIDIA Jetson AGX Orin, which is more than 3 times the speed of existing methods, without noticeable loss in CD performance. In addition, our method reduces the memory cost of the subsequent pixel-level CD processing stage by more than 60%.
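The weighted cross-entropy loss mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not say how the class weights are chosen, so the inverse-frequency weighting below is an assumption, and the function names `class_weights` and `weighted_cross_entropy` are hypothetical rather than taken from the paper.

```python
import math


def class_weights(labels):
    """Inverse-frequency weights for binary patch labels.

    Assumption: 0 = unchanged patch pair, 1 = changed patch pair.
    The paper's actual weighting scheme is not given in the abstract.
    """
    n = len(labels)
    n_changed = sum(labels)
    n_unchanged = n - n_changed
    if n_changed == 0 or n_unchanged == 0:
        raise ValueError("both classes must be present")
    return {0: n / (2 * n_unchanged), 1: n / (2 * n_changed)}


def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """Weighted mean of per-patch binary cross-entropy terms.

    probs[i] is the predicted probability that patch pair i is changed.
    """
    total = 0.0
    weight_sum = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        nll = -math.log(p) if y == 1 else -math.log(1.0 - p)
        total += weights[y] * nll
        weight_sum += weights[y]
    return total / weight_sum


# Toy batch: 9 unchanged patch pairs and 1 changed pair.
labels = [0] * 9 + [1]
# A classifier that always predicts "probably unchanged".
probs = [0.1] * 10

weights = class_weights(labels)
weighted = weighted_cross_entropy(probs, labels, weights)
unweighted = weighted_cross_entropy(probs, labels, {0: 1.0, 1: 1.0})
```

In this toy batch the rare changed class receives a larger weight than the unchanged class, so a classifier that ignores changed patches is penalized more heavily under the weighted loss than under the unweighted one.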

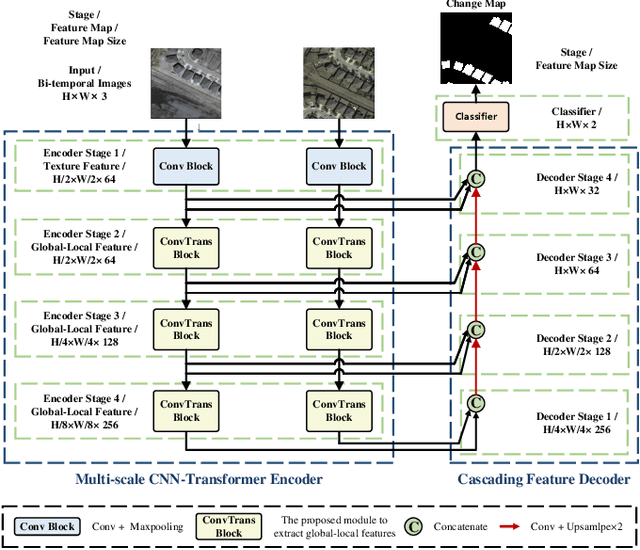

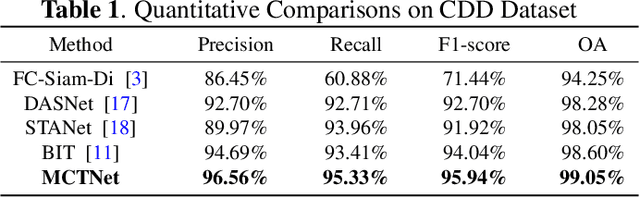

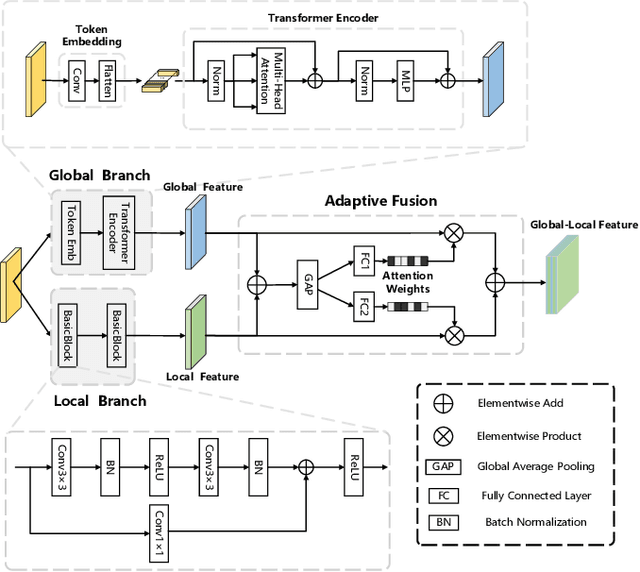

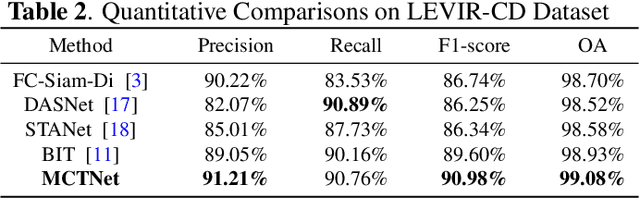

MCTNet: A Multi-Scale CNN-Transformer Network for Change Detection in Optical Remote Sensing Images

Oct 14, 2022

Abstract: For the task of change detection (CD) in remote sensing images, methods based on deep convolutional neural networks (CNNs) have recently incorporated transformer modules to improve their capability for global feature extraction. However, they suffer degraded CD performance on small changed areas because deep CNNs and transformer modules are integrated only at a single scale. To address this issue, we propose a hybrid network based on a multi-scale CNN-transformer structure, termed MCTNet, in which multi-scale global and local information is exploited to make CD performance more robust on changed areas of different sizes. In particular, we design the ConvTrans block to adaptively aggregate global features from transformer modules and local features from CNN layers, which provides abundant global-local features at different scales. Experimental results demonstrate that MCTNet achieves better detection performance than existing state-of-the-art CD methods.
