Leonie Benischke

Evaluating Large Language Models for Causal Modeling

Nov 24, 2024

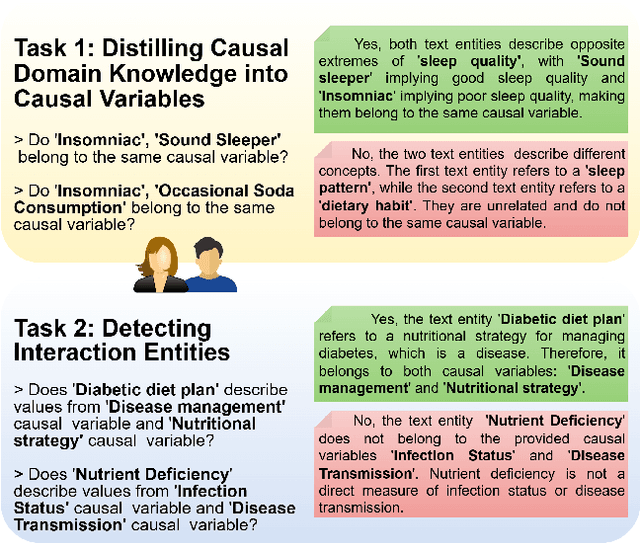

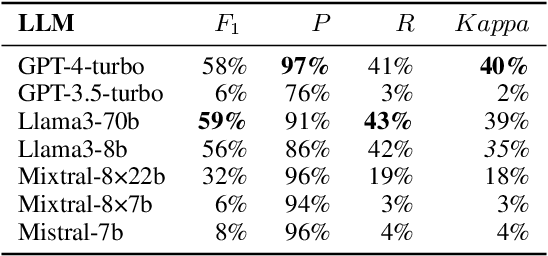

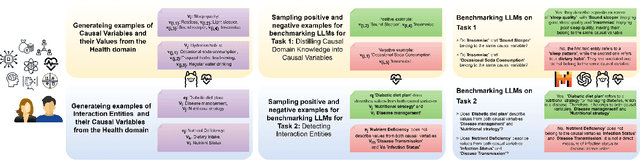

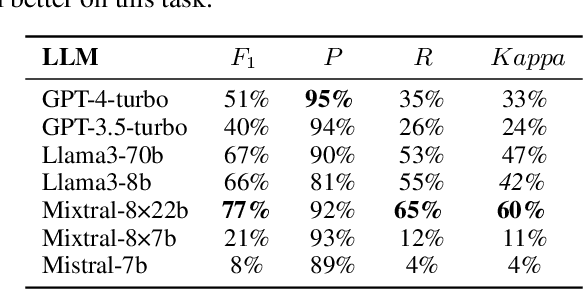

Abstract:In this paper, we consider the process of transforming causal domain knowledge into a representation that aligns more closely with guidelines from causal data science. To this end, we introduce two novel tasks related to distilling causal domain knowledge into causal variables and detecting interaction entities using LLMs. We have determined that contemporary LLMs are helpful tools for conducting causal modeling tasks in collaboration with human experts, as they can provide a wider perspective. Specifically, LLMs, such as GPT-4-turbo and Llama3-70b, perform better in distilling causal domain knowledge into causal variables compared to sparse expert models, such as Mixtral-8x22b. On the contrary, sparse expert models such as Mixtral-8x22b stand out as the most effective in identifying interaction entities. Finally, we highlight the dependency between the domain where the entities are generated and the performance of the chosen LLM for causal modeling.

Increasing the Accessibility of Causal Domain Knowledge via Causal Information Extraction Methods: A Case Study in the Semiconductor Manufacturing Industry

Nov 15, 2024

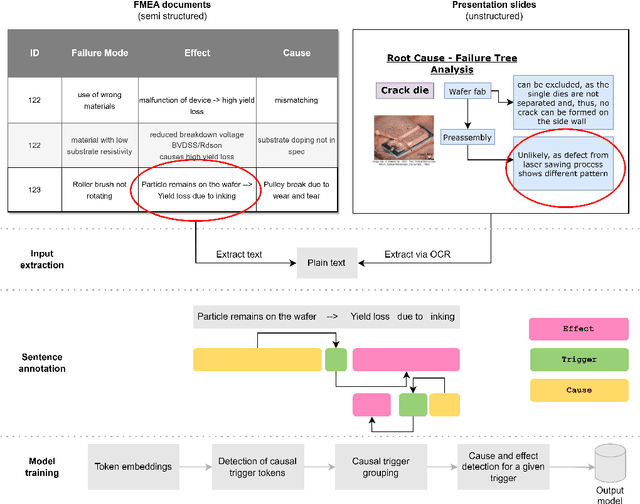

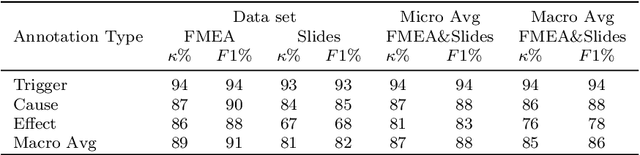

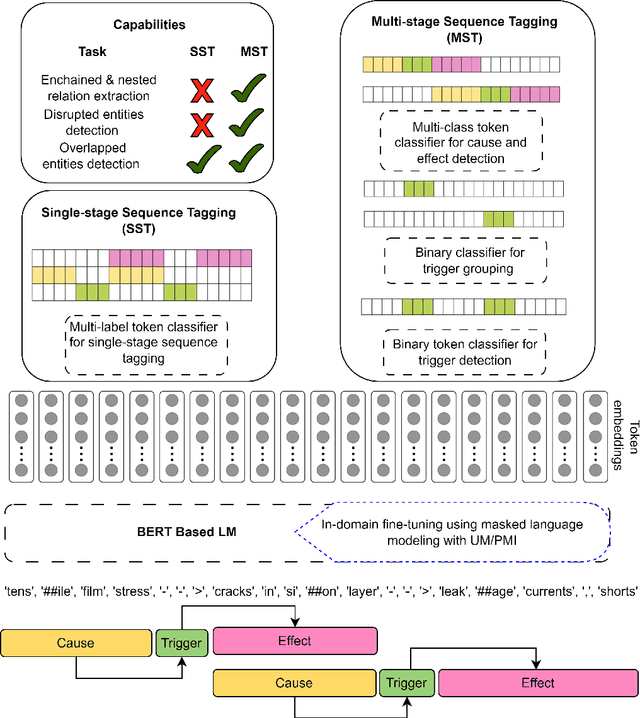

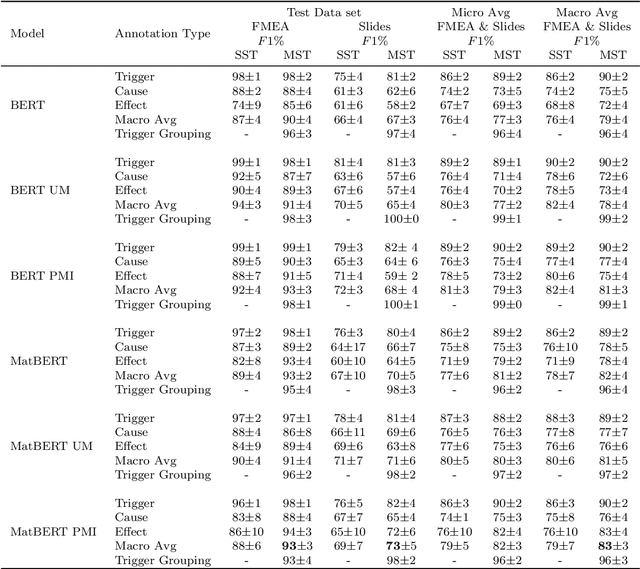

Abstract:The extraction of causal information from textual data is crucial in the industry for identifying and mitigating potential failures, enhancing process efficiency, prompting quality improvements, and addressing various operational challenges. This paper presents a study on the development of automated methods for causal information extraction from actual industrial documents in the semiconductor manufacturing industry. The study proposes two types of causal information extraction methods, single-stage sequence tagging (SST) and multi-stage sequence tagging (MST), and evaluates their performance using existing documents from a semiconductor manufacturing company, including presentation slides and FMEA (Failure Mode and Effects Analysis) documents. The study also investigates the effect of representation learning on downstream tasks. The presented case study showcases that the proposed MST methods for extracting causal information from industrial documents are suitable for practical applications, especially for semi structured documents such as FMEAs, with a 93\% F1 score. Additionally, MST achieves a 73\% F1 score on texts extracted from presentation slides. Finally, the study highlights the importance of choosing a language model that is more aligned with the domain and in-domain fine-tuning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge