Leonardo Borgioli

Expanded Comprehensive Robotic Cholecystectomy Dataset (CRCD)

Dec 16, 2024

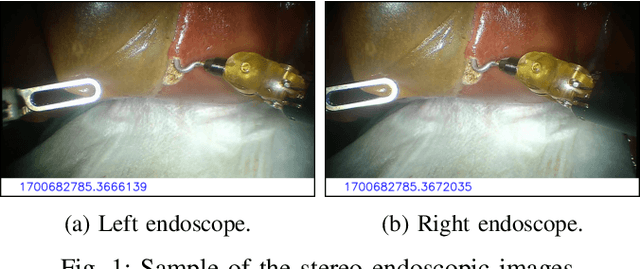

Abstract: In recent years, the application of machine learning to minimally invasive surgery (MIS) has attracted considerable interest. Datasets are critical to the use of such techniques. This paper presents a unique dataset recorded during ex vivo pseudo-cholecystectomy procedures on pig livers using the da Vinci Research Kit (dVRK). Unlike existing datasets, it addresses a critical gap by providing comprehensive kinematic data, recordings of all pedal inputs, and a time-stamped record of the endoscope's movements. This expanded version also includes segmentation and keypoint annotations of images, enhancing its utility for computer vision applications. Contributed by seven surgeons, whose varied backgrounds and experience levels are provided as part of this expanded version, the dataset is an important new resource for surgical robotics research. It enables the development of advanced methods for evaluating surgeon skills, tools for providing better context awareness, and automation of surgical tasks. Our work overcomes the limitations of incomplete recordings and imprecise kinematic data found in other datasets. To demonstrate the potential of the dataset for advancing automation in surgical robotics, we introduce two models that predict clutch usage and camera activation, a 3D scene reconstruction example, and the results from our keypoint and segmentation models.

Sensory Glove-Based Surgical Robot User Interface

Mar 20, 2024

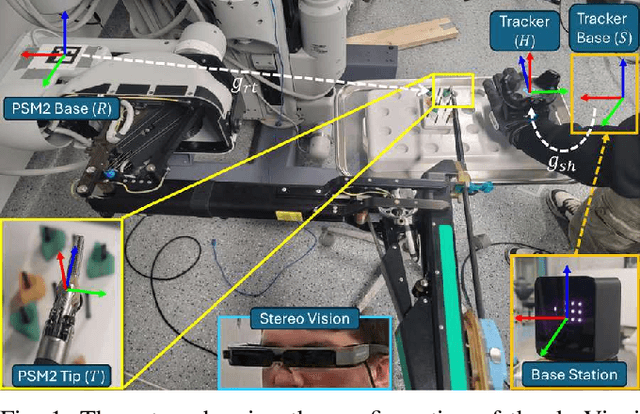

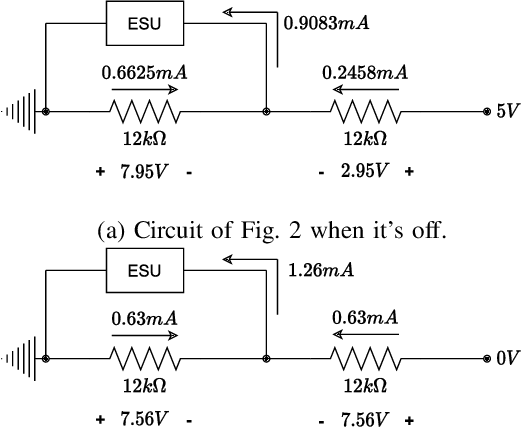

Abstract: Robotic surgery has reached a high level of maturity and has become an integral part of standard surgical care. However, existing surgeon consoles are bulky, take up valuable space in the operating room, and present challenges for surgical team coordination, and their proprietary nature makes it difficult to take advantage of recent technological advances, especially in virtual and augmented reality. One potential area for further improvement is the integration of modern sensory gloves into robotic platforms, allowing surgeons to control robotic arms directly and intuitively with their hand movements. We propose one such system that combines an HTC Vive tracker, a Manus Meta Prime 3 XR sensory glove, and God Vision wireless smart glasses. The system controls one arm of a da Vinci surgical robot. In addition to moving the arm, the surgeon can use fingers to control the end-effector of the surgical instrument. Hand gestures are used to implement clutching and similar functions. In particular, we introduce clutching of the instrument orientation, a functionality not available in the da Vinci system. The vibrotactile elements of the glove are used to provide feedback to the user when gesture commands are invoked. A preliminary evaluation of the system shows that it has excellent tracking accuracy and allows surgeons to efficiently perform common surgical training tasks after minimal practice with the new interface; this suggests that the interface is highly intuitive. The proposed system is inexpensive, allows rapid prototyping, and opens opportunities for further innovations in the design of surgical robot interfaces.

Comprehensive Robotic Cholecystectomy Dataset (CRCD): Integrating Kinematics, Pedal Signals, and Endoscopic Videos

Dec 02, 2023

Abstract: In recent years, the potential applications of machine learning to Minimally Invasive Surgery (MIS) have spurred interest in datasets that can be used to develop data-driven tools. This paper introduces a novel dataset recorded during ex vivo pseudo-cholecystectomy procedures on pig livers, utilizing the da Vinci Research Kit (dVRK). Unlike current datasets, ours bridges a critical gap by offering not only full kinematic data but also all pedal inputs used during the procedure and a time-stamped record of the endoscope's movements. Contributed by seven surgeons, this dataset introduces a new dimension to surgical robotics research, allowing the creation of advanced models for automating console functionalities. Our work addresses the existing limitations of incomplete recordings and imprecise kinematic data, common in other datasets. By introducing two models, dedicated to predicting clutch usage and camera activation, we highlight the dataset's potential for advancing automation in surgical robotics. The comparison of methodologies and time windows provides insights into the models' boundaries and limitations.
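To make the idea of comparing time windows concrete, here is a minimal sketch of how kinematic samples might be paired with a clutch label for a prediction model. It is an illustration only, not the method used in the paper: the function `make_windows`, the parameters `window` and `horizon`, and the toy data are all hypothetical.

```python
from typing import List, Sequence, Tuple


def make_windows(
    samples: Sequence[float],
    pedal: Sequence[int],
    window: int,
    horizon: int,
) -> List[Tuple[List[float], int]]:
    """Pair each window of kinematic samples with a binary label.

    The label is 1 if the (hypothetical) clutch signal is pressed at any
    point in the `horizon` samples that follow the window, else 0.
    """
    pairs = []
    last_start = len(samples) - window - horizon
    for start in range(last_start + 1):
        end = start + window
        features = list(samples[start:end])
        label = int(any(pedal[end:end + horizon]))
        pairs.append((features, label))
    return pairs


# Toy data (made up): one kinematic channel and a clutch pedal signal.
kin = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
clutch = [0, 0, 0, 0, 1, 0]
pairs = make_windows(kin, clutch, window=2, horizon=2)
```

Varying `window` and `horizon` in a setup like this is one way to probe how far ahead, and from how much history, a model can anticipate pedal use.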

A Framework For Automated Dissection Along Tissue Boundary

Oct 14, 2023

Abstract: Robotic surgery promises enhanced precision and adaptability over traditional surgical methods. It also offers the possibility of automating surgical interventions, resulting in reduced stress on the surgeon, better surgical outcomes, and lower costs. Cholecystectomy, the removal of the gallbladder, serves as an ideal model procedure for automation due to its distinct and well-contrasted anatomical features between the gallbladder and liver, along with standardized surgical maneuvers. Dissection is a frequently used subtask in cholecystectomy where the surgeon applies energy through the hook to detach the gallbladder from the liver. Hence, dissection along tissue boundaries is a good candidate for surgical automation. For the da Vinci surgical robot to perform the same procedure as a surgeon automatically, it needs to have the ability to (1) recognize and distinguish between the two different tissues (e.g., the liver and the gallbladder), (2) understand where the boundary between the two tissues is located in the 3D workspace, (3) locate the instrument tip relative to the boundary in 3D space using visual feedback, and (4) move the instrument along the boundary. This paper presents a novel framework that addresses these challenges through AI-assisted image processing and vision-based robot control. We also present the ex vivo evaluation of the automated procedure on chicken and pork liver specimens that demonstrates the effectiveness of the proposed framework.
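Step (3) above, locating the instrument tip relative to the boundary, can be illustrated with a small geometric sketch. It assumes the boundary is already available as an ordered list of 3D points (a polyline) and finds the closest boundary point to the tip. The function names and coordinates are hypothetical, and this is not the framework described in the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def closest_point_on_segment(p: Point, a: Point, b: Point) -> Point:
    """Project p onto segment ab, clamping to the segment's endpoints."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    denom = sum(c * c for c in ab)
    if denom == 0.0:  # degenerate segment: a and b coincide
        return a
    t = sum(ap[i] * ab[i] for i in range(3)) / denom
    t = max(0.0, min(1.0, t))
    return (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])


def distance_to_boundary(tip: Point, boundary: Sequence[Point]) -> Tuple[float, Point]:
    """Return (distance, closest point) from the tip to a boundary polyline."""
    best_d, best_q = math.inf, boundary[0]
    for a, b in zip(boundary, boundary[1:]):
        q = closest_point_on_segment(tip, a, b)
        d = math.dist(tip, q)
        if d < best_d:
            best_d, best_q = d, q
    return best_d, best_q


# Made-up boundary and tip position, in arbitrary units.
boundary = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
d, q = distance_to_boundary((0.5, 0.2, 0.0), boundary)
```

In a vision-based controller, an offset like this could serve as the error signal that keeps the instrument on the boundary while it advances, which corresponds to step (4).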
