Leon Eisemann

Introducing a Class-Aware Metric for Monocular Depth Estimation: An Automotive Perspective

Sep 06, 2024

Abstract: The increasing accuracy reports of metric monocular depth estimation models lead to a growing interest from the automotive domain. Current model evaluations do not provide deeper insights into the models' performance, also in relation to safety-critical or unseen classes. Within this paper, we present a novel approach for the evaluation of depth estimation models. Our proposed metric leverages three components: a class-wise component, an edge and corner image feature component, and a global consistency retaining component. Classes are further weighted on their distance in the scene and on criticality for automotive applications. In the evaluation, we present the benefits of our metric through comparison to classical metrics, class-wise analytics, and the retrieval of critical situations. The results show that our metric provides deeper insights into model results while fulfilling safety-critical requirements. We release the code and weights on the following repository: https://github.com/leisemann/ca_mmde

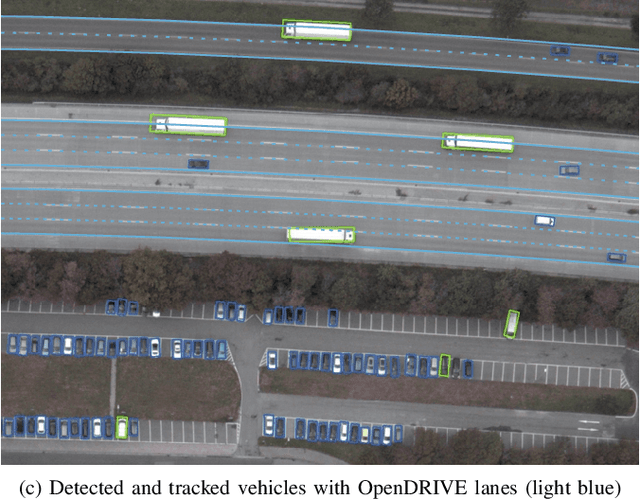

Divide and Conquer: A Systematic Approach for Industrial Scale High-Definition OpenDRIVE Generation from Sparse Point Clouds

Jul 26, 2024

Abstract: High-definition road maps play a crucial role in the functionality and verification of highly automated driving functions. These contain precise information about the road network, geometry, condition, as well as traffic signs. Despite their importance for the development and evaluation of driving functions, the generation of high-definition maps is still an ongoing research topic. While previous work in this area has primarily focused on the accuracy of road geometry, we present a novel approach for automated large-scale map generation for use in industrial applications. Our proposed method leverages a minimal amount of external information about the road to process LiDAR data in segments. These segments are subsequently combined, enabling a flexible and scalable process that achieves high-definition accuracy. Additionally, we showcase the use of the resulting OpenDRIVE in driving function simulation.

* 8 pages, 5 figures, 1 table. arXiv admin note: text overlap with arXiv:2405.07544

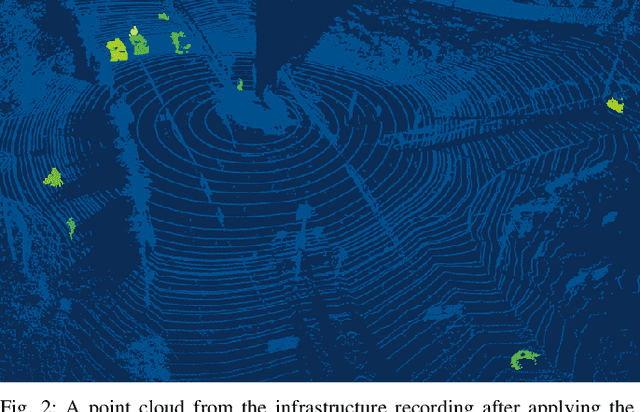

Automatic Odometry-Less OpenDRIVE Generation From Sparse Point Clouds

May 13, 2024

Abstract: High-resolution road representations are a key factor for the success of (highly) automated driving functions. These representations, for example, high-definition (HD) maps, contain accurate information on a multitude of factors, among others: road geometry, lane information, and traffic signs. Through the growing complexity and functionality of automated driving functions, also the requirements on testing and evaluation grow continuously. This leads to an increasing interest in virtual test drives for evaluation purposes. As roads play a crucial role in traffic flow, accurate real-world representations are needed, especially when deriving realistic driving behavior data. This paper proposes a novel approach to generate realistic road representations based solely on point cloud information, independent of the LiDAR sensor, mounting position, and without the need for odometry data, multi-sensor fusion, machine learning, or highly-accurate calibration. As the primary use case is simulation, we use the OpenDRIVE format for evaluation.

* 8 pages, 4 figures, 3 algorithms, 2 tables

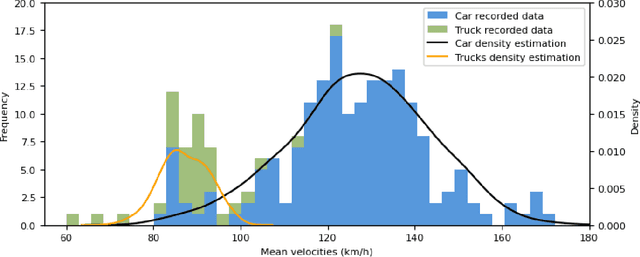

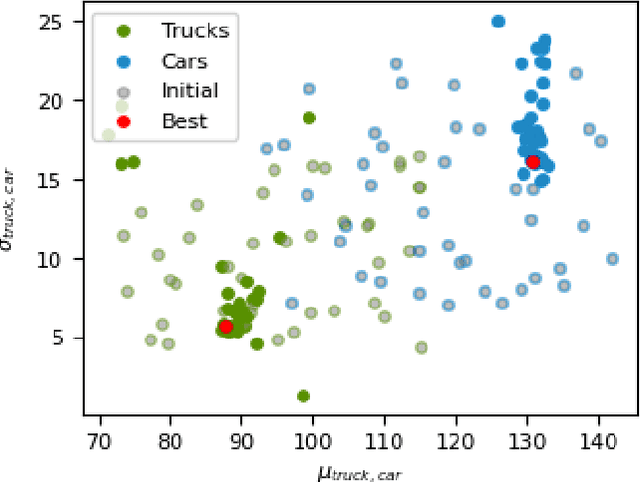

A Joint Approach Towards Data-Driven Virtual Testing for Automated Driving: The AVEAS Project

May 10, 2024

Abstract: With growing complexity and responsibility of automated driving functions in road traffic and growing scope of their operational design domains, there is increasing demand for covering significant parts of development, validation, and verification via virtual environments and simulation models. If, however, simulations are meant not only to augment real-world experiments, but to replace them, quantitative approaches are required that measure to what degree and under which preconditions simulation models adequately represent reality, and thus allow their usage for virtual testing of driving functions. Especially in research and development areas related to the safety impacts of the "open world", there is a significant shortage of real-world data to parametrize and/or validate simulations - especially with respect to the behavior of human traffic participants, whom automated vehicles will meet in mixed traffic. This paper presents the intermediate results of the German AVEAS research project (www.aveas.org) which aims at developing methods and metrics for the harmonized, systematic, and scalable acquisition of real-world data for virtual verification and validation of advanced driver assistance systems and automated driving, and establishing an online database following the FAIR principles.

* 6 pages, 5 figures, 2 tables

An Approach to Systematic Data Acquisition and Data-Driven Simulation for the Safety Testing of Automated Driving Functions

May 02, 2024

Abstract: With growing complexity and criticality of automated driving functions in road traffic and their operational design domains (ODD), there is increasing demand for covering significant proportions of development, validation, and verification in virtual environments and through simulation models. If, however, simulations are meant not only to augment real-world experiments, but to replace them, quantitative approaches are required that measure to what degree and under which preconditions simulation models adequately represent reality, and thus allow their results to be used accordingly. Especially in R&D areas related to the safety impact of the "open world", there is a significant shortage of real-world data to parameterize and/or validate simulations - especially with respect to the behavior of human traffic participants, whom automated driving functions will meet in mixed traffic. We present an approach to systematically acquire data in public traffic by heterogeneous means, transform it into a unified representation, and use it to automatically parameterize traffic behavior models for use in data-driven virtual validation of automated driving functions.
