Lawson Wong

Robot Body Schema Learning from Full-body Extero/Proprioception Sensors

Feb 28, 2024

Abstract: For a robot, its body structure is a priori knowledge fixed at design time. However, when such information is not available, can a robot recognize it by itself? In this paper, we aim to give a robot the ability to learn its body structure from exteroception and proprioception data collected from on-body sensors. Using a novel machine learning method, the robot learns a binary Heterogeneous Dependency Matrix from its sensor readings. We show that this matrix is equivalent to a Heterogeneous out-tree structure that uniquely represents the robot body topology. We explore the properties of the matrix and the out-tree, and propose a remedy to repair them when they are contaminated by partial observability or data noise. We ran our algorithm on 6 robots with different body structures in simulation and on 1 real robot. Our algorithm correctly recognized their body structures using only on-body sensor readings, with no prior knowledge of the topology.
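To make the matrix-to-tree correspondence concrete, the following is a minimal sketch, not the paper's method. It assumes a simplified, homogeneous binary matrix in which `adj[i][j] == 1` means node `j` is a direct child of node `i`; the abstract does not define the Heterogeneous Dependency Matrix, so this convention and the function name `out_tree_root` are hypothetical. The check verifies the defining properties of an out-tree: exactly one root with no parent, every other node with exactly one parent, and every node reachable from the root.

```python
from collections import deque


def out_tree_root(adj):
    """Return the root index if the binary matrix encodes an out-tree, else None.

    Assumed convention (not taken from the paper): adj[i][j] == 1 means
    node j is a direct child of node i.
    """
    n = len(adj)
    if n == 0 or any(len(row) != n for row in adj):
        return None

    # In-degree of node j is the number of parents pointing at it.
    in_deg = [sum(adj[i][j] for i in range(n)) for j in range(n)]
    roots = [j for j in range(n) if in_deg[j] == 0]

    # An out-tree has exactly one parentless node and no node with two parents.
    if len(roots) != 1 or any(d > 1 for d in in_deg):
        return None

    # Every node must be reachable from the root (rules out detached cycles).
    root = roots[0]
    seen = {root}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in range(n):
            if adj[u][v] and v not in seen:
                seen.add(v)
                queue.append(v)
    return root if len(seen) == n else None
```

A matrix corrupted by noise, for example one in which a node acquires two parents, fails this check, which is the kind of inconsistency a repair step would have to resolve.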

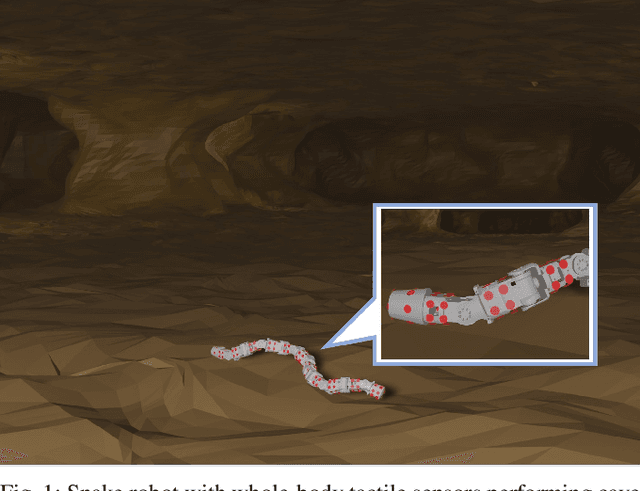

Snake Robot with Tactile Perception Navigates on Large-scale Challenging Terrain

Dec 06, 2023

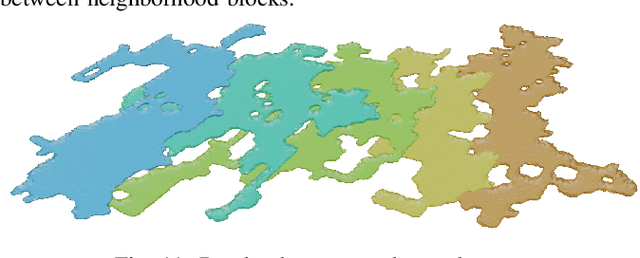

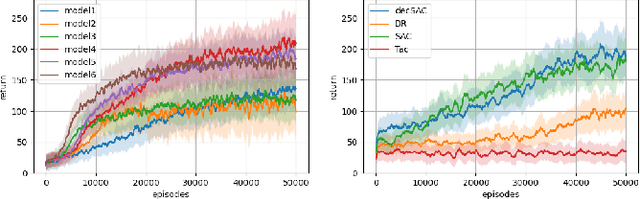

Abstract: Along with the advancement of robot skin technology, there has been notable progress in the development of snake robots featuring body-surface tactile perception. In this study, we propose a locomotion control framework for snake robots that integrates tactile perception to improve their adaptability to various terrains. Our approach uses a hierarchical reinforcement learning (HRL) architecture, in which the high level orchestrates global navigation strategies while the low level uses curriculum learning for local navigation maneuvers. Collision detection for whole-body tactile sensing is computationally demanding and severely reduces simulator efficiency, so we adopt a distributed training scheme to mitigate the slowdown. We evaluated the navigation performance of the snake robot in complex, large-scale cave exploration with challenging terrains. The results show improvements in motion efficiency, evidencing the efficacy of tactile perception in terrain-adaptive locomotion of snake robots.

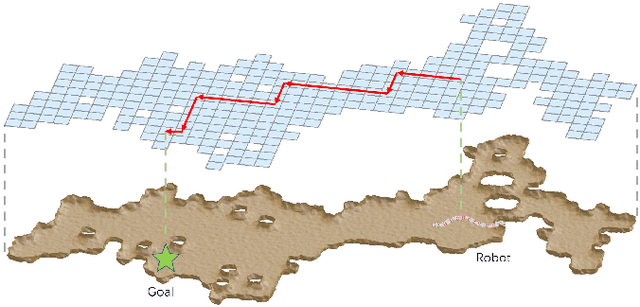

Hierarchical RL-Guided Large-scale Navigation of a Snake Robot

Dec 06, 2023

Abstract: Classical snake robot control relies on mimicking snake-like gaits tuned for specific environments. However, to operate adaptively in unstructured environments, gait generation must be dynamically scheduled. In this work, we present a four-layer hierarchical control scheme that enables the snake robot to navigate freely in large-scale environments. The proposed model decomposes navigation into global planning, local planning, gait generation, and gait tracking. Using reinforcement learning (RL) and a central pattern generator (CPG), our method learns to navigate in complex mazes within hours and can be directly deployed to arbitrary new environments in a zero-shot fashion. We use a high-fidelity model of Northeastern's slithering robot COBRA to test the effectiveness of the proposed hierarchical control approach.
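As a rough illustration of what a gait-generation layer built on a CPG can look like, the sketch below uses a chain of coupled phase oscillators, one per joint. The abstract does not specify the CPG model, so the oscillator equations, the function names `cpg_step` and `joint_angles`, and all parameter values are assumptions made for illustration. Each oscillator advances at a shared frequency and is pulled toward lagging its predecessor by a fixed phase, which produces a travelling wave along the body.

```python
import math


def cpg_step(phases, dt, freq_hz=0.5, coupling=2.0, phase_lag=math.pi / 4):
    """Advance a chain of coupled phase oscillators by one Euler step.

    All parameters are illustrative assumptions, not values from the paper.
    """
    omega = 2.0 * math.pi * freq_hz
    new_phases = []
    for i, theta in enumerate(phases):
        dtheta = omega
        if i > 0:
            # Pull oscillator i toward lagging its predecessor by phase_lag.
            dtheta += coupling * math.sin(phases[i - 1] - theta - phase_lag)
        new_phases.append(theta + dt * dtheta)
    return new_phases


def joint_angles(phases, amplitude=0.6, offset=0.0):
    """Map oscillator phases to joint angle targets in radians."""
    return [amplitude * math.sin(theta) + offset for theta in phases]


def simulate(num_joints=4, steps=2000, dt=0.01):
    """Run the oscillator chain from rest and return the final phases."""
    phases = [0.0] * num_joints
    for _ in range(steps):
        phases = cpg_step(phases, dt)
    return phases
```

In a layered scheme of this kind, a higher layer could select quantities such as `amplitude`, `freq_hz`, or `offset`, and a lower tracking layer would follow the resulting joint targets; that division of labor is an assumption here, not a detail stated in the abstract.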
