Laura Lahesoo

Binary Anomaly Detection in Streaming IoT Traffic under Concept Drift

Oct 31, 2025

Abstract: With the growing volume of Internet of Things (IoT) network traffic, machine learning (ML)-based anomaly detection is more relevant than ever. Traditional batch learning models are costly to maintain and adapt poorly to rapid changes in anomaly patterns, a phenomenon known as concept drift. In contrast, streaming learning integrates online and incremental learning, enabling seamless updates and concept drift detection to improve robustness. This study investigates anomaly detection in streaming IoT traffic as a binary classification problem, comparing batch and streaming learning approaches while assessing the limitations of current IoT traffic datasets. We simulated heterogeneous network data streams by carefully mixing existing datasets and streaming the samples one by one. Our results highlight the failure of batch models to handle concept drift, but also reveal that current datasets, because of their low traffic heterogeneity, remain limited in their ability to expose model weaknesses. We also investigated the competitiveness of tree-based ML algorithms, which are well known in batch anomaly detection, and compared them with non-tree-based ones, confirming the advantages of the former. Adaptive Random Forest achieved an F1-score of 0.990 $\pm$ 0.006 at one-third the computational cost of its batch counterpart. Hoeffding Adaptive Tree reached an F1-score of 0.910 $\pm$ 0.007 while reducing computational cost by a factor of four, making it a viable choice for online applications despite a slight trade-off in stability.
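The contrast between batch and streaming learning under concept drift can be illustrated with a minimal sketch. It is not the study's setup: it assumes a synthetic two-feature stream whose labelling rule flips abruptly, and it swaps in a simple online logistic regression in place of the tree-based models named in the abstract. All names (`OnlineLogisticRegression`, `synthetic_stream`, `run`) and parameters (`drift_at`, `warmup`, `lr`) are hypothetical. A "frozen" model stops learning after a warm-up window, standing in for a batch model, while the "online" model is evaluated test-then-train on every sample.

```python
import math
import random


class OnlineLogisticRegression:
    """Logistic regression updated one sample at a time with SGD.

    Illustrative stand-in only; the abstract's models are tree-based.
    """

    def __init__(self, n_features, lr=0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        z = max(-30.0, min(30.0, z))  # clamp to keep exp() well-behaved
        return 1.0 / (1.0 + math.exp(-z))

    def predict(self, x):
        return 1 if self.predict_proba(x) >= 0.5 else 0

    def learn_one(self, x, y):
        err = self.predict_proba(x) - y  # gradient of log loss w.r.t. z
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


def synthetic_stream(n, drift_at, seed=0):
    """Yield (x, y) pairs; the labelling rule flips at index `drift_at`.

    Assumption: abrupt drift on made-up data, not real IoT traffic.
    """
    rng = random.Random(seed)
    for i in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        anomalous = x[0] + x[1] > 0
        if i >= drift_at:
            anomalous = not anomalous
        yield x, int(anomalous)


def f1_score(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def run(n=4000, drift_at=2000, warmup=500):
    """Return post-drift F1 for a frozen model and an online model."""
    frozen = OnlineLogisticRegression(2)  # trained on the warm-up only
    online = OnlineLogisticRegression(2)  # keeps learning on every sample
    counts = {"frozen": [0, 0, 0], "online": [0, 0, 0]}  # tp, fp, fn
    for i, (x, y) in enumerate(synthetic_stream(n, drift_at)):
        if i >= drift_at:  # score only samples seen after the drift
            for name, model in (("frozen", frozen), ("online", online)):
                pred = model.predict(x)
                c = counts[name]
                c[0] += pred == 1 and y == 1
                c[1] += pred == 1 and y == 0
                c[2] += pred == 0 and y == 1
        # Test-then-train: the prediction above precedes this update.
        online.learn_one(x, y)
        if i < warmup:
            frozen.learn_one(x, y)
    return {name: f1_score(*c) for name, c in counts.items()}
```

Calling `run()` returns the post-drift F1-score of each model. On this toy stream the frozen model keeps applying the pre-drift rule, whereas the online model relearns the flipped rule after a short recovery period.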

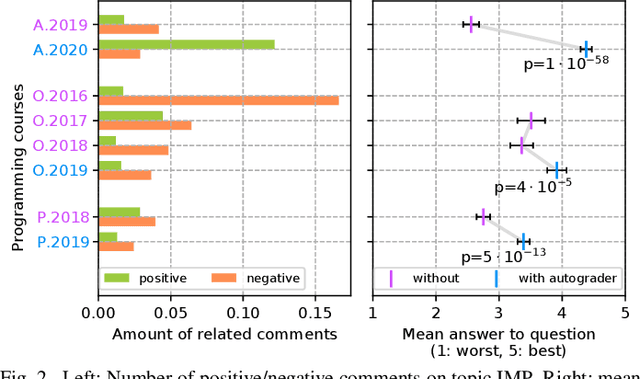

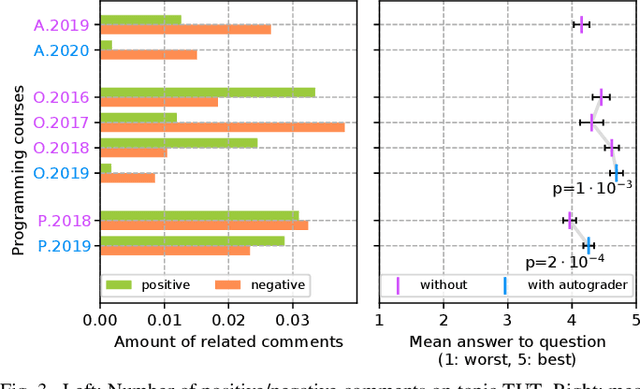

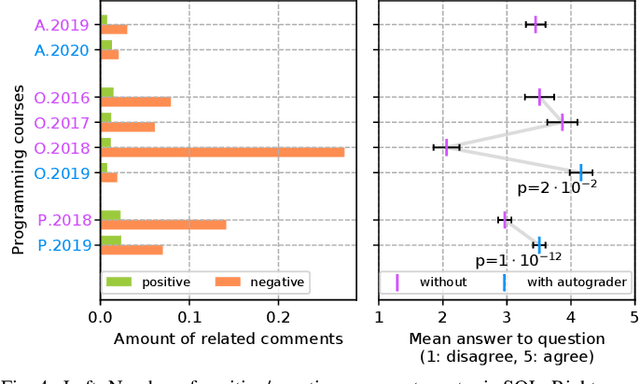

An Analysis of Programming Course Evaluations Before and After the Introduction of an Autograder

Oct 28, 2021

Abstract: Introductory programming courses in higher education institutions commonly have hundreds of participating students eager to learn to program. At this scale, the manual effort of reviewing submitted source code and providing feedback can no longer be managed. Manual review of submitted homework can also be subjective and unfair, particularly if many tutors are responsible for grading. Autograders can help in this situation; however, little is known about how autograders affect students' overall perception of programming classes and teaching. This knowledge is relevant for course organizers and institutions that want to keep their programming courses attractive while coping with increasing numbers of students. This paper studies the answers to the standardized university evaluation questionnaires of multiple large-scale foundational computer science courses that recently introduced autograding. The differences before and after this intervention are analyzed. By incorporating additional observations, we hypothesize how the autograder might have contributed to the significant changes in the data, such as improved interactions between tutors and students, improved overall course quality, improved learning success, increased time spent, and reduced difficulty. This qualitative study aims to provide hypotheses for future research to define and conduct quantitative surveys and data analysis. The autograder technology can be validated as a teaching method to improve student satisfaction with programming courses.

* Accepted as a full paper at IEEE ITHET 2021
