Laura Greige

Collusion Detection in Team-Based Multiplayer Games

Mar 10, 2022

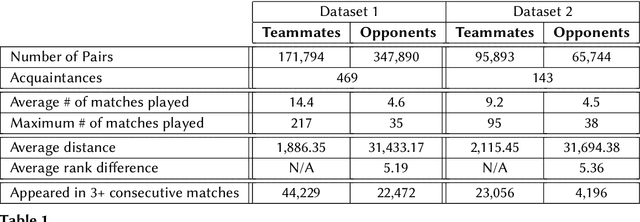

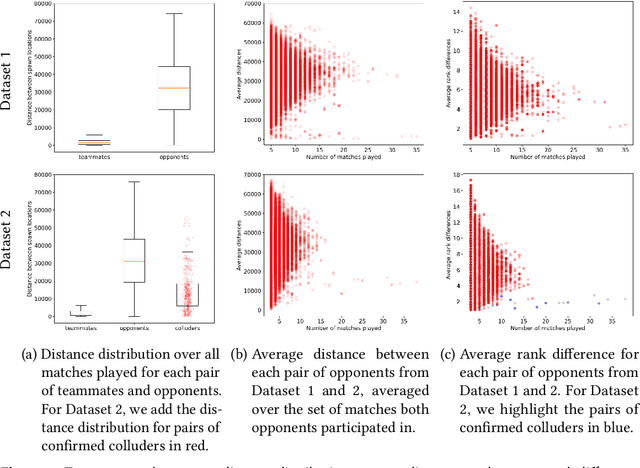

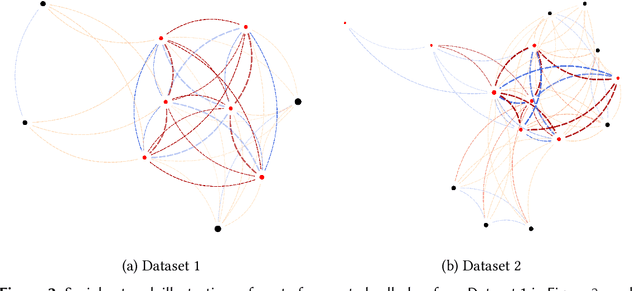

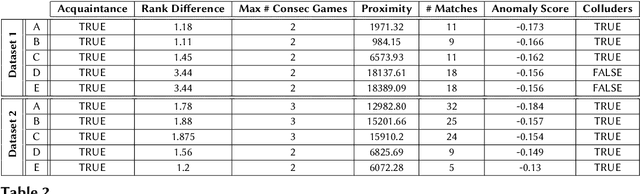

Abstract: In the context of competitive multiplayer games, collusion happens when two or more teams decide to collaborate towards a common goal, with the intention of gaining an unfair advantage from this cooperation. Identifying colluders manually is, however, infeasible for game designers due to the sheer size of the player population. In this paper, we propose a system that detects colluding behaviors in team-based multiplayer games and highlights the players that most likely exhibit colluding behaviors. The game designers then proceed to analyze a smaller subset of players and decide what action to take. For this reason, it is important to be extremely careful with false positives when automating the detection. The proposed method analyzes the players' social relationships paired with their in-game behavioral patterns and, using tools from graph theory, infers a feature set that allows us to detect and measure the degree of collusion exhibited by each pair of players from opposing teams. We then automate the detection using Isolation Forest, an unsupervised learning technique specialized in highlighting outliers, and show the performance and efficiency of our approach on two real datasets, each with over 170,000 unique players and over 100,000 different matches.
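To make the outlier-detection step concrete, here is a minimal sketch of the Isolation Forest idea written from scratch in plain Python. It is not the paper's implementation: the two per-pair features (`matches_as_opponents` and `attack_rate`), the synthetic data, and all function names are hypothetical and chosen only for illustration. The abstract does not list the actual feature set. The tree builder is also simplified: it stops splitting a node when the randomly chosen feature is constant there.

```python
import math
import random


def c_factor(n):
    """Average path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + 0.5772156649) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random threshold."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:  # simplification: give up on this node if the feature is constant
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return {
        "dim": dim,
        "split": split,
        "left": build_tree(left, depth + 1, max_depth, rng),
        "right": build_tree(right, depth + 1, max_depth, rng),
    }


def path_length(tree, point, depth=0):
    """Depth at which `point` lands, plus a correction for unsplit leaves."""
    if "split" not in tree:
        return depth + c_factor(tree["size"])
    child = tree["left"] if point[tree["dim"]] < tree["split"] else tree["right"]
    return path_length(child, point, depth + 1)


def anomaly_scores(points, n_trees=100, sample_size=256, seed=0):
    """Return one score in (0, 1) per point; higher means easier to isolate."""
    rng = random.Random(seed)
    sample_size = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [
        build_tree(rng.sample(points, sample_size), 0, max_depth, rng)
        for _ in range(n_trees)
    ]
    norm = c_factor(sample_size)
    return [
        2.0 ** (-(sum(path_length(t, p) for t in trees) / n_trees) / norm)
        for p in points
    ]


# Hypothetical pair features: (matches_as_opponents, attack_rate).
data_rng = random.Random(42)
pairs = [(data_rng.uniform(1, 10), data_rng.uniform(0.3, 0.7)) for _ in range(200)]
# One made-up suspicious pair: meets very often as opponents, almost never attacks.
pairs.append((60.0, 0.02))

scores = anomaly_scores(pairs)
most_suspicious = max(range(len(pairs)), key=lambda i: scores[i])
print(most_suspicious, round(scores[most_suspicious], 3))
```

Points that are separated from the rest after only a few random splits get short average path lengths and therefore high scores. In a setting like the one the abstract describes, only the highest-scoring pairs would be passed on for manual review, which is one way to keep the number of false positives that reach the designers small.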

Reinforcement Learning in FlipIt

Feb 28, 2020

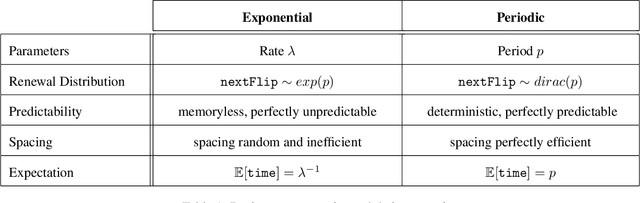

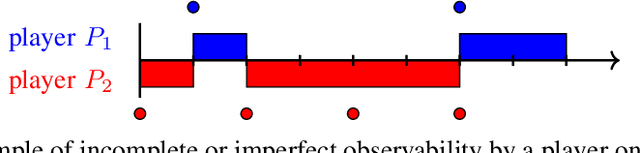

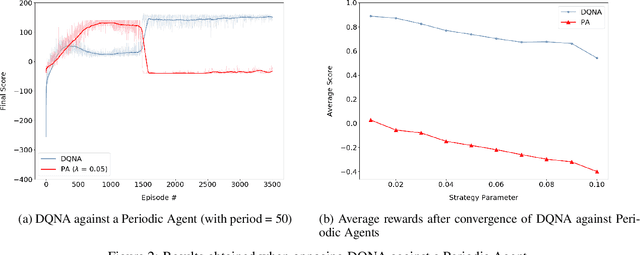

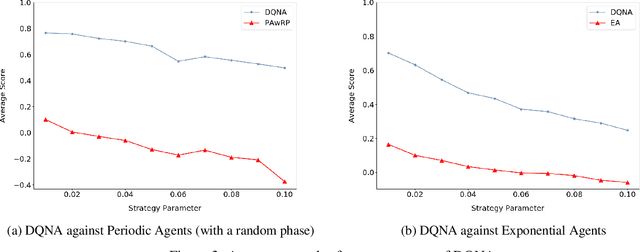

Abstract: Reinforcement learning has shown much success in games such as chess, backgammon and Go. However, in most of these games, agents have full knowledge of the environment at all times. In this paper, we describe a deep learning model that successfully optimizes its score using reinforcement learning in a game with incomplete and imperfect information. We apply our model to FlipIt, a two-player game in which both players, the attacker and the defender, compete for ownership of a shared resource and only receive information on the current state (such as the current owner of the resource or the time since the opponent last moved) upon making a move. Our model is a deep neural network combined with Q-learning and is trained to maximize the defender's time of ownership of the resource. Despite the imperfect observations, our model successfully learns an optimal cost-effective counter-strategy and shows the advantages of using deep reinforcement learning in game-theoretic scenarios. Our results show that it outperforms the Greedy strategy against distributions such as periodic and exponential distributions without any prior knowledge of the opponent's strategy, and we generalize the model to $n$-player games.
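The game mechanics described above can be illustrated with a small simulation. This is only a sketch of the environment, not the paper's deep Q-learning model, and it rests on several assumptions that the abstract does not state: time is discrete, both players move periodically, the defender owns the resource at the start, the defender wins simultaneous moves, and the payoff is ownership time minus a fixed cost per move. The function name `simulate_flipit` and all parameter names are hypothetical.

```python
def simulate_flipit(defender_period, attacker_period, attacker_phase,
                    horizon, move_cost):
    """Simulate discrete-time FlipIt with two periodic players.

    Returns the defender's owned ticks, move count, payoff, and the
    observations the defender received (only at ticks where it moved).
    """
    owner = "defender"          # assumption: defender starts as owner
    owned_ticks = 0
    defender_moves = 0
    last_attacker_move = None
    observations = []

    for t in range(1, horizon + 1):
        attacker_flips = (t >= attacker_phase
                          and (t - attacker_phase) % attacker_period == 0)
        defender_flips = t % defender_period == 0

        if attacker_flips:
            owner = "attacker"
            last_attacker_move = t

        if defender_flips:
            # Feedback arrives only upon moving: who owned the resource just
            # before this flip, and how long ago the opponent last moved.
            since = None if last_attacker_move is None else t - last_attacker_move
            observations.append((t, owner, since))
            owner = "defender"  # assumption: defender wins simultaneous moves
            defender_moves += 1

        if owner == "defender":
            owned_ticks += 1

    return {
        "owned_ticks": owned_ticks,
        "defender_moves": defender_moves,
        "payoff": owned_ticks - move_cost * defender_moves,
        "observations": observations,
    }


result = simulate_flipit(defender_period=5, attacker_period=10,
                         attacker_phase=3, horizon=20, move_cost=1.0)
print(result["owned_ticks"], result["defender_moves"], result["payoff"])
# → 16 4 12.0
```

In this run the defender moves at ticks 5, 10, 15 and 20 while the attacker moves at ticks 3 and 13, so two of the four defender moves are spent on a resource the defender already owns. A learning agent in this setting would have to trade off such wasted moves against lost ownership time using only the sparse observations it receives when it moves.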
