Reinforcement Learning in FlipIt


Feb 28, 2020

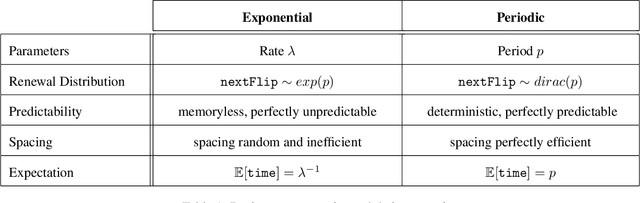

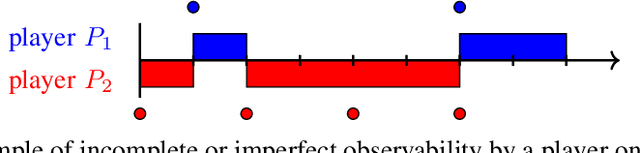

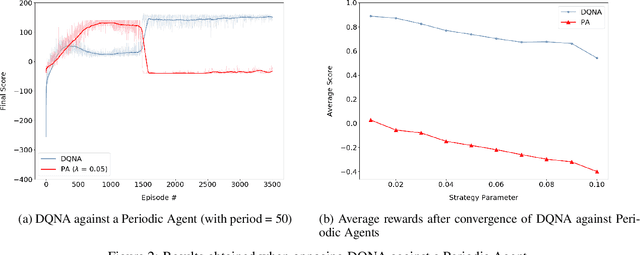

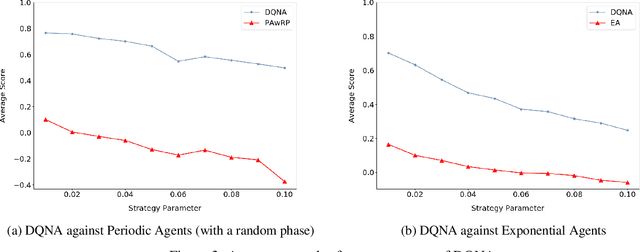

Reinforcement learning has shown much success in games such as chess, backgammon, and Go. In most of these games, however, agents have full knowledge of the environment at all times. In this paper, we describe a deep learning model that successfully optimizes its score using reinforcement learning in a game with incomplete and imperfect information. We apply our model to FlipIt, a two-player game in which the attacker and the defender compete for ownership of a shared resource and receive information about the current state (such as the current owner of the resource or the time since the opponent last moved) only upon making a move. Our model is a deep neural network combined with Q-learning and is trained to maximize the defender's time of ownership of the resource. Despite the imperfect observations, our model successfully learns an optimal, cost-effective counter-strategy and shows the advantages of deep reinforcement learning in game-theoretic scenarios. Our results show that, without any prior knowledge of the opponent's strategy, it outperforms the Greedy strategy against opponent strategies such as periodic and exponential distributions, and we generalize the model to $n$-player games.
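
To make the game mechanics concrete, the following is a minimal sketch of a discrete-time FlipIt round, not the paper's deep Q-learning model. It assumes both players move periodically, that the defender owns the resource at the start, that the defender wins simultaneous moves, and that the defender's benefit is its ownership time minus a fixed cost per move. The function name `simulate_flipit` and all parameter names are hypothetical. The sketch also records what the defender observes, which happens only when it moves.

```python
def simulate_flipit(defender_period, attacker_period, horizon, move_cost):
    """Toy discrete-time FlipIt with two periodic players (illustrative only).

    Returns (defender_benefit, observations). Each observation is
    (time, steps since the attacker last moved), recorded only when the
    defender moves, mirroring the game's move-triggered feedback.
    """
    owner = "defender"          # assumption: defender owns the resource at t = 0
    last_attacker_move = None   # hidden from the defender between its moves
    owned_steps = 0
    defender_move_count = 0
    observations = []

    for t in range(1, horizon + 1):
        if t % attacker_period == 0:
            # The attacker flips the resource silently.
            owner = "attacker"
            last_attacker_move = t
        if t % defender_period == 0:
            # Assumption: on simultaneous moves the defender ends up as owner.
            owner = "defender"
            defender_move_count += 1
            since = None if last_attacker_move is None else t - last_attacker_move
            observations.append((t, since))
        if owner == "defender":
            owned_steps += 1

    # Benefit trades ownership time against the cost of moving.
    benefit = owned_steps - move_cost * defender_move_count
    return benefit, observations


if __name__ == "__main__":
    benefit, observations = simulate_flipit(
        defender_period=4, attacker_period=5, horizon=20, move_cost=1
    )
    print("defender benefit:", benefit)
    print("observations at defender moves:", observations)
```

A learning agent would replace the fixed `defender_period` with a policy that decides at each step whether to flip, using only the observations gathered at its own earlier moves.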
