Lars Möllenbrok

Continual Self-Supervised Learning with Masked Autoencoders in Remote Sensing

Jun 26, 2025

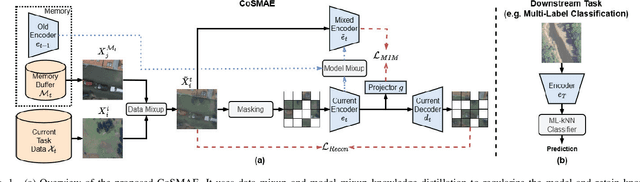

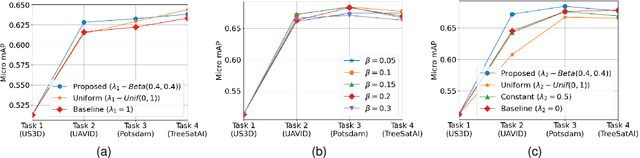

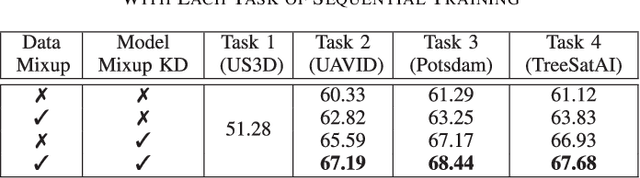

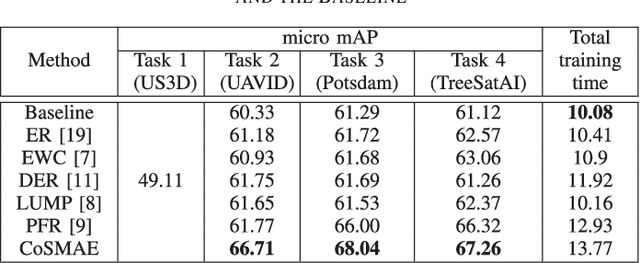

Abstract: The development of continual learning (CL) methods, which aim to learn new tasks sequentially from continuously acquired training data, has attracted great attention in remote sensing (RS). Existing CL methods in RS enhance robustness to catastrophic forgetting while learning new tasks. This is achieved by using a large number of labeled training samples, which is costly and not always feasible to gather in RS. To address this problem, we propose a novel continual self-supervised learning method in the context of masked autoencoders (denoted as CoSMAE). The proposed CoSMAE consists of two components: i) data mixup; and ii) model mixup knowledge distillation. Data mixup retains information on previous data distributions by interpolating images from the current task with those from previous tasks. Model mixup knowledge distillation distills knowledge from past models and the current model simultaneously by interpolating their model weights to form a teacher for the knowledge distillation. The two components complement each other to regularize the MAE at the data and model levels, which facilitates better generalization across tasks and reduces the risk of catastrophic forgetting. Experimental results show that CoSMAE achieves significant improvements of up to 4.94% over state-of-the-art CL methods applied to MAE. Our code is publicly available at: https://git.tu-berlin.de/rsim/CoSMAE.
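The two interpolation steps named in the abstract can be illustrated with a minimal NumPy sketch. It is not taken from the CoSMAE repository: the function names `data_mixup` and `model_mixup`, the coefficients `lam` and `alpha`, the Beta(1, 1) sampling, and the toy weight dictionaries are all assumptions made for illustration.

```python
import numpy as np

def data_mixup(current_images, previous_images, lam):
    """Convex combination of current-task and previous-task images."""
    return lam * current_images + (1.0 - lam) * previous_images

def model_mixup(past_weights, current_weights, alpha):
    """Interpolate two weight dictionaries parameter by parameter.

    The result plays the role of the teacher for knowledge distillation.
    """
    return {
        name: alpha * past_weights[name] + (1.0 - alpha) * current_weights[name]
        for name in current_weights
    }

rng = np.random.default_rng(0)

# Toy batches of shape (batch, channels, height, width).
current = rng.random((4, 3, 8, 8))
previous = rng.random((4, 3, 8, 8))
lam = rng.beta(1.0, 1.0)  # assumed sampling scheme, not confirmed by the abstract
mixed = data_mixup(current, previous, lam)

# Toy "models" represented as dictionaries of parameter arrays (hypothetical names).
past = {"encoder.w": np.ones((2, 2)), "encoder.b": np.zeros(2)}
curr = {"encoder.w": np.zeros((2, 2)), "encoder.b": np.ones(2)}
teacher = model_mixup(past, curr, alpha=0.5)
```

In a real training loop the teacher formed this way would supply distillation targets for the current model; that step depends on details the abstract does not give and is left out here.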

A Plasticity-Aware Method for Continual Self-Supervised Learning in Remote Sensing

Mar 31, 2025

Abstract: Continual self-supervised learning (CSSL) methods have gained increasing attention in remote sensing (RS) due to their capability to learn new tasks sequentially from continuous streams of unlabeled data. Existing CSSL methods, while learning new tasks, focus on preventing catastrophic forgetting. To this end, most of them use regularization strategies to retain knowledge of previous tasks. This reduces the model's ability to adapt to the data of new tasks (i.e., learning plasticity), which can degrade performance. To address this problem, we propose a novel CSSL method that aims to learn tasks sequentially while achieving high learning plasticity. The proposed method uses a knowledge distillation strategy with an integrated decoupling mechanism. The decoupling is achieved by first dividing the feature dimensions into task-common and task-specific parts. Then, the task-common features are forced to be correlated to ensure memory stability, while the task-specific features are forced to be de-correlated to facilitate the learning of new features. Experimental results show the effectiveness of the proposed method compared to CaSSLe, a widely used CSSL framework, with improvements of up to 1.12% in average accuracy and 2.33% in intransigence in a task-incremental scenario, and 1.24% in average accuracy and 2.01% in intransigence in a class-incremental scenario.
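One way to read the decoupling mechanism is as a per-dimension correlation penalty between features of the current model and features of a frozen previous model. The sketch below follows that reading only. The abstract does not state the actual loss, so the pairing of current and previous features, the squared penalties, and the names `decoupled_distillation_loss` and `n_common` are assumptions.

```python
import numpy as np

def decoupled_distillation_loss(student_feats, teacher_feats, n_common):
    """Illustrative decoupled loss over features of shape (batch, dim).

    The first `n_common` dimensions are treated as task-common and pushed
    towards correlation 1 with the teacher; the remaining dimensions are
    treated as task-specific and pushed towards correlation 0.
    """
    def standardize(x):
        # Zero mean and unit variance per feature dimension over the batch.
        return (x - x.mean(axis=0)) / (x.std(axis=0) + 1e-8)

    s = standardize(student_feats)
    t = standardize(teacher_feats)

    # Correlation between matching student and teacher dimensions.
    corr = (s * t).mean(axis=0)

    stability = np.sum((1.0 - corr[:n_common]) ** 2)  # task-common part
    plasticity = np.sum(corr[n_common:] ** 2)         # task-specific part
    return stability + plasticity

rng = np.random.default_rng(0)
feats = rng.normal(size=(64, 8))

# Identical features: no penalty if every dimension is task-common,
# maximal penalty if every dimension is task-specific.
loss_all_common = decoupled_distillation_loss(feats, feats, n_common=8)
loss_all_specific = decoupled_distillation_loss(feats, feats, n_common=0)
```

The split point `n_common` controls the trade-off in this sketch: more task-common dimensions favor memory stability, more task-specific dimensions favor plasticity.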

Annotation Cost Efficient Active Learning for Content Based Image Retrieval

Jun 26, 2023

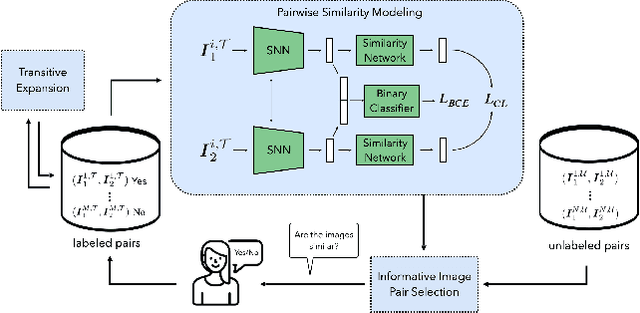

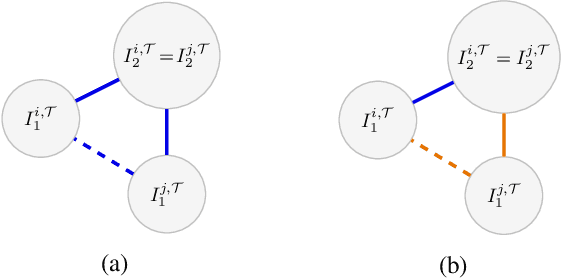

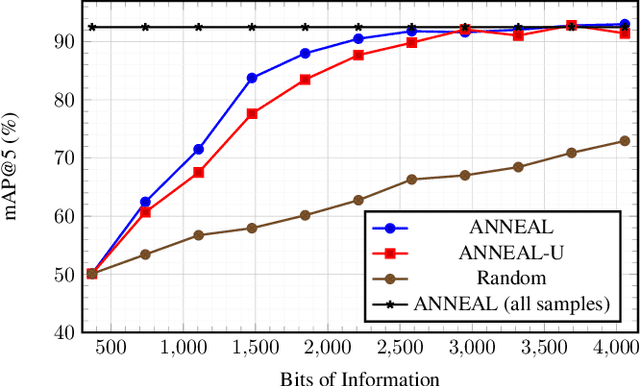

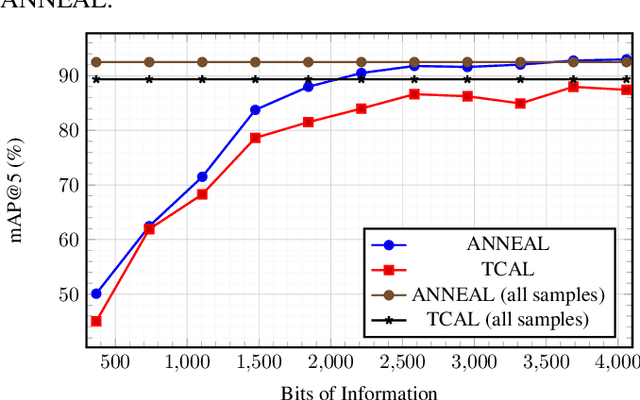

Abstract: Deep metric learning (DML) based methods have been found very effective for content-based image retrieval (CBIR) in remote sensing (RS). To accurately learn the model parameters of deep neural networks, most DML methods require a large number of annotated training images, which can be costly to gather. To address this problem, we present an annotation cost efficient active learning (AL) method (denoted as ANNEAL). The proposed method aims to iteratively enrich the training set by annotating the most informative image pairs as similar or dissimilar, while accurately modelling a deep metric space. This is achieved in two consecutive steps. In the first step, the pairwise image similarity is modelled based on the available training set. In the second step, the most uncertain and diverse (i.e., informative) image pairs are selected to be annotated. Unlike existing AL methods for CBIR, at each AL iteration of ANNEAL a human expert is asked to annotate the most informative image pairs as similar/dissimilar. This significantly reduces the annotation cost compared to annotating images with land-use/land-cover class labels. Experimental results show the effectiveness of our method. The code of ANNEAL is publicly available at https://git.tu-berlin.de/rsim/ANNEAL.

Active Learning Guided Fine-Tuning for enhancing Self-Supervised Based Multi-Label Classification of Remote Sensing Images

Jun 21, 2023

Abstract: In recent years, deep neural networks (DNNs) have been found very successful for multi-label classification (MLC) of remote sensing (RS) images. Self-supervised pre-training combined with fine-tuning on a small, randomly selected training set has become a popular approach to minimize the annotation effort of data-demanding DNNs. However, fine-tuning on a small and biased training set may limit model performance. To address this issue, we investigate the effectiveness of the joint use of self-supervised pre-training with active learning (AL). The considered AL strategy guides the MLC fine-tuning of a self-supervised model by iteratively selecting informative training samples to annotate. Experimental results show the effectiveness of AL-guided fine-tuning (particularly when strong class imbalance is present in MLC problems) compared to fine-tuning on a randomly constructed small training set.

Deep Active Learning for Multi-Label Classification of Remote Sensing Images

Dec 02, 2022

Abstract: The use of deep neural networks (DNNs) has recently attracted great attention in the framework of the multi-label classification (MLC) of remote sensing (RS) images. To optimize the large number of parameters of DNNs, a large number of reliable training images annotated with multi-labels is often required. However, the collection of a large training set is time-consuming, complex and costly. To minimize annotation efforts for data-demanding DNNs, we present several query functions for active learning (AL) in the context of DNNs for the MLC of RS images. Unlike the AL query functions defined for single-label classification or semantic segmentation problems, each query function presented in this paper is based on the evaluation of two criteria: i) multi-label uncertainty; and ii) multi-label diversity. The multi-label uncertainty criterion is associated with the confidence of the DNNs in correctly assigning multi-labels to each image. To assess the multi-label uncertainty, we present and adapt to MLC problems three strategies: i) learning multi-label loss ordering; ii) measuring temporal discrepancy of multi-label prediction; and iii) measuring magnitude of approximated gradient embedding. The multi-label diversity criterion aims at selecting a set of uncertain images that are as diverse as possible to reduce the redundancy among them. To assess this criterion, we exploit a clustering-based strategy. We combine each of the above-mentioned uncertainty strategies with the clustering-based diversity strategy, resulting in three different query functions. Experimental results obtained on two benchmark archives show that our query functions result in the selection of a highly informative set of samples at each iteration of the AL process in the context of MLC.
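The combination of an uncertainty score with clustering-based diversity can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: `multilabel_uncertainty` is a hypothetical stand-in for any of the three uncertainty strategies, the small `kmeans` helper and the `pool_factor` parameter are illustrative choices, and the random arrays replace real DNN outputs.

```python
import numpy as np

def multilabel_uncertainty(probs):
    """Hypothetical stand-in score: highest when label probabilities are near 0.5."""
    return (1.0 - np.abs(2.0 * probs - 1.0)).mean(axis=1)

def kmeans(features, k, n_iters=20, seed=0):
    """Plain k-means; returns the cluster label of each row of `features`."""
    rng = np.random.default_rng(seed)
    centers = features[rng.choice(len(features), size=k, replace=False)]
    for _ in range(n_iters):
        dists = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for c in range(k):
            members = features[labels == c]
            if len(members) > 0:
                centers[c] = members.mean(axis=0)
    return labels

def query(uncertainty, features, budget, pool_factor=3):
    """Select up to `budget` samples that are both uncertain and diverse."""
    # Step 1 (uncertainty): keep the most uncertain candidates.
    n_candidates = min(len(uncertainty), budget * pool_factor)
    candidates = np.argsort(-uncertainty)[:n_candidates]
    # Step 2 (diversity): cluster the candidates and take the most
    # uncertain sample of each non-empty cluster.
    labels = kmeans(features[candidates], k=budget)
    selected = []
    for c in range(budget):
        members = candidates[labels == c]
        if len(members) > 0:
            selected.append(int(members[np.argmax(uncertainty[members])]))
    return selected

rng = np.random.default_rng(0)
probs = rng.random((200, 10))            # placeholder multi-label probabilities
features = rng.normal(size=(200, 16))    # placeholder image embeddings
scores = multilabel_uncertainty(probs)
selected = query(scores, features, budget=5)
```

Swapping `multilabel_uncertainty` for a different score while keeping `query` unchanged mirrors how the abstract pairs each uncertainty strategy with the same diversity step.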
