Larissa Putzar

Unimodal and Multimodal Static Facial Expression Recognition for Virtual Reality Users with EmoHeVRDB

Dec 15, 2024

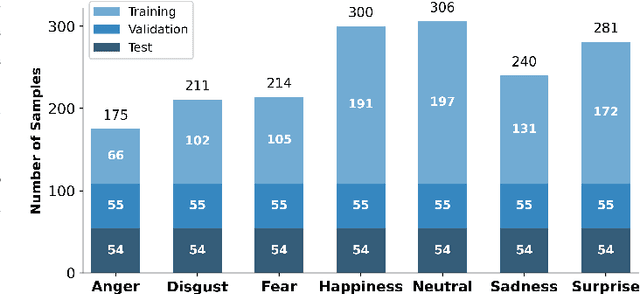

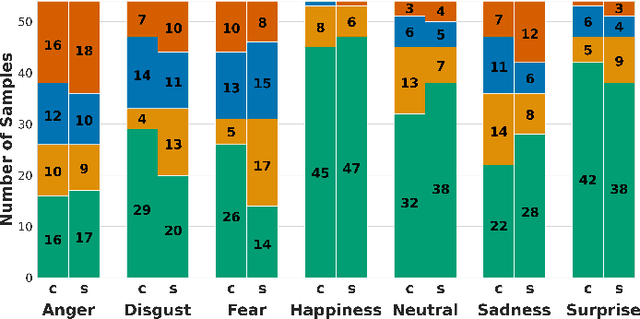

Abstract: In this study, we explored the potential of utilizing Facial Expression Activations (FEAs) captured via the Meta Quest Pro Virtual Reality (VR) headset for Facial Expression Recognition (FER) in VR settings. Leveraging the EmojiHeroVR Database (EmoHeVRDB), we compared several unimodal approaches and achieved up to 73.02% accuracy for the static FER task with seven emotion categories. Furthermore, we integrated FEA and image data in multimodal approaches, observing significant improvements in recognition accuracy. An intermediate fusion approach achieved the highest accuracy of 80.42%, significantly surpassing the baseline evaluation result of 69.84% reported for EmoHeVRDB's image data. Our study is the first to utilize EmoHeVRDB's unique FEA data for unimodal and multimodal static FER, establishing new benchmarks for FER in VR settings. Our findings highlight the potential of fusing complementary modalities to enhance FER accuracy in VR settings, where conventional image-based methods are severely limited by the occlusion caused by Head-Mounted Displays (HMDs).

EmojiHeroVR: A Study on Facial Expression Recognition under Partial Occlusion from Head-Mounted Displays

Oct 04, 2024

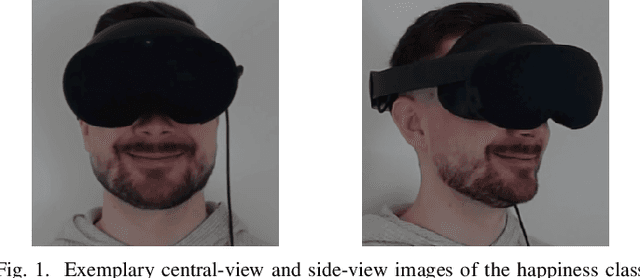

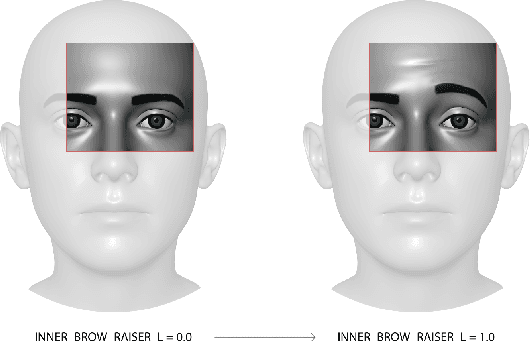

Abstract: Emotion recognition promotes the evaluation and enhancement of Virtual Reality (VR) experiences by providing emotional feedback and enabling advanced personalization. However, facial expressions are rarely used to recognize users' emotions, as Head-Mounted Displays (HMDs) occlude the upper half of the face. To address this issue, we conducted a study with 37 participants who played our novel affective VR game EmojiHeroVR. The collected database, EmoHeVRDB (EmojiHeroVR Database), includes 3,556 labeled facial images of 1,778 reenacted emotions. For each labeled image, we also provide 29 additional frames recorded directly before and after the labeled image to facilitate dynamic Facial Expression Recognition (FER). Additionally, EmoHeVRDB includes data on the activations of 63 facial expressions captured via the Meta Quest Pro VR headset for each frame. Leveraging our database, we conducted a baseline evaluation on the static FER classification task with six basic emotions and neutral using the EfficientNet-B0 architecture. The best model achieved an accuracy of 69.84% on the test set, indicating that FER under HMD occlusion is feasible but significantly more challenging than conventional FER.

Realtime Spectrum Monitoring via Reinforcement Learning -- A Comparison Between Q-Learning and Heuristic Methods

Jul 11, 2023

Abstract: Due to technological advances in the field of radio technology and its availability, the number of interference signals in the radio spectrum is continuously increasing. Interference signals must be detected in a timely fashion, in order to maintain standards and keep emergency frequencies open. To this end, specialized (multi-channel) receivers are used for spectrum monitoring. In this paper, the performances of two different approaches for controlling the available receiver resources are compared. The methods used for resource management (ReMa) are linear frequency tuning as a heuristic approach and a Q-learning algorithm from the field of reinforcement learning. To test the methods to be investigated, a simplified scenario was designed with two receiver channels monitoring ten non-overlapping frequency bands with non-uniform signal activity. For this setting, it is shown that the Q-learning algorithm used has a significantly higher detection rate than the heuristic approach at the expense of a smaller exploration rate. In particular, the Q-learning approach can be parameterized to allow for a suitable trade-off between detection and exploration rate.
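The scenario in this abstract (two receiver channels, ten non-overlapping bands, non-uniform activity) can be illustrated with a small toy simulation. The sketch below is not the paper's implementation. It assumes that "linear frequency tuning" means stepping through the bands in order, it uses a single-state (bandit-style) simplification of tabular Q-learning with epsilon-greedy action selection, and it defines the detection rate as the share of all occurring signals that fall on a currently tuned band. The activity probabilities, the step count, and the values of `alpha`, `gamma`, and `epsilon` are hypothetical.

```python
import itertools
import random

NUM_BANDS = 10      # non-overlapping frequency bands (from the abstract)
NUM_CHANNELS = 2    # receiver channels (from the abstract)
STEPS = 20_000      # hypothetical simulation length

# Hypothetical non-uniform activity: chance that a band carries a signal in a step.
ACTIVITY = [0.05] * NUM_BANDS
ACTIVITY[3], ACTIVITY[7] = 0.9, 0.8

# Every way of tuning the two channels to two distinct bands.
ACTIONS = list(itertools.combinations(range(NUM_BANDS), NUM_CHANNELS))


def simulate_signals(rng):
    """Draw, for every step, the set of bands that carry a signal."""
    return [
        {b for b in range(NUM_BANDS) if rng.random() < ACTIVITY[b]}
        for _ in range(STEPS)
    ]


def linear_sweep(signals):
    """Assumed heuristic: step through the bands in order, two at a time."""
    detected = 0
    for t, active in enumerate(signals):
        start = (t * NUM_CHANNELS) % NUM_BANDS
        tuned = {(start + c) % NUM_BANDS for c in range(NUM_CHANNELS)}
        detected += len(active & tuned)
    return detected


def q_learning(signals, rng, alpha=0.1, gamma=0.5, epsilon=0.1):
    """Single-state tabular Q-learning with epsilon-greedy band selection."""
    q = [0.0] * len(ACTIONS)  # one state only, so one value per action
    detected = 0
    for active in signals:
        if rng.random() < epsilon:
            a = rng.randrange(len(ACTIONS))                   # explore
        else:
            a = max(range(len(ACTIONS)), key=q.__getitem__)   # exploit
        reward = len(active & set(ACTIONS[a]))  # signals seen in this step
        q[a] += alpha * (reward + gamma * max(q) - q[a])
        detected += reward
    return detected, q


rng = random.Random(0)
signals = simulate_signals(rng)
total = sum(len(active) for active in signals)

sweep_rate = linear_sweep(signals) / total
q_detected, q_values = q_learning(signals, rng)
q_rate = q_detected / total

print(f"linear sweep detection rate: {sweep_rate:.2f}")
print(f"Q-learning detection rate:   {q_rate:.2f}")
```

In this toy setup, `epsilon` is one possible knob for the trade-off the abstract mentions: a larger value spends more steps on randomly chosen bands, while a smaller value concentrates the channels on the bands that have been most active so far.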
