Lanbo Zhang

Automatic Historical Feature Generation through Tree-based Method in Ads Prediction

Dec 31, 2020

Abstract: Historical features are important in ads click-through rate (CTR) prediction because they account for past engagements between users and ads. In this paper, we study how to efficiently construct historical features through counting features. The key challenge lies in automatically identifying counting keys. We propose a tree-based method for counting key selection. The intuition is that a decision tree naturally provides various combinations of features, which can be used as counting key candidates. To select personalized counting features, we train one decision tree model per user, and the counting keys are selected across different users with a frequency-based importance measure. To validate the effectiveness of the proposed solution, we conduct large-scale experiments on Twitter video advertising data. In both online learning and offline training settings, the automatically identified counting features outperform the manually curated counting features.
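The selection idea in this abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: the nested-dict tree encoding, the function names `path_feature_sets`, `select_counting_keys`, and `count_features`, and the feature names in the usage note are all hypothetical. The frequency-based importance measure is assumed here to be the number of users whose tree contains a given feature combination, which the abstract does not specify.

```python
from collections import Counter

# Hypothetical tree encoding (an assumption, not the paper's format):
# an internal node is {"feature": name, "left": subtree, "right": subtree};
# a leaf is None.


def path_feature_sets(node, prefix=frozenset()):
    """Yield the set of features used on each root-to-leaf path."""
    if node is None:
        if prefix:  # skip the degenerate case of an empty tree
            yield prefix
        return
    used = prefix | {node["feature"]}
    yield from path_feature_sets(node["left"], used)
    yield from path_feature_sets(node["right"], used)


def select_counting_keys(user_trees, top_k):
    """Rank candidate keys by how many per-user trees contain them.

    Assumed importance measure: each user votes once per distinct
    feature combination found in that user's tree.
    """
    votes = Counter()
    for tree in user_trees.values():
        for combo in set(path_feature_sets(tree)):
            votes[tuple(sorted(combo))] += 1
    # Sort by descending vote count, then by key, so ties are deterministic.
    ranked = sorted(votes.items(), key=lambda item: (-item[1], item[0]))
    return [key for key, _ in ranked[:top_k]]


def count_features(events, key):
    """For each event, return (impressions, clicks) seen so far for its key value.

    Counts are read before the current event is added, so an event
    never sees its own label.
    """
    counts = {}
    features = []
    for event in events:
        value = tuple(event[name] for name in key)
        impressions, clicks = counts.get(value, (0, 0))
        features.append((impressions, clicks))
        counts[value] = (impressions + 1, clicks + event["click"])
    return features
```

As a usage example, given trees for three users in which two users split on both `advertiser_id` and `video_category`, `select_counting_keys(user_trees, 1)` would return that pair as the top counting key, and `count_features(events, key)` would then produce running impression and click counts for each value of the pair. Reading the counts before updating them is a design choice that keeps the feature strictly historical.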

Managing Diversity in Airbnb Search

Mar 31, 2020

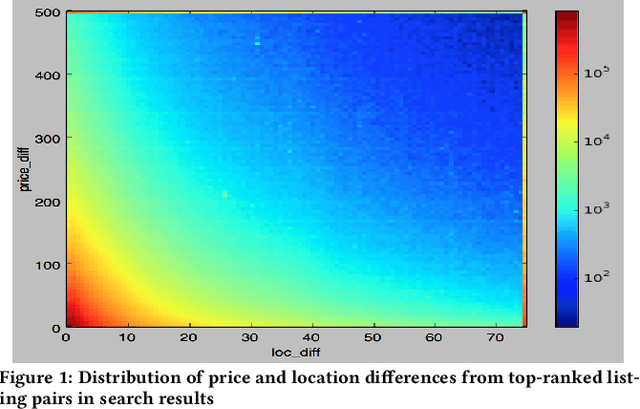

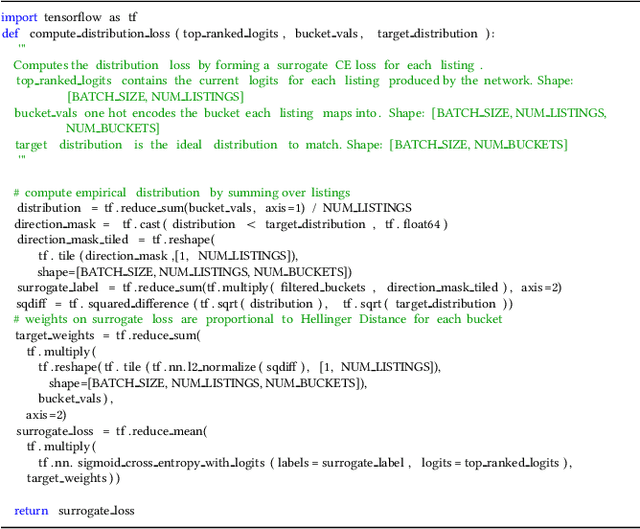

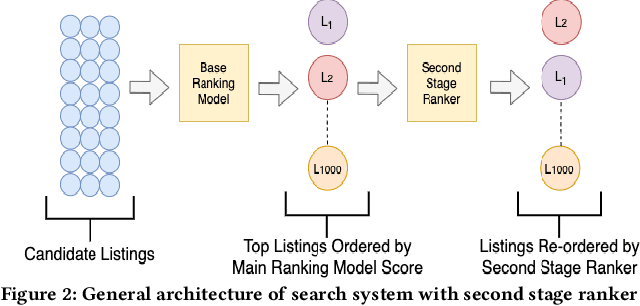

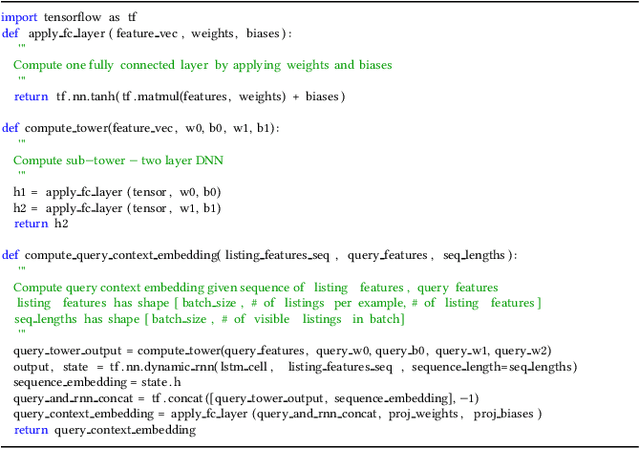

Abstract: One of the long-standing questions in search systems is the role of diversity in results. From a product perspective, showing diverse results provides the user with more choice and should lead to an improved experience. However, this intuition is at odds with common machine learning approaches to ranking, which directly optimize the relevance of each individual item without a holistic view of the result set. In this paper, we describe our journey in tackling the problem of diversity for Airbnb search, starting from heuristic-based approaches and concluding with a novel deep learning solution that produces an embedding of the entire query context by leveraging Recurrent Neural Networks (RNNs). We hope our lessons learned will prove useful to others and motivate further research in this area.

Improving Deep Learning For Airbnb Search

Feb 10, 2020

Abstract: The application of deep learning to search ranking was one of the most impactful product improvements at Airbnb. But what comes next after you launch a deep learning model? In this paper, we describe the journey beyond, discussing what we refer to as the ABCs of improving search: A for architecture, B for bias, and C for cold start. For architecture, we describe a new ranking neural network, focusing on the process that evolved our existing DNN beyond a fully connected two-layer network. On handling positional bias in ranking, we describe a novel approach that led to one of the most significant improvements in tackling inventory that the DNN historically found challenging. To solve cold start, we describe our perspective on the problem and changes we made to improve the treatment of new listings on the platform. We hope ranking teams transitioning to deep learning will find this a practical case study of how to iterate on DNNs.
