Lakshay Narula

All-Weather sub-50-cm Radar-Inertial Positioning

Sep 09, 2020

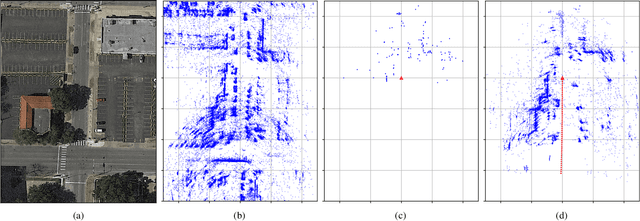

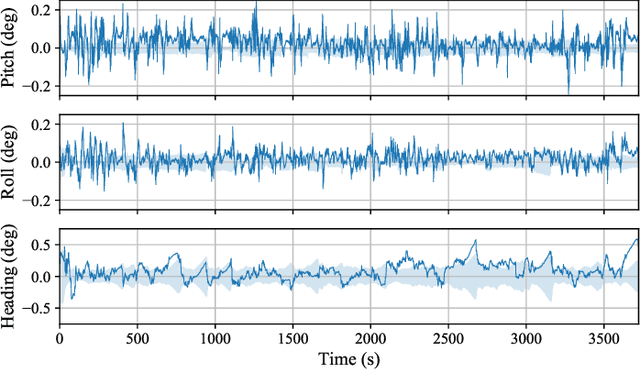

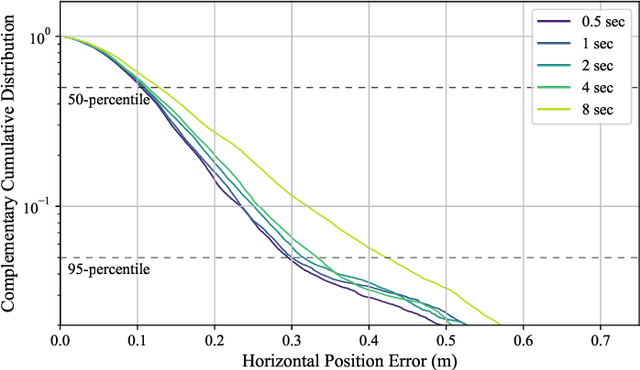

Abstract: Deployment of automated ground vehicles beyond the confines of sunny and dry climes will require sub-lane-level positioning techniques based on radio waves rather than near-visible-light radiation. Like human sight, lidar and cameras perform poorly in low-visibility conditions. This paper develops and demonstrates a novel technique for robust sub-50-cm-accurate urban ground vehicle positioning based on all-weather sensors. The technique incorporates a computationally efficient, globally optimal radar scan batch registration algorithm into a larger estimation pipeline that fuses data from commercially available low-cost automotive radars, low-cost inertial sensors, vehicle motion constraints, and, when available, precise GNSS measurements. Performance is evaluated on an extensive and realistic urban data set. Comparison against ground truth shows that during 60 minutes of GNSS-denied driving in the urban center of Austin, TX, the technique maintains 95th-percentile errors below 50 cm in horizontal position and 0.5 degrees in heading.
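The headline figures in this abstract are 95th-percentile errors against ground truth. As a minimal sketch of how such a statistic could be computed, the following assumes estimated and true horizontal positions are given as (x, y) pairs in meters and headings in degrees. The function names and the linear-interpolation percentile are illustrative assumptions, not the authors' evaluation code.

```python
import math


def percentile(values, q):
    """Percentile q (0 to 100) of a non-empty list, with linear interpolation."""
    ordered = sorted(values)
    rank = (len(ordered) - 1) * q / 100.0
    lo = math.floor(rank)
    hi = math.ceil(rank)
    frac = rank - lo
    return ordered[lo] * (1.0 - frac) + ordered[hi] * frac


def horizontal_errors(estimates, truth):
    """Euclidean distance in the horizontal plane for each (x, y) pair."""
    return [
        math.hypot(ex - tx, ey - ty)
        for (ex, ey), (tx, ty) in zip(estimates, truth)
    ]


def heading_errors_deg(estimates, truth):
    """Absolute heading error in degrees, wrapped so 359 vs 1 gives 2."""
    return [
        abs((e - t + 180.0) % 360.0 - 180.0)
        for e, t in zip(estimates, truth)
    ]
```

A trajectory would meet the stated bounds if `percentile(horizontal_errors(est, ref), 95)` is below 0.5 (meters) and `percentile(heading_errors_deg(est_hdg, ref_hdg), 95)` is below 0.5 (degrees).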

TEX-CUP: The University of Texas Challenge for Urban Positioning

May 02, 2020

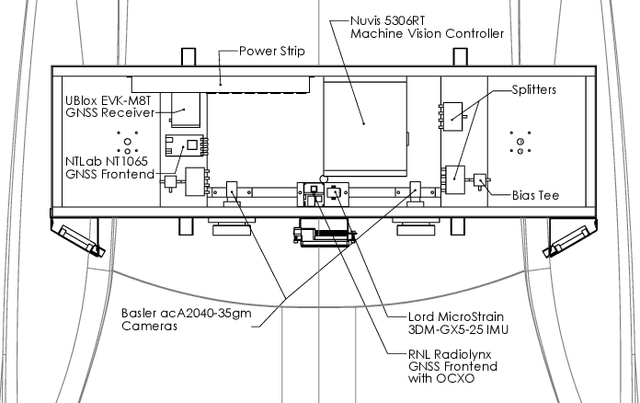

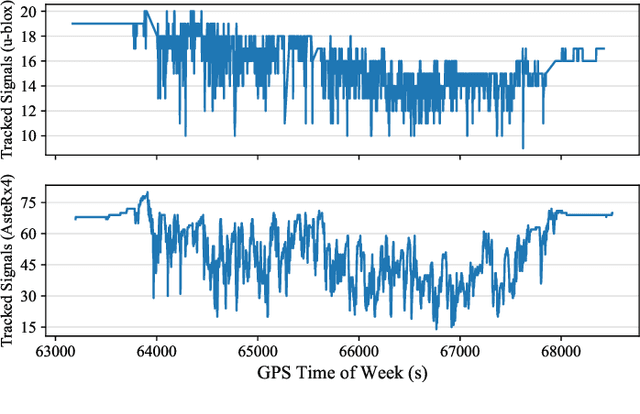

Abstract: A public benchmark dataset collected in the dense urban center of the city of Austin, TX is introduced for evaluation of multi-sensor GNSS-based urban positioning. Existing public datasets on localization and/or odometry evaluation are based on sensors such as lidar, cameras, and radar. The role of GNSS in these datasets is typically limited to the generation of a reference trajectory in conjunction with a high-end inertial navigation system (INS). In contrast, the dataset introduced in this paper provides raw ADC output of wideband intermediate frequency (IF) GNSS data along with tightly synchronized raw measurements from inertial measurement units (IMUs) and a stereoscopic camera unit. This dataset will enable optimization of the full GNSS stack from signal tracking to state estimation, as well as sensor fusion with other automotive sensors. The dataset is available at http://radionavlab.ae.utexas.edu under Public Datasets. Efforts to collect and share similar datasets from a number of dense urban centers around the world are under way.

Automotive-Radar-Based 50-cm Urban Positioning

May 02, 2020

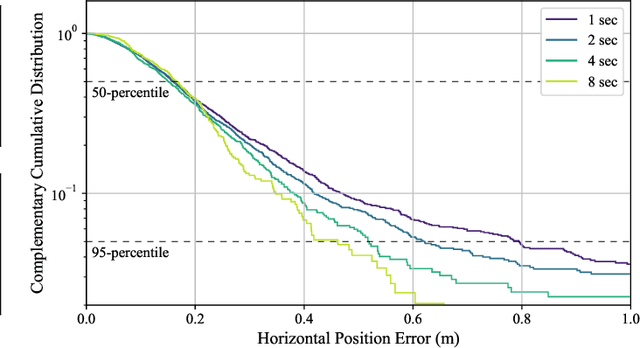

Abstract: Deployment of automated ground vehicles (AGVs) beyond the confines of sunny and dry climes will require sub-lane-level positioning techniques based on radio waves rather than near-visible-light radiation. Like human sight, lidar and cameras perform poorly in low-visibility conditions. This paper develops and demonstrates a novel technique for robust 50-cm-accurate urban ground positioning based on commercially available low-cost automotive radars. The technique is computationally efficient yet obtains a globally optimal translation and heading solution, avoiding local minima caused by repeating patterns in the urban radar environment. Performance is evaluated on an extensive and realistic urban data set. Comparison against ground truth shows that, when coupled with stable short-term odometry, the technique maintains 95th-percentile errors below 50 cm in horizontal position and 1 degree in heading.

Automotive Collision Risk Estimation Under Cooperative Sensing

Apr 21, 2020

Abstract: This paper offers a technique for estimating collision risk for automated ground vehicles engaged in cooperative sensing. The technique allows quantification of (i) the risk reduction due to cooperation, and (ii) the increased accuracy of risk assessment due to cooperation. If either is significant, cooperation can be viewed as a desirable practice for meeting the stringent risk budget of increasingly automated vehicles; if not, then cooperation, with its various drawbacks, need not be pursued. Collision risk is evaluated over an ego vehicle's trajectory based on a dynamic probabilistic occupancy map and a loss function that maps collision-relevant state information to a cost metric. The risk evaluation framework is demonstrated using real data captured from two cooperating vehicles traversing an urban intersection.
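The abstract describes risk as a combination of occupancy probabilities along the ego trajectory and a loss function. One simple way to illustrate that idea is an expected-loss sum over time steps, sketched below. This is not the paper's formulation: it assumes the occupancy probability of the ego vehicle's cell is already known at each step, that steps are independent, and that the loss depends only on ego speed. The names `collision_risk` and `kinetic_loss` are hypothetical.

```python
def kinetic_loss(speed_mps):
    """Hypothetical loss: cost grows with the square of ego speed."""
    return speed_mps ** 2


def collision_risk(occupancy_probs, speeds, loss):
    """Expected loss along a trajectory.

    occupancy_probs[t] is the probability that the cell occupied by the
    ego vehicle at step t is also occupied by another object.
    Steps are treated as independent (a simplifying assumption).
    """
    survive = 1.0  # probability of no collision before the current step
    risk = 0.0
    for p, v in zip(occupancy_probs, speeds):
        risk += survive * p * loss(v)  # first collision happens at this step
        survive *= 1.0 - p
    return risk
```

Under these assumptions, the benefit of cooperation could be read off as the difference between `collision_risk` evaluated with occupancy probabilities from the ego vehicle's sensors alone and with probabilities from the fused, cooperative map.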

Deep urban unaided precise GNSS vehicle positioning

Jun 23, 2019

Abstract: This paper presents the most thorough study to date of vehicular carrier-phase differential GNSS (CDGNSS) positioning performance in a deep urban setting unaided by complementary sensors. Using data captured during approximately 2 hours of driving in and around the dense urban center of Austin, TX, a CDGNSS system is demonstrated to achieve 17-cm-accurate 3D urban positioning (95% probability) with solution availability greater than 87%. The results are achieved without any aiding by inertial, electro-optical, or odometry sensors. Development and evaluation of the unaided GNSS-based precise positioning system is a key milestone toward the overall goal of combining precise GNSS, vision, radar, and inertial sensing for all-weather high-integrity high-absolute-accuracy positioning for automated and connected vehicles. The system described and evaluated herein is composed of a densely spaced reference network, a software-defined GNSS receiver, and a real-time kinematic (RTK) positioning engine. A performance sensitivity analysis reveals that navigation data wipeoff for fully modulated GNSS signals and a dense reference network are key to high-performance urban RTK positioning. A comparison with existing unaided systems for urban GNSS processing indicates that the proposed system has significantly greater availability or accuracy.
