Lada Rudnitckaia

Identification of Biased Terms in News Articles by Comparison of Outlet-specific Word Embeddings

Dec 14, 2021

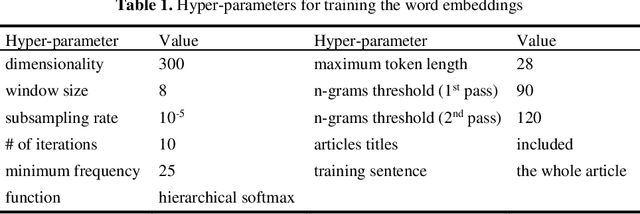

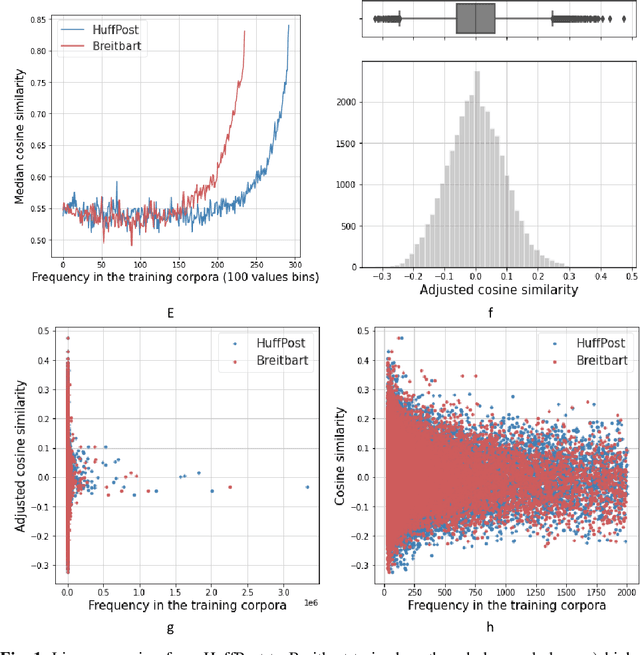

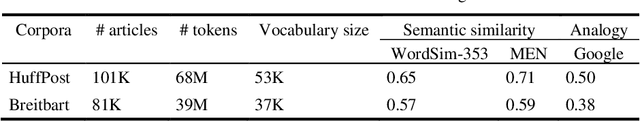

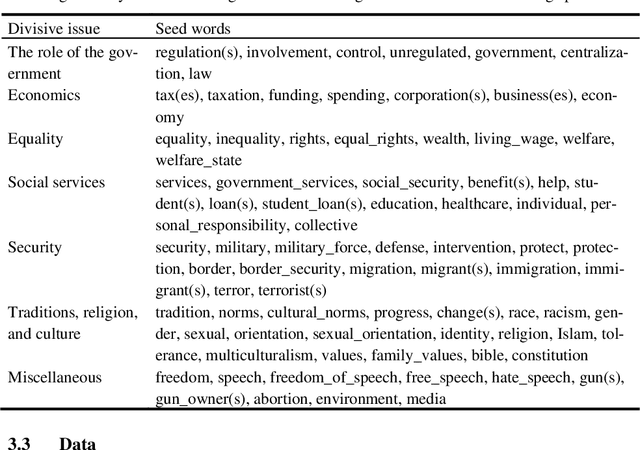

Abstract: Slanted news coverage, also called media bias, can heavily influence how news consumers interpret and react to the news. To automatically identify biased language, we present an exploratory approach that compares the context of related words. We train two word embedding models, one on texts from left-wing news outlets and the other on texts from right-wing news outlets. Our hypothesis is that a word's representations in the two embedding spaces are more similar for non-biased words than for biased words. The underlying idea is that the context of biased words varies more strongly across news outlets than that of non-biased words, since the perception of a word as being biased differs depending on its context. While we do not find statistical significance to accept the hypothesis, the results show the effectiveness of the approach. For example, after a linear mapping of both word embedding spaces, 31% of the words with the largest distances potentially induce bias. To improve the results, we find that the dataset needs to be significantly larger, and we derive further methodology as a future research direction. To our knowledge, this paper presents the first in-depth look at the context of biased words measured by word embeddings.
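The comparison step described in the abstract can be illustrated with a minimal sketch. It assumes the two embedding models are already trained and reduced to matrices over a shared vocabulary, and it uses an ordinary least-squares linear map and cosine distance; the abstract only says "a linear mapping", so both choices are assumptions, as are the function names and the synthetic toy data (which are not results from the paper).

```python
import numpy as np


def fit_linear_map(source, target):
    """Least-squares matrix W that minimizes ||source @ W - target||."""
    W, *_ = np.linalg.lstsq(source, target, rcond=None)
    return W


def cosine_distances(A, B):
    """Row-wise cosine distance (1 - cosine similarity) between A and B."""
    A = A / np.linalg.norm(A, axis=1, keepdims=True)
    B = B / np.linalg.norm(B, axis=1, keepdims=True)
    return 1.0 - np.sum(A * B, axis=1)


def rank_by_distance(vocab, left, right):
    """Map the left-outlet space onto the right-outlet space, then rank
    words from largest to smallest distance between the two spaces."""
    W = fit_linear_map(left, right)
    dist = cosine_distances(left @ W, right)
    order = np.argsort(-dist)
    return [(vocab[i], float(dist[i])) for i in order]


# Synthetic toy data (assumption, for illustration only): 200 words in
# 4 dimensions. The "right" space is a rotated copy of the "left" space,
# except for one word whose vector is flipped to mimic a diverging context.
rng = np.random.default_rng(0)
vocab = [f"word{i}" for i in range(200)]
left = rng.normal(size=(200, 4))
rotation, _ = np.linalg.qr(rng.normal(size=(4, 4)))
right = left @ rotation
right[7] = -right[7]

ranked = rank_by_distance(vocab, left, right)
print(ranked[:3])
```

Words at the top of `ranked` are the candidates whose contexts differ most between the two outlet groups. In the paper's setting such candidates would still need manual inspection, since a large distance only suggests, and does not establish, that a word induces bias.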
