L. Wu

Science, Research and Innovation, dsm-firmenich

Learning solutions of parametric Navier-Stokes with physics-informed neural networks

Feb 05, 2024

Abstract: We leverage Physics-Informed Neural Networks (PINNs) to learn solution functions of the parametric Navier-Stokes Equations (NSE). Our proposed approach results in a feasible optimization problem setup that bypasses PINNs' limitations in converging to solutions of highly nonlinear parametric PDEs like the NSE. We consider the parameter(s) of interest as inputs of PINNs along with spatio-temporal coordinates, and train PINNs on generated numerical solutions of parametric PDEs for instances of the parameters. We perform experiments on the classical 2D flow past a cylinder problem, aiming to learn velocity and pressure functions over a range of Reynolds numbers as the parameter of interest. Provision of training data from generated numerical simulations allows for interpolation of the solution functions over a range of parameters. Therefore, we compare PINNs with unconstrained conventional Neural Networks (NN) on this problem setup to investigate the effectiveness of including the PDE regularization in the loss function. We show that our proposed approach results in optimizing PINN models that learn the solution functions while ensuring that flow predictions are in line with the conservation laws of mass and momentum. Our results show that the PINN predicts gradients more accurately than the NN model; this is clearly visible in the predicted vorticity fields, given that none of these models were trained on vorticity labels.
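The loss structure described in this abstract (a data term on simulated solutions plus a PDE regularization term, with the Reynolds number fed in as an extra input) can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes the non-dimensional 2D incompressible NSE, labels on u, v and p, and a single weighting factor. It also swaps automatic differentiation for central finite differences so that it runs with the standard library alone. The names `nse_residuals`, `pinn_loss`, `taylor_green` and `weight` are hypothetical, and the Taylor-Green vortex only stands in for a trained network because it satisfies the equations exactly.

```python
import math

def nse_residuals(model, x, y, t, re, h=1e-3):
    """Mass and momentum residuals of the non-dimensional 2D incompressible NSE.

    `model(x, y, t, re)` returns (u, v, p). Derivatives use central finite
    differences here; a real PINN would use automatic differentiation.
    """
    u, v, p = model(x, y, t, re)
    xp, xm = model(x + h, y, t, re), model(x - h, y, t, re)
    yp, ym = model(x, y + h, t, re), model(x, y - h, t, re)
    tp, tm = model(x, y, t + h, re), model(x, y, t - h, re)

    # First derivatives of (u, v, p) along each coordinate.
    u_x, v_x, p_x = ((a - b) / (2 * h) for a, b in zip(xp, xm))
    u_y, v_y, p_y = ((a - b) / (2 * h) for a, b in zip(yp, ym))
    u_t, v_t, _ = ((a - b) / (2 * h) for a, b in zip(tp, tm))

    # Second derivatives of the velocity components.
    u_xx = (xp[0] - 2 * u + xm[0]) / h**2
    u_yy = (yp[0] - 2 * u + ym[0]) / h**2
    v_xx = (xp[1] - 2 * v + xm[1]) / h**2
    v_yy = (yp[1] - 2 * v + ym[1]) / h**2

    mass = u_x + v_y
    mom_x = u_t + u * u_x + v * u_y + p_x - (u_xx + u_yy) / re
    mom_y = v_t + u * v_x + v * v_y + p_y - (v_xx + v_yy) / re
    return mass, mom_x, mom_y

def pinn_loss(model, samples, weight=1.0):
    """Mean data loss plus weighted mean PDE-residual loss.

    `samples` is a list of (x, y, t, re, u_ref, v_ref, p_ref) tuples, where the
    reference values would come from numerical simulations (assumed layout).
    """
    data, physics = 0.0, 0.0
    for x, y, t, re, u_ref, v_ref, p_ref in samples:
        u, v, p = model(x, y, t, re)
        data += (u - u_ref) ** 2 + (v - v_ref) ** 2 + (p - p_ref) ** 2
        physics += sum(r * r for r in nse_residuals(model, x, y, t, re))
    return (data + weight * physics) / len(samples)

def taylor_green(x, y, t, re):
    """Exact NSE solution used here as a stand-in for a trained network."""
    f = math.exp(-2.0 * t / re)
    u = math.cos(x) * math.sin(y) * f
    v = -math.sin(x) * math.cos(y) * f
    p = -0.25 * (math.cos(2 * x) + math.cos(2 * y)) * f * f
    return u, v, p

# One labeled sample at Re = 100; the loss of the exact solution is close to zero.
point = (0.3, 0.7, 0.1, 100.0)
samples = [point + taylor_green(*point)]
loss = pinn_loss(taylor_green, samples)
```

Setting `weight=0.0` in this sketch leaves only the data term, which corresponds to the unconstrained NN baseline that the abstract compares against.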

MAD: Meta Adversarial Defense Benchmark

Sep 18, 2023

Abstract: Adversarial training (AT) is a prominent technique employed by deep learning models to defend against adversarial attacks and, to some extent, enhance model robustness. However, existing AT-based defense methods have three main drawbacks: expensive computational cost, low generalization ability, and the dilemma between the original model and the defense model. To this end, we propose a novel benchmark called meta adversarial defense (MAD). The MAD benchmark consists of two MAD datasets, along with a MAD evaluation protocol. The two large-scale MAD datasets were generated through experiments using 30 kinds of attacks on the MNIST and CIFAR-10 datasets. In addition, we introduce a meta-learning based adversarial training (Meta-AT) algorithm as the baseline, which features high robustness to unseen adversarial attacks through few-shot learning. Experimental results demonstrate the effectiveness of our Meta-AT algorithm compared to the state-of-the-art methods. Furthermore, the model after Meta-AT maintains a relatively high clean-samples classification accuracy (CCA). It is worth noting that Meta-AT addresses all three aforementioned limitations, leading to substantial improvements. This benchmark ultimately achieved breakthroughs in investigating the transferability of adversarial defense methods to new attacks and the ability to learn from a limited number of adversarial examples. Our code and attacked datasets will be available at https://github.com/PXX1110/Meta_AT.

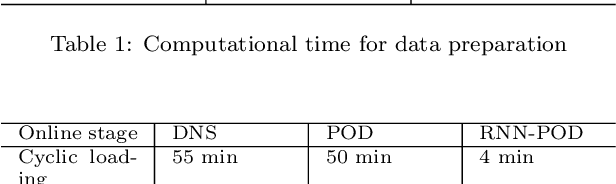

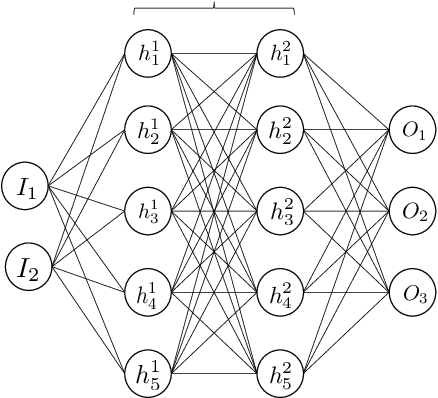

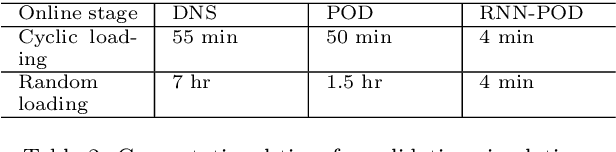

Neural-network acceleration of projection-based model-order-reduction for finite plasticity: Application to RVEs

Sep 16, 2021

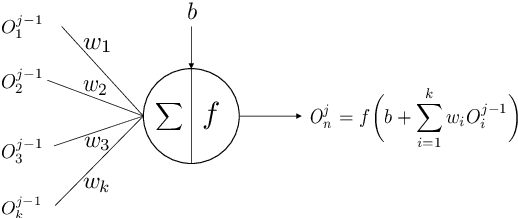

Abstract: Compared to conventional projection-based model-order-reduction, its neural-network acceleration has the advantage that the online simulations are equation-free, meaning that no system of equations needs to be solved iteratively. Consequently, no stiffness matrix needs to be constructed and the stress update needs to be computed only once per increment. In this contribution, a recurrent neural network is developed to accelerate a projection-based model-order-reduction of the elastoplastic mechanical behaviour of an RVE. In contrast to a neural network that merely emulates the relation between the macroscopic deformation (path) and the macroscopic stress, the neural network acceleration of projection-based model-order-reduction preserves all microstructural information, at the price of computing this information once per increment.

The Microsoft 2017 Conversational Speech Recognition System

Aug 24, 2017

Abstract: We describe the 2017 version of Microsoft's conversational speech recognition system, in which we update our 2016 system with recent developments in neural-network-based acoustic and language modeling to further advance the state of the art on the Switchboard speech recognition task. The system adds a CNN-BLSTM acoustic model to the set of model architectures we combined previously, and includes character-based and dialog session aware LSTM language models in rescoring. For system combination we adopt a two-stage approach, whereby subsets of acoustic models are first combined at the senone/frame level, followed by a word-level voting via confusion networks. We also added a confusion network rescoring step after system combination. The resulting system yields a 5.1% word error rate on the 2000 Switchboard evaluation set.
