Kunpeng Guo

QAnswer: Towards Question Answering Search over Websites

Jan 17, 2024

Abstract: Question Answering (QA) is increasingly used by search engines to provide results to their end-users, yet very few websites currently use QA technologies for their search functionality. To illustrate the potential of QA technologies for the website search practitioner, we demonstrate web searches that combine QA over knowledge graphs and QA over free text, two tasks that are usually tackled separately. We also discuss the benefits and drawbacks of both approaches for website search. Our case studies are websites hosted by the Wikimedia Foundation (namely Wikipedia and Wikidata). Unlike a search engine (e.g. Google or Bing), we index the data integrally, i.e. we do not index only a subset, and exclusively, i.e. we index only data available on the corresponding website.

Fine-tuning Strategies for Domain Specific Question Answering under Low Annotation Budget Constraints

Jan 17, 2024

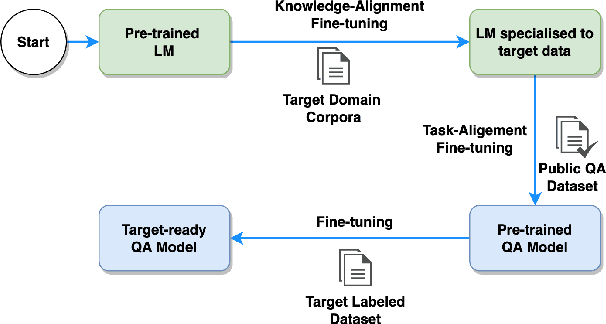

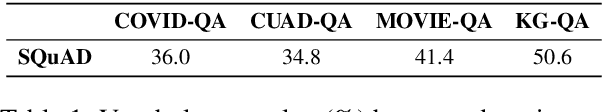

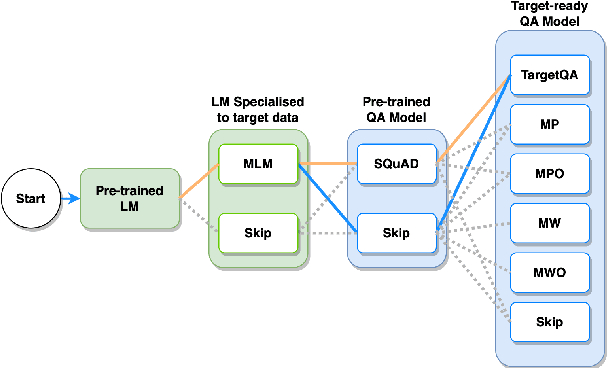

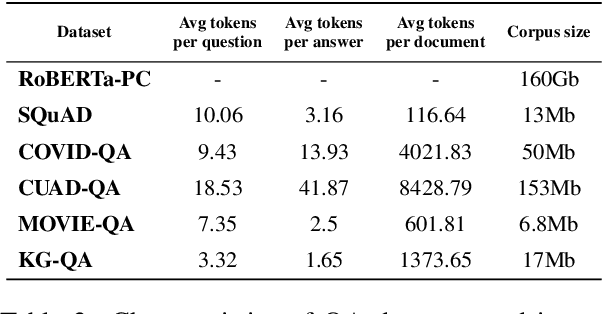

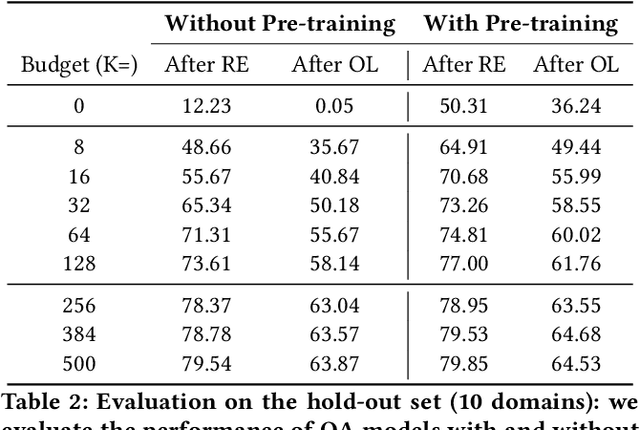

Abstract: The progress introduced by pre-trained language models and their fine-tuning has resulted in significant improvements in most downstream NLP tasks. Unsupervised training of a language model followed by fine-tuning on the target task has become the standard QA fine-tuning procedure. In this work, we demonstrate that this strategy is sub-optimal for fine-tuning QA models, especially under a low QA annotation budget, which is a common setting in practice because of the cost of labeling extractive QA data. We draw our conclusions from an exhaustive analysis of the performance of the alternatives of the sequential fine-tuning strategy on different QA datasets. In our experiments, the best strategy for fine-tuning a QA model in low-budget settings is to take a pre-trained language model (PLM) and fine-tune it on a dataset composed of the target dataset and the SQuAD dataset. With zero extra annotation effort, the best strategy outperforms the standard strategy by 2.28% to 6.48%. Our experiments provide one of the first investigations of how best to fine-tune a QA system under a low budget and are therefore of practical interest to QA practitioners.
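The strategy the abstract reports as best, fine-tuning on the target dataset combined with SQuAD, can be illustrated with a minimal sketch of the data-preparation step. This is not the authors' code: the function name `build_mixed_training_set`, the `source` field, the record layout, and the toy examples are all assumptions made for illustration, and the abstract does not state mixing ratios or sampling details.

```python
import random


def build_mixed_training_set(target_examples, squad_examples, seed=0):
    """Merge target-domain and SQuAD-style QA examples into one shuffled list.

    Hypothetical helper: each example is assumed to be a dict with
    "question", "context" and "answer" keys. A "source" tag is added so the
    origin of every example can still be inspected after shuffling.
    """
    mixed = [dict(ex, source="target") for ex in target_examples]
    mixed += [dict(ex, source="squad") for ex in squad_examples]
    # A fixed seed keeps the shuffle reproducible between runs.
    random.Random(seed).shuffle(mixed)
    return mixed


# Toy records, invented for illustration only.
target_examples = [
    {"question": "Which unit handles refunds?",
     "context": "Refunds are handled by the billing unit.",
     "answer": "the billing unit"},
]
squad_examples = [
    {"question": "What colour is the sky in the passage?",
     "context": "In the passage, the sky is described as grey.",
     "answer": "grey"},
    {"question": "Where does the story take place?",
     "context": "The story takes place in a small harbour town.",
     "answer": "a small harbour town"},
]

mixed_examples = build_mixed_training_set(target_examples, squad_examples)
```

The resulting list would then be passed to a single fine-tuning run on the PLM, in contrast to the sequential procedure that fine-tunes on one dataset after the other.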

Wikidata as a seed for Web Extraction

Jan 15, 2024

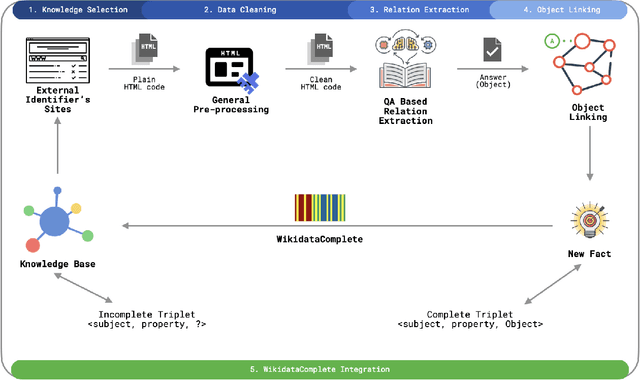

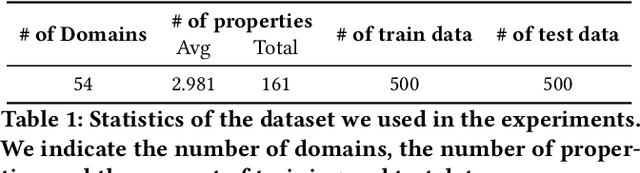

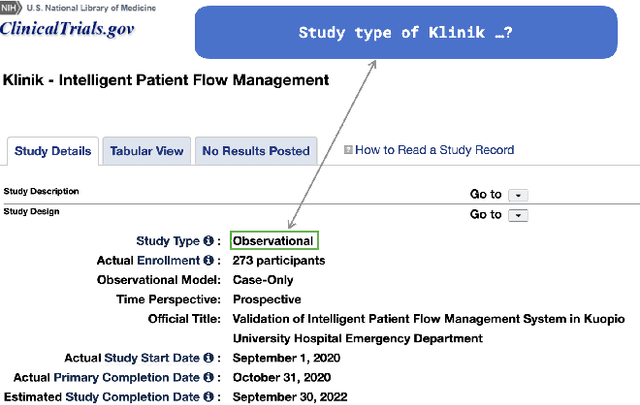

Abstract: Wikidata has grown into a knowledge graph of impressive size. To date, it contains more than 17 billion triples collecting information about people, places, films, stars, publications, proteins, and many more. On the other hand, most of the information on the Web is not published in highly structured data repositories like Wikidata, but rather as unstructured and semi-structured content, more concretely in HTML pages containing text and tables. Finding, monitoring, and organizing this data in a knowledge graph requires considerable work from human editors. The volume and complexity of the data make this task difficult and time-consuming. In this work, we present a framework that identifies and extracts new facts published under multiple Web domains so that they can be proposed for validation by Wikidata editors. The framework relies on question-answering technologies. We take inspiration from ideas used to extract facts from textual collections and adapt them to Web pages. To achieve this, we demonstrate that language models can be adapted to extract facts not only from textual collections but also from Web pages. By exploiting the information already contained in Wikidata, the proposed framework can be trained without any additional learning signals and can extract new facts for a wide range of properties and domains. In this way, Wikidata can be used as a seed to extract facts from the Web. Our experiments show that we can achieve a mean F1-score of 84.07. Moreover, our estimates show that we can potentially extract millions of facts that can be proposed for human validation. The goal is to help editors in their daily tasks and contribute to the completion of the Wikidata knowledge graph.
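One way to read "Wikidata as a seed" is as distant supervision: a fact already in the knowledge graph is matched against a page that mentions it, which yields an extractive QA training example without manual labeling. The sketch below illustrates that idea only. The abstract does not describe how the framework builds its training data, so the function `make_qa_example`, the question templates, and the record layout are assumptions, and plain text stands in for a real HTML page.

```python
def make_qa_example(triple, page_text, question_templates):
    """Turn a known (subject, property, object) triple into a QA example.

    Hypothetical helper: returns None when no template exists for the
    property or when the object string does not occur in the page text.
    """
    subject, prop, obj = triple
    template = question_templates.get(prop)
    if template is None:
        return None
    # Exact string match; a real system would need fuzzier matching.
    start = page_text.find(obj)
    if start == -1:
        return None
    return {
        "question": template.format(subject=subject),
        "context": page_text,
        "answer_text": obj,
        "answer_start": start,
    }


# Illustrative template and inputs; all names here are assumptions.
question_templates = {"place of birth": "Where was {subject} born?"}
triple = ("Douglas Adams", "place of birth", "Cambridge")
page_text = "Douglas Adams was born in Cambridge in 1952."

example = make_qa_example(triple, page_text, question_templates)
missing = make_qa_example(triple, "No relevant text here.", question_templates)
```

Examples built this way could train a QA model that is later applied to pages about entities whose facts are not yet in Wikidata, with its answers proposed to editors for validation.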
