Kuan Tung

Entropy-Assisted Multi-Modal Emotion Recognition Framework Based on Physiological Signals

Sep 22, 2018

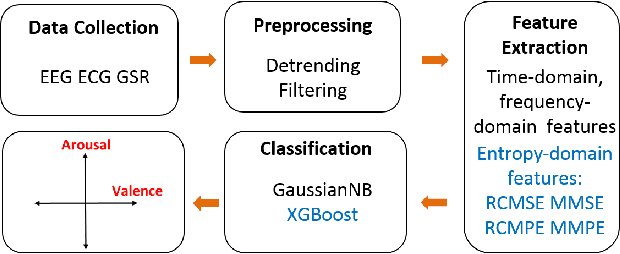

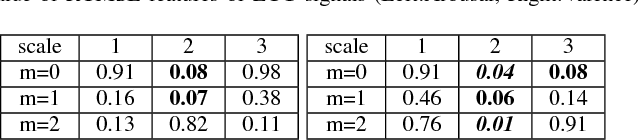

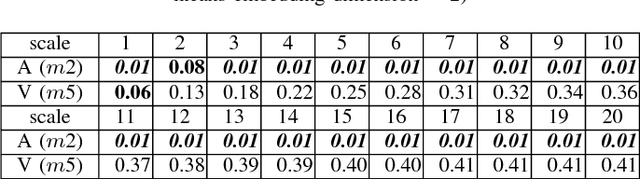

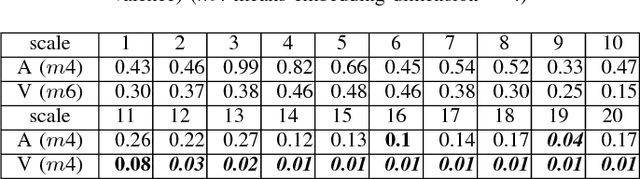

Abstract: As Human Computer Interface systems grow in importance, understanding human emotional states has become an important capability for computers. This paper aims to improve the performance of emotion recognition through complexity analysis of physiological signals. Using the AMIGOS dataset, we extracted several entropy-domain features: Refined Composite Multi-Scale Entropy (RCMSE) and Refined Composite Multi-Scale Permutation Entropy (RCMPE) from ECG and GSR signals, and Multivariate Multi-Scale Entropy (MMSE) and Multivariate Multi-Scale Permutation Entropy (MMPE) from EEG. The statistical results show that RCMSE from GSR is the dominant feature for arousal, while RCMPE from GSR is an excellent feature for valence. Furthermore, we selected an XGBoost model to predict emotion and obtained 68% accuracy for arousal and 84% for valence.
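To make the entropy-domain features more concrete, here is a minimal sketch of plain multi-scale permutation entropy: the signal is coarse-grained at several scales and the normalized permutation entropy is computed at each one. This is not the refined composite (RCMPE) or multivariate (MMPE) variant used in the paper, and the function names, the embedding order of 3, and the toy signal are all assumptions made for illustration only.

```python
import math
from collections import Counter


def coarse_grain(signal, scale):
    """Average consecutive non-overlapping windows of length `scale`."""
    n = len(signal) // scale
    return [sum(signal[i * scale:(i + 1) * scale]) / scale for i in range(n)]


def permutation_entropy(signal, order=3):
    """Normalized permutation entropy (0 = fully regular, 1 = maximally irregular)."""
    # Count how often each ordinal pattern (rank ordering of `order`
    # consecutive samples) occurs in the signal.
    patterns = Counter(
        tuple(sorted(range(order), key=lambda k: signal[i + k]))
        for i in range(len(signal) - order + 1)
    )
    total = sum(patterns.values())
    entropy = -sum((c / total) * math.log(c / total) for c in patterns.values())
    # Normalize by the maximum possible entropy, log(order!).
    return entropy / math.log(math.factorial(order))


def multiscale_permutation_entropy(signal, order=3, max_scale=3):
    """Permutation entropy of the coarse-grained signal at scales 1..max_scale."""
    return [
        permutation_entropy(coarse_grain(signal, s), order)
        for s in range(1, max_scale + 1)
    ]


# Hypothetical toy signal standing in for a physiological recording.
toy_signal = [math.sin(0.3 * t) + 0.1 * ((t * 7) % 5) for t in range(200)]
features = multiscale_permutation_entropy(toy_signal)
```

The resulting list holds one value per scale and could serve as a feature vector for a classifier; the paper's own feature extraction and model settings are not specified here.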

Joint Learning of Interactive Spoken Content Retrieval and Trainable User Simulator

Apr 01, 2018

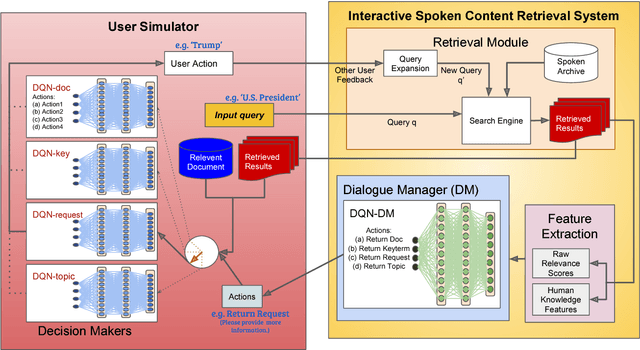

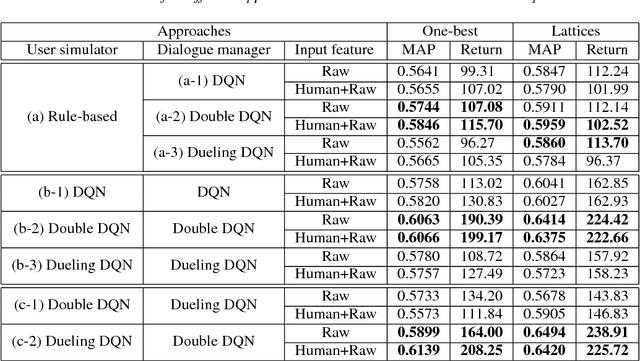

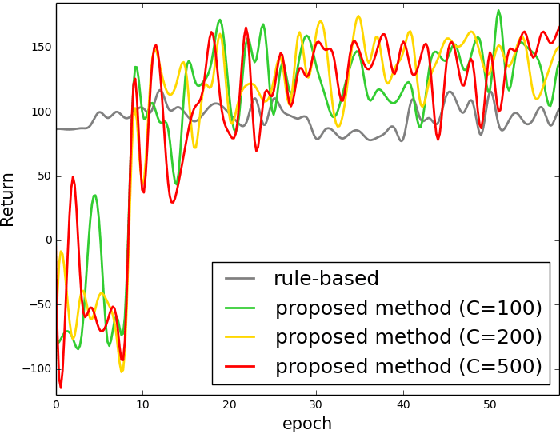

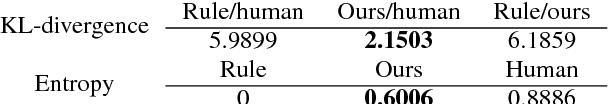

Abstract: User-machine interaction is crucial for information retrieval, especially for spoken content retrieval, because spoken content is difficult to browse and speech recognition has a high degree of uncertainty. In interactive retrieval, the machine takes different actions to interact with the user to obtain better retrieval results; here it is critical to select the most efficient action. In previous work, deep Q-learning techniques were proposed to train an interactive retrieval system, but they relied on a hand-crafted user simulator, and building a reliable user simulator is difficult. In this paper, we further improve the interactive spoken content retrieval framework by proposing a learnable user simulator that is jointly trained with the interactive retrieval system, making the hand-crafted user simulator unnecessary. The experimental results show that the learned simulated users not only achieve larger rewards than the hand-crafted ones but also act more like real users.
