Kshitij Nikhal

Discovering EV Charging Site Archetypes Through Few Shot Forecasting: The First U.S.-Wide Study

Oct 30, 2025

Abstract:The decarbonization of transportation relies on the widespread adoption of electric vehicles (EVs), which requires an accurate understanding of charging behavior to ensure cost-effective, grid-resilient infrastructure. Existing work is constrained by small-scale datasets, simple proximity-based modeling of temporal dependencies, and weak generalization to sites with limited operational history. To overcome these limitations, this work proposes a framework that integrates clustering with few-shot forecasting to uncover site archetypes using a novel large-scale dataset of charging demand. The results demonstrate that archetype-specific expert models outperform global baselines in forecasting demand at unseen sites. By establishing forecast performance as a basis for infrastructure segmentation, we generate actionable insights that enable operators to lower costs, optimize energy and pricing strategies, and support grid resilience critical to climate goals.

Cross-Spectral Attention for Unsupervised RGB-IR Face Verification and Person Re-identification

Nov 28, 2024

Abstract:Cross-spectral biometrics, such as matching imagery of faces or persons from visible (RGB) and infrared (IR) bands, have rapidly advanced over the last decade due to increasing sensitivity, size, quality, and ubiquity of IR focal plane arrays and enhanced analytics beyond the visible spectrum. Current techniques for mitigating large spectral disparities between RGB and IR imagery often include learning a discriminative common subspace by exploiting precisely curated data acquired from multiple spectra. Although there are challenges with determining robust architectures for extracting common information, a critical limitation for supervised methods is poor scalability in terms of acquiring labeled data. Therefore, we propose a novel unsupervised cross-spectral framework that combines (1) a new pseudo triplet loss with cross-spectral voting, (2) a new cross-spectral attention network leveraging multiple subspaces, and (3) structured sparsity to perform more discriminative cross-spectral clustering. We extensively compare our proposed RGB-IR biometric learning framework (and its individual components) with recent and previous state-of-the-art models on two challenging benchmark datasets: DEVCOM Army Research Laboratory Visible-Thermal Face Dataset (ARL-VTF) and RegDB person re-identification dataset, and, in some cases, achieve performance superior to completely supervised methods.
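For readers unfamiliar with triplet objectives, the following is a minimal sketch of a standard margin-based triplet loss on plain Python lists. It is not the pseudo triplet loss with cross-spectral voting proposed in the paper; the function names, the Euclidean distance, and the margin value are illustrative assumptions.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 0.3,  # hypothetical value, not taken from the paper
) -> float:
    """Standard triplet margin loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the negative is farther from the anchor
    than the positive by at least `margin`.
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)
```

In an unsupervised cross-spectral setting, the positive and negative samples would come from pseudo-labels (for example, cluster assignments) rather than ground-truth identities, for instance an RGB anchor paired with IR samples.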

HashReID: Dynamic Network with Binary Codes for Efficient Person Re-identification

Aug 23, 2023

Abstract:Biometric applications, such as person re-identification (ReID), are often deployed on energy-constrained devices. While recent ReID methods prioritize high retrieval performance, they often come with large computational costs and long search times, rendering them less practical in real-world settings. In this work, we propose an input-adaptive network with multiple exit blocks that can terminate computation early if the retrieval is straightforward or noisy, avoiding unnecessary computation. To assess the complexity of the input, we introduce a temporal-based classifier driven by a new training strategy. Furthermore, we adopt a binary hash code generation approach instead of relying on continuous-valued features, which speeds up the search process by a factor of 20. To ensure similarity preservation, we utilize a new ranking regularizer that bridges the gap between continuous and binary features. Extensive analysis of our proposed method is conducted on three datasets: Market1501, MSMT17 (Multi-Scene Multi-Time), and the BGC1 (BRIAR Government Collection). Using our approach, more than 70% of the samples with compact hash codes exit early on the Market1501 dataset, saving 80% of the network's computational cost and improving over other hash-based methods by 60%. These results demonstrate a significant improvement over dynamic networks and showcase accuracy comparable to conventional ReID methods. Code will be made available.
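To illustrate why binary codes make retrieval cheap, here is a minimal sketch of sign-based binarization followed by Hamming-distance ranking. It assumes a simple threshold at zero and packs each code into a Python integer; the function names are hypothetical, and this is not the hash generation or ranking regularizer described in the paper.

```python
from typing import List, Sequence


def binarize(features: Sequence[float]) -> int:
    """Pack the signs of a feature vector into one integer.

    Bit i is set when features[i] > 0 (assumed threshold, for illustration).
    """
    code = 0
    for i, value in enumerate(features):
        if value > 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed codes."""
    return bin(a ^ b).count("1")


def rank_gallery(query_code: int, gallery_codes: Sequence[int]) -> List[int]:
    """Return gallery indices ordered from closest to farthest in Hamming distance."""
    return sorted(
        range(len(gallery_codes)),
        key=lambda i: hamming(query_code, gallery_codes[i]),
    )
```

Comparing two codes needs only an XOR and a bit count, whereas comparing continuous features needs a floating-point distance over every dimension, which is the general reason hash-based search tends to be faster.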

Weakly Supervised Face and Whole Body Recognition in Turbulent Environments

Aug 22, 2023

Abstract:Face and person recognition have recently achieved remarkable success under challenging scenarios, such as off-pose and cross-spectrum matching. However, long-range recognition systems are often hindered by atmospheric turbulence, leading to spatially and temporally varying distortions in the image. Current solutions rely on generative models to reconstruct a turbulence-free image, but often preserve photo-realism instead of discriminative features that are essential for recognition. This can be attributed to the lack of large-scale datasets of turbulent and pristine paired images, necessary for optimal reconstruction. To address this issue, we propose a new weakly supervised framework that employs a parameter-efficient self-attention module to generate domain-agnostic representations, aligning turbulent and pristine images into a common subspace. Additionally, we introduce a new tilt map estimator that predicts geometric distortions observed in turbulent images. This estimate is used to re-rank gallery matches, resulting in up to 13.86% improvement in rank-1 accuracy. Our method does not require synthesizing turbulence-free images or ground-truth paired images, and requires significantly fewer annotated samples, enabling more practical and rapid utility of increasingly large datasets. We analyze our framework using two datasets, Long-Range Face Identification Dataset (LRFID) and BRIAR Government Collection 1 (BGC1), achieving enhanced discriminability under varying turbulence and standoff distance.

Understanding Cross Domain Presentation Attack Detection for Visible Face Recognition

Nov 03, 2021

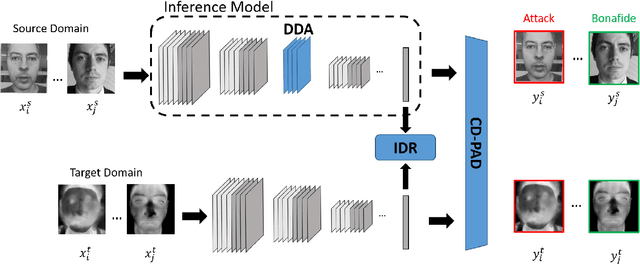

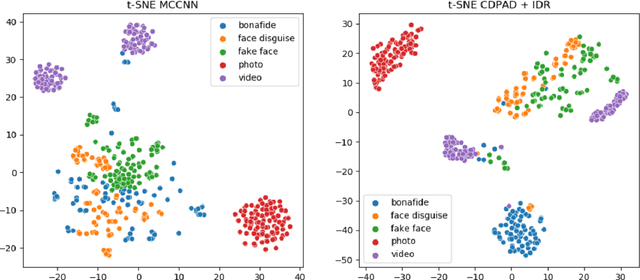

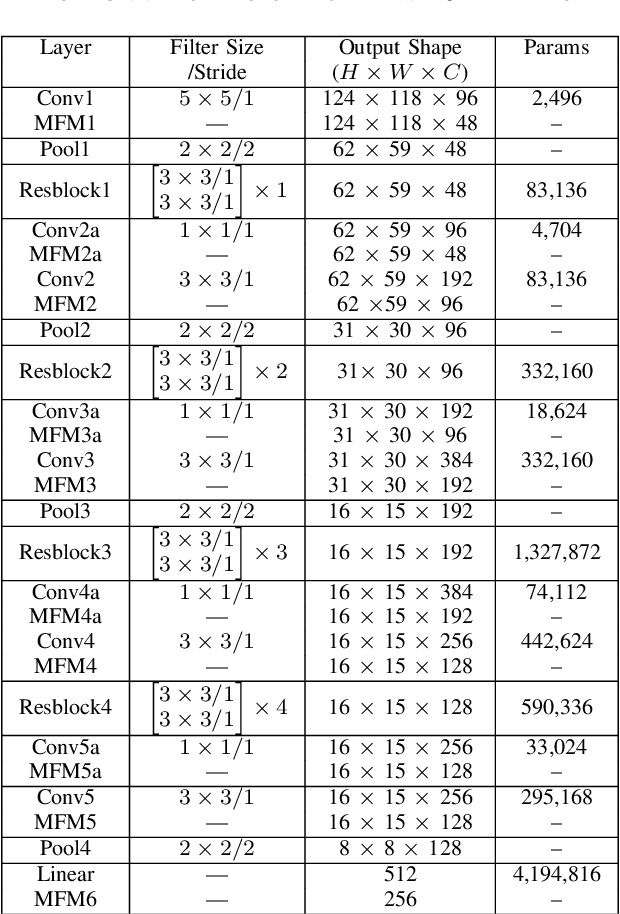

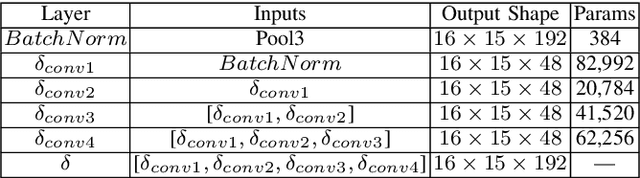

Abstract:Face signatures, including size, shape, texture, skin tone, eye color, appearance, and scars/marks, are widely used as discriminative, biometric information for access control. Despite recent advancements in facial recognition systems, presentation attacks on facial recognition systems have become increasingly sophisticated. The ability to detect presentation attacks or spoofing attempts is a pressing concern for the integrity, security, and trust of facial recognition systems. Multi-spectral imaging has been previously introduced as a way to improve presentation attack detection by utilizing sensors that are sensitive to different regions of the electromagnetic spectrum (e.g., visible, near infrared, long-wave infrared). Although multi-spectral presentation attack detection systems may be discriminative, the need for additional sensors and computational resources substantially increases complexity and costs. Instead, we propose a method that exploits information from infrared imagery during training to increase the discriminability of visible-based presentation attack detection systems. We introduce (1) a new cross-domain presentation attack detection framework that increases the separability of bonafide and presentation attacks using only visible spectrum imagery, (2) an inverse domain regularization technique for added training stability when optimizing our cross-domain presentation attack detection framework, and (3) a dense domain adaptation subnetwork to transform representations between visible and non-visible domains.

Unsupervised Attention Based Instance Discriminative Learning for Person Re-Identification

Nov 03, 2020

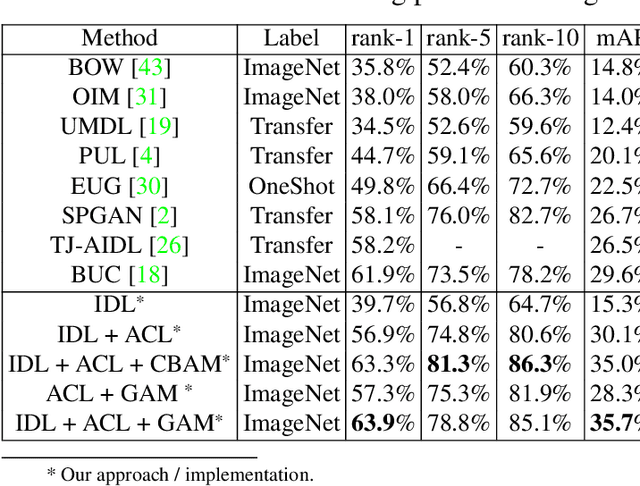

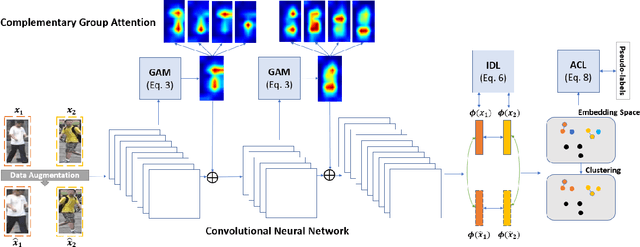

Abstract:Recent advances in person re-identification have demonstrated enhanced discriminability, especially with supervised learning or transfer learning. However, since the data requirements, including the degree of data curation, are becoming increasingly complex and laborious, there is a critical need for unsupervised methods that are robust to large intra-class variations, such as changes in perspective, illumination, articulated motion, and resolution. Therefore, we propose an unsupervised framework for person re-identification which is trained in an end-to-end manner without any pre-training. Our proposed framework leverages a new attention mechanism that combines group convolutions to (1) enhance spatial attention at multiple scales and (2) reduce the number of trainable parameters by 59.6%. Additionally, our framework jointly optimizes the network with agglomerative clustering and instance learning to tackle hard samples. We perform extensive analysis using the Market1501 and DukeMTMC-reID datasets to demonstrate that our method consistently outperforms the state-of-the-art methods (with and without pre-trained weights).
