Kshiteesh Hegde

Cause and Effect: Can Large Language Models Truly Understand Causality?

Feb 28, 2024

Abstract: With the rise of Large Language Models (LLMs), it has become crucial to understand their capabilities and limitations in deciphering and explaining the complex web of causal relationships that language entails. Current methods use either explicit or implicit causal reasoning, yet there is a strong need for a unified approach that combines both to handle a wide array of causal relationships more effectively. This research proposes a novel architecture, the Context Aware Reasoning Enhancement with Counterfactual Analysis (CARE CA) framework, to enhance causal reasoning and explainability. The framework incorporates an explicit causal detection module with ConceptNet and counterfactual statements, as well as implicit causal detection through LLMs. It goes one step further with a layer of counterfactual explanations to accentuate LLMs' understanding of causality. The knowledge from ConceptNet enhances performance on multiple causal reasoning tasks such as causal discovery, causal identification, and counterfactual reasoning. The counterfactual sentences add explicit knowledge of "not caused by" scenarios. By combining these modules, our model aims to provide a deeper understanding of causal relationships, enabling enhanced interpretability. Evaluation on benchmark datasets shows improved performance across all metrics, including accuracy, precision, recall, and F1 score. We also introduce CausalNet, a new dataset accompanied by our code, to facilitate further research in this domain.

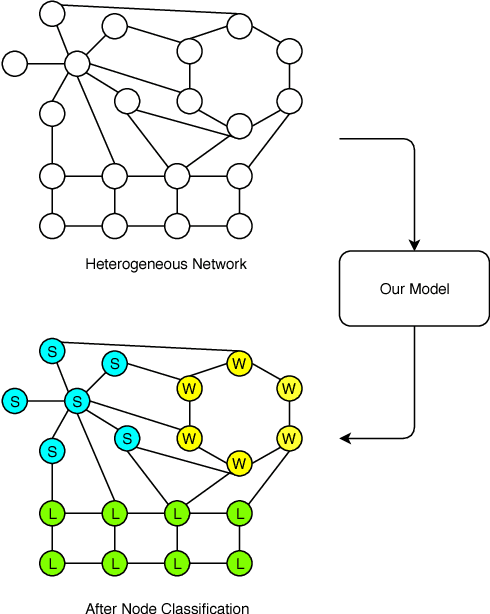

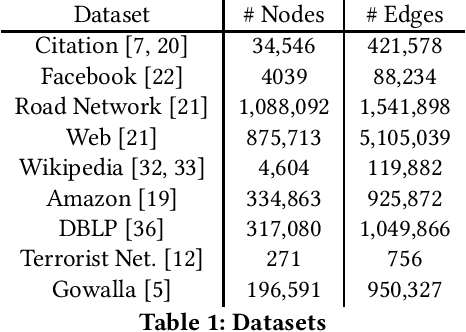

Network Lens: Node Classification in Topologically Heterogeneous Networks

Jan 15, 2019

Abstract: We study the problem of identifying different behaviors occurring in different parts of a large heterogeneous network. We zoom in to the network using lenses of different sizes to capture its local structure. These network signatures are then weighted to provide a set of predicted labels for every node. We achieve a peak accuracy of $\sim42\%$ (random=$11\%$) on two networks with $\sim100,000$ and $\sim1,000,000$ nodes, respectively. Further, we perform better than random even when the given node is connected to up to 5 different types of networks. Finally, we perform this analysis on homogeneous networks and show that highly structured networks have high homogeneity.

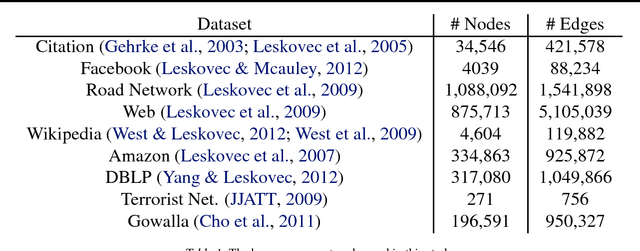

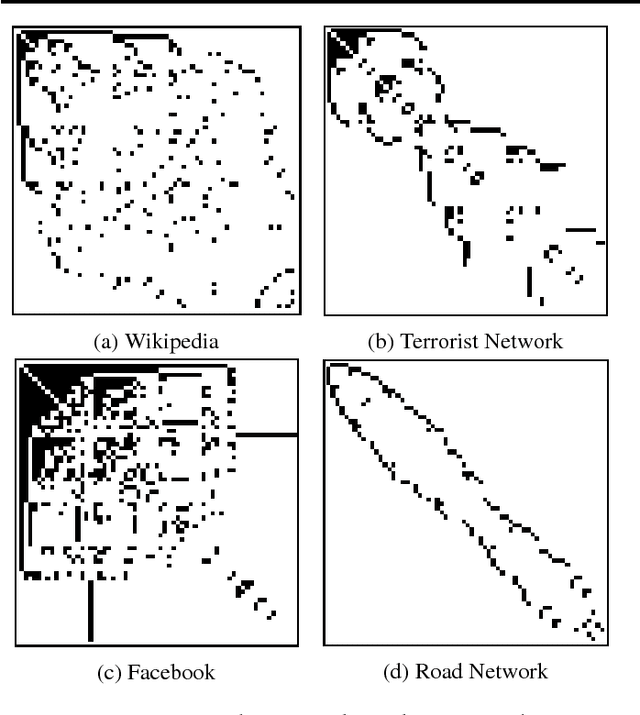

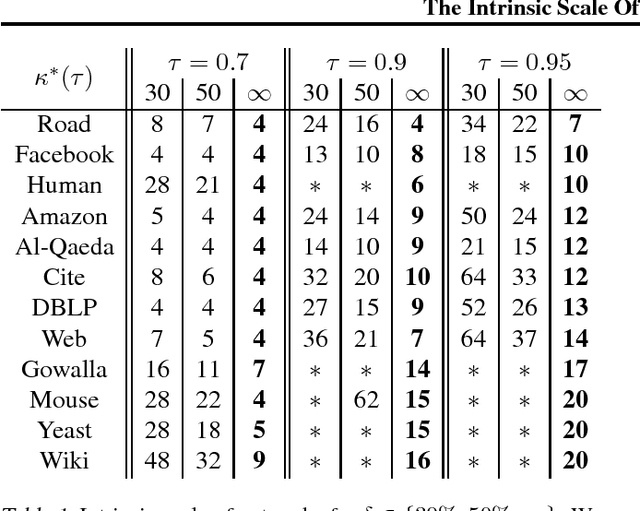

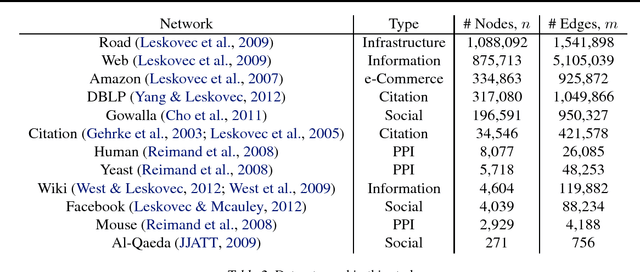

The Intrinsic Scale of Networks is Small

Jan 15, 2019

Abstract: We define the intrinsic scale at which a network begins to reveal its identity as the scale at which subgraphs in the network (created by a random walk) are distinguishable from similarly sized subgraphs in a perturbed copy of the network. We conduct an extensive study of intrinsic scale for several networks, ranging from structured (e.g. road networks) to ad hoc and unstructured (e.g. crowdsourced information networks), to biological. We find: (a) The intrinsic scale is surprisingly small (7-20 vertices), even though the networks are many orders of magnitude larger. (b) The intrinsic scale quantifies "structure" in a network: networks that are explicitly constructed for specific tasks have smaller intrinsic scale. (c) The structure at different scales can be fragile (easy to disrupt) or robust.
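To make the idea of a random-walk subgraph concrete, here is a minimal Python sketch. The abstract does not specify how the walk is run, so every detail here is an assumption for illustration: the function name `random_walk_subgraph`, the rule of walking until a target number of distinct vertices is collected, the step cap, and the toy ring graph.

```python
import random


def random_walk_subgraph(adj, start, size, rng, max_steps=10_000):
    """Walk randomly from `start` until `size` distinct vertices are seen.

    `adj` maps each vertex to a list of its neighbors (undirected graph).
    Returns the visited vertices and the edges of the induced subgraph.
    The stopping rule and step cap are illustrative assumptions.
    """
    visited = [start]
    seen = {start}
    current = start
    steps = 0
    while len(visited) < size and steps < max_steps:
        neighbors = adj[current]
        if not neighbors:  # isolated vertex: the walk cannot continue
            break
        current = rng.choice(neighbors)
        if current not in seen:
            seen.add(current)
            visited.append(current)
        steps += 1
    # Induced subgraph: keep every edge whose endpoints were both visited.
    edges = {(u, v) for u in visited for v in adj[u] if v in seen and u < v}
    return visited, edges


def ring_graph(n):
    """Toy undirected cycle on n vertices, used only for the demo."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


rng = random.Random(0)
nodes, edges = random_walk_subgraph(ring_graph(30), start=0, size=8, rng=rng)
```

Under this reading, the same sampler would be applied to the original network and to a perturbed copy, and the two collections of subgraphs would be handed to a classifier; the smallest `size` at which they can be told apart would play the role of the intrinsic scale.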

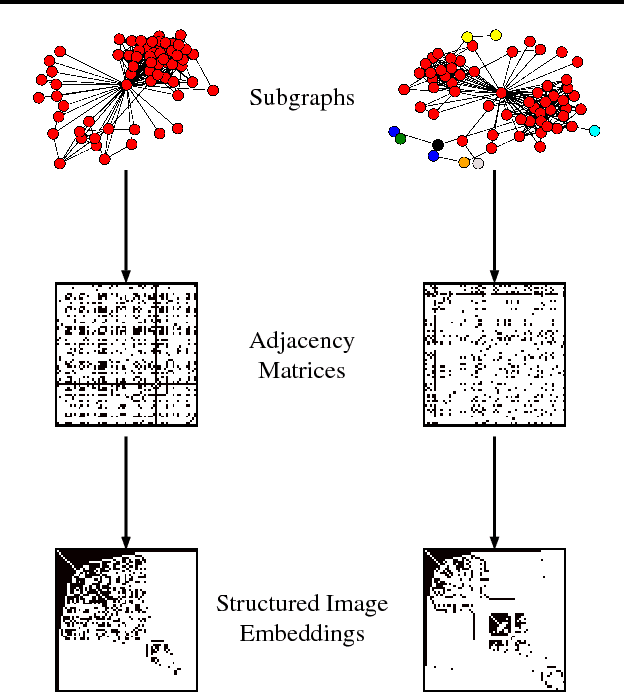

Network Signatures from Image Representation of Adjacency Matrices: Deep/Transfer Learning for Subgraph Classification

Apr 17, 2018

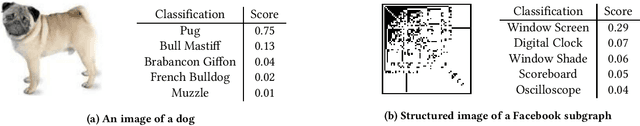

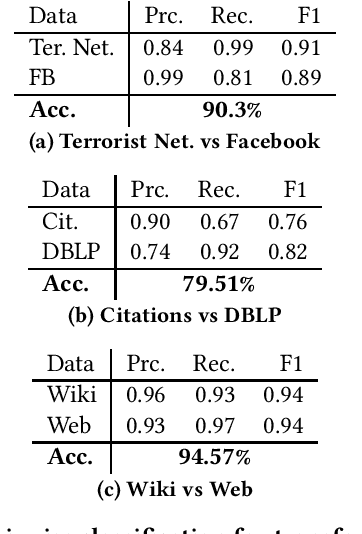

Abstract: We propose a novel subgraph image representation for classification of network fragments, with the targets being their parent networks. The graph image representation is based on 2D image embeddings of adjacency matrices. We use this image representation in two modes: first, as the input to a machine learning algorithm, and second, as the input to a pure transfer learner. Our conclusions from several datasets are that (a) deep learning using our structured image features performs best compared to benchmark graph-kernel and classical-feature-based methods, and (b) pure transfer learning works effectively with minimum interference from the user and is robust against small data.
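As a rough illustration of a 2D image embedding of an adjacency matrix, the Python sketch below renders a small subgraph as a grayscale pixel grid. The function name `adjacency_image`, the 0/255 pixel values, and the default sorted vertex ordering are assumptions made for this example; the abstract does not state how vertices are ordered, and the ordering changes how the image looks.

```python
def adjacency_image(nodes, edges, order=None):
    """Render an undirected subgraph as a square grayscale image.

    Pixel (i, j) is 255 if the i-th and j-th vertices are adjacent,
    otherwise 0. `order` fixes the row/column ordering; sorting the
    vertices is an illustrative default, not a method from the source.
    """
    order = list(order) if order is not None else sorted(nodes)
    index = {node: i for i, node in enumerate(order)}
    n = len(order)
    image = [[0] * n for _ in range(n)]
    for u, v in edges:
        i, j = index[u], index[v]
        image[i][j] = 255
        image[j][i] = 255  # undirected graph: keep the image symmetric
    return image


# Toy path graph a - b - c
image = adjacency_image(["a", "b", "c"], [("a", "b"), ("b", "c")])
for row in image:
    print(row)
```

A grid like this can be resized and fed to an image classifier, which is what makes off-the-shelf image models usable for the transfer learning mode the abstract mentions.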
