Koushik Roy

BLPnet: A new DNN model and Bengali OCR engine for Automatic License Plate Recognition

Feb 18, 2022

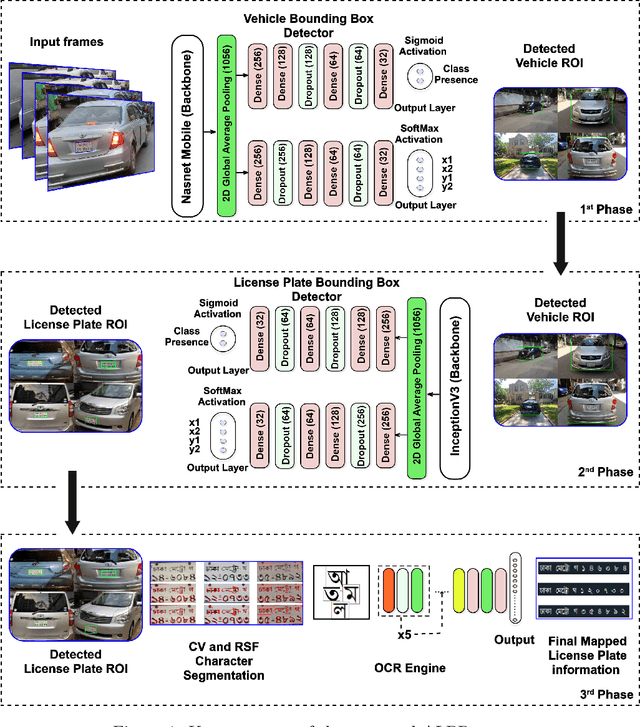

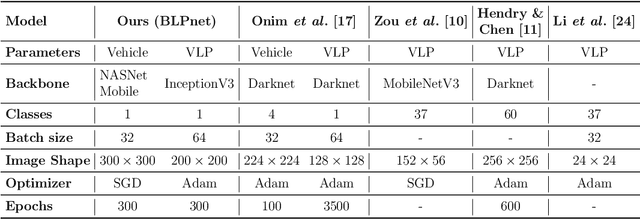

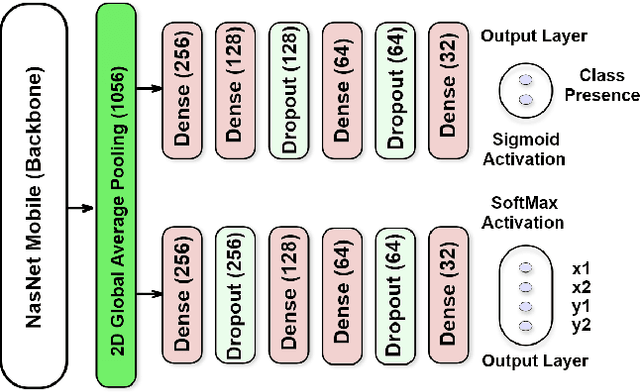

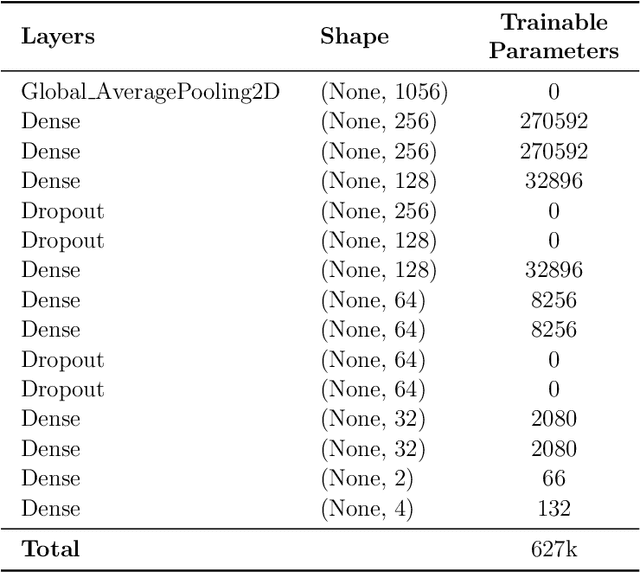

Abstract: Automatic License Plate Recognition (ALPR) systems have received much attention for English license plates. However, although Bengali speakers make up the sixth-largest population in the world, no significant progress on ALPR can be traced in Bengali-speaking countries or states, where traffic management is a more pressing problem and road-safety measures are inadequate. This paper reports a computationally efficient and reasonably accurate ALPR system for Bengali characters, built on a new end-to-end DNN model that we call the Bengali License Plate Network (BLPnet). The model uses a cascaded architecture that detects vehicle regions before the vehicle license plate (VLP), which eliminates false positives and yields higher VLP detection accuracy. In addition, a smaller set of trainable parameters reduces the computational cost, making the system faster and better suited to real-time applications. With a new Convolutional Neural Network (CNN)-based Bengali OCR engine and a word-mapping process, the model is invariant to character rotation and can readily extract, detect, and output the complete license plate number of a vehicle. Fed real-time video footage at 17 frames per second (fps), the model detects vehicles with a Mean Squared Error (MSE) of 0.0152 and achieves a mean license plate character recognition accuracy of 95%. Compared with other models, BLPnet recorded improvements of 5% and 20% over the prominent YOLO-based ALPR model and the Tesseract model in number-plate detection accuracy and time requirement, respectively.
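The cascade idea in this abstract (find vehicle regions first, then accept only plate candidates that fall inside them) can be illustrated with a minimal sketch. This is not the BLPnet implementation: the box format, the `filter_plates` helper, and the centre-inside-box rule are all assumptions chosen for illustration, and the coordinates are made up.

```python
from typing import List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def center(box: Box) -> Tuple[float, float]:
    """Return the centre point of a box."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def contains(outer: Box, point: Tuple[float, float]) -> bool:
    """True if the point lies inside (or on the border of) the box."""
    x, y = point
    return outer[0] <= x <= outer[2] and outer[1] <= y <= outer[3]


def filter_plates(vehicles: List[Box], plates: List[Box]) -> List[Box]:
    """Keep only plate candidates whose centre lies inside some vehicle box.

    Assumed rule for illustration: a plate candidate outside every
    detected vehicle region is treated as a false positive.
    """
    return [p for p in plates if any(contains(v, center(p)) for v in vehicles)]


# Made-up detections: one vehicle, one plate on it, one stray candidate.
vehicles = [(100, 100, 400, 300)]
plates = [(220, 250, 300, 280), (500, 50, 560, 70)]
kept = filter_plates(vehicles, plates)
```

In this toy input, the second plate candidate lies outside the only vehicle box, so it is discarded before any character recognition would run.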

Modelling Lips-State Detection Using CNN for Non-Verbal Communications

Dec 11, 2021

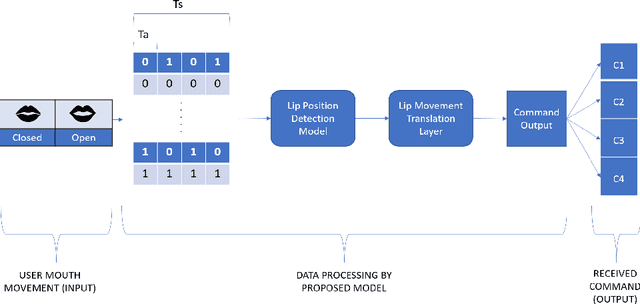

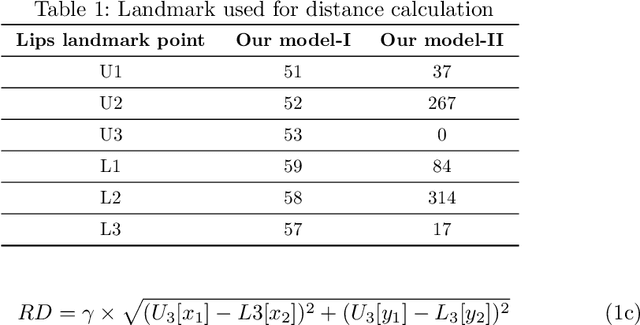

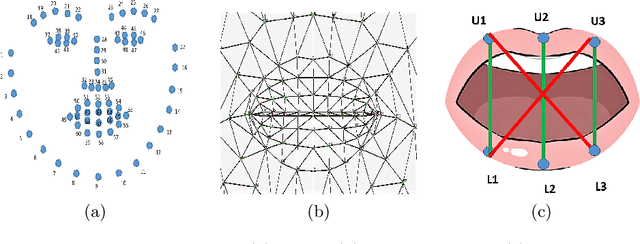

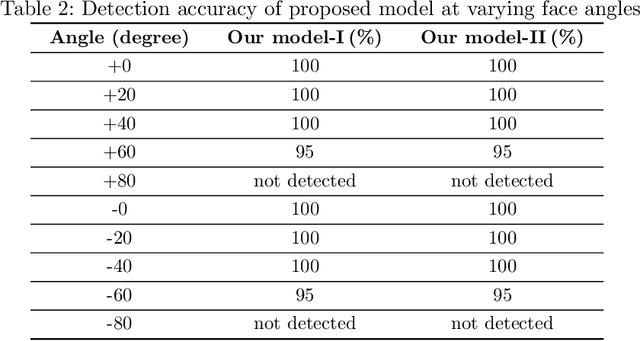

Abstract: Vision-based deep learning models are promising for communication by speech- and hearing-impaired people and for secret communication. While such non-verbal communication has primarily been investigated with hand gestures and facial expressions, no research so far has addressed an interpretation/translation system based on the lips state (i.e., open or closed). In support of this development, this paper reports two new Convolutional Neural Network (CNN) models for lips-state detection. Building upon two prominent lips landmark detectors, DLIB and MediaPipe, we simplify the lips-state model to a set of six key landmarks and use the distances between them for lips-state classification. Both models are developed to count the openings and closings of the lips, so they can classify a symbol from the total count. Varying frame rates, lip movements, and face angles are investigated to determine the effectiveness of the models. Our early experimental results demonstrate that the model with DLIB is relatively slower, at an average of 6 frames per second (FPS), but has a higher average detection accuracy of 95.25%. In contrast, the model with MediaPipe offers faster landmark detection, with an average of 20 FPS and a detection accuracy of 94.4%. Both models could thus effectively interpret the lips state to translate non-verbal semantics into a natural language.
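The distance-based classification and open/close counting described in this abstract can be sketched as follows. This is only a sketch under assumptions: the abstract does not say which six landmarks are used or how distances are thresholded, so the landmark order (two mouth corners, two upper-lip points, two lower-lip points), the `opening_ratio` and `count_openings` helpers, and the threshold of 0.3 are hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple

Point = Tuple[float, float]


def opening_ratio(pts: Sequence[Point]) -> float:
    """Ratio of mean vertical lip gap to mouth width.

    Assumed landmark order (hypothetical): left corner, right corner,
    two upper-lip points, two lower-lip points.
    """
    left, right, up1, up2, low1, low2 = pts
    width = math.dist(left, right)
    gap = (math.dist(up1, low1) + math.dist(up2, low2)) / 2.0
    return gap / width


def count_openings(ratios: Iterable[float], threshold: float = 0.3) -> int:
    """Count closed-to-open transitions over a sequence of frames."""
    count = 0
    was_open = False
    for r in ratios:
        is_open = r > threshold  # assumed threshold, not from the paper
        if is_open and not was_open:
            count += 1
        was_open = is_open
    return count


# Made-up landmark sets for a closed and an open mouth.
closed = [(0, 0), (10, 0), (3, -0.5), (7, -0.5), (3, 0.5), (7, 0.5)]
opened = [(0, 0), (10, 0), (3, -2.0), (7, -2.0), (3, 2.0), (7, 2.0)]

frames = [closed, opened, opened, closed, opened]
total = count_openings(opening_ratio(f) for f in frames)
```

Dividing the gap by the mouth width keeps the ratio roughly independent of how far the face is from the camera, which is one plausible reason to use relative rather than absolute distances.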

Demand Forecasting in Smart Grid Using Long Short-Term Memory

Jul 28, 2021

Abstract: With the rise of smart-metering-enabled grids, demand forecasting in the power sector has become an important part of modern demand management and response systems. Long Short-Term Memory (LSTM) networks show promising results in predicting time series data and can therefore be applied to power load demand in smart grids. In this paper, an LSTM-based neural network model is proposed to forecast power demand. The model is trained on four years of hourly energy and power usage data from a smart grid. After training and prediction, the accuracy of the model is compared against traditional statistical time series algorithms, such as Auto-Regressive (AR) models, to determine its efficiency. The mean absolute percentage error of the proposed LSTM model is found to be 1.22, the lowest among the models compared. The findings indicate that using a neural network to predict power demand reduces the prediction error significantly. Thus, the application of LSTM can enable a more efficient demand response system.
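The error metric reported in this abstract is read here as the standard mean absolute percentage error (MAPE), which averages the absolute error relative to the actual value. A minimal sketch of that calculation follows; the `mape` helper and the demand values are illustrative only and are not taken from the paper's data.

```python
from typing import Sequence


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, expressed in percent.

    Assumes equal-length inputs and no zero values in `actual`.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Made-up hourly demand values and forecasts.
actual_load = [100.0, 200.0, 400.0]
forecast = [110.0, 190.0, 400.0]
error_pct = mape(actual_load, forecast)  # relative errors: 0.10, 0.05, 0.00
```

Because each error is scaled by the actual value, MAPE lets forecasts for low-demand and high-demand hours be compared on the same footing, though it is undefined when an actual value is zero.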
