Kostas Vlachos

Ocean Current-Harnessing Stage-Gated MPC: Monotone Cost Shaping and Speed-to-Fly for Energy-Efficient AUV Navigation

Jan 31, 2026

Abstract:Autonomous Underwater Vehicles (AUVs) are a highly promising technology for ocean exploration and diverse offshore operations, yet their practical deployment is constrained by energy efficiency and endurance. To address this, we propose Current-Harnessing Stage-Gated MPC, which exploits ocean currents via a per-stage scalar that indicates the "helpfulness" of the ocean currents. This scalar is computed along the prediction horizon to gate lightweight cost terms only where the ocean currents truly aid the control goal. The proposed cost terms, which are merged into the objective function, are (i) a Monotone Cost Shaping (MCS) term, a help-gated, non-worsening modification that relaxes along-track position error and provides a bounded translational energy rebate, guaranteeing that the shaped objective is never larger than a set baseline, and (ii) a speed-to-fly (STF) cost component that increases the price of thrust and softly matches ground velocity to the ocean current, enabling near-zero water-relative "gliding". All terms are C1 and integrate as plug-and-play components in MPC designs. Extensive simulations with the BlueROV2 model under realistic ocean current fields show that the proposed approach achieves substantially lower energy consumption than conventional predictive control while maintaining comparable arrival times and constraint satisfaction.
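The abstract does not give the formula for the per-stage helpfulness scalar, so the following is only an illustrative sketch of what a C1 help gate could look like. It assumes the gate is driven by the component of the current velocity along the line to the goal, normalised by a hypothetical reference speed `v_ref` and passed through a cubic smoothstep (which has a continuous first derivative). The names `help_gate`, `smoothstep`, and `v_ref` are assumptions, not taken from the paper.

```python
import math


def smoothstep(x: float) -> float:
    """Cubic smoothstep: 0 for x <= 0, 1 for x >= 1, C1 in between."""
    x = min(1.0, max(0.0, x))
    return x * x * (3.0 - 2.0 * x)


def help_gate(current_xy, pos_xy, goal_xy, v_ref: float = 0.5) -> float:
    """Hypothetical helpfulness scalar in [0, 1] for one prediction stage.

    current_xy: ocean current velocity (m/s) at this stage
    pos_xy:     predicted vehicle position at this stage
    goal_xy:    goal position
    v_ref:      assumed current speed at which the gate saturates to 1
    """
    dx = goal_xy[0] - pos_xy[0]
    dy = goal_xy[1] - pos_xy[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return 0.0  # already at the goal: nothing left to help with
    # Component of the current along the unit vector pointing at the goal.
    along = (current_xy[0] * dx + current_xy[1] * dy) / dist
    # Opposing currents (along <= 0) give a gate of exactly 0.
    return smoothstep(along / v_ref)
```

In a stage cost, such a scalar would multiply the optional terms, so that stages with an opposing or cross current fall back to the unmodified baseline objective.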

Safe Sliding Mode Control for Marine Vessels Using High-Order Control Barrier Functions and Fast Projection

Dec 30, 2025

Abstract:This paper presents a novel safe control framework that integrates Sliding Mode Control (SMC), High-Order Control Barrier Functions (HOCBFs) with state-dependent adaptiveness, and a lightweight projection for collision-free navigation of an over-actuated 3-DOF marine surface vessel subjected to strong environmental disturbances (wind, waves, and current). SMC provides robustness to matched disturbances common in marine operations, while HOCBFs enforce forward invariance of obstacle-avoidance constraints. A fast half-space projection method adjusts the SMC control only when needed, preserving robustness and minimizing chattering. The approach is evaluated on a nonlinear marine platform model that includes added mass, hydrodynamic damping, and full thruster allocation. Simulation results show robust navigation, guaranteed obstacle avoidance, and computational efficiency suitable for real-time embedded use. For small marine robots and surface vessels with limited onboard computational resources, where execution speed and computational efficiency are critical, the SMC-HOCBF framework constitutes a strong candidate for safety-critical control.
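To illustrate the idea of adjusting a nominal control "only when needed", the sketch below shows the standard closed-form Euclidean projection onto a single half-space. It assumes the safety condition at the current state has already been reduced to one linear inequality `a . u >= b` in the control input; how the paper builds `a` and `b` from the HOCBF, and how it handles several constraints or thruster allocation, is not stated in the abstract. The function name and argument layout are hypothetical.

```python
from typing import List, Sequence


def project_onto_half_space(
    u_nom: Sequence[float], a: Sequence[float], b: float, eps: float = 1e-12
) -> List[float]:
    """Return the point closest to u_nom (Euclidean norm) with a . u >= b.

    u_nom: nominal control, e.g. the output of a sliding mode controller
    a, b:  assumed linear safety constraint a . u >= b at the current state
    """
    dot_au = sum(ai * ui for ai, ui in zip(a, u_nom))
    violation = b - dot_au
    if violation <= 0.0:
        # Nominal control is already safe: pass it through unchanged.
        return list(u_nom)
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq < eps:
        raise ValueError("constraint normal is (near) zero; cannot project")
    # Move the minimum distance along the constraint normal to reach the boundary.
    scale = violation / norm_sq
    return [ui + scale * ai for ui, ai in zip(u_nom, a)]
```

Because the safe case returns the nominal input untouched, the robustness properties of the nominal controller are left intact wherever the constraint is inactive, and the correction costs only two dot products.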

Dropout MPC: An Ensemble Neural MPC Approach for Systems with Learned Dynamics

Jun 04, 2024

Abstract:Neural networks are increasingly used in data-driven control as approximate models of the true system dynamics. Model Predictive Control (MPC) adopts this practice, leading to neural MPC strategies. This raises the question of whether the trained neural network has converged and generalized such that the learned model accurately approximates the true dynamic model of the system, making it a reliable choice for model-based control, especially for disturbed and uncertain systems. To address this, we propose Dropout MPC, a novel sampling-based ensemble neural MPC algorithm that employs the Monte-Carlo dropout technique on the learned system model. The closed loop is based on an ensemble of predictive controllers that are used simultaneously at each time-step for trajectory optimization. Each member of the ensemble influences the control input based on a weighted voting scheme; by employing different realizations of the learned system dynamics, neural control thus becomes more reliable by design. An additional strength of the method is that it offers, by design, a way to estimate future uncertainty, leading to cautious control. While the method is aimed in general at uncertain systems with complex dynamics, where models derived from first principles are hard to infer, to showcase the application we utilize data gathered in the laboratory from a real mobile manipulator and employ the proposed algorithm for the navigation of the robot in simulation.
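The abstract does not specify the weighted voting scheme, so the following is a hedged sketch of one possible form. It assumes each ensemble member (one controller per dropout realization of the learned model) proposes a first control input together with its predicted cost, and that votes are weighted by a softmax over negative cost with a hypothetical `temperature` parameter. These choices and the name `weighted_vote` are assumptions for illustration, not the paper's method.

```python
import math
from typing import List, Sequence, Tuple


def weighted_vote(
    controls: Sequence[Sequence[float]],
    costs: Sequence[float],
    temperature: float = 1.0,
) -> Tuple[List[float], List[float]]:
    """Blend the first control inputs proposed by an ensemble of controllers.

    controls:    one proposed control vector per ensemble member
    costs:       predicted cost of each member's plan (lower is better)
    temperature: assumed softmax temperature; smaller values favour the best member
    Returns the blended control and the normalised voting weights.
    """
    c_min = min(costs)  # shift by the minimum for numerical stability
    raw = [math.exp(-(c - c_min) / temperature) for c in costs]
    total = sum(raw)
    weights = [r / total for r in raw]
    dim = len(controls[0])
    blended = [
        sum(w * ctrl[i] for w, ctrl in zip(weights, controls)) for i in range(dim)
    ]
    return blended, weights
```

With equal costs the vote reduces to a plain average of the proposals; the spread of the proposals around that average is one simple proxy for the model uncertainty mentioned in the abstract.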

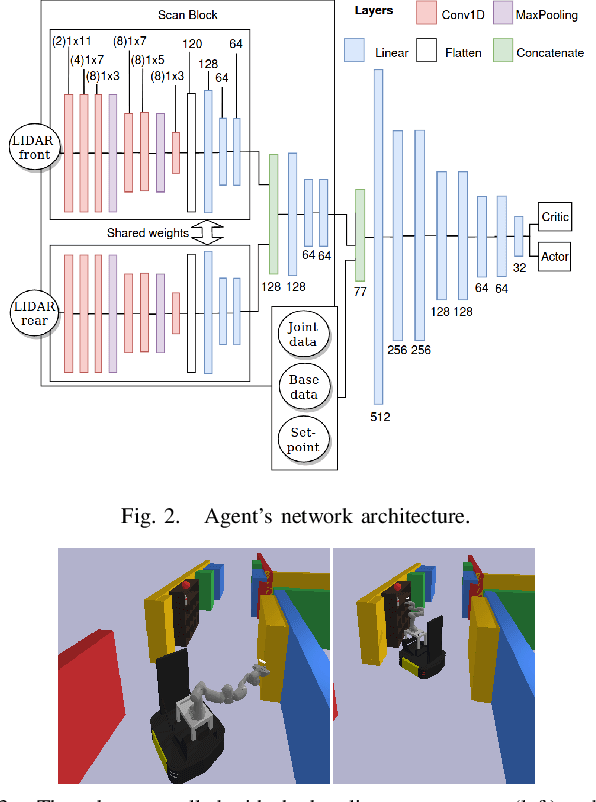

Improved Reinforcement Learning Coordinated Control of a Mobile Manipulator using Joint Clamping

Oct 05, 2021

Abstract:Many robotic path planning problems are continuous, stochastic, and high-dimensional. Coordinating the base and manipulator of a mobile manipulator to control its whole body online is particularly challenging when both self-collision and environment-collision avoidance are required. Reinforcement Learning techniques have the potential to solve such problems through their ability to generalise over environments. We study joint penalties and joint limits of a state-of-the-art mobile manipulator whole-body controller that uses LIDAR sensing for obstacle collision avoidance. We propose directions to improve the reinforcement learning method. Our agent achieves significantly higher success rates than the baseline in a goal-reaching environment, and it can solve environments requiring coordinated whole-body control on which the baseline fails.