Koji Tabata

Research Institute for Electronic Science, Hokkaido University

An Efficient Algorithm for Thresholding Monte Carlo Tree Search

Jan 30, 2026

Abstract:We introduce the Thresholding Monte Carlo Tree Search problem, in which, given a tree $\mathcal{T}$ and a threshold $θ$, a player must answer whether the root node value of $\mathcal{T}$ is at least $θ$ or not. In the given tree, 'MAX' or 'MIN' is labeled on each internal node, and the value of a 'MAX'-labeled ('MIN'-labeled) internal node is the maximum (minimum) of its child values. The value of a leaf node is the mean reward of an unknown distribution, from which the player can sample rewards. For this problem, we develop a $δ$-correct sequential sampling algorithm based on the Track-and-Stop strategy that has asymptotically optimal sample complexity. We show that a ratio-based modification of the D-Tracking arm-pulling strategy leads to a substantial improvement in empirical sample complexity, as well as reducing the per-round computational cost from linear to logarithmic in the number of arms.
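The tree value defined in this abstract can be illustrated with a minimal sketch. It assumes the leaf means are known exactly, which is not the case in the actual problem, where they must be estimated by sampling. The `Node` class and the function names are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    # "MAX" or "MIN" for internal nodes; None for leaves (hypothetical layout).
    label: Optional[str] = None
    # Mean reward of a leaf; assumed known here purely for illustration.
    mean: Optional[float] = None
    children: List["Node"] = field(default_factory=list)


def node_value(node: Node) -> float:
    """Value of a node: leaf mean, or max/min over the child values."""
    if not node.children:
        return node.mean
    values = [node_value(child) for child in node.children]
    return max(values) if node.label == "MAX" else min(values)


def root_at_least(root: Node, theta: float) -> bool:
    """Answer the thresholding question for a tree with known leaf means."""
    return node_value(root) >= theta


tree = Node("MAX", children=[
    Node("MIN", children=[Node(mean=0.7), Node(mean=0.4)]),
    Node("MIN", children=[Node(mean=0.6), Node(mean=0.5)]),
])
print(node_value(tree))          # max(min(0.7, 0.4), min(0.6, 0.5))
print(root_at_least(tree, 0.5))
```

In the sampling setting the same recursion would be applied to estimates rather than true means, which is why a stopping rule with a confidence guarantee is needed.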

Gaussian Process Classification Bandits

Dec 26, 2022

Abstract:Classification bandits are multi-armed bandit problems whose task is to classify a given set of arms as either positive or negative, depending on whether the fraction of arms with expected reward at least h is not less than w, for given thresholds h and w. We study a special classification bandit problem in which arms correspond to points x in d-dimensional real space, with expected rewards f(x) generated according to a Gaussian process prior. We develop a framework algorithm for the problem that accepts various arm selection policies, and propose policies called FCB and FTSV. We show a smaller sample complexity upper bound for FCB than that of the existing algorithm for level set estimation, in which whether f(x) is at least h must be decided for every arm x. Arm selection policies that depend on an estimated rate of arms with rewards of at least h are also proposed and shown to improve empirical sample complexity. According to our experimental results, the rate-estimation versions of FCB and FTSV, together with that of the popular active learning policy that selects the point with the maximum variance, outperform other policies on synthetic functions, and the rate-estimation version of FTSV is also the best performer on our real-world dataset.
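The classification rule stated at the start of this abstract can be sketched as follows. This is only the decision rule evaluated on known expected rewards; the function name is hypothetical, and the paper's algorithms must instead reach the same decision from noisy samples.

```python
from typing import Sequence


def classify_arm_set(expected_rewards: Sequence[float], h: float, w: float) -> str:
    """Label a set of arms positive if the fraction with reward >= h is >= w."""
    if not expected_rewards:
        raise ValueError("the arm set must not be empty")
    rate = sum(1 for r in expected_rewards if r >= h) / len(expected_rewards)
    return "positive" if rate >= w else "negative"


# Two of the four arms reach h = 0.5, so the rate is 0.5.
print(classify_arm_set([0.9, 0.2, 0.8, 0.1], h=0.5, w=0.5))
print(classify_arm_set([0.9, 0.2, 0.8, 0.1], h=0.5, w=0.6))
```

Because only the rate matters, the set can sometimes be classified without deciding every individual arm, which is the difference from level set estimation noted in the abstract.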

A Bad Arm Existence Checking Problem

Jan 31, 2019

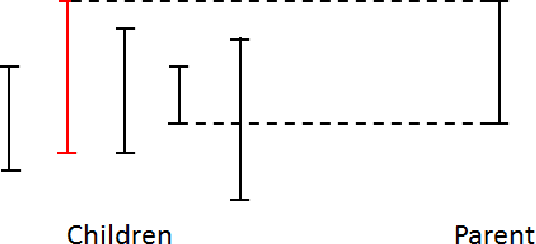

Abstract:We study a bad arm existence checking problem in which a player's task is to judge whether a positive arm exists or not among given K arms by drawing arms as few times as possible. Here, an arm is positive if the expected loss suffered by drawing it is at least a given threshold. This problem is a formalization of the diagnosis of disease or machine failure. An interesting structure of this problem is the asymmetry between the roles of positive and negative (non-positive) arms: finding one positive arm is enough to judge existence, while all the arms must be judged negative to conclude non-existence. We propose an algorithm with an arm selection policy (a policy to determine the next arm to draw) and a stopping condition (a condition to stop drawing arms) that utilize this asymmetric problem structure, and prove its effectiveness theoretically and empirically.
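The asymmetry described in this abstract can be illustrated with a generic confidence-bound stopping check. This is a sketch under assumptions, not the paper's stopping condition: it supposes each arm has a lower and an upper confidence bound on its expected loss, and the function name and return labels are hypothetical.

```python
from typing import Optional, Sequence


def check_stop(lower: Sequence[float], upper: Sequence[float],
               threshold: float) -> Optional[str]:
    """Return a verdict if one is justified by the bounds, else None."""
    # One arm confidently at or above the threshold is enough for existence.
    if any(lo >= threshold for lo in lower):
        return "positive arm exists"
    # Non-existence requires every arm to be confidently below the threshold.
    if all(up < threshold for up in upper):
        return "no positive arm"
    return None  # keep drawing arms


print(check_stop([0.1, 0.6], [0.4, 0.9], threshold=0.5))
print(check_stop([0.1, 0.2], [0.3, 0.4], threshold=0.5))
print(check_stop([0.1, 0.2], [0.3, 0.7], threshold=0.5))
```

The `any` versus `all` contrast is the asymmetry: a single arm can end the search in the positive case, whereas the negative case depends on every arm.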

Feature selection as Monte-Carlo Search in Growing Single Rooted Directed Acyclic Graph by Best Leaf Identification

Nov 19, 2018

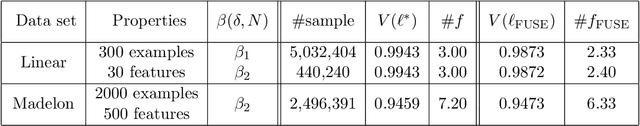

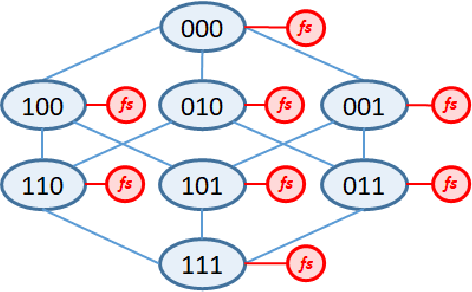

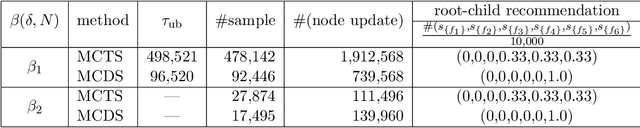

Abstract:Monte Carlo tree search (MCTS) has received considerable interest due to its spectacular success in the difficult problem of computer Go, and has also proved beneficial in a range of other domains. A major issue that has received little attention in the MCTS literature is the fact that, in most games, different actions can lead to the same state, which may lead to a high degree of redundancy in the tree representation and unnecessary additional computational cost. We extend MCTS to single rooted directed acyclic graphs (SR-DAGs), and consider the Best Arm Identification (BAI) and the Best Leaf Identification (BLI) problems for an expanding SR-DAG of arbitrary depth. We propose algorithms that are (epsilon, delta)-correct in the fixed confidence setting, and prove an asymptotic upper bound on the sample complexity of our BAI algorithm. As a major application of our BLI algorithm, a novel approach to Feature Selection is proposed by representing the feature set space as an SR-DAG and repeatedly evaluating feature subsets until a candidate for the best leaf is returned; a proof of concept is shown on benchmark data sets.
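The redundancy that motivates the DAG representation can be seen in a small counting sketch. It assumes, purely for illustration, that a node is a feature subset and that each action adds one feature; how the paper actually constructs and expands its SR-DAG is not specified here, and both function names are hypothetical.

```python
from typing import FrozenSet, Sequence, Set, Tuple


def count_dag_nodes(features: Sequence[str]) -> int:
    """Count distinct states when paths reaching the same subset are merged."""
    root: FrozenSet[str] = frozenset()
    seen: Set[FrozenSet[str]] = {root}
    frontier = [root]
    while frontier:
        subset = frontier.pop()
        for f in features:
            if f not in subset:
                child = subset | {f}
                if child not in seen:
                    seen.add(child)
                    frontier.append(child)
    return len(seen)


def count_tree_nodes(features: Sequence[str]) -> int:
    """Count nodes when every ordering of added features is a separate path."""
    def expand(path: Tuple[str, ...]) -> int:
        return 1 + sum(expand(path + (f,)) for f in features if f not in path)
    return expand(())


features = ["a", "b", "c"]
print(count_dag_nodes(features))   # one node per subset
print(count_tree_nodes(features))  # one node per ordered sequence
```

With three features the merged graph has one node per subset, while the tree duplicates every subset once per ordering in which it can be reached.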
