Koen Bertels

QKSA: Quantum Knowledge Seeking Agent -- resource-optimized reinforcement learning using quantum process tomography

Dec 07, 2021

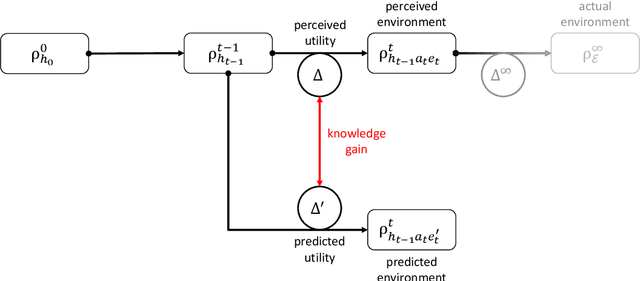

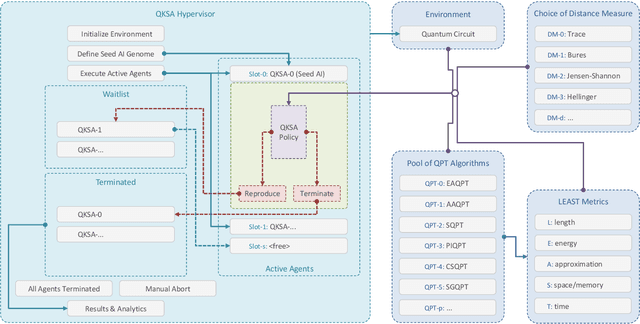

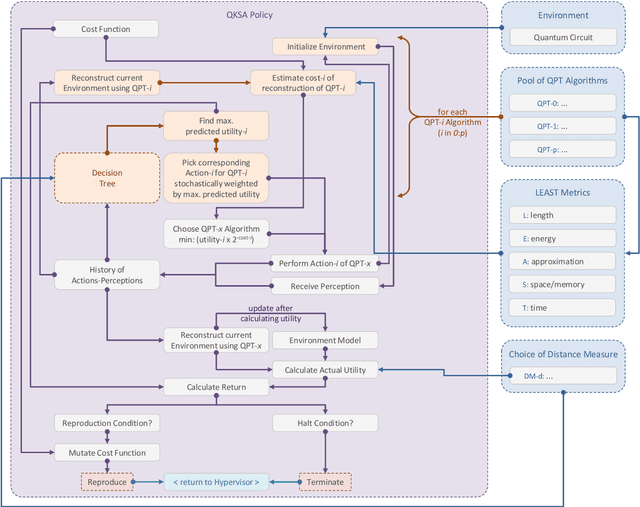

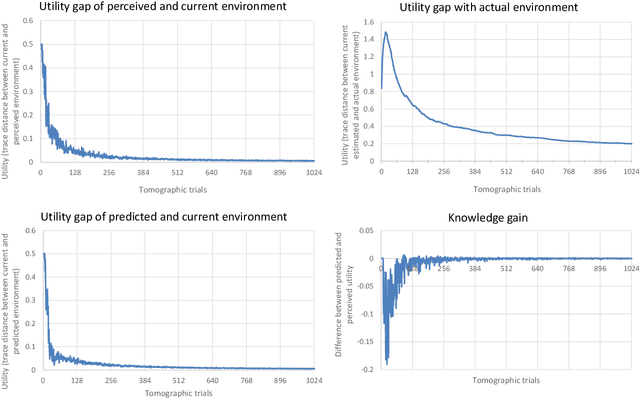

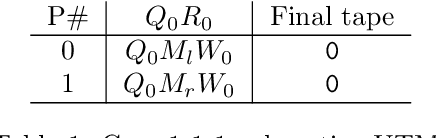

Abstract: In this research, we extend the universal reinforcement learning (URL) agent models of artificial general intelligence to quantum environments. The utility function of a classical exploratory stochastic Knowledge Seeking Agent, KL-KSA, is generalized to distance measures from quantum information theory on density matrices. Quantum process tomography (QPT) algorithms form the tractable subset of programs for modeling environmental dynamics. The optimal QPT policy is selected using a mutable cost function that combines algorithmic complexity with computational resource complexity. Instead of Turing machines, we estimate the cost metrics on a high-level language to allow realistic experimentation. The entire agent design is encapsulated in a self-replicating quine, which mutates the cost function based on the predictive value of the optimal-policy selection scheme. Thus, multiple agents with Pareto-optimal QPT policies evolve using genetic programming, mimicking the development of physical theories, each with different resource trade-offs. This formal framework is termed the Quantum Knowledge Seeking Agent (QKSA). Despite their importance, few quantum reinforcement learning models exist, in contrast to the current thrust in quantum machine learning. QKSA is the first proposal for a framework that resembles the classical URL models. Similar to how AIXI-tl is a resource-bounded active version of Solomonoff universal induction, QKSA is a resource-bounded participatory observer framework for the recently proposed algorithmic information-based reconstruction of quantum mechanics. QKSA can be applied to simulating and studying aspects of quantum information theory. Specifically, we demonstrate that it can be used to accelerate quantum variational algorithms that include tomographic reconstruction as an integral subroutine.
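The abstract above refers to distance measures from quantum information theory on density matrices without naming a specific one. As a minimal illustration, the sketch below computes the trace distance, one standard measure of this kind. Choosing the trace distance, and the helper names `density_matrix` and `trace_distance`, are assumptions made for illustration and are not taken from the QKSA paper.

```python
import numpy as np


def density_matrix(state):
    """Return the density matrix |psi><psi| of a (possibly unnormalized) pure state."""
    psi = np.asarray(state, dtype=complex)
    psi = psi / np.linalg.norm(psi)
    return np.outer(psi, psi.conj())


def trace_distance(rho, sigma):
    """Trace distance T(rho, sigma) = 0.5 * sum of |eigenvalues of (rho - sigma)|.

    Illustrative choice only: the source does not state which distance it uses.
    """
    diff = np.asarray(rho, dtype=complex) - np.asarray(sigma, dtype=complex)
    # rho - sigma is Hermitian, so eigvalsh applies and returns real eigenvalues.
    eigenvalues = np.linalg.eigvalsh(diff)
    return 0.5 * float(np.sum(np.abs(eigenvalues)))


rho_zero = density_matrix([1, 0])   # |0>
rho_one = density_matrix([0, 1])    # |1>
rho_plus = density_matrix([1, 1])   # |+> after normalization

d_orthogonal = trace_distance(rho_zero, rho_one)
d_overlap = trace_distance(rho_zero, rho_plus)
d_same = trace_distance(rho_zero, rho_zero)
```

Orthogonal states are at the maximum distance of 1, identical states are at distance 0, and for two pure states the value equals sqrt(1 - |<psi|phi>|^2).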

Quantum Accelerated Estimation of Algorithmic Information

Jun 01, 2020

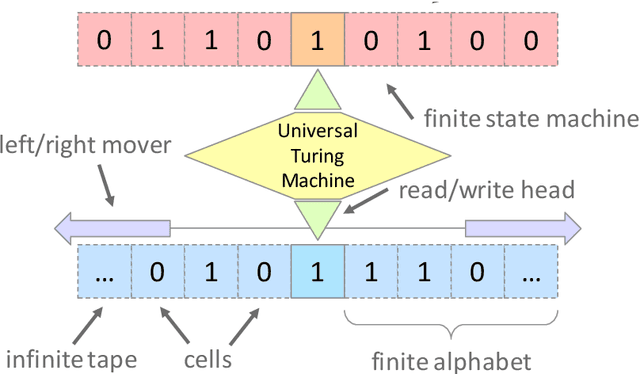

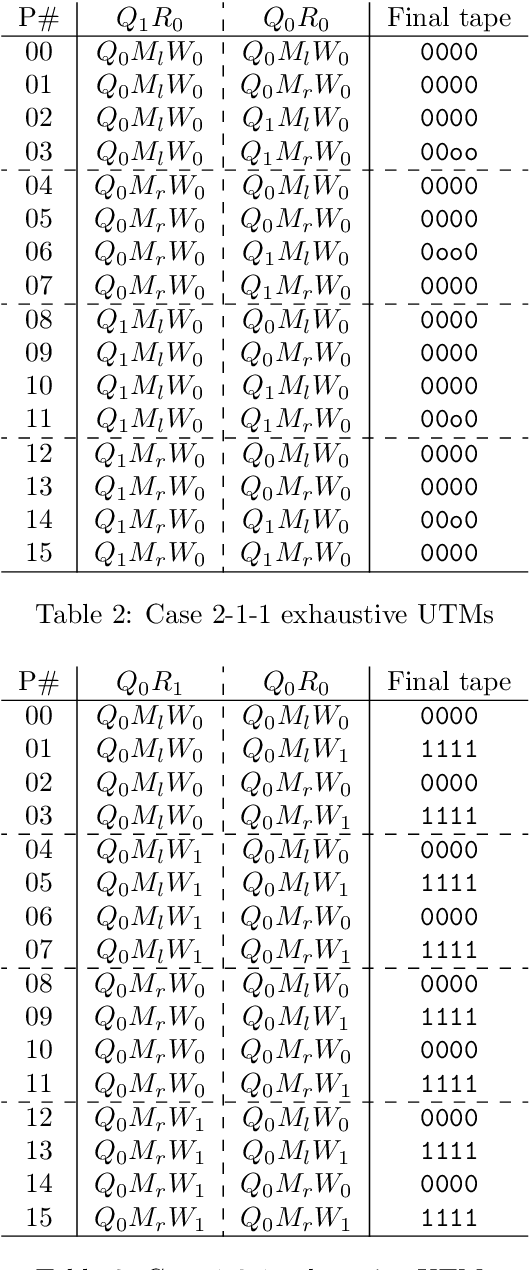

Abstract: In this research we present a quantum circuit for estimating algorithmic information metrics like the universal prior distribution. This accelerates inferring algorithmic structure in data for discovering causal generative models. The computation model is restricted in time and space resources so that approximating the target metrics becomes computable. A classical exhaustive enumeration is shown for a few examples. The precise quantum circuit design that allows executing a superposition of automata is presented. As a use case, an application framework for experimenting on DNA sequences for meta-biology is proposed. To our knowledge, this is the first time that approximating algorithmic information has been implemented for quantum computation. Our implementation in the OpenQL quantum programming language and on the QX Simulator is copyleft and can be found at https://github.com/Advanced-Research-Centre/QuBio.

Evaluation of Parameterized Quantum Circuits: on the design, and the relation between classification accuracy, expressibility and entangling capability

Mar 22, 2020

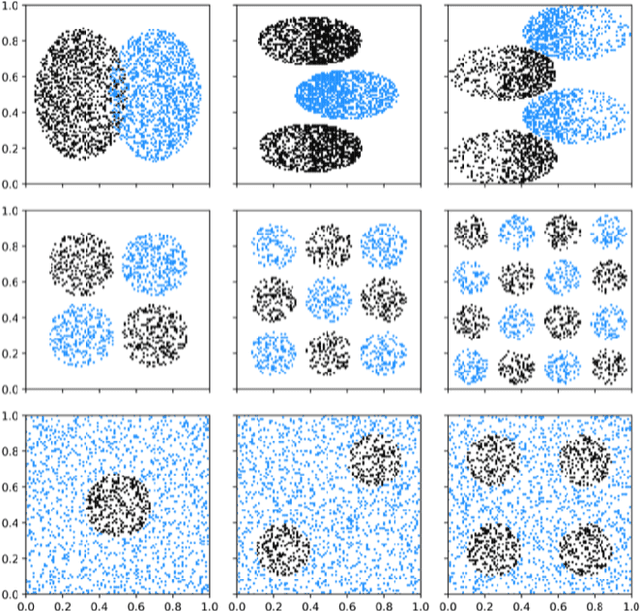

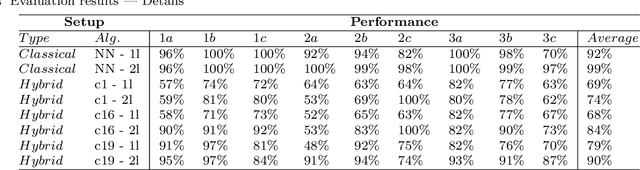

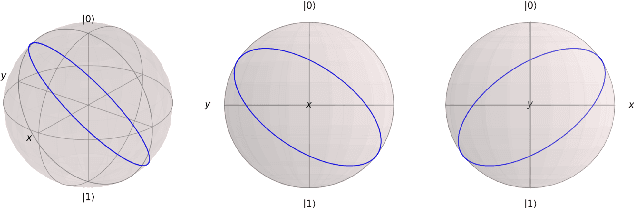

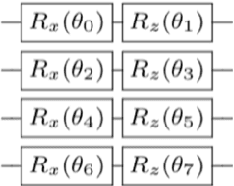

Abstract: Quantum computers promise improvements in terms of both computational speedup and increased accuracy. Relevant areas are optimization, chemistry and machine learning, of which we will focus on the latter. Much of the prior art focuses on determining computational speedup, but how do we know if a particular quantum circuit shows promise for achieving high classification accuracy? Previous work by Sim et al. proposed descriptors to characterize and compare Parameterized Quantum Circuits. In this work, we investigate any potential relation between the classification accuracy and two of these descriptors, namely expressibility and entangling capability. We first investigate different types of gates in quantum circuits and the changes they induce in the decision boundary. From this, we propose design criteria for constructing circuits. We also numerically compare the classification performance of various quantum circuits with their quantified measures of expressibility and entangling capability, as derived in previous work. From this, we conclude that the common approach of layering combinations of rotational gates and conditional rotational gates provides the best accuracy. We also show that, for our experiments on a limited number of circuits, a coarse-grained relationship exists between entangling capability and classification accuracy, as well as a more fine-grained correlation between expressibility and classification accuracy. Future research will be needed to quantify this relation.
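The entangling capability descriptor of Sim et al. is, to the best of our understanding, based on the Meyer-Wallach measure Q averaged over states sampled from a circuit. The sketch below computes Q for a single state vector only; the function name `meyer_wallach` and the example states are illustrative assumptions and do not come from the paper's code.

```python
import numpy as np


def meyer_wallach(state):
    """Meyer-Wallach measure Q = 2 * (1 - mean_k Tr(rho_k^2)) of a pure n-qubit state.

    rho_k is the reduced density matrix of qubit k. Q is 0 for product
    states and 1 for states such as Bell or GHZ states.
    """
    psi = np.asarray(state, dtype=complex)
    psi = psi / np.linalg.norm(psi)
    n = psi.size.bit_length() - 1
    if n < 1 or 2 ** n != psi.size:
        raise ValueError("state length must be a power of two and at least 2")

    tensor = psi.reshape([2] * n)
    total_purity = 0.0
    for k in range(n):
        # Bring qubit k to the front and flatten the remaining qubits.
        m = np.moveaxis(tensor, k, 0).reshape(2, -1)
        rho_k = m @ m.conj().T          # reduced state of qubit k
        total_purity += float(np.real(np.trace(rho_k @ rho_k)))
    return 2.0 * (1.0 - total_purity / n)


q_product = meyer_wallach([1, 0, 0, 0])               # |00>
q_bell = meyer_wallach([1, 0, 0, 1])                  # (|00> + |11>) / sqrt(2)
q_ghz = meyer_wallach([1, 0, 0, 0, 0, 0, 0, 1])       # 3-qubit GHZ state
```

To approximate a circuit-level entangling capability in this spirit, one would average `meyer_wallach` over output states obtained from many randomly sampled parameter settings; that sampling step is omitted here.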

Integration and Evaluation of Quantum Accelerators for Data-Driven User Functions

Jan 25, 2020

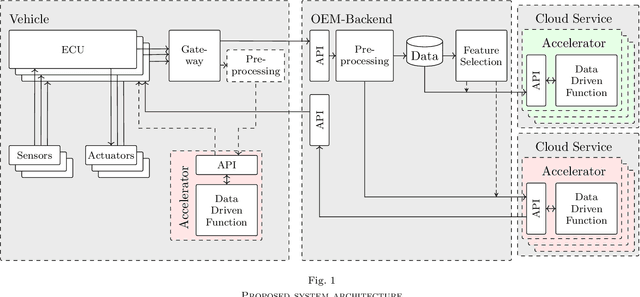

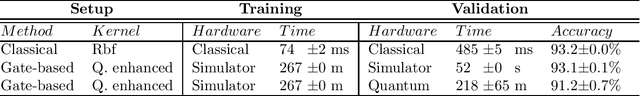

Abstract: Quantum computers hold great promise for accelerating computationally challenging algorithms on noisy intermediate-scale quantum (NISQ) devices in the upcoming years. Much current research is directed at algorithmic work on artificial data that is disconnected from live systems, such as optimization of systems or training of learning algorithms. In this paper we investigate the integration of quantum systems into industry-grade system architectures and propose a system architecture for the integration of quantum accelerators. To evaluate the proposed architecture, we implemented various algorithms including a classical system, a gate-based quantum accelerator and a quantum annealer. This algorithm automates user habits using data-driven functions trained on real-world data. This also includes an evaluation of the quantum-enhanced kernel, which previously was only evaluated on artificial data. In our evaluation, we showed that the quantum-enhanced kernel performs at least as well as a classical state-of-the-art kernel. We also showed only a small reduction in accuracy, with latency numbers within acceptable bounds, when running on the gate-based IBM quantum accelerator. We therefore conclude that it is feasible to integrate NISQ-era devices into industry-grade system architectures in preparation for future hardware improvements.
