Kleber Stangherlin

x-RAGE: eXtended Reality -- Action & Gesture Events Dataset

Oct 25, 2024

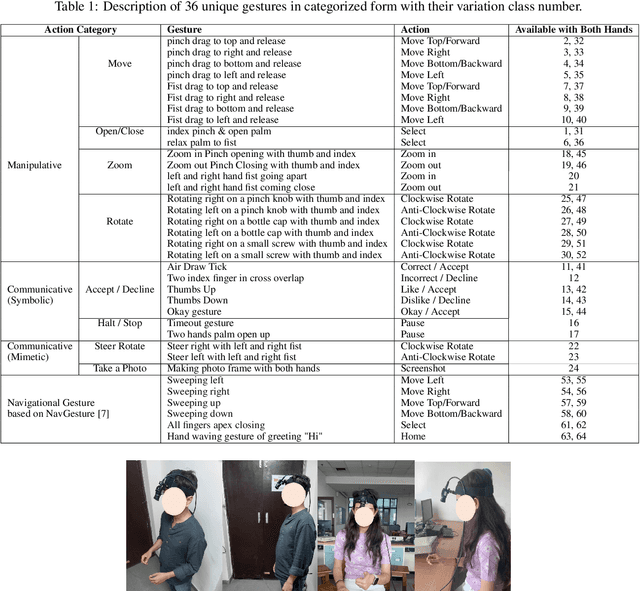

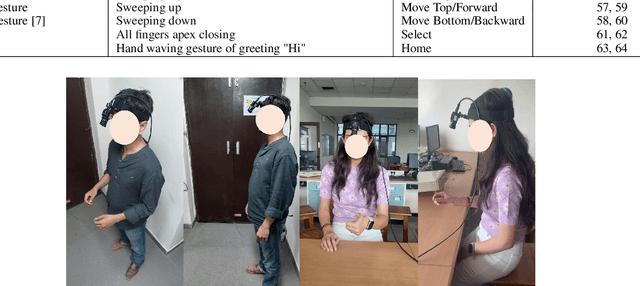

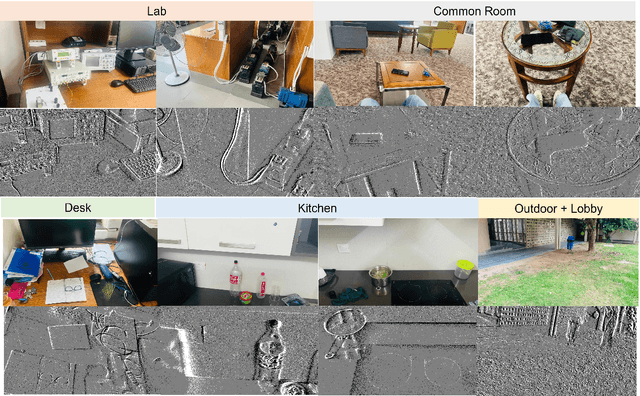

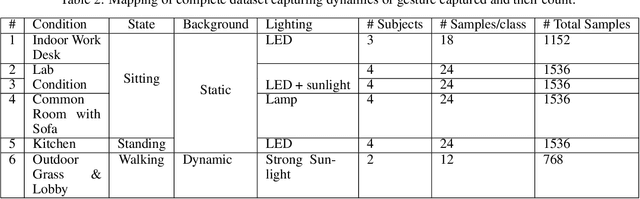

Abstract: With the emergence of the Metaverse and the focus on wearable devices in recent years, gesture-based human-computer interaction has gained significance. To enable gesture recognition for VR/AR headsets and glasses, several datasets focusing on egocentric (i.e., first-person) views have emerged in recent years. However, standard frame-based vision is limited by its data bandwidth requirements and by its ability to capture fast motions. To overcome these limitations, bio-inspired approaches such as event-based cameras present an attractive alternative. In this work, we present the first event-camera-based egocentric gesture dataset for enabling neuromorphic, low-power solutions for XR-centric gesture recognition. The dataset is publicly available at the following URL: https://gitlab.com/NVM_IITD_Research/xrage.

Hardware Architecture for Inplace Compute of Burrows-Wheeler Transform in a Single Iteration

Sep 05, 2022

Abstract: The Burrows-Wheeler transform (BWT) is used by the bzip2 family of compressors. In this paper, we present a hardware architecture that implements an in-place algorithm to compute the BWT. Our design has no explicit storage for the suffix array or the output array. The performance of our implementation is fixed and does not depend on the content of the input string. We use a register-based character buffer in a scan-chain configuration, such that the BWT is computed from right to left as characters are loaded. A new character is loaded every six cycles, and a new output character from the previously computed block is produced at the same rate. Our FPGA implementation does not use block RAM instances and achieves throughputs of 66, 35, 18, and 15 MB/s for block sizes of 128 B, 1 kB, 4 kB, and 8 kB, respectively. We also report results for an ASIC implementation in 65 nm CMOS that achieves 161 MB/s with a block size of 128 B.
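
For readers unfamiliar with the transform itself, the following is a minimal software sketch of a block-wise BWT and its inverse. It is not the in-place hardware algorithm described in the abstract: it sorts cyclic rotations explicitly, which is exactly the kind of storage the hardware design avoids. The function names `bwt` and `inverse_bwt`, and the choice of cyclic rotations with a primary index (as in bzip2-style compressors), are assumptions made for illustration.

```python
def bwt(block: bytes) -> tuple[bytes, int]:
    """Naive reference BWT over cyclic rotations (illustrative only).

    Returns the last column of the sorted rotation matrix and the
    row index at which the original block appears (primary index).
    """
    n = len(block)
    if n == 0:
        return b"", 0
    doubled = block + block  # rotation i is doubled[i:i + n]
    # sorted() is stable, so equal rotations keep their original order
    order = sorted(range(n), key=lambda i: doubled[i:i + n])
    last = bytes(doubled[i + n - 1] for i in order)
    return last, order.index(0)


def inverse_bwt(last: bytes, primary: int) -> bytes:
    """Invert the transform using a stable sort of the last column."""
    n = len(last)
    if n == 0:
        return b""
    # nxt[j] is the row whose rotation is row j's rotation shifted left by one
    nxt = sorted(range(n), key=lambda i: last[i])
    out = bytearray()
    row = primary
    for _ in range(n):
        row = nxt[row]
        out.append(last[row])
    return bytes(out)
```

For example, `bwt(b"banana")` groups the repeated characters together in its output, and `inverse_bwt` recovers the original block from that output and the primary index. A software reference of this kind is mainly useful for checking the output of a hardware implementation block by block.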
