Dwijay Bane

x-RAGE: eXtended Reality -- Action & Gesture Events Dataset

Oct 25, 2024

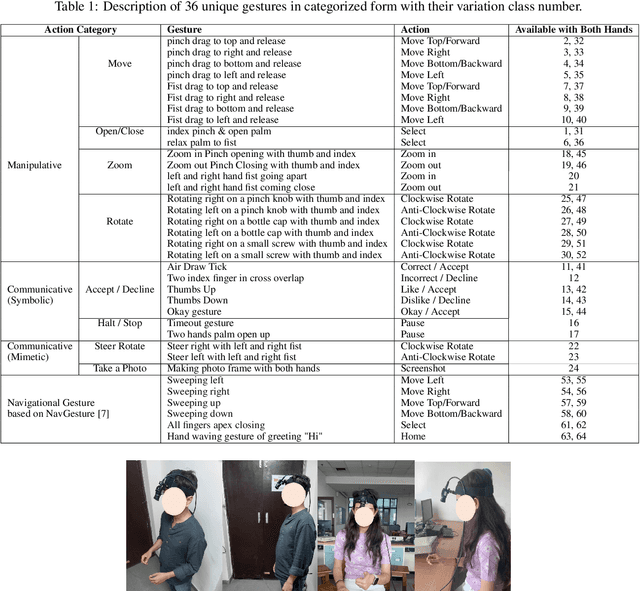

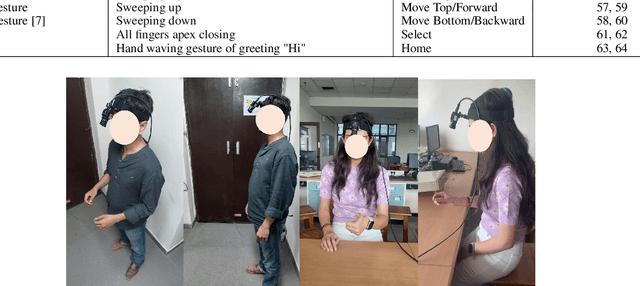

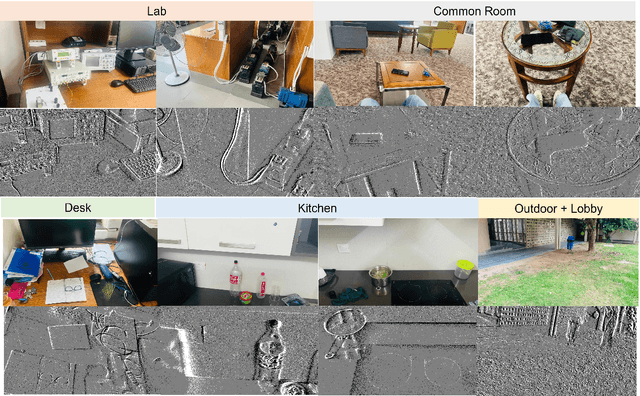

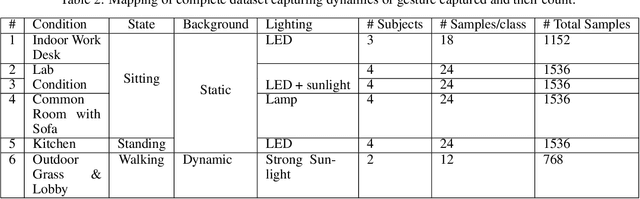

Abstract: With the emergence of the Metaverse and the recent focus on wearable devices, gesture-based human-computer interaction has gained significance. To enable gesture recognition for VR/AR headsets and glasses, several datasets focusing on the egocentric, i.e. first-person, view have emerged in recent years. However, standard frame-based vision suffers from limitations in data bandwidth requirements as well as in its ability to capture fast motions. To overcome these limitations, bio-inspired approaches such as event-based cameras present an attractive alternative. In this work, we present the first event-camera-based egocentric gesture dataset for enabling neuromorphic, low-power solutions for XR-centric gesture recognition. The dataset has been made publicly available at the following URL: https://gitlab.com/NVM_IITD_Research/xrage.

Non-Invasive Qualitative Vibration Analysis using Event Camera

Oct 18, 2024

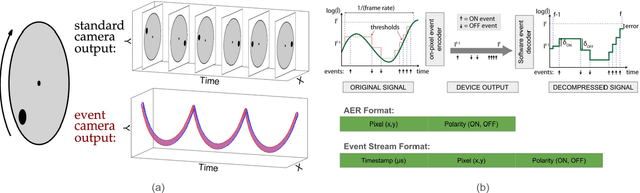

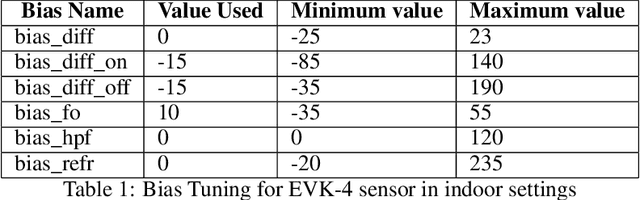

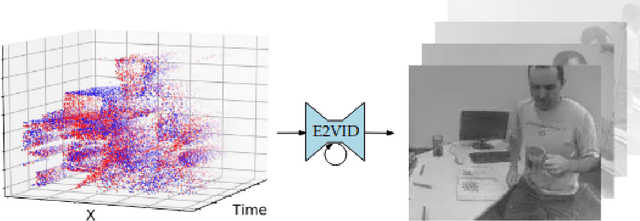

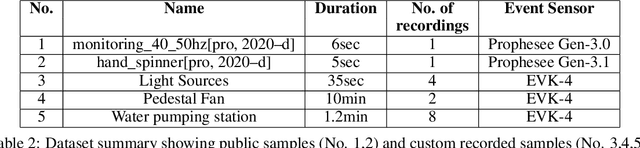

Abstract: This technical report investigates the application of event-based vision sensors in non-invasive qualitative vibration analysis, with a particular focus on frequency measurement and motion magnification. Event cameras, with their high temporal resolution and dynamic range, offer promising capabilities for real-time structural assessment and subtle motion analysis. Our study employs cutting-edge event-based vision techniques to explore real-world scenarios in frequency measurement for vibration analysis and in intensity reconstruction for motion magnification. In the former, event-based sensors demonstrated significant potential for real-time structural assessment. However, our work in motion magnification revealed considerable challenges, particularly in scenarios involving stationary cameras and isolated motion.
