Kirk Saunders

Smooth Trajectory Collision Avoidance through Deep Reinforcement Learning

Oct 12, 2022

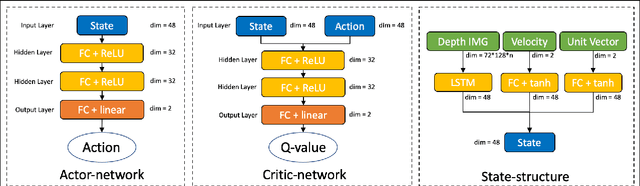

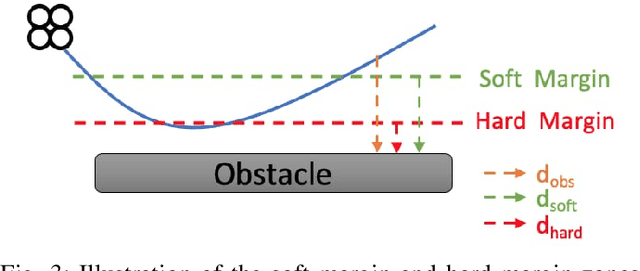

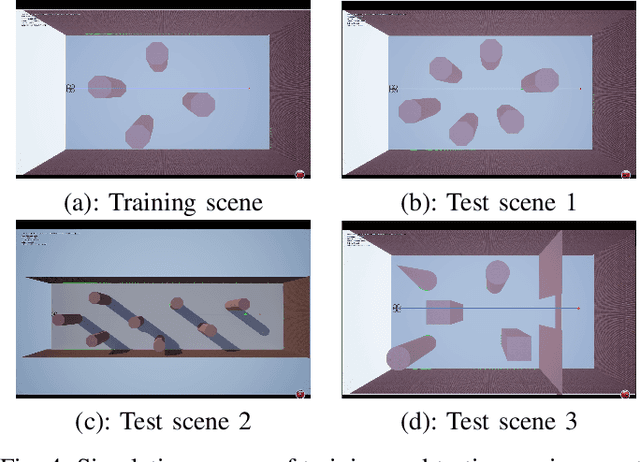

Abstract:Collision avoidance is a crucial task in vision-guided autonomous navigation. Solutions based on deep reinforcement learning (DRL) has become increasingly popular. In this work, we proposed several novel agent state and reward function designs to tackle two critical issues in DRL-based navigation solutions: 1) smoothness of the trained flight trajectories; and 2) model generalization to handle unseen environments. Formulated under a DRL framework, our model relies on margin reward and smoothness constraints to ensure UAVs fly smoothly while greatly reducing the chance of collision. The proposed smoothness reward minimizes a combination of first-order and second-order derivatives of flight trajectories, which can also drive the points to be evenly distributed, leading to stable flight speed. To enhance the agent's capability of handling new unseen environments, two practical setups are proposed to improve the invariance of both the state and reward function when deploying in different scenes. Experiments demonstrate the effectiveness of our overall design and individual components.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge