Keyang Ye

TR-Gaussians: High-fidelity Real-time Rendering of Planar Transmission and Reflection with 3D Gaussian Splatting

Nov 17, 2025

Abstract: We propose Transmission-Reflection Gaussians (TR-Gaussians), a novel 3D-Gaussian-based representation for high-fidelity rendering of planar transmission and reflection, which are ubiquitous in indoor scenes. Our method combines 3D Gaussians with learnable reflection planes that explicitly model the glass planes with view-dependent reflectance strengths. Real scenes and transmission components are modeled by 3D Gaussians and the reflection components are modeled by the mirrored Gaussians with respect to the reflection plane. The transmission and reflection components are blended according to a Fresnel-based, view-dependent weighting scheme, allowing for faithful synthesis of complex appearance effects under varying viewpoints. To effectively optimize TR-Gaussians, we develop a multi-stage optimization framework incorporating color and geometry constraints and an opacity perturbation mechanism. Experiments on different datasets demonstrate that TR-Gaussians achieve real-time, high-fidelity novel view synthesis in scenes with planar transmission and reflection, and outperform state-of-the-art approaches both quantitatively and qualitatively.
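The mirroring and Fresnel-based blending described in this abstract can be illustrated with a minimal sketch. The abstract does not give the actual weighting formula, so this sketch assumes Schlick's approximation for the Fresnel term and a convex combination of the two components; the function names, the `f0 = 0.04` default, and the plane parameterization are illustrative assumptions, not the authors' implementation.

```python
def mirror_point(point, normal, offset):
    """Mirror `point` across the plane {x : normal . x + offset = 0}.

    `normal` is assumed to be unit length.
    """
    signed_dist = sum(p * n for p, n in zip(point, normal)) + offset
    return tuple(p - 2.0 * signed_dist * n for p, n in zip(point, normal))


def schlick_fresnel(cos_theta, f0=0.04):
    """Schlick's approximation of Fresnel reflectance.

    f0 = 0.04 is a commonly used normal-incidence value for glass
    (an assumption here, not a value taken from the paper).
    """
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def blend(transmission, reflection, cos_theta, f0=0.04):
    """Assumed convex blend of transmitted and reflected RGB colors."""
    w = schlick_fresnel(cos_theta, f0)
    return tuple((1.0 - w) * t + w * r for t, r in zip(transmission, reflection))


# A Gaussian center one unit in front of the plane z = 0 mirrors to z = -1.
mirrored = mirror_point((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 0.0)
```

At grazing angles (`cos_theta` near 0) the weight approaches 1 and the reflection dominates, which matches the everyday appearance of glass seen obliquely.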

When Gaussian Meets Surfel: Ultra-fast High-fidelity Radiance Field Rendering

Apr 24, 2025

Abstract: We introduce Gaussian-enhanced Surfels (GESs), a bi-scale representation for radiance field rendering, wherein a set of 2D opaque surfels with view-dependent colors represent the coarse-scale geometry and appearance of scenes, and a few 3D Gaussians surrounding the surfels supplement fine-scale appearance details. The rendering with GESs consists of two passes: surfels are first rasterized through a standard graphics pipeline to produce depth and color maps, and then Gaussians are splatted with depth testing and order-independent color accumulation on each pixel. The optimization of GESs from multi-view images is performed through an elaborate coarse-to-fine procedure, faithfully capturing rich scene appearance. The entirely sorting-free rendering of GESs not only achieves very fast rates, but also produces view-consistent images, successfully avoiding popping artifacts under view changes. The basic GES representation can be easily extended to achieve anti-aliasing in rendering (Mip-GES), boosted rendering speeds (Speedy-GES) and compact storage (Compact-GES), and to reconstruct better scene geometries by replacing 3D Gaussians with 2D Gaussians (2D-GES). Experimental results show that GESs advance the state of the art as a compelling representation for ultra-fast high-fidelity radiance field rendering.
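The two-pass idea in this abstract (an opaque surfel pass followed by depth-tested, sorting-free Gaussian accumulation) can be sketched for a single pixel as follows. The abstract does not specify the accumulation formula, so the normalized weighted sum used here is purely an assumption chosen because it is commutative; `shade_pixel` and its argument layout are hypothetical.

```python
def shade_pixel(surfel_depth, surfel_color, gaussians):
    """Combine one pixel's surfel sample with Gaussian splats, without sorting.

    surfel_depth, surfel_color: output of the first (surfel rasterization) pass.
    gaussians: iterable of (depth, weight, rgb) splats covering this pixel.
    The normalized weighted sum below is an assumed blending rule.
    """
    acc = [float(c) for c in surfel_color]  # the surfel contributes with weight 1
    total_weight = 1.0
    for depth, weight, rgb in gaussians:
        if depth >= surfel_depth:
            continue  # depth test: splat is hidden behind the opaque surfel
        total_weight += weight
        for k in range(3):
            acc[k] += weight * rgb[k]
    return tuple(a / total_weight for a in acc)


splats = [(0.5, 0.2, (1.0, 0.0, 0.0)), (0.8, 0.4, (0.0, 0.0, 1.0))]
color = shade_pixel(1.0, (0.5, 0.5, 0.5), splats)
```

Because addition is commutative, the result does not depend on the order in which splats arrive (up to floating-point rounding), which is the property that removes the need for per-frame sorting and avoids popping.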

Progressive Radiance Distillation for Inverse Rendering with Gaussian Splatting

Aug 14, 2024

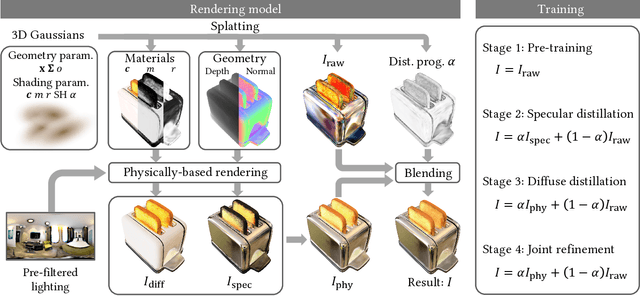

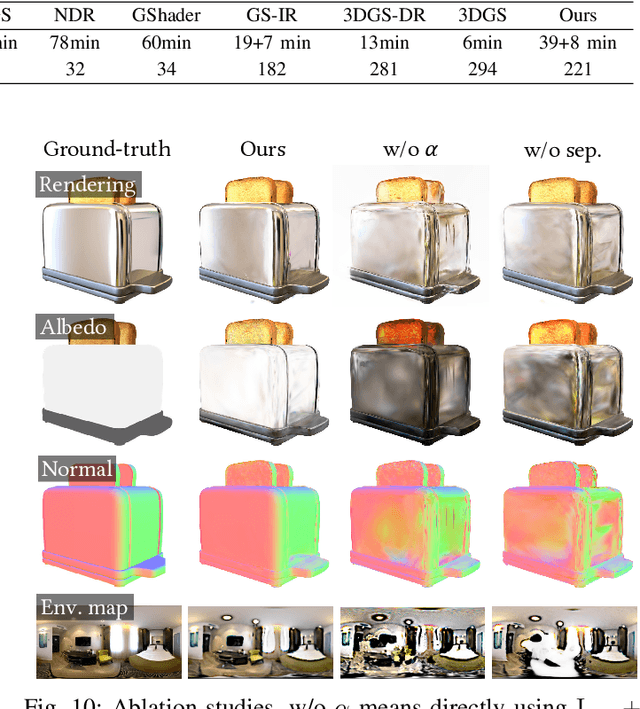

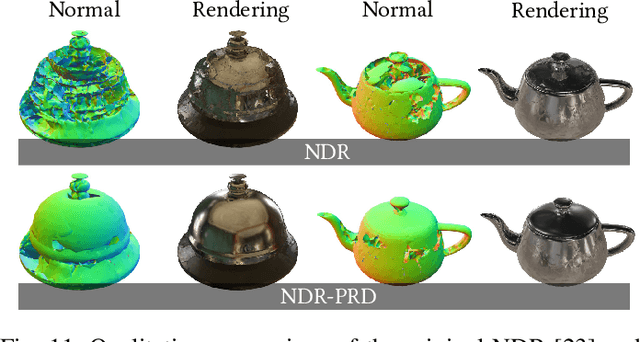

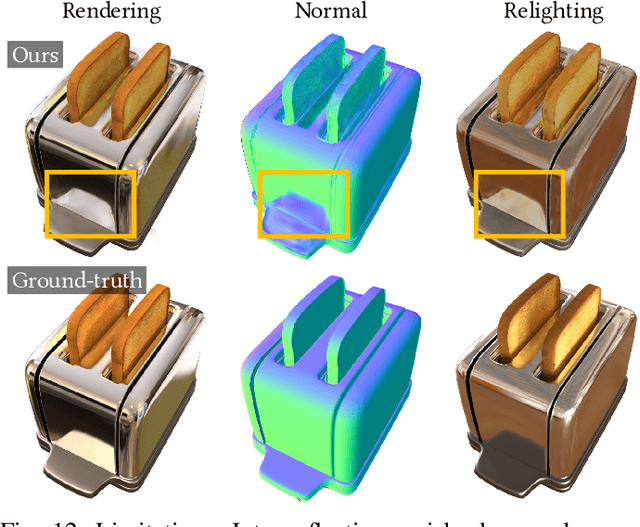

Abstract: We propose progressive radiance distillation, an inverse rendering method that combines physically-based rendering with Gaussian-based radiance field rendering using a distillation progress map. Taking multi-view images as input, our method starts from a pre-trained radiance field guidance, and distills physically-based light and material parameters from the radiance field using an image-fitting process. The distillation progress map is initialized to a small value, which favors radiance field rendering. During early iterations, when fitted light and material parameters are far from convergence, the radiance field fallback ensures the sanity of image loss gradients and avoids local minima that attract under-fit states. As fitted parameters converge, the physical model gradually takes over and the distillation progress increases correspondingly. In the presence of light paths unmodeled by the physical model, the distillation progress never finishes on affected pixels and the learned radiance field stays in the final rendering. With this designed tolerance for physical model limitations, we prevent unmodeled color components from leaking into light and material parameters, alleviating relighting artifacts. Meanwhile, the remaining radiance field compensates for the limitations of the physical model, guaranteeing high-quality novel view synthesis. Experimental results demonstrate that our method significantly outperforms state-of-the-art techniques quality-wise in both novel view synthesis and relighting. The idea of progressive radiance distillation is not limited to Gaussian splatting. We show that it also has positive effects for prominently specular scenes when adapted to a mesh-based inverse rendering method.
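The role of the distillation progress map can be illustrated with a minimal per-pixel sketch. It assumes the map acts as a linear interpolation weight between the radiance field color and the physically-based color; the abstract does not state the exact blending rule, and `blend_pixel` and `blend_image` are hypothetical names.

```python
def blend_pixel(radiance_rgb, physical_rgb, progress):
    """Mix radiance-field and physically-based colors for one pixel.

    progress = 0 keeps the radiance field; progress = 1 uses the physical
    model only. Linear interpolation is an assumption of this sketch.
    """
    p = min(max(progress, 0.0), 1.0)  # keep the weight inside [0, 1]
    return tuple((1.0 - p) * r + p * c for r, c in zip(radiance_rgb, physical_rgb))


def blend_image(radiance, physical, progress_map):
    """Apply blend_pixel over images stored as nested lists [row][col] -> rgb."""
    return [
        [blend_pixel(r, c, p) for r, c, p in zip(r_row, c_row, p_row)]
        for r_row, c_row, p_row in zip(radiance, physical, progress_map)
    ]


# A small initial progress value keeps the result close to the radiance field.
early = blend_pixel((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.05)
```

Under this reading, pixels affected by unmodeled light paths would keep a progress value below 1, so part of the radiance field color remains in the final image there.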

3D Gaussian Splatting with Deferred Reflection

Apr 29, 2024

Abstract: Neural and Gaussian-based radiance field methods have achieved great success in novel view synthesis. However, specular reflection remains non-trivial, as the high-frequency radiance field is notoriously difficult to fit stably and accurately. We present a deferred shading method to effectively render specular reflection with Gaussian splatting. The key challenge comes from the environment map reflection model, which requires accurate surface normals while simultaneously bottlenecking normal estimation with discontinuous gradients. We leverage the per-pixel reflection gradients generated by deferred shading to bridge the optimization process of neighboring Gaussians, allowing nearly correct normal estimations to gradually propagate and eventually spread over all reflective objects. Our method significantly outperforms state-of-the-art techniques and concurrent work in synthesizing high-quality specular reflection effects, demonstrating a consistent improvement of peak signal-to-noise ratio (PSNR) for both synthetic and real-world scenes, while running at a frame rate almost identical to vanilla Gaussian splatting.
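The deferred idea can be sketched for one pixel: normals of the Gaussians covering the pixel are blended first, and the reflection direction used for an environment map lookup is computed once from the blended normal. The reflection formula r = d - 2(d·n)n is standard; the helper names and the `(weight, normal)` input layout are assumptions of this sketch, not the paper's code.

```python
import math


def normalize(v):
    """Return v scaled to unit length; raises on a zero-length vector."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(x / length for x in v)


def reflect(view_dir, normal):
    """Mirror the incoming direction about a unit normal: r = d - 2 (d . n) n."""
    d_dot_n = sum(d * n for d, n in zip(view_dir, normal))
    return tuple(d - 2.0 * d_dot_n * n for d, n in zip(view_dir, normal))


def deferred_reflection_dir(view_dir, contributions):
    """Blend per-Gaussian normals for a pixel, then reflect once.

    contributions: list of (blend_weight, normal) pairs for this pixel.
    """
    blended = [0.0, 0.0, 0.0]
    for weight, normal in contributions:
        for k in range(3):
            blended[k] += weight * normal[k]
    return reflect(view_dir, normalize(blended))


# Looking straight down at an upward-facing surface reflects straight up.
r = deferred_reflection_dir((0.0, 0.0, -1.0), [(0.5, (0.0, 0.0, 1.0)), (0.25, (0.0, 0.0, 1.0))])
```

Since the reflection is evaluated on the blended normal, every Gaussian contributing to the pixel would receive a gradient from the same reflection lookup, which is one way to read the abstract's statement that deferred shading bridges the optimization of neighboring Gaussians.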

Animatable 3D Gaussians for High-fidelity Synthesis of Human Motions

Nov 27, 2023

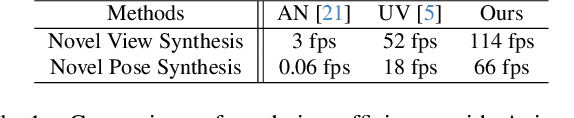

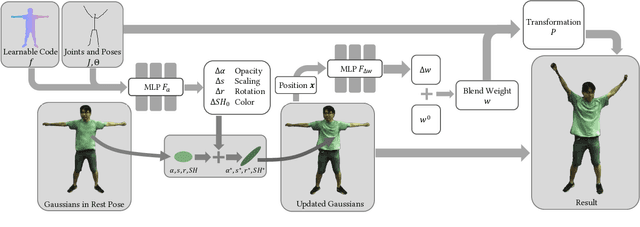

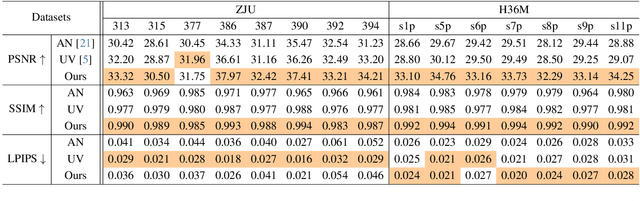

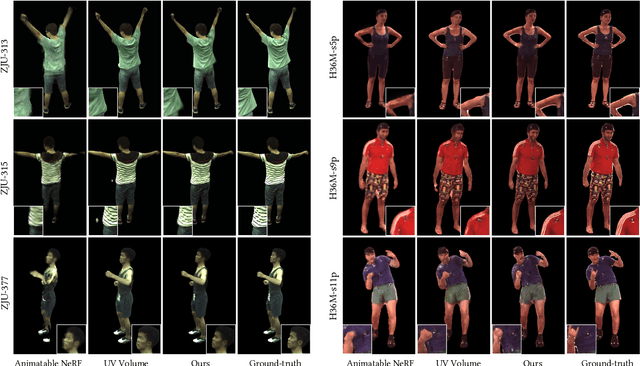

Abstract: We present a novel animatable 3D Gaussian model for rendering high-fidelity free-view human motions in real time. Compared to existing NeRF-based methods, the model is better at synthesizing high-frequency details without the jittering problem across video frames. The core of our model is a novel augmented 3D Gaussian representation, which attaches a learnable code to each Gaussian. The learnable code serves as a pose-dependent appearance embedding for refining the erroneous appearance caused by geometric transformation of Gaussians, based on which an appearance refinement model is learned to produce residual Gaussian properties to match the appearance in the target pose. To force the Gaussians to learn only the foreground human without background interference, we further design a novel alpha loss to explicitly constrain the Gaussians within the human body. We also propose to jointly optimize the human joint parameters to improve the appearance accuracy. The animatable 3D Gaussian model can be learned with shallow MLPs, so new human motions can be synthesized in real time (66 fps on average). Experiments show that our model has superior performance over NeRF-based methods.

A Real-time Method for Inserting Virtual Objects into Neural Radiance Fields

Oct 09, 2023

Abstract: We present the first real-time method for inserting a rigid virtual object into a neural radiance field, which produces realistic lighting and shadowing effects and allows interactive manipulation of the object. By exploiting the rich information about lighting and geometry in a NeRF, our method overcomes several challenges of object insertion in augmented reality. For lighting estimation, we produce accurate, robust and 3D spatially-varying incident lighting that combines the near-field lighting from the NeRF and an environment lighting to account for sources not covered by the NeRF. For occlusion, we blend the rendered virtual object with the background scene using an opacity map integrated from the NeRF. For shadows, with a precomputed field of spherical signed distance fields, we query the visibility term for any point around the virtual object, and cast soft, detailed shadows onto 3D surfaces. Compared with state-of-the-art techniques, our approach can insert virtual objects into scenes with superior fidelity, and has great potential to be further applied to augmented reality systems.
