Kevin H. Knuth

The Scientific Investigation of Unidentified Aerial Phenomena (UAP) Using Multimodal Ground-Based Observatories

May 31, 2023

Abstract:(Abridged) Unidentified Aerial Phenomena (UAP) have resisted explanation and have received little formal scientific attention for 75 years. A primary objective of the Galileo Project is to build an integrated software and instrumentation system designed to conduct a multimodal census of aerial phenomena and to recognize anomalies. Here we present key motivations for the study of UAP and address historical objections to this research. We describe an approach for highlighting outlier events in the high-dimensional parameter space of our census measurements. We provide a detailed roadmap for deciding measurement requirements, as well as a science traceability matrix (STM) for connecting sought-after physical parameters to observables and instrument requirements. We also discuss potential strategies for deciding where to locate instruments for development, testing, and final deployment. Our instrument package is multimodal and multispectral, consisting of (1) wide-field cameras in multiple bands for targeting and tracking of aerial objects and deriving their positions and kinematics using triangulation; (2) narrow-field instruments including cameras for characterizing morphology, spectra, polarimetry, and photometry; (3) passive multistatic arrays of antennas and receivers for radar-derived range and kinematics; (4) radio spectrum analyzers to measure radio and microwave emissions; (5) microphones for sampling acoustic emissions in the infrasonic through ultrasonic frequency bands; and (6) environmental sensors for characterizing ambient conditions (temperature, pressure, humidity, and wind velocity), as well as quasistatic electric and magnetic fields, and energetic particles. The use of multispectral instruments and multiple sensor modalities will help to ensure that artifacts are recognized and that true detections are corroborated and verifiable.

* This paper is published in the Journal of Astronomical Instrumentation, 12(1), 2340006 (2023) https://doi.org/10.1142/S2251171723400068

Designing Intelligent Instruments

Feb 13, 2016

Abstract:Remote science operations require automated systems that can both act and react with minimal human intervention. One such vision is that of an intelligent instrument that collects data in an automated fashion, and based on what it learns, decides which new measurements to take. This innovation implements experimental design and unites it with data analysis in such a way that it completes the cycle of learning. This cycle is the basis of the Scientific Method. The three basic steps of this cycle are hypothesis generation, inquiry, and inference. Hypothesis generation is implemented by artificially supplying the instrument with a parameterized set of possible hypotheses that might be used to describe the physical system. The act of inquiry is handled by an inquiry engine that relies on Bayesian adaptive exploration where the optimal experiment is chosen as the one which maximizes the expected information gain. The inference engine is implemented using the nested sampling algorithm, which provides the inquiry engine with a set of posterior samples from which the expected information gain can be estimated. With these computational structures in place, the instrument will refine its hypotheses, and repeat the learning cycle by taking measurements until the system under study is described within a pre-specified tolerance. We will demonstrate our first attempts toward achieving this goal with an intelligent instrument constructed using the LEGO MINDSTORMS NXT robotics platform.

* 9 pages, 2 figures. Published in the MaxEnt 2007 Proceedings
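The inquiry step described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes a hypothetical straight-line model `y = a*x + b`, Gaussian measurement noise that does not depend on where the measurement is taken, and a Gaussian approximation to the predictive distribution. Under those assumptions, choosing the experiment with the largest expected information gain reduces to choosing the candidate whose predicted outcome is most uncertain across the posterior samples. The names `predictive_entropy` and `choose_experiment` are illustrative only.

```python
import math
import statistics

def predictive_entropy(samples, x, noise_sd):
    """Entropy (nats) of a Gaussian fitted to the predicted measurement at x.

    samples: posterior samples (a, b) for the assumed model y = a*x + b.
    """
    preds = [a * x + b for a, b in samples]
    # Spread of predictions across posterior samples plus measurement noise.
    var = statistics.pvariance(preds) + noise_sd ** 2
    return 0.5 * math.log(2 * math.pi * math.e * var)

def choose_experiment(samples, candidates, noise_sd=0.1):
    """Pick the candidate measurement location with maximal predictive entropy."""
    return max(candidates, key=lambda x: predictive_entropy(samples, x, noise_sd))

# Slope is uncertain, intercept is known: distant points are most informative.
posterior_samples = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
best_x = choose_experiment(posterior_samples, [0.0, 1.0, 5.0])
```

In a full learning cycle, the posterior samples would come from the inference engine (nested sampling in the abstract), and the chosen measurement would be taken and fed back into the next round of inference.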

Bayesian Evidence and Model Selection

Nov 23, 2015

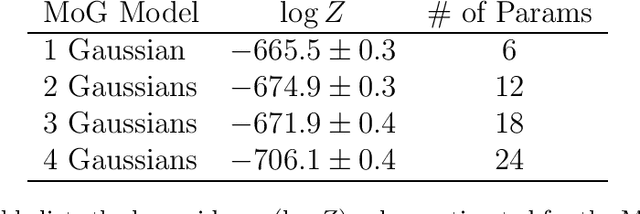

Abstract:In this paper we review the concepts of Bayesian evidence and Bayes factors, also known as log odds ratios, and their application to model selection. The theory is presented along with a discussion of analytic, approximate and numerical techniques. Specific attention is paid to the Laplace approximation, variational Bayes, importance sampling, thermodynamic integration, and nested sampling and its recent variants. Analogies to statistical physics, from which many of these techniques originate, are discussed in order to provide readers with deeper insights that may lead to new techniques. The utility of Bayesian model testing in the domain sciences is demonstrated by presenting four specific practical examples considered within the context of signal processing in the areas of signal detection, sensor characterization, scientific model selection and molecular force characterization.

* Arxiv version consists of 58 pages and 9 figures. Features theory, numerical methods and four applications
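As a small worked illustration of evidence-based model comparison (a toy case, not one of the paper's four examples), consider a sequence of coin flips with `k` heads in `n` tosses. Model M0 fixes the head probability at 0.5; model M1 assigns it a uniform prior. Both evidences are available in closed form, so the Bayes factor, the ratio of the two evidences, can be computed exactly. The function names are hypothetical.

```python
import math

def log_evidence_fair(n, k):
    """log p(data | M0): every sequence of n flips has probability 0.5**n."""
    return n * math.log(0.5)

def log_evidence_uniform(n, k):
    """log p(data | M1): integral of p^k (1-p)^(n-k) over a uniform prior.

    The integral is the Beta function B(k+1, n-k+1) = k! (n-k)! / (n+1)!.
    """
    return math.lgamma(k + 1) + math.lgamma(n - k + 1) - math.lgamma(n + 2)

def log_bayes_factor(n, k):
    """log of p(data | M1) / p(data | M0); positive values favour M1."""
    return log_evidence_uniform(n, k) - log_evidence_fair(n, k)

balanced = log_bayes_factor(10, 5)    # data consistent with a fair coin
lopsided = log_bayes_factor(10, 10)   # ten heads in a row
```

With balanced data the simpler model M0 is favoured, because M1 spreads its prior over head probabilities the data do not support; with ten heads in a row the evidence shifts strongly toward M1.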

Convergent Bayesian formulations of blind source separation and electromagnetic source estimation

Jan 21, 2015

Abstract:We consider two areas of research that have been developing in parallel over the last decade: blind source separation (BSS) and electromagnetic source estimation (ESE). BSS deals with the recovery of source signals when only mixtures of signals can be obtained from an array of detectors and the only prior knowledge consists of some information about the nature of the source signals. On the other hand, ESE utilizes knowledge of the electromagnetic forward problem to assign source signals to their respective generators, while information about the signals themselves is typically ignored. We demonstrate that these two techniques can be derived from the same starting point using the Bayesian formalism. This suggests a means by which new algorithms can be developed that utilize as much relevant information as possible. We also briefly mention some preliminary work that supports the value of integrating information used by these two techniques and review the kinds of information that may be useful in addressing the ESE problem.

* 10 pages, Presented at the 1998 MaxEnt Workshop in Garching, Germany. Knuth K.H., Vaughan H.G., Jr. 1999. Convergent Bayesian formulations of blind source separation and electromagnetic source estimation. In: W. von der Linden, V. Dose, R. Fischer and R. Preuss (eds.), Maximum Entropy and Bayesian Methods, Munich 1998, Dordrecht. Kluwer, pp. 217-226

Difficulties applying recent blind source separation techniques to EEG and MEG

Jan 21, 2015

Abstract:High temporal resolution measurements of human brain activity can be performed by recording the electric potentials on the scalp surface (electroencephalography, EEG), or by recording the magnetic fields near the surface of the head (magnetoencephalography, MEG). The analysis of the data is problematic due to the fact that multiple neural generators may be simultaneously active and the potentials and magnetic fields from these sources are superimposed on the detectors. It is highly desirable to un-mix the data into signals representing the behaviors of the original individual generators. This general problem is called blind source separation and several recent techniques utilizing maximum entropy, minimum mutual information, and maximum likelihood estimation have been applied. These techniques have had much success in separating signals such as natural sounds or speech, but appear to be ineffective when applied to EEG or MEG signals. Many of these techniques implicitly assume that the source distributions have a large kurtosis, whereas an analysis of EEG/MEG signals reveals that the distributions are multimodal. This suggests that more effective separation techniques could be designed for EEG and MEG signals.
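The contrast between heavy-tailed and multimodal source distributions can be made concrete with a short sketch of sample excess kurtosis. The data below are made-up toy values, not EEG or MEG recordings: a peaked distribution with occasional large excursions has positive excess kurtosis, whereas a bimodal distribution has negative excess kurtosis, which is the opposite of what the abstract says many separation techniques implicitly assume.

```python
def excess_kurtosis(values):
    """Sample excess kurtosis: fourth central moment over squared variance, minus 3."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m4 = sum((v - mean) ** 4 for v in values) / n
    return m4 / (m2 ** 2) - 3.0

# Toy "speech-like" signal: mostly near zero with rare large excursions.
peaked = [0.0] * 8 + [-3.0, 3.0]
# Toy bimodal signal: values cluster around two separate levels.
bimodal = [-1.0, 1.0, -1.0, 1.0]

k_peaked = excess_kurtosis(peaked)
k_bimodal = excess_kurtosis(bimodal)
```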

Information-Theoretic Methods for Identifying Relationships among Climate Variables

Dec 19, 2014

Abstract:Information-theoretic quantities, such as entropy, are used to quantify the amount of information a given variable provides. Entropies can be used together to compute the mutual information, which quantifies the amount of information two variables share. However, accurately estimating these quantities from data is extremely challenging. We have developed a set of computational techniques that allow one to accurately compute marginal and joint entropies. These algorithms are probabilistic in nature and thus provide information on the uncertainty in our estimates, which enables us to establish the statistical significance of our findings. We demonstrate these methods by identifying relations between cloud data from the International Satellite Cloud Climatology Project (ISCCP) and data from other sources, such as equatorial Pacific sea surface temperatures (SST).
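The relation between entropies and mutual information mentioned above is I(X;Y) = H(X) + H(Y) - H(X,Y). The sketch below uses a naive plug-in estimator on already-discretized data. It is not the probabilistic estimator the abstract refers to, it gives no uncertainty on the estimate, and it is known to be biased for small samples; it only shows how the three entropies combine. Function names are illustrative.

```python
import math
from collections import Counter

def entropy(labels):
    """Plug-in entropy in bits of a sequence of discrete (hashable) values."""
    n = len(labels)
    counts = Counter(labels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def mutual_information(xs, ys):
    """I(X;Y) = H(X) + H(Y) - H(X,Y) from paired discrete observations."""
    joint = list(zip(xs, ys))
    return entropy(xs) + entropy(ys) - entropy(joint)

# Identical variables share all of their information (1 bit here).
mi_same = mutual_information([0, 0, 1, 1], [0, 0, 1, 1])
# Variables whose joint values are uniformly spread share none.
mi_indep = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
```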

Bayesian Source Separation Applied to Identifying Complex Organic Molecules in Space

Mar 18, 2014

Abstract:Emission from a class of benzene-based molecules known as Polycyclic Aromatic Hydrocarbons (PAHs) dominates the infrared spectrum of star-forming regions. The observed emission appears to arise from the combined emission of numerous PAH species, each with its unique spectrum. Linear superposition of the PAH spectra identifies this problem as a source separation problem. It is, however, of a formidable class of source separation problems given that different PAH sources potentially number in the hundreds, even thousands, and there is only one measured spectral signal for a given astrophysical site. Fortunately, the source spectra of the PAHs are known, but the signal is also contaminated by other spectral sources. We describe our ongoing work in developing Bayesian source separation techniques relying on nested sampling in conjunction with an ON/OFF mechanism enabling simultaneous estimation of the probability that a particular PAH species is present and its contribution to the spectrum.

* 5 pages, 6 Figures, Presented at the IEEE Statistical Signal Processing Workshop, Madison WI, August 2007, 346-350

Informed Source Separation: A Bayesian Tutorial

Nov 13, 2013

Abstract:Source separation problems are ubiquitous in the physical sciences; any situation where signals are superimposed calls for source separation to estimate the original signals. In this tutorial I will discuss the Bayesian approach to the source separation problem. This approach has a specific advantage in that it requires the designer to explicitly describe the signal model in addition to any other information or assumptions that go into the problem description. This leads naturally to the idea of informed source separation, where the algorithm design incorporates relevant information about the specific problem. This approach promises to enable researchers to design their own high-quality algorithms that are specifically tailored to the problem at hand.

Foundations of Inference

Jun 21, 2012

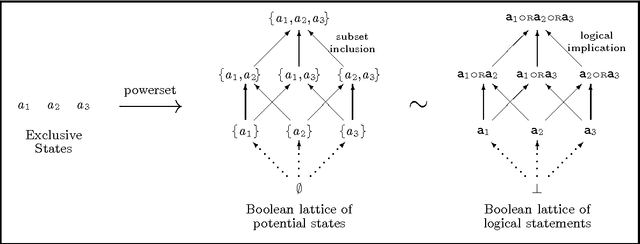

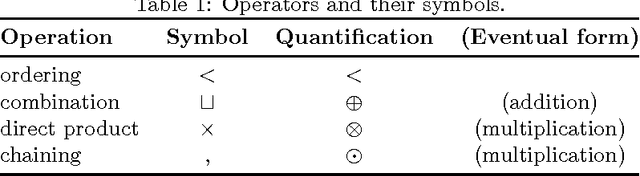

Abstract:We present a simple and clear foundation for finite inference that unites and significantly extends the approaches of Kolmogorov and Cox. Our approach is based on quantifying lattices of logical statements in a way that satisfies general lattice symmetries. With other applications such as measure theory in mind, our derivations assume minimal symmetries, relying on neither negation nor continuity nor differentiability. Each relevant symmetry corresponds to an axiom of quantification, and these axioms are used to derive a unique set of quantifying rules that form the familiar probability calculus. We also derive a unique quantification of divergence, entropy and information.

* This updated version of the paper has been published in the journal Axioms (please see journal reference). 28 pages, 9 figures

Evidence-Based Filters for Signal Detection: Application to Evoked Brain Responses

Jul 06, 2011

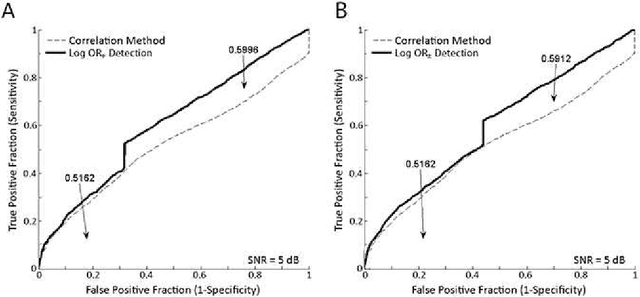

Abstract:Template-based signal detection most often relies on computing a correlation, or a dot product, between an incoming data stream and a signal template. Such a correlation results in an ongoing estimate of the magnitude of the signal in the data stream. However, it does not directly indicate the presence or absence of the signal. The problem is really one of model-testing, and the relevant quantity is the Bayesian evidence (marginal likelihood) of the signal model. Given a signal template and an ongoing data stream, we have developed an evidence-based filter that computes the Bayesian evidence that a signal is present in the data. We demonstrate this algorithm by applying it to brain-machine interface (BMI) data obtained by recording human brain electrical activity, or electroencephalography (EEG). A very popular and effective paradigm in EEG-based BMI is based on the detection of the P300 evoked brain response, which is generated in response to particular sensory stimuli. The goal is to detect the presence of a P300 signal in ongoing EEG activity as accurately and as fast as possible. Our algorithm uses a subject-specific P300 template to compute the Bayesian evidence that a given window of EEG data contains the signal. The efficacy of this algorithm is demonstrated by comparing receiver operating characteristic (ROC) curves of the evidence-based filter to the usual correlation method. Our results show a significant improvement in single-trial P300 detection. The evidence-based filter promises to improve the accuracy and speed of the detection of evoked brain responses in BMI applications as well as the detection of template signals in more general signal processing applications.
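To show how an evidence computation differs from a plain correlation, here is a minimal sketch under assumptions that are not taken from the paper: the data window is modelled as `amplitude * template + noise`, the noise is independent Gaussian with known standard deviation `noise_sd`, and the unknown amplitude has a zero-mean Gaussian prior with standard deviation `amp_sd`. Marginalizing the amplitude gives a closed-form log odds of "signal present" against "noise only". All names and default values are hypothetical.

```python
import math

def log_odds_signal(window, template, noise_sd=0.5, amp_sd=1.0):
    """log p(window | signal) - log p(window | noise only), amplitude marginalized."""
    ss = sum(t * t for t in template)                    # template energy
    sd = sum(t * w for t, w in zip(template, window))    # correlation (dot product)
    s2, t2 = noise_sd ** 2, amp_sd ** 2
    # Penalty for the extra amplitude parameter (Occam factor).
    occam = -0.5 * math.log(1.0 + t2 * ss / s2)
    # Reward for how well the template explains the window.
    fit = 0.5 * t2 * sd ** 2 / (s2 * (s2 + t2 * ss))
    return occam + fit

def evidence_filter(stream, template, **kwargs):
    """Slide the template along the stream and return the log odds per window."""
    n = len(template)
    return [log_odds_signal(stream[i:i + n], template, **kwargs)
            for i in range(len(stream) - n + 1)]

template = [0.0, 1.0, 0.0]
stream = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
log_odds = evidence_filter(stream, template)
```

The dot product still appears inside the expression, but the output is a log odds that is negative when the window is better explained by noise alone and positive when the signal model is favoured, so it can be thresholded at zero rather than at an arbitrary correlation level.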
