Keqiang Fan

Discrepancy-based Diffusion Models for Lesion Detection in Brain MRI

May 08, 2024

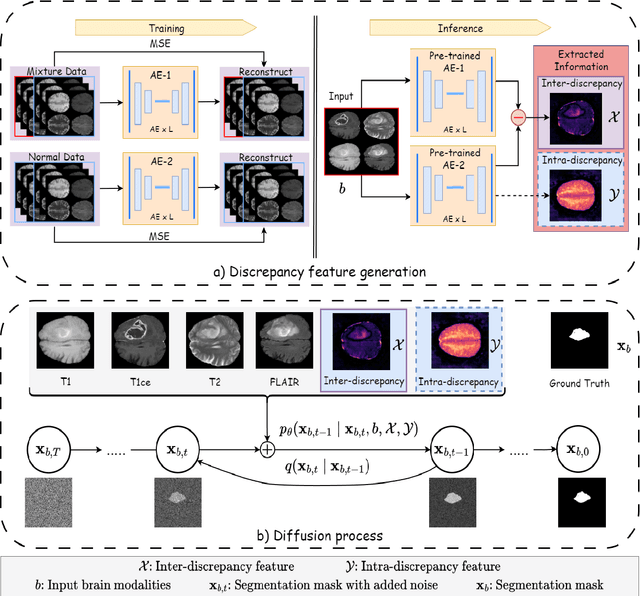

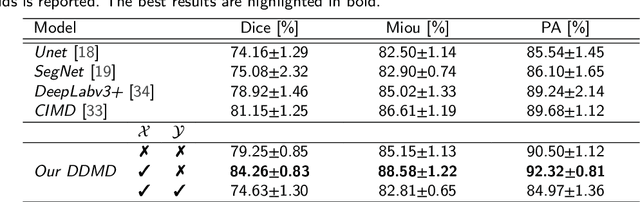

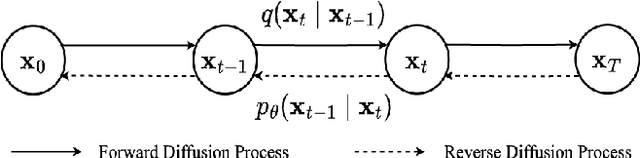

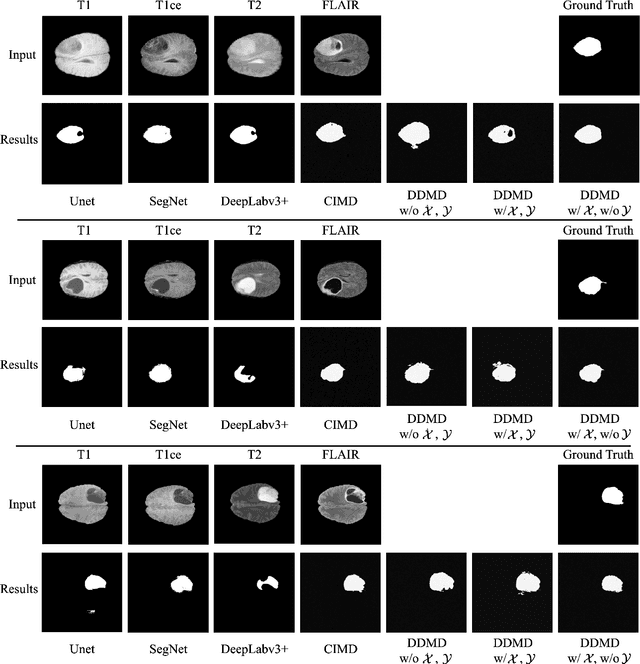

Abstract:Diffusion probabilistic models (DPMs) have exhibited significant effectiveness in computer vision tasks, particularly in image generation. However, their notable performance heavily relies on labelled datasets, which limits their application in medical images due to the associated high-cost annotations. Current DPM-related methods for lesion detection in medical imaging, which can be categorized into two distinct approaches, primarily rely on image-level annotations. The first approach, based on anomaly detection, involves learning reference healthy brain representations and identifying anomalies based on the difference in inference results. In contrast, the second approach, resembling a segmentation task, employs only the original brain multi-modalities as prior information for generating pixel-level annotations. In this paper, our proposed model - discrepancy distribution medical diffusion (DDMD) - for lesion detection in brain MRI introduces a novel framework by incorporating distinctive discrepancy features, deviating from the conventional direct reliance on image-level annotations or the original brain modalities. In our method, the inconsistency in image-level annotations is translated into distribution discrepancies among heterogeneous samples while preserving information within homogeneous samples. This property retains pixel-wise uncertainty and facilitates an implicit ensemble of segmentation, ultimately enhancing the overall detection performance. Thorough experiments conducted on the BRATS2020 benchmark dataset containing multimodal MRI scans for brain tumour detection demonstrate the great performance of our approach in comparison to state-of-the-art methods.

Non-negative Subspace Feature Representation for Few-shot Learning in Medical Imaging

Apr 04, 2024

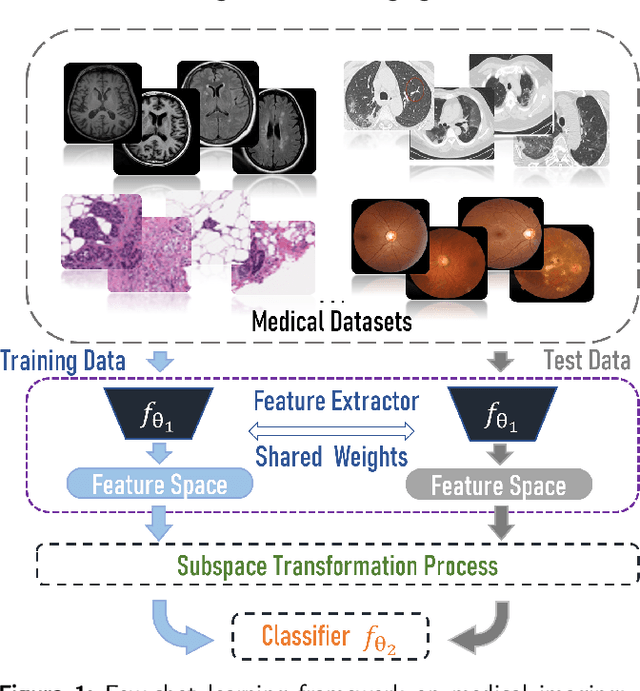

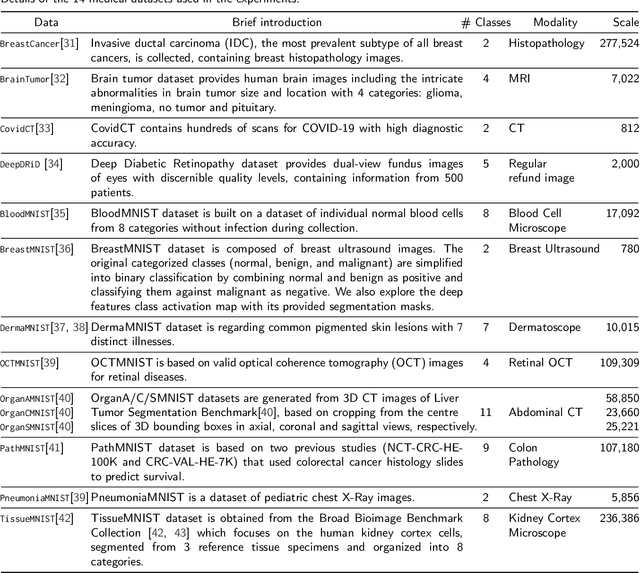

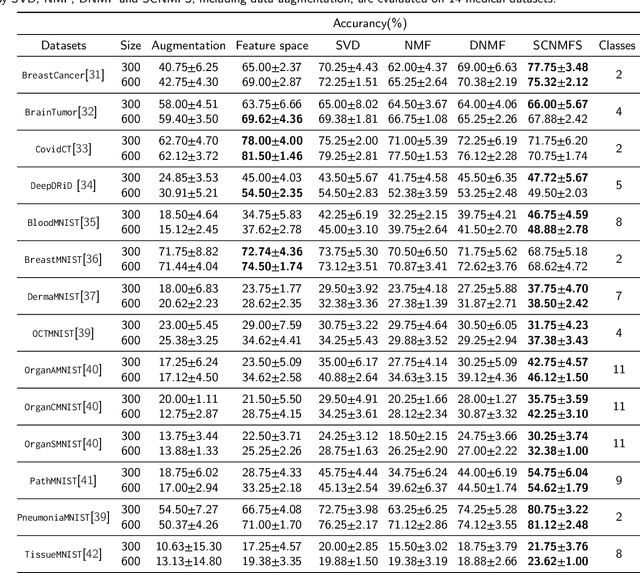

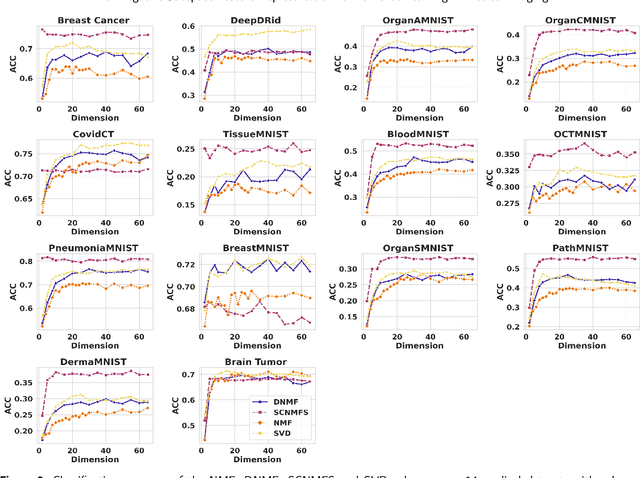

Abstract:Unlike typical visual scene recognition domains, in which massive datasets are accessible to deep neural networks, medical image interpretations are often obstructed by the paucity of data. In this paper, we investigate the effectiveness of data-based few-shot learning in medical imaging by exploring different data attribute representations in a low-dimensional space. We introduce different types of non-negative matrix factorization (NMF) in few-shot learning, addressing the data scarcity issue in medical image classification. Extensive empirical studies are conducted in terms of validating the effectiveness of NMF, especially its supervised variants (e.g., discriminative NMF, and supervised and constrained NMF with sparseness), and the comparison with principal component analysis (PCA), i.e., the collaborative representation-based dimensionality reduction technique derived from eigenvectors. With 14 different datasets covering 11 distinct illness categories, thorough experimental results and comparison with related techniques demonstrate that NMF is a competitive alternative to PCA for few-shot learning in medical imaging, and the supervised NMF algorithms are more discriminative in the subspace with greater effectiveness. Furthermore, we show that the part-based representation of NMF, especially its supervised variants, is dramatically impactful in detecting lesion areas in medical imaging with limited samples.

IIHT: Medical Report Generation with Image-to-Indicator Hierarchical Transformer

Aug 10, 2023

Abstract:Automated medical report generation has become increasingly important in medical analysis. It can produce computer-aided diagnosis descriptions and thus significantly alleviate the doctors' work. Inspired by the huge success of neural machine translation and image captioning, various deep learning methods have been proposed for medical report generation. However, due to the inherent properties of medical data, including data imbalance and the length and correlation between report sequences, the generated reports by existing methods may exhibit linguistic fluency but lack adequate clinical accuracy. In this work, we propose an image-to-indicator hierarchical transformer (IIHT) framework for medical report generation. It consists of three modules, i.e., a classifier module, an indicator expansion module and a generator module. The classifier module first extracts image features from the input medical images and produces disease-related indicators with their corresponding states. The disease-related indicators are subsequently utilised as input for the indicator expansion module, incorporating the "data-text-data" strategy. The transformer-based generator then leverages these extracted features along with image features as auxiliary information to generate final reports. Furthermore, the proposed IIHT method is feasible for radiologists to modify disease indicators in real-world scenarios and integrate the operations into the indicator expansion module for fluent and accurate medical report generation. Extensive experiments and comparisons with state-of-the-art methods under various evaluation metrics demonstrate the great performance of the proposed method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge